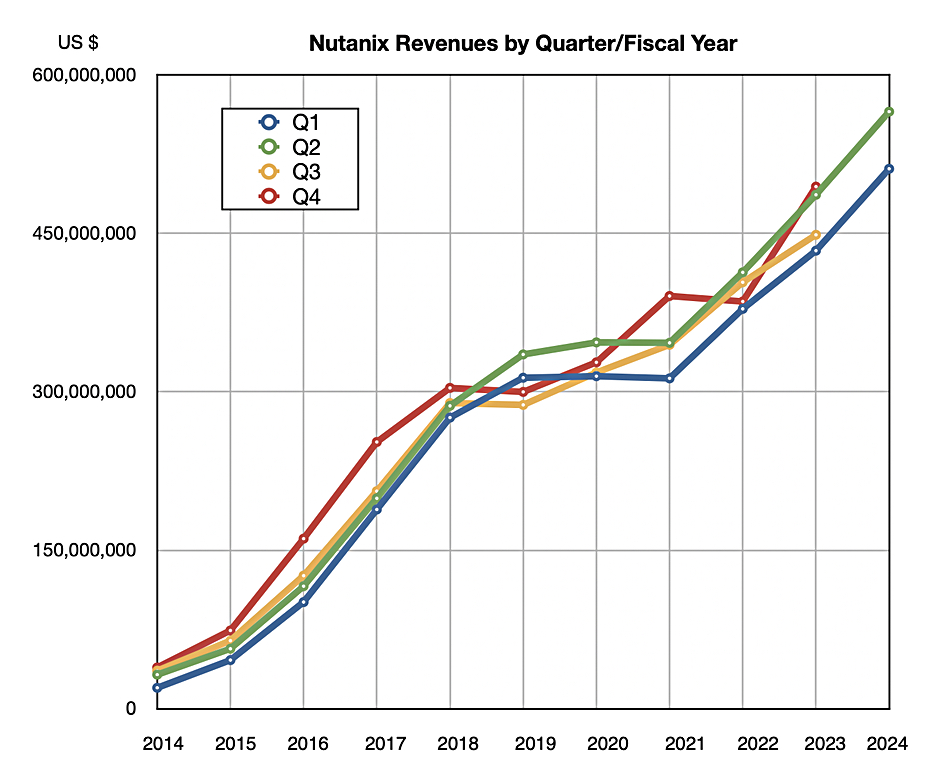

Nutanix has made its first-ever GAAP operating profit as revenues grew 16 percent year-on-year in its ninth successive growth quarter. VMware and Cisco represent clear opportunities, while its GenAI strategy is still emerging.

Fifteen years after being founded and eight years after its IPO, hyperconverged infrastructure vendor Nutanix has reached business maturity. Revenues in its second fiscal 2024 quarter, ended January 31, were $565.2 million, up from $486.5 million a year ago, and it made a profit of $32.8 million, quite a turnaround from the year-ago $70.8 million loss. The company is still growing its customer base, adding 440 new customers in the quarter for a total of 25,370.
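The headline growth figure can be checked from the reported numbers. The short Python sketch below computes year-on-year revenue growth and the swing from loss to profit; the variable and function names are our own, and the figures are the ones quoted above, in $ millions.

```python
# Figures quoted above for Nutanix's fiscal Q2 2024 and Q2 2023, in $ millions
revenue_q2_fy24 = 565.2
revenue_q2_fy23 = 486.5
profit_q2_fy24 = 32.8
loss_q2_fy23 = 70.8  # reported as a loss, so treated as negative below


def yoy_growth_pct(current: float, prior: float) -> float:
    """Year-on-year growth as a percentage of the prior-period value."""
    return (current - prior) / prior * 100


growth = yoy_growth_pct(revenue_q2_fy24, revenue_q2_fy23)
swing = profit_q2_fy24 - (-loss_q2_fy23)  # move from a loss to a profit

print(f"Revenue growth: {growth:.1f}%")        # Revenue growth: 16.2%
print(f"Profit swing: ${swing:.1f} million")   # Profit swing: $103.6 million
```

The 16.2 percent result rounds to the 16 percent growth rate cited for the quarter.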

President and CEO Rajiv Ramaswami was, as usual, measured in his comments, saying: “We’ve delivered a solid second quarter, with results that came in ahead of our guidance. The macro backdrop in our second quarter remained uncertain, but stable relative to the prior quarter.”

He said: “We achieved quarterly GAAP operating profitability for the first time in Q2, demonstrating the progress we continue to make on driving operating leverage in our subscription model.”

Financial summary

- Gross Margin: 85.6 percent vs 82.2 percent a year ago

- Annual Recurring Revenue: $1.74 billion

- Free cash flow: $162.6 million vs year-ago $63 million

William Blair analyst Jason Ader said it was another beat-and-raise quarter, with results ahead of guidance and Nutanix “beating consensus across all key metrics, despite ongoing macro pressure.”

The future

Nutanix sees steady demand ahead. The Broadcom-VMware acquisition is still creating uncertainty for VMware customers, and where else should a worried VMware customer go but to the safe and steady haven represented by Nutanix? This is a multi-year opportunity for Nutanix, especially with VMware customers running three-tier infrastructure (separate servers, storage and networking), who could adopt HCI for the first time with Nutanix rather than inside the VMware environment. It also extends, of course, to vSphere and vSAN customers, who could migrate to Nutanix fairly simply.

The Cisco alliance, replacing Cisco’s HyperFlex HCI with Nutanix’ offering, is a second tailwind, set to become significant revenue-wise in fiscal 2025. GenAI is a third potential tailwind.

Nutanix’ presence in the GenAI market is represented by its GPT-in-a-Box system for running large language models (LLMs), announced six months ago. Nutanix still does not support Nvidia’s GPUDirect protocol, so GPT-in-a-Box looks like a relatively slow deliverer of data to external Nvidia GPU servers.

Ramaswami said in the earnings call: “Moving on, adopting and benefiting from generative AI is top of mind for many of our customers. As such, interest remains high in our GPT-in-a-Box offering, which enables our customers to accelerate the use of generative AI across their enterprises, while keeping their data secure.” He mentioned a federal agency customer for the system, along with other wins, and added: “While it’s still early days, and the numbers remain small, I’m excited about the longer term potential for GPT-in-a-Box.”

We think Nutanix systems could run GenAI inferencing on their x86 servers but are not suited to large-scale GenAI training, as they lack GPUDirect support. Ramaswami said GPT-in-a-Box use cases include “document summarization, search, analytics, co-piloting, customer service, [and] enhanced fraud detection.”

He characterized customers’ AI involvement as hybrid multi-cloud in style: “It’s starting out with a lot of training happening in the public cloud, but it’s moving towards, okay, I’m going to run my AI, I’m going to fine tune the training, on my own data sets, which are proprietary that I want to keep carefully. And I’m going to have to potentially look at slightly different solutions for inferencing, which are going to be running closer to my edge locations.”

Fine-tuning on proprietary datasets could run on-premises if GPU servers were available there. GPT-in-a-Box supports GPU-enabled server nodes: x86 servers with added GPU accelerators, such as HPE’s ProLiant DL320, DL380a and DL395, which come with Intel or AMD CPUs and Nvidia L4/L40/L40S GPUs. Fine-tuning could also run in GPU server farms, such as those operated by CoreWeave and Lambda Labs, but the data would need to be sent there and, again, Nutanix does not support GPUDirect.

Comment

In the Ramaswami era, Nutanix is a judicious company. It has not yet seen a need to add GPUDirect support to pump files out to GPU servers faster. This could mean it is effectively excluded from large-scale GenAI training, and is instead an on-premises GenAI inferencing platform and a small-scale fine-tuning trainer.