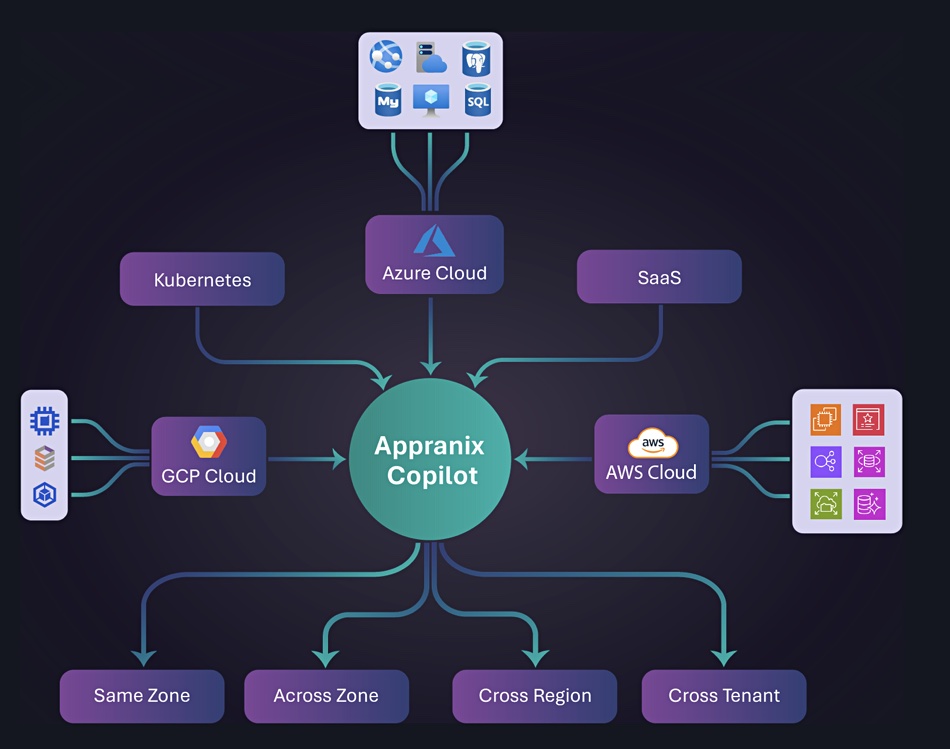

Nasuni customers can now integrate their data stores and workflows with customized Copilot assistants.

Microsoft’s Copilot is a GenAI chatbot integrated with Microsoft 365 apps such as Teams. Using Copilot Studio, users can build their own customized Copilots that work with their Microsoft app data. Nasuni provides distributed, cloud-based File Data Platform services and stores its customers’ unstructured data in an Amazon S3 object store. It has developed a way to make this data available to customized Copilot chatbots, broadening their ability to answer requests by drawing on Nasuni-stored unstructured data.

Jim Liddle, Nasuni’s chief innovation officer, said: “While Microsoft Copilot is an incredible general-purpose AI assistant, its true enterprise value is realized when it is infused with an organization’s domain-specific data. File data is typically locked up in siloed environments, making AI impossible. With Nasuni, customers can consolidate their data in the cloud and then leverage AI.”

To demonstrate this, Nasuni developed a version of Copilot for internal use. Liddle said: “We created our own Copilot chatbot that leverages our Nasuni data, called ‘Ask Nasuni,’ that is deployed within the Microsoft Teams environment for our employees to interact with. It only makes sense that our customers would want to do something similar to leverage their own corporate information.”

Copilot Studio lets customers produce their own specific versions of the chatbot, and Nasuni has published a white paper explaining how to give such customized Copilot chatbots access to Nasuni data.

It suggests that such Copilots “work particularly well for static data sets that change infrequently” and that typical use cases include:

- Domain-specific assistance: Create Copilots specialized in specific domains (e.g., healthcare, legal, finance) to provide accurate and relevant information

- Custom FAQs: Build Copilots that answer frequently asked questions, reducing the load on human support teams

- Content recommendations: Develop Copilots that recommend relevant articles, products, or services based on user queries

- Process automation: Build Copilots that guide users through complex processes or workflows

- Personalized conversations: Customize Copilots to engage in natural conversations with users, enhancing user experience

Nasuni wants customers who also use Microsoft and are adopting Copilot chatbot technology to be able to interact, in natural language and via Copilot, with the data in their Nasuni-stored documents and files.

Comment

This looks like a no-brainer. We could see all unstructured data storage suppliers adopting a similar approach, making the data held in their stores available to their Microsoft-using customers’ Copilot-based chatbots.