The Compute Express Link (CXL) bus has won the post-PCIe war and will enable disaggregated systems technology.

Alex McDonald, EMEA Chair of the SNIA, told a press briefing in London last week that AMD, ARM, and IBM have joined Intel aboard the CXL bus technology bandwagon. That’s all four main CPU vendors.

Only interconnect

We looked at CXL a year ago and saw it then as a fourth future high-speed interconnect technology alongside the Gen-Z Consortium, OpenCAPI and CCIX initiatives.

CXL has the full backing of Intel and we suggested “that the sooner CCIX, Gen-Z and OpenCAPI combine the better for them. An Intel steamroller is coming their way and a single body will be harder to squash”.

They haven’t combined and, in our view, Gen-Z, OpenCAPI and CCIX are dead in the water. They have not attracted the same degree of CPU manufacturer support – which means CXL has won the bus war.

Any post-PCIe bus technology for interconnecting processors to DRAM, FPGAs and other dedicated processors must have the support of the server CPU suppliers. It’s a sine qua non.

CXL bus features

Composable system vendors such as Liqid that use the PCIe bus are nicely placed to move into CXL bus technologies and increase the granularity of their composed systems. Blocks & Files thinks all composable systems vendors will have to support the CXL bus because of this.

In his presentation, McDonald discussed CXL bus features which make it attractive to CPU vendors and how the CXL bus could help shatter or disaggregate the integrated server (compute + DRAM + storage + networking) model which is already under attack from GPUs as well as assorted ASICs and FPGAs.

Disaggregated systems, featuring computational storage, persistent memory and server composability, all need a high-speed bus to work. This requires better-than-PCIe technology because today’s PCIe bus is too slow and does not support distributed memory.

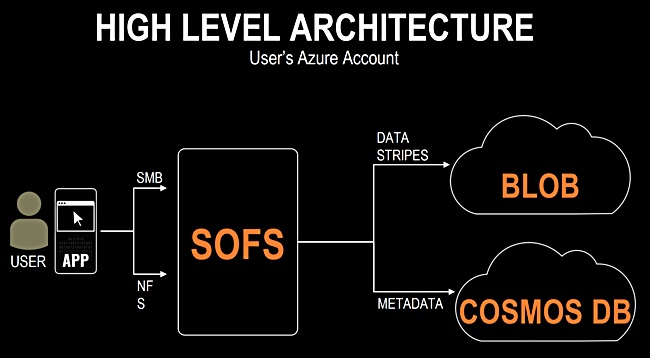

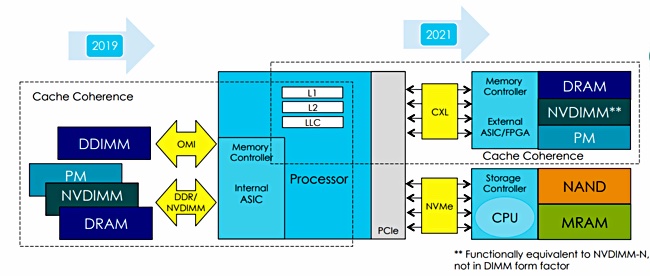

McDonald said current servers have memory directly connected to the CPU with storage connected over buses such as SAS and SATA and, lately, the PCIe bus using NVMe. That’s the left side of the diagram below.

The right side of the diagram shows the CXL bus being used to link processors to memory, including persistent memory and NVMe, hooking up storage with a storage controller / CPU present. This storage controller can be a dedicated storage processor carrying out some form of computational storage, offloading the host server CPU.

The CXL bus can be used in systems with distributed memories and its cache coherency ensures that item updates in one part of the distributed memory system are rippled through to the copies, or caches. The current PCIe bus is not cache coherent. (Cache coherency applies to two or more memory resources, such as main memory and a cache, holding copies of data that are identical or coherent. With multi-core CPUs each core has its own cache. A cache coherent system causes cached copies of data in main memory to be invalidated when the main memory item is updated, forcing the caches to be reloaded from main memory.)
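The invalidate-on-update behaviour described above can be sketched as a toy model. This is only an illustration of the general idea, not how CXL hardware implements coherency; the `MainMemory` and `Cache` classes and the write-through policy are assumptions made for the example.

```python
class MainMemory:
    """Toy backing store that tracks which caches hold a copy of each address."""

    def __init__(self):
        self.data = {}
        self.sharers = {}  # address -> set of caches holding a copy

    def read(self, addr, cache):
        # Remember that this cache now holds a copy of the item.
        self.sharers.setdefault(addr, set()).add(cache)
        return self.data.get(addr, 0)

    def write(self, addr, value, writer=None):
        self.data[addr] = value
        # Invalidate every other cached copy so stale data is never served.
        for cache in self.sharers.get(addr, set()):
            if cache is not writer:
                cache.invalidate(addr)
        self.sharers[addr] = {writer} if writer is not None else set()


class Cache:
    """Per-core cache (hypothetical, write-through for simplicity)."""

    def __init__(self, memory):
        self.memory = memory
        self.lines = {}

    def load(self, addr):
        if addr not in self.lines:  # miss: fetch from main memory
            self.lines[addr] = self.memory.read(addr, self)
        return self.lines[addr]

    def store(self, addr, value):
        self.lines[addr] = value
        self.memory.write(addr, value, writer=self)

    def invalidate(self, addr):
        self.lines.pop(addr, None)


memory = MainMemory()
core_a, core_b = Cache(memory), Cache(memory)
memory.write(0x10, 1)
first_a, first_b = core_a.load(0x10), core_b.load(0x10)  # both cache the value
core_a.store(0x10, 2)                      # invalidates core_b's copy
stale_copy_present = 0x10 in core_b.lines  # core_b no longer holds the line
reloaded = core_b.load(0x10)               # miss, so re-fetched from main memory
```

Without the invalidation step in `write`, `core_b` would keep returning the old value, which is the failure mode a non-coherent bus leaves software to deal with.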

When PCIe Gen 5, with its 32GT/s per-lane transfer rate, arrives the CXL bus protocol can run across it. The PCIe 5.0 spec was published in May last year. PCIe 4.0’s spec was finalised in 2017 and systems featuring the technology are just about to hit the market in 2020. The earliest that PCIe Gen 5 systems, and hence CXL, will arrive is 2021. The general idea is that PCIe 5.0 + CXL will be used for higher performance data centre servers, while PCIe 4.0 is relegated to lower-performance servers and desktop/notebook/workstation systems.
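To put the Gen 4 versus Gen 5 gap in numbers, here is a back-of-envelope calculation. It assumes per-lane signalling rates of 16GT/s (PCIe 4.0) and 32GT/s (PCIe 5.0) with 128b/130b line encoding, and it ignores packet and protocol overhead, so real throughput will be somewhat lower. The function name is made up for this sketch.

```python
def pcie_lane_gbytes_per_s(transfer_rate_gt_s, payload_bits=128, encoded_bits=130):
    """Approximate usable GB/s per lane, per direction, after line encoding."""
    return transfer_rate_gt_s * payload_bits / encoded_bits / 8


# An x16 slot aggregates 16 lanes.
gen4_x16 = 16 * pcie_lane_gbytes_per_s(16)
gen5_x16 = 16 * pcie_lane_gbytes_per_s(32)

print(f"PCIe 4.0 x16: {gen4_x16:.1f} GB/s per direction")
print(f"PCIe 5.0 x16: {gen5_x16:.1f} GB/s per direction")
```

Under these assumptions an x16 link roughly doubles from about 31.5GB/s to about 63GB/s in each direction.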

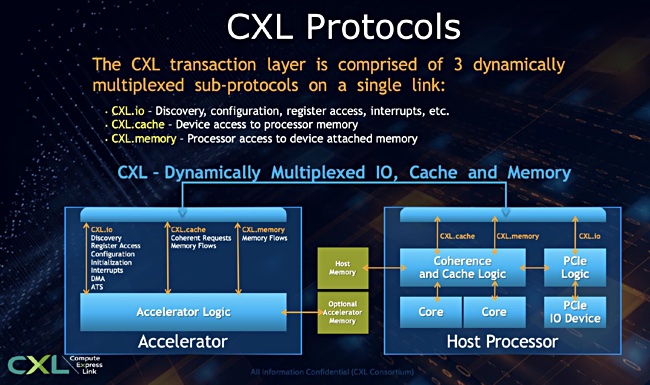

CXL protocol elements

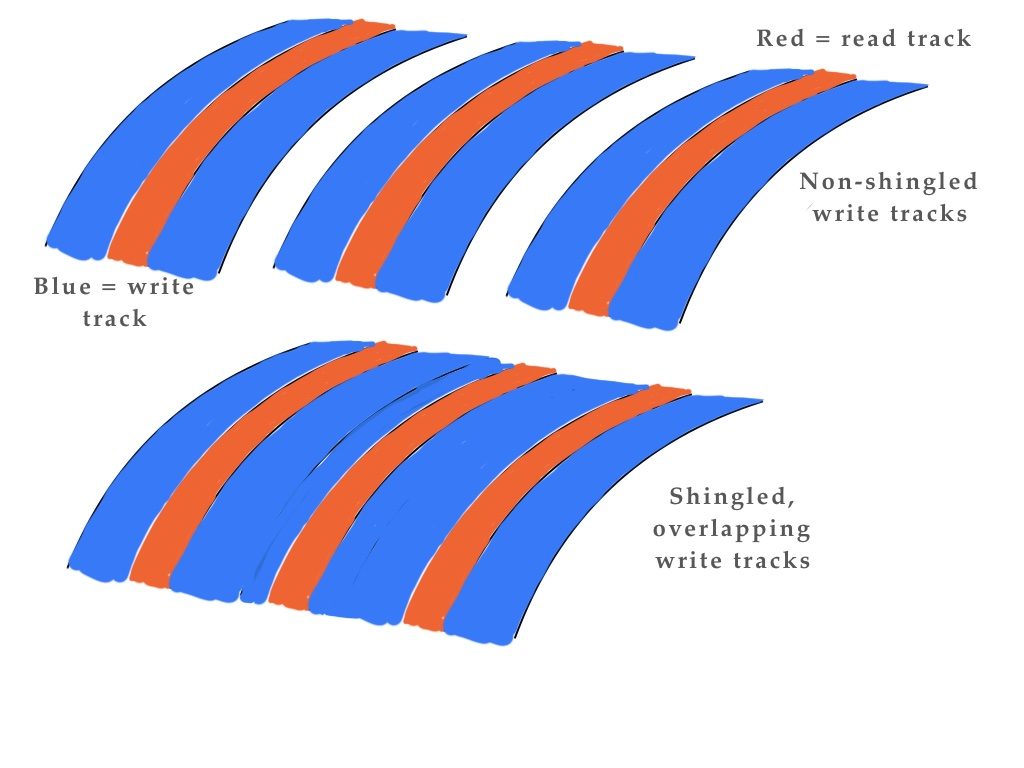

There are three sub-protocols in the CXL scheme and they can be used at the same time on a CXL wire. They are CXL.io, CXL.cache and CXL.memory (named CXL.mem in the specification). A diagram shows how data and processing logic blocks are connected in the CXL world.

CXL.io is basically PCIe Gen 5 and all PCIe services will work. CXL.memory enables a host CPU to access persistent memory, while CXL.cache connects a host CPU to cached memory in external processing devices such as smart NICs, GPUs, FPGAs, ASICs and dedicated storage processors – think Pensando and Pliops. It can also be used to link computational storage devices to a host server – think Eideticom, NETINT Technologies, NGD Systems and ScaleFlux.

Lastly, CXL.memory can interconnect memory buffers when memory is expanded, providing direct memory access (DMA).
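One way to picture how the three sub-protocols combine is as a set of flags per device class. The grouping below follows the three device types in the CXL specification rather than anything said at the briefing, and the dictionary keys, `SubProtocol` enum and `uses` helper are illustrative names only.

```python
from enum import Flag, auto


class SubProtocol(Flag):
    IO = auto()      # CXL.io
    CACHE = auto()   # CXL.cache
    MEMORY = auto()  # CXL.memory


# Assumed mapping, based on the specification's Type 1/2/3 device classes.
DEVICE_CLASSES = {
    "type1_caching_accelerator": SubProtocol.IO | SubProtocol.CACHE,
    "type2_accelerator_with_memory": (
        SubProtocol.IO | SubProtocol.CACHE | SubProtocol.MEMORY
    ),
    "type3_memory_expander": SubProtocol.IO | SubProtocol.MEMORY,
}


def uses(device_class, protocol):
    """Return True if the given device class speaks the given sub-protocol."""
    return bool(DEVICE_CLASSES[device_class] & protocol)


for name, protocols in DEVICE_CLASSES.items():
    spoken = [p.name for p in SubProtocol if p in protocols]
    print(f"{name}: {', '.join(spoken)}")
```

Every class carries CXL.io, which matches the point that CXL.io is essentially PCIe and handles the baseline device services.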