Intel® Optane™ is a memory technology that has the potential to boost performance in key areas of the data centre. Its access speeds come close to those of DRAM, and it also has the non-volatility, or persistence, of a storage medium.

This means Optane™ technology can be used in two main ways: to build high-speed storage products, or to create a new tier in the memory hierarchy between DRAM and storage. The latter option can boost application performance more cost-effectively than simply throwing more expensive DRAM at the problem.

But what is Optane™? The underlying technology is based on an undisclosed material that changes its resistance in order to store bits of information. The key point is that it is unrelated to the way DRAM or flash memory is built. It does not rely on transistors or capacitors holding an electrical charge to store data, which is why it has such different characteristics.

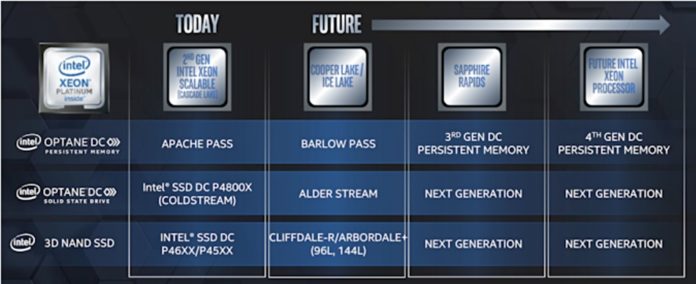

The past few years have seen Intel bring to market a number of Optane™-based products, and these have inevitably caused a little confusion because they all carry the Optane™ branding even though they are aimed at different use cases.

For example, Intel® Optane™ Memory is a consumer product in an M.2 module format that slots into a PC and is used to accelerate disk access for end user applications. It does this through the Intel® Rapid Storage Technology driver which recognises the most frequently used applications and data and automatically stores these in the Optane™ memory for speedier subsequent access.

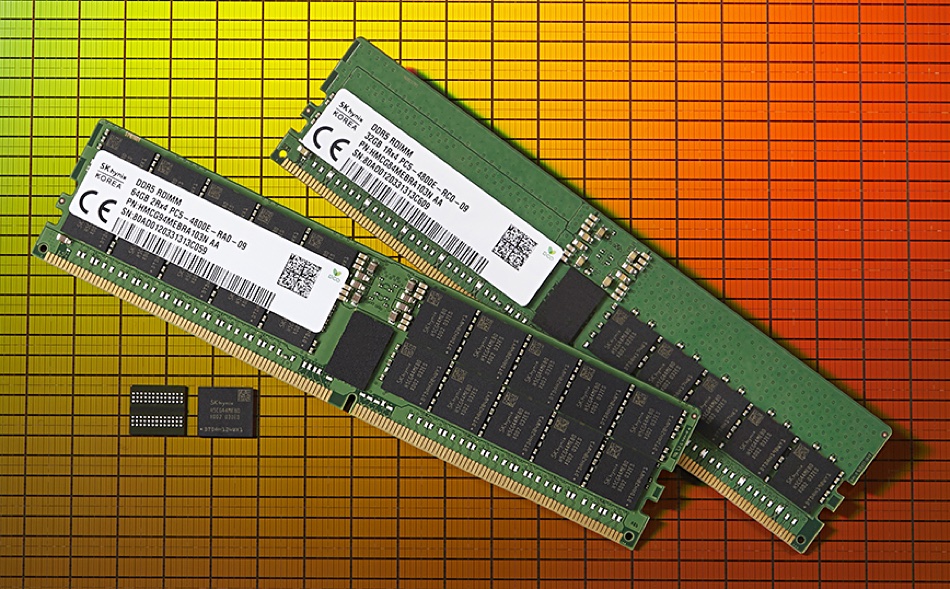

This should not be confused with Optane™ DC Persistent Memory, a data centre product designed for Intel servers, which fits into standard DIMM slots alongside DDR4 DRAM memory modules (more on this below).

Both of these memory-focused products are distinct from the solid state drive (SSD) product lines that Intel builds using Optane™ silicon. These are split into Optane™ SSDs for client systems and server-focused Optane™ SSDs for the data centre. Both essentially use Optane™ memory components as block-based storage, like the flash memory components in standard SSDs, but with the advantage of lower latency.

Expanding your memory options

Optane™ DC Persistent Memory is perhaps the most interesting use of this type of memory technology. Support is built into the memory controller inside second- and third-generation Intel® Xeon® Scalable processors, so it can be used alongside standard DDR4 memory modules.

Optane™ can be used like memory because of the way it is architected: its memory cells are addressable at the individual byte level, rather than having to be read or written in entire blocks, as is the case with NAND flash.
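The practical effect of access granularity can be shown with a small calculation: how many bytes a device has to move to update a given byte range. This is a simplified sketch under stated assumptions. The 4,096-byte block size for flash is an illustrative figure, a granularity of 1 stands in for byte addressability, and in practice the CPU moves data to and from memory in 64-byte cache lines rather than single bytes.

```python
def bytes_touched(offset: int, length: int, granularity: int) -> int:
    """Return how many bytes must be transferred to update `length` bytes
    starting at `offset`, on a device that can only be read or written in
    aligned units of `granularity` bytes (a simplified model)."""
    if length <= 0:
        return 0
    first_unit = offset // granularity                # unit holding the first byte
    last_unit = (offset + length - 1) // granularity  # unit holding the last byte
    return (last_unit - first_unit + 1) * granularity


BLOCK_SIZE = 4096  # assumed flash block size, for illustration only

# Updating a single 8-byte counter:
print(bytes_touched(100, 8, 1))             # byte-addressable: 8
print(bytes_touched(100, 8, BLOCK_SIZE))    # block-addressable: 4096
# An update that straddles a block boundary touches two whole blocks:
print(bytes_touched(4090, 16, BLOCK_SIZE))  # 8192
```

Small, scattered updates such as counters or pointers are where the gap is widest, which is one reason byte addressability matters for using Optane™ as memory.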

Optane™ has higher latency than DDR4 DRAM, meaning it is slower to access, but it is close enough to DRAM speed to be treated as a slower tier of main memory. For example, Optane™ DC Persistent Memory has a latency of up to about 350ns, compared with 10 to 20ns for DDR4, yet this still makes it up to a thousand times faster than the NAND flash used in the vast majority of SSDs.

Another key attribute of Optane™ DC Persistent Memory is that it is currently available in higher capacities than DRAM modules, up to 512GB per DIMM. It also costs less than DRAM, with some sources putting the price of a 512GB Optane™ DIMM at about the same as that of the highest-capacity 256GB DRAM DIMM.

This combination of higher capacity and lower price allows Intel customers to expand the memory available to applications at a lower overall cost. An example configuration could be six 512GB Optane™ DIMMs combined with six 256GB DDR4 DIMMs, providing up to 4.5TB of memory per CPU socket (3TB of Optane™ plus 1.5TB of DRAM), or 18TB for a four-socket server.
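The arithmetic behind that configuration is easy to check. The sketch below uses the DIMM counts and sizes from the example, with binary units (1,024GB per TB). The split between modes is an assumption drawn from how the operating modes are described in this article: in Memory Mode the DRAM acts as a cache, so only the Optane™ capacity appears as system memory, whereas in App Direct Mode both pools are visible to software.

```python
GB_PER_TB = 1024  # binary units: 1,024GB = 1TB


def socket_capacity_gb(optane_dimms: int, optane_gb: int,
                       dram_dimms: int, dram_gb: int) -> dict:
    """Capacity per socket for a mixed Optane/DRAM population (simplified)."""
    optane_total = optane_dimms * optane_gb
    dram_total = dram_dimms * dram_gb
    return {
        "installed": optane_total + dram_total,
        # Memory Mode (assumed): DRAM is a cache, so only Optane is addressable
        "memory_mode_visible": optane_total,
        # App Direct Mode (assumed): two separate pools, both usable by software
        "app_direct_dram": dram_total,
        "app_direct_pmem": optane_total,
    }


per_socket = socket_capacity_gb(6, 512, 6, 256)
sockets = 4
print(per_socket["installed"] / GB_PER_TB)            # 4.5 TB per socket
print(per_socket["installed"] * sockets / GB_PER_TB)  # 18.0 TB per server
print(per_socket["memory_mode_visible"] / GB_PER_TB)  # 3.0 TB per socket in Memory Mode
```

The 4.5TB headline figure is therefore the installed total; how much of it an application sees as plain memory depends on the mode chosen.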

Having a larger memory space improves the performance of workloads that involve large datasets. More of the data is held in memory at any given time, rather than having to be constantly fetched from storage, which is much slower, even in the case of all-flash storage.

However, Optane™ is not quite like standard memory: it is also persistent, so it keeps its contents even without power. For this reason, Intel supports different modes in which the memory controller in Xeon® Scalable processors can operate Optane™ DC Persistent Memory modules, to suit different applications.

In Memory Mode, the Optane™ modules are treated as main memory, with the DDR4 DRAM acting as a cache in front of them. The advantage of this mode is that it is transparent to existing applications, which simply see the larger memory space presented by the Optane™ DIMMs. However, it does not take advantage of the persistence of Optane™.
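How well Memory Mode performs depends on how often the DRAM cache can serve a request. The toy model below replays a stream of memory accesses through a direct-mapped cache sitting in front of a slower tier and estimates the average access latency. Everything about the model is an assumption made for illustration, not a description of how the memory controller is implemented: the direct-mapped organisation, the 64-byte line size, the latencies (15ns for DRAM, the midpoint of the 10 to 20ns range quoted earlier, and 350ns for Optane™), and the rule that a miss costs a DRAM check plus an Optane™ access.

```python
LINE = 64          # assumed cache line size in bytes
DRAM_NS = 15.0     # assumed DRAM latency (midpoint of 10 to 20ns)
OPTANE_NS = 350.0  # Optane latency figure quoted in the text


def simulate_memory_mode(addresses, cache_lines):
    """Replay byte addresses through a direct-mapped DRAM cache of
    `cache_lines` lines in front of Optane; return (hit_rate, avg_ns)."""
    tags = [None] * cache_lines  # which memory line each cache slot holds
    hits = 0
    total_ns = 0.0
    for addr in addresses:
        line_no = addr // LINE
        slot = line_no % cache_lines  # direct-mapped: exactly one possible slot
        tag = line_no // cache_lines
        if tags[slot] == tag:
            hits += 1
            total_ns += DRAM_NS              # served from DRAM
        else:
            tags[slot] = tag                 # fill the slot from Optane
            total_ns += DRAM_NS + OPTANE_NS  # check DRAM, then go to Optane
    n = len(addresses)
    return hits / n, total_ns / n


# A working set that fits in the cache, re-read ten times...
small = [i * LINE for i in range(1000)] * 10
# ...versus repeated scans over a working set eight times larger than the cache.
large = [i * LINE for i in range(8000)] * 10

for name, trace in (("fits in DRAM", small), ("exceeds DRAM", large)):
    hit_rate, avg = simulate_memory_mode(trace, cache_lines=1000)
    print(f"{name}: hit rate {hit_rate:.0%}, average {avg:.1f}ns")
# fits in DRAM: hit rate 90%, average 50.0ns
# exceeds DRAM: hit rate 0%, average 365.0ns
```

The exact numbers matter less than the shape of the result: when the frequently used data fits in DRAM, most accesses run at close to DRAM speed, and when it does not, performance tends towards Optane™ latency.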

When configured in App Direct Mode, the Optane™ modules and DRAM are treated as separate memory pools, and the operating system and applications must be aware of this. In return, software has the flexibility to use the Optane™ memory pool in several ways: for instance, as a storage area for data too large for DRAM, or for data that needs to be persistent, such as metadata or recovery information that must not be lost in the event of a power failure.
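As a rough illustration of what being "aware" of persistent memory can look like, the sketch below memory-maps a file, updates a small recovery record in place with ordinary byte-level writes, and then flushes it. It assumes a Linux system where the file would live on a DAX-capable file system backed by an Optane™ region, so that loads and stores reach persistent memory directly; the path in the comment and the record layout are hypothetical, and a temporary file is used so the sketch runs anywhere. Python's `mmap.flush()` issues an `msync()` call, a portable way to request durability. Production code would more typically use a library such as PMDK's libpmem, whose `pmem_persist()` flushes CPU caches without a system call.

```python
import mmap
import os
import struct
import tempfile

# On a real system this would be a file on a DAX-mounted persistent memory
# file system, e.g. /mnt/pmem/recovery.dat (hypothetical path). A temporary
# file is used here so the sketch runs anywhere.
path = os.path.join(tempfile.mkdtemp(), "recovery.dat")
SIZE = 4096
# Hypothetical record layout: (sequence number, checkpoint offset)
RECORD = struct.Struct("<QQ")

# Create the file at a fixed size so that it can be mapped.
with open(path, "wb") as f:
    f.truncate(SIZE)


def write_checkpoint(seq: int, offset: int) -> None:
    with open(path, "r+b") as f, mmap.mmap(f.fileno(), SIZE) as m:
        RECORD.pack_into(m, 0, seq, offset)  # in-place store into the mapping
        m.flush()                            # msync(): ask for the update to be durable


def read_checkpoint() -> tuple:
    with open(path, "rb") as f, mmap.mmap(f.fileno(), SIZE, access=mmap.ACCESS_READ) as m:
        return RECORD.unpack_from(m, 0)


write_checkpoint(42, 1_048_576)
print(read_checkpoint())  # (42, 1048576)
```

Only the 16 bytes of the record are rewritten, with no separate read-modify-write of a block through a storage driver, which is the kind of small, persistent update App Direct Mode is suited to.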

Alternatively, the Optane™ memory pool in App Direct Mode can be accessed using a standard file system and treated as if it were a small, very fast SSD.

It is tempting to compare Optane™ DC Persistent Memory with non-volatile DIMMs (NVDIMMs), which also retain data without power, but in reality these are very different technologies. A typical NVDIMM combines standard DRAM with flash memory, and is used exactly like standard memory, except that in the event of a power failure, the NVDIMM copies the data from DRAM to flash to preserve it. Once power is restored, the data is copied back again.
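The save-and-restore behaviour of this kind of NVDIMM can be modelled as a simple state machine. The class below is a toy with hypothetical method names; it is not a driver interface, and it ignores details such as the backup energy source that powers the copy to flash when the system loses power.

```python
class ToyNvdimm:
    """Toy model of a DRAM+flash NVDIMM: DRAM serves all reads and writes,
    and flash is only used to save and restore contents around a power loss."""

    def __init__(self, size: int):
        self.dram = bytearray(size)   # volatile working copy
        self.flash = bytearray(size)  # non-volatile backup
        self.powered = True

    def write(self, offset: int, data: bytes) -> None:
        if not self.powered:
            raise RuntimeError("module is powered off")
        self.dram[offset:offset + len(data)] = data

    def read(self, offset: int, length: int) -> bytes:
        if not self.powered:
            raise RuntimeError("module is powered off")
        return bytes(self.dram[offset:offset + length])

    def power_fail(self) -> None:
        self.flash[:] = self.dram              # save DRAM contents to flash
        self.dram = bytearray(len(self.dram))  # DRAM loses its contents
        self.powered = False

    def power_restore(self) -> None:
        self.dram[:] = self.flash              # copy the saved data back to DRAM
        self.powered = True


dimm = ToyNvdimm(16)
dimm.write(0, b"journal")
dimm.power_fail()
dimm.power_restore()
print(dimm.read(0, 7))  # b'journal'
```

In this model the flash is never read or written during normal operation; it only matters at the moment power is lost and restored. With Optane™, by contrast, the medium being read and written is itself the persistent one, so no copy step is needed.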

Another type of NVDIMM simply puts flash memory onto a DIMM, but because this is not addressable at the individual byte level like DRAM, it is effectively storage and must be accessed via a special driver that exposes it through a file system API.

Building a better, faster SSD

Another attribute of Optane™ technology worth noting is its higher endurance compared with other technologies, such as the NAND flash found in SSDs. Endurance is a measure of how many times a storage medium can be written to while still reliably retaining information. It is a key consideration for storage in data centres, especially for workloads such as databases, analytics or virtual machines, which perform large numbers of random reads and writes.

Endurance is commonly expressed either as drive writes per day (DWPD), meaning how many times the drive's full capacity can be written each day over the warranty period, or as the total amount of data that can be written over the drive's lifetime. Intel claims that a typical half-terabyte flash SSD with a warranty covering three DWPD over five years provides around three petabytes of total writes, whereas a half-terabyte Optane™ SSD has a warranty of 60 DWPD over the same period, equating to 55 petabytes of total writes.
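Converting between the two endurance measures is simple arithmetic: lifetime writes equal capacity multiplied by DWPD and by the number of days in the warranty period. The sketch below reproduces the figures quoted above, assuming 365-day years and decimal units (1,000TB per PB).

```python
def lifetime_writes_tb(capacity_tb: float, dwpd: float, years: float) -> float:
    """Total terabytes written allowed by a DWPD rating over a warranty period."""
    return capacity_tb * dwpd * 365 * years


nand_tbw = lifetime_writes_tb(0.5, 3, 5)     # 2737.5 TB
optane_tbw = lifetime_writes_tb(0.5, 60, 5)  # 54750.0 TB
print(nand_tbw / 1000, optane_tbw / 1000)    # 2.7375 54.75 (petabytes)
print(optane_tbw / nand_tbw)                 # 20.0
```

The NAND figure works out at about 2.7PB, which the quoted claim rounds to three, and the Optane™ figure at about 55PB: twenty times more, since only the DWPD rating differs between the two drives.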

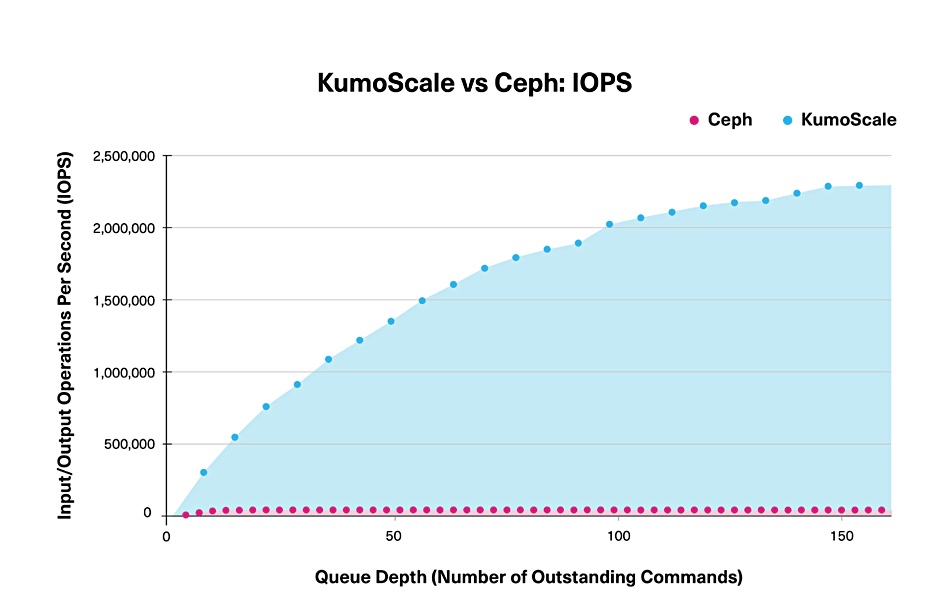

When Optane™ technology is used in SSDs, it also has a speed advantage over rival drives based on NAND flash. Products such as the Intel® Optane™ SSD DC P4800X series have read and write latencies of 10 microseconds, compared with a write latency of about 220 microseconds for a typical NAND flash SSD. So although an Optane™ SSD may carry a price premium, it is a much better choice where performance is critical, such as the cache tier in storage arrays or hyperconverged infrastructure (HCI).
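One way to see what this latency gap means is to work out the ceiling on operations per second when each request must finish before the next one starts (a queue depth of 1). The sketch below applies that idealised bound to the write latency figures above. It deliberately ignores queueing, parallelism inside the drive and host overheads, so real drives under load will behave differently.

```python
def max_iops_at_qd1(latency_us: float) -> float:
    """Idealised IOPS ceiling when only one I/O is outstanding at a time."""
    return 1_000_000 / latency_us  # microseconds per second / latency per I/O


optane_iops = max_iops_at_qd1(10)  # 100000.0
nand_iops = max_iops_at_qd1(220)   # about 4545
print(f"Optane: {optane_iops:,.0f} IOPS, NAND: {nand_iops:,.0f} IOPS, "
      f"ratio {optane_iops / nand_iops:.0f}x")
# Optane: 100,000 IOPS, NAND: 4,545 IOPS, ratio 22x
```

Low-queue-depth, latency-bound access is typical of a cache tier, which is why the latency advantage translates so directly into performance there.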

Intel also has a second generation of Optane™ SSDs in the pipeline that is set to offer even greater performance. One way in which this will be delivered is via a new SSD controller supporting the PCIe 4.0 interface, which the company claims will more than double the throughput when compared with existing Optane™ SSDs.

To summarise, Intel® Optane™ is an advanced memory technology that can be used like DRAM but has the non-volatility of storage. It is also much faster than flash while being cheaper than DRAM. This combination of characteristics means it can boost performance in the data centre, either by fitting into DIMM slots alongside DRAM to expand memory cost-effectively, or in the form of super-fast SSDs that speed up the storage layer.

With enterprise workloads becoming more demanding and data-intensive as advanced analytics and AI techniques are built into applications, organisations should be evaluating emerging technologies such as Intel® Optane™ to assess how they can help build an IT infrastructure capable of meeting future challenges.

This article is sponsored by Intel.