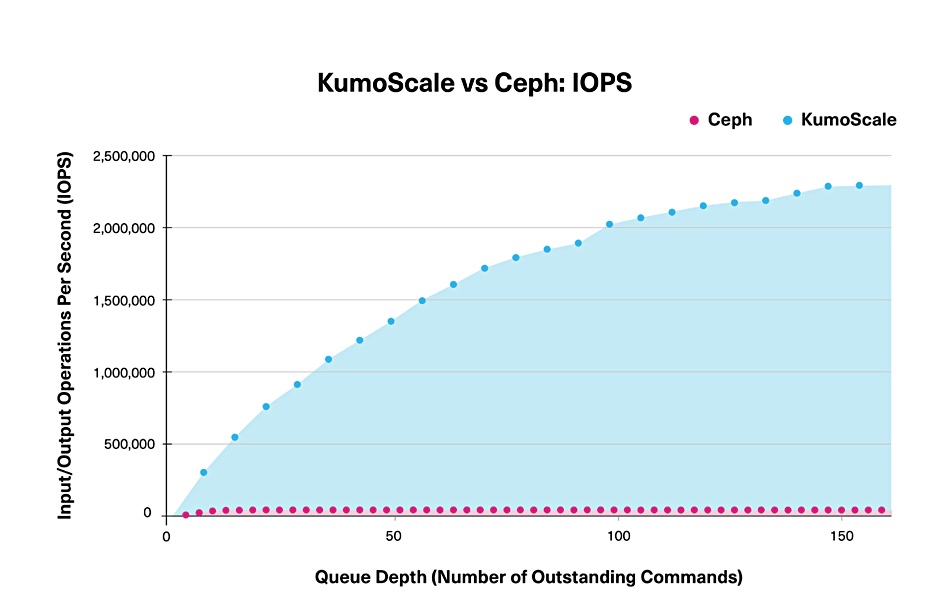

Kioxia today published test results showing its KumoScale storage software runs block access on an all-flash array up to 60 times faster than Ceph, thanks to NVMe/TCP transport.

Update: Block mode test details from Joel Warford added. 5.31 pm BST October 8.

Ceph is built on an object store, with file and block access protocols layered on top, and does not support NVMe-oF access. The Red Hat-backed open source software is enormously scalable and provides a single distributed pool of storage, typically keeping three copies of data for reliability.

Joel Dedrick, GM for networked storage software at Kioxia America, said in a statement: “Storage dollars per IOPS and read latency are the new critical metrics for the cloud data centre. Our recent test report highlights the raw performance of KumoScale software, and showcases the economic benefits that this new level of performance can deliver to data centre users.”

Kioxia says that, measured in IOPS, KumoScale software can serve about 52x more client load per storage node than Ceph software, at much lower latency and with fewer storage nodes needed. That gives KumoScale a cost advantage in dollars per IOPS.

We think comparing Ceph with KumoScale is like seeing how much faster a sprint athlete runs than a person on crutches. NVMe-oF support is the crucial difference. Ceph will get NVMe-oF support one day – for example, an SNIA document discusses how to accomplish this. And at that point a KumoScale vs Ceph performance comparison would be more even.

But until that point, using Ceph block access with performance-critical applications looks to be less than optimal.

KumoScale benchmarks

The Kioxia tests covered random read and write IOPS and latencies, and the findings were:

- KumoScale software read performance is 12x faster than Ceph software while reducing latency by 60 per cent.

- KumoScale software write performance is 60x faster than Ceph software while reducing latency by 98 per cent.

- KumoScale software supports 15x more clients per storage node than Ceph at a much lower latency in the testing environment.
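A percentage cut in latency is easier to set beside the throughput multiples when it is turned into a ratio: a 60 per cent reduction leaves 40 per cent of the original latency, and a 98 per cent reduction leaves 2 per cent. This short Python sketch does that conversion using only the percentages Kioxia quotes; the absolute latencies are not given here, so it says nothing about them.

```python
def latency_ratio(reduction_pct: float) -> float:
    """Return how many times lower the new latency is, given a % reduction."""
    if not 0 <= reduction_pct < 100:
        raise ValueError("reduction must be in [0, 100)")
    remaining = (100 - reduction_pct) / 100  # fraction of the original latency left
    return 1 / remaining


# Percentages from Kioxia's summary of the random read and write tests.
for label, pct in [("read", 60), ("write", 98)]:
    print(f"{label}: {pct}% lower latency = {latency_ratio(pct):.1f}x lower")
```

On that basis the 98 per cent write-latency figure works out to roughly a 50x reduction, and the 60 per cent read figure to 2.5x.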

At maximum load KumoScale delivered 2,293,860 IOPS compared with Ceph’s 43,852 IOPS, about 52x better performance.
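The 52x figure follows directly from the two peak numbers. Because both products ran on identical hardware, the inverse of the same ratio gives the hardware-only cost per IOPS of KumoScale relative to Ceph. This minimal sketch assumes hardware is the only cost; software licensing and support are deliberately left out.

```python
# Peak IOPS figures as reported by Kioxia for the maximum-load test.
KUMOSCALE_IOPS = 2_293_860
CEPH_IOPS = 43_852

speedup = KUMOSCALE_IOPS / CEPH_IOPS
print(f"KumoScale/Ceph IOPS ratio: {speedup:.1f}x")

# Same hardware for both runs, so (counting hardware cost only, an assumption)
# the hardware cost per IOPS scales by the inverse of the IOPS ratio.
relative_cost_per_iops = CEPH_IOPS / KUMOSCALE_IOPS
print(f"KumoScale hardware $/IOPS relative to Ceph: {relative_cost_per_iops:.3f}")
```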

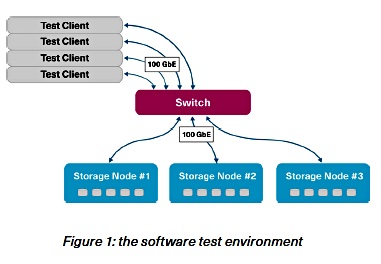

According to the Kioxia report, the tests used an identical benchmark process, server clusters and SSDs, in a networked environment of three storage nodes, each containing five 3.84TB Kioxia CM5 SSDs, or 19.2TB (roughly 20TB) of storage per node.
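Five 3.84TB drives give 19.2TB of raw flash per node, which rounds to the 20TB figure. The arithmetic, with the three-node cluster total added, is simple enough to check in a few lines of Python:

```python
SSDS_PER_NODE = 5
SSD_CAPACITY_TB = 3.84  # Kioxia CM5 capacity, decimal terabytes
STORAGE_NODES = 3

per_node_tb = SSDS_PER_NODE * SSD_CAPACITY_TB
cluster_raw_tb = per_node_tb * STORAGE_NODES
print(f"per node: {per_node_tb:.1f} TB, cluster raw: {cluster_raw_tb:.1f} TB")
```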

The test stimulus and associated measurements were provided by four test clients. All storage nodes were connected at 100GbitE through a single 100GbitE network switch. The same hardware configuration was used for all tests, and four logical 200GiB volumes were created, one assigned to each test client.

The software was KumoScale v3.13, using NVMe-oF v1.0a with a TCP/IP transport, and Ceph v14.2.11. For KumoScale software, volumes were triply replicated (i.e. a single logical volume with one replica mapped to each of three storage nodes). For Ceph software, volumes were sharded using the ‘CRUSH’ hashing scheme, with a replication factor set to three.
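Replication multiplies the flash each volume consumes. The sketch below works out how much raw capacity the four 200GiB test volumes take up at a replication factor of three, and what share of a roughly 19.2TB node that is. It assumes each node ends up holding one replica of every volume: the report states this for KumoScale, and it holds for Ceph if its CRUSH rule places each replica on a different host.

```python
GIB = 2**30  # bytes per gibibyte (volume sizes are in GiB)
TB = 10**12  # bytes per decimal terabyte (SSD capacities are in TB)

VOLUMES = 4
VOLUME_SIZE_GIB = 200
REPLICAS = 3  # replication factor used for both KumoScale and Ceph
STORAGE_NODES = 3
NODE_CAPACITY_TB = 19.2  # five 3.84TB SSDs per node

logical_bytes = VOLUMES * VOLUME_SIZE_GIB * GIB
raw_bytes = logical_bytes * REPLICAS  # every byte is stored three times

# Assumption: three replicas on three nodes means one full copy per node.
per_node_bytes = raw_bytes / STORAGE_NODES

print(f"logical: {logical_bytes / TB:.2f} TB")
print(f"raw (3x): {raw_bytes / TB:.2f} TB")
print(f"per node: {per_node_bytes / TB:.2f} TB")
print(f"share of node flash: {per_node_bytes / (NODE_CAPACITY_TB * TB):.1%}")
```

The test volumes therefore used only a small fraction of the available flash on each node.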

Kioxia’s Director of Business Development Joel Warford told us: “The performance benchmarks were run in block mode on both products for a direct comparison. … Our native mapper function uses NVMe-oF to provision block volumes over the network which provides the lowest latency and best performance results. KumoScale can also support shared files and objects using hosted open source software on the KumoScale storage node for non-block applications, but those are not high performance use cases.”

He added: “We used FIO as the benchmark to generate the workloads.”
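Kioxia has not published its FIO job parameters here, so the following is only an illustration of how a random read or write run against one client volume might be expressed. The block size, queue depth, runtime and device path (`/dev/nvme1n1`, as an NVMe/TCP volume might appear; a kernel-mapped Ceph RBD volume would typically show up as `/dev/rbd0`) are all hypothetical. The fio options themselves are standard ones.

```python
import shlex


def fio_random_cmd(device: str, rw: str, bs: str = "4k", iodepth: int = 32,
                   runtime_s: int = 60) -> list[str]:
    """Build an fio command line for a random read or write test on a block device.

    bs, iodepth and runtime_s are illustrative defaults, not Kioxia's settings.
    """
    if rw not in ("randread", "randwrite"):
        raise ValueError("rw must be 'randread' or 'randwrite'")
    return [
        "fio",
        f"--name={rw}-test",
        f"--filename={device}",  # the volume as seen by the test client
        f"--rw={rw}",
        f"--bs={bs}",
        "--ioengine=libaio",     # Linux asynchronous I/O
        "--direct=1",            # bypass the client page cache
        f"--iodepth={iodepth}",
        "--time_based",          # run for a fixed time, not a fixed size
        f"--runtime={runtime_s}",
        "--group_reporting",
        "--output-format=json",  # machine-readable IOPS and latency results
    ]


cmd = fio_random_cmd("/dev/nvme1n1", "randread")  # hypothetical device path
print(shlex.join(cmd))
```

Running the same command line against each product's volume, with only the device path changed, is what keeps a comparison like this like-for-like.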

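Ceph’s block IO passes through Ceph’s own software layers, the RBD client on the host and the OSD daemons on each storage node, whereas KumoScale exports its volumes over NVMe-oF, which in these tests used the TCP transport. NVMe/TCP carries NVMe commands over standard TCP/IP networking rather than RDMA (Remote Direct Memory Access), which is a separate NVMe-oF transport, but the NVMe command set travels from host to storage node without being translated into another storage protocol.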
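For a sense of what attaching an NVMe/TCP volume looks like on a Linux client, here is a sketch that builds a generic `nvme connect` command from the standard nvme-cli tool. It is not how KumoScale provisions volumes (Warford describes its own native mapper), and the target address and NQN below are hypothetical placeholders.

```python
import shlex

NVME_TCP_DEFAULT_PORT = 4420  # IANA-assigned port for NVMe-oF I/O controllers


def nvme_tcp_connect_cmd(target_ip: str, subsystem_nqn: str,
                         port: int = NVME_TCP_DEFAULT_PORT) -> list[str]:
    """Build an nvme-cli command that attaches a remote NVMe/TCP subsystem."""
    return [
        "nvme", "connect",
        "--transport=tcp",         # TCP transport, not RDMA
        f"--traddr={target_ip}",   # storage node address
        f"--trsvcid={port}",       # transport service ID (the TCP port)
        f"--nqn={subsystem_nqn}",  # NVMe Qualified Name of the target subsystem
    ]


# Hypothetical documentation address and NQN, for illustration only.
cmd = nvme_tcp_connect_cmd("192.0.2.10", "nqn.2020-01.com.example:volume-1")
print(shlex.join(cmd))
```

Once connected, the remote volume appears to the host as an ordinary NVMe block device, which is what FIO would then target.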