This week’s data storage standouts include Intel spinning out a fast interconnect business; HPE and Marvell’s high-availability NVMe boot drive kit for ProLiant servers; and Fujitsu going through its own, branded digital transformation.

Intel spins out Cornelis

Intel has spun out Cornelis Networks, founded by former Intel interconnect veterans Philip Murphy Jr., Vladimir Tamarkin and Gunnar Gunnarsson.

Cornelis will compete with Nvidia’s Mellanox business unit and technology, and possibly also HPE’s Slingshot interconnect. The latter is used in Cray Shasta supercomputers and HPE’s Apollo high performance computing servers.

Cornelis aims to commercialise Intel’s Omni-Path Architecture (OPA), a low-latency HPC-focused interconnect technology. The technology stems from two Intel acquisitions in 2012: QLogic’s TrueScale InfiniBand technology, and Cray’s Aries interconnect IP and engineers, bought in April of that year.

Cornelis’s initial funding is a $20m A-round led by Intel Capital, Downing Ventures, and Chestnut Street Ventures.

Fujitsu’s digital twin

Fujitsu is investing $1bn in a massive digital transformation project, which it is calling “Fujitra”.

The aim is to transform rigid corporate cultures such as “vertical division between different units” and “overplanning” by utilising frameworks such as Fujitsu’s Purpose, design-thinking, and agile methodology. Fujitsu’s purpose or mission is “to make the world more sustainable by building trust in society through innovation,” which seems entirely Japanese in its scope and seriousness.

Fujitsu will introduce a common digital service throughout the company to collect and analyse quantitative and qualitative data frequently and to manage actions based on such data. All information is centralised in real time to create a Fujitsu digital twin.

Fujitsu has appointed DX officers for each of the 15 corporate and business units as well as five overseas regions. They will be responsible for promoting reforms across divisions, advancing company-wide measures in each division and region, and leading DX at each division level.

Fujitra will be introduced at Fujitsu ActivateNow, an online global event, on Wednesday, October 14.

HPE and Marvell’s NVMe boot switch

Marvell’s 88NR2241 is an intelligent NVMe switch that enables data centres to aggregate and manage resources across multiple NVMe SSD controllers, and to increase reliability. The 88NR2241 delivers enterprise-class performance, system reliability, redundancy, and serviceability with consumer-class NVMe SSDs linked by PCIe. This NVMe switch has a DRAM-less architecture and supports low-latency NVMe transactions with minimum overhead.

HPE has implemented a customised version of the 88NR2241 for ProLiant servers, calling it an NVMe RAID 1 accelerator. The HPE NS204i-p NVMe OS Boot Device is a PCIe add-in card that includes two 480GB M.2 NVMe SSDs. This enables customers to mirror their OS through dedicated RAID 1.

The accelerator’s dedicated hardware RAID 1 OS boot mirroring eliminates downtime due to a failed OS drive – if one drive fails the business continues running. HPE OS Boot Devices are certified for VMware and Microsoft Azure Stack HCI for increased flexibility.
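To illustrate why a mirrored boot device keeps a server running after a drive failure, here is a minimal Python sketch of RAID 1 behaviour. It is a toy model only: the `MirroredBootVolume` class and its methods are hypothetical names invented for this example and say nothing about how the Marvell switch or HPE’s firmware is actually implemented.

```python
class MirroredBootVolume:
    """Toy RAID 1 model: writes go to both drives, reads use any healthy drive."""

    def __init__(self):
        # Two in-memory "drives", each mapping block number -> data.
        self.drives = [{}, {}]
        self.healthy = [True, True]

    def write(self, block, data):
        # Mirror the write to every drive that is still healthy.
        for i, drive in enumerate(self.drives):
            if self.healthy[i]:
                drive[block] = data

    def fail(self, index):
        # Simulate a drive failure.
        self.healthy[index] = False

    def read(self, block):
        # Serve the read from the first healthy drive.
        for i, drive in enumerate(self.drives):
            if self.healthy[i]:
                return drive[block]
        raise IOError("both mirrored drives have failed")


volume = MirroredBootVolume()
volume.write(0, b"bootloader")
volume.fail(0)                    # one M.2 SSD dies
surviving_copy = volume.read(0)   # still served by the other drive
```

The read only fails once both drives are marked as failed, which is the point of mirroring the OS across two SSDs.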

AWS Partner Network news

- Data protector Druva has achieved Amazon Web Services (AWS) Digital Workplace Competency status. This is Druva’s third AWS Competency designation. Druva has also been certified as VMware Ready for VMware Cloud.

- Cloud file storage supplier Nasuni has achieved AWS Digital Workplace Competency status. This status is intended to help customers find AWS Partner Network (APN) Partners offering AWS-based products and services in the cloud.

- Kubernetes storage platform supplier Portworx has achieved Advanced Technology Partner status in the AWS Partner Network (APN).

The shorter items

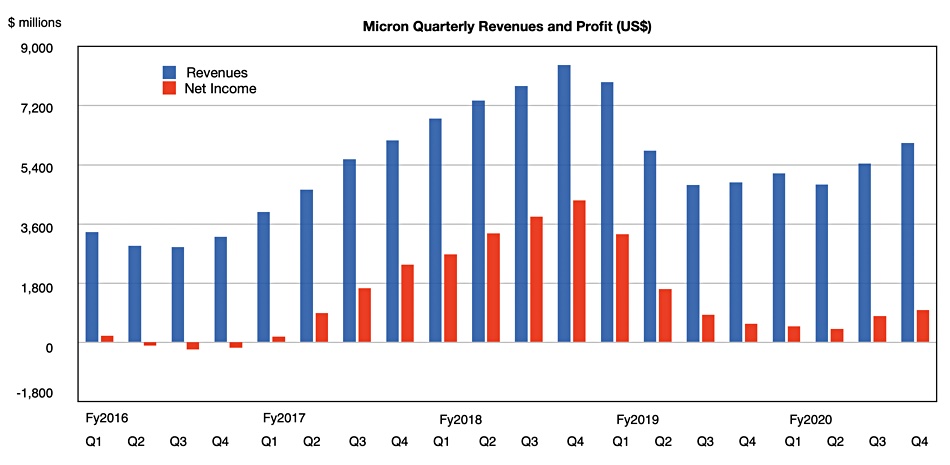

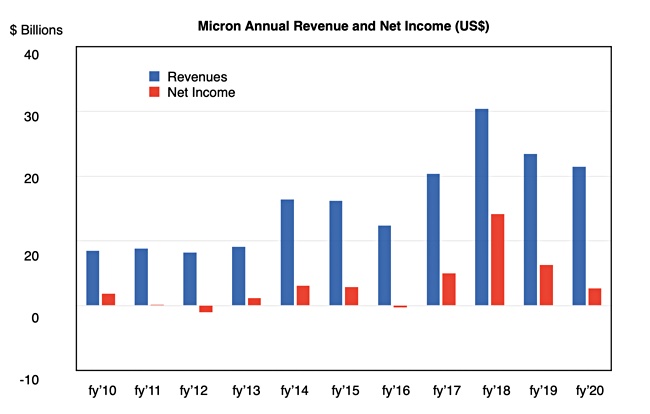

DigiTimes speculates that China-based memory chipmakers ChangXin Memory Technologies (CXMT) and Yangtze Memory Technologies (YMTC) could be added to the US trade ban export list. This list currently restricts shipments of semiconductors based on US technology to Huawei.

The Nikkei Asian Review reports SK hynix wanted to buy more shares in Kioxia, taking its stake from 14.4 per cent to 14.96 per cent. That would link Kioxia and SK hynix in a defensive pact against emerging Chinese NAND suppliers. This plan is now delayed as Kioxia has postponed its IPO.

Veritas has bought data governance supplier Globalnet to extend its digital compliance and governance portfolio, with visibility into 80-plus new content sources. These include Microsoft Teams, Slack, Zoom, Symphony and Bloomberg.

Dell has plunked Actifio’s Database Cloning Appliance (DCA) and Cloud Connect products on Dell EMC PowerEdge servers, VxRail and PowerFlex. Sales are fulfilled by Dell Technologies OEM Solutions.

Enmotus has launched an AI-enabled FuzeDrive SSD with 900GB and 1.6TB capacity points. It blends high-speed, high-endurance static SLC (1 bit/cell) with QLC (4 bits/cell) on the same M.2 board. AI code analyses usage patterns and automatically moves active and write-intensive data to the SLC portion of the drive. This speeds drive response and lengthens its endurance.
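The general idea of steering hot data to a small fast tier can be sketched in a few lines of Python. This is an assumption-laden illustration using a simple access-count heuristic; it is not Enmotus’s algorithm, and the `place_blocks` function and its parameters are hypothetical.

```python
from collections import Counter


def place_blocks(access_log, slc_capacity):
    """Return (slc_blocks, qlc_blocks) given a list of accessed block IDs."""
    counts = Counter(access_log)
    # Hottest blocks first; ties broken by block ID for deterministic output.
    ranked = sorted(counts, key=lambda block: (-counts[block], block))
    slc_blocks = set(ranked[:slc_capacity])   # most active data goes to SLC
    qlc_blocks = set(ranked[slc_capacity:])   # everything else stays on QLC
    return slc_blocks, qlc_blocks


log = ["a", "b", "a", "c", "a", "b", "d"]
slc, qlc = place_blocks(log, slc_capacity=2)
```

Here the two most frequently accessed blocks land in the SLC tier and the rest stay on QLC. A real drive would also have to weigh write intensity and migration cost.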

ExaGrid claims it has the only non-network-facing tiered backup storage solution with delayed deletes and immutable deduplication objects. When a ransomware attack occurs, this approach ensures that data can be recovered or VMs booted from the ExaGrid Tiered Backup Storage system. Not only can the primary storage be restored, but all retained backups remain intact. Check out ExaGrid’s two-minute video.

Deduplicating storage software supplier FalconStor has announced the integration of AC&NC’s JetStor hardware with StorSafe, its long-term data retention and reinstatement offering, and StorGuard, its business continuity product.

HCL Technologies has brought its Actian Avalanche data warehouse migration tool to the Google Cloud Platform.

MemVerge has announced the general availability of its Memory Machine software, which transforms DRAM and persistent memory such as Optane into a software-defined memory pool. The software provides access to persistent memory without changes to applications and speeds persistent memory to DRAM-like performance. Penguin Computing uses Optane Persistent Memory and Memory Machine software to reduce Facebook Deep Learning Recommendation Model (DLRM) inference times by more than 35x over SSD.

Western Digital’s SanDisk operation has announced a new line of Extreme portable SSDs with nearly twice the speed of the previous generation. The Extreme and Extreme PRO products use the NVMe interface and come in capacities up to 2TB. They offer a password interface and encryption. The Extreme reads and writes at up to 1,000MB/sec while the Extreme PRO achieves up to 2,000MB/sec.