The new Unity NV4000 is a flexible, multi-port, straightforward storage array from Nexsan.

The company focuses on three storage product lines: E-Series block storage for direct-attached or SAN use, Assureon immutable storage to keep data compliant and safe in the face of malware, and the Unity NV series of unified file, block, and object systems. There are three Unity systems, with the NV10000 topping the range as an all-flash, 24-drive system. It is followed by the mid-range, 60-drive NV6000 with a maximum capacity of 5.18 PB, and now we have the NV4000 for branch offices and small/medium businesses.

CEO Vincent Phillips tells us: “Unity is versatile storage with unmatched value. We support multiple protocols, we support the flexibility to configure flash versus spinning drive in the mix, and that works for whatever the enterprise needs. Every system has dual controllers and redundancy for high availability and non-destructive upgrades. There’s no upcharges for individual features and unlike the cloud, the Unity is cost-effective for the life of the product and even as you grow.”

Features include immutable snapshots and S3 object-locking. The latest v7.2 OS release adds a “cloud connector, which allows bi-directional syncing of files and directories with AWS S3, with Azure, Unity Object Store and Google Cloud coming soon.”

There are non-disruptive upgrades and an Assureon connector. With the latest v7.2 software, admins can now view file access by user via the SMB protocol as well as NFS.

Phillips said: “Every system has dual controllers and redundancy for high availability and non-destructive upgrades, but we also let customers choose between hardware and encryption on the drive with SED or they can utilize more cost-effective software-based encryption.”

All NV series models have FASTier technology to boost data access by caching. It uses a modest amount of solid-state storage to boost the performance of underlying hard disk drives by up to 10x, resulting in improved IOPS and throughput while maintaining cost-effectiveness and high capacity. FASTier supports both block (e.g. iSCSI, Fibre Channel) and file (e.g. NFS, SMB, FTP) protocols, allowing unified storage systems to handle diverse workloads, such as random I/O workloads in virtualized environments, efficiently.

Phillips said the NV10000 flagship model “is employed by larger enterprises. It supports all flash or hybrid with performance up to 2 million IOPS, and you can expand with our 78-bay JBODs and scale up to 20 petabytes. One large cancer research facility that we have is installed at 38 petabytes and growing. They’re working on adding another 19 petabytes.”

The NV6000 was “introduced in January, and it’s been a mid-market workhorse. It can deliver one and a half million IOPS and it can scale up to five petabytes. We’ve seen the NV6000 manage all kinds of customer workloads, but one that we consistently see is it utilized as a backup target for Veeam, Commvault and other backup software vendors.”

Phillips said: “The product family needed a system that met the requirements at the edge or for smaller enterprises. That’s where the NV4000 that we’re talking about today comes in. It has all the enterprise features of the prior two models, but in a cost-effective model for the small organization or application or for deployment at the edge of an enterprise. The NV4000 is enterprise class, but priced and sized for the small or medium enterprise. It manages flexible workloads, backups, and S3 connections for hybrid solutions, all in one affordable box. The NV4000 can be configured in all flash or hybrid and it can deliver up to 1 million IOPS and it has the same connectivity flexibility as its bigger sisters.”

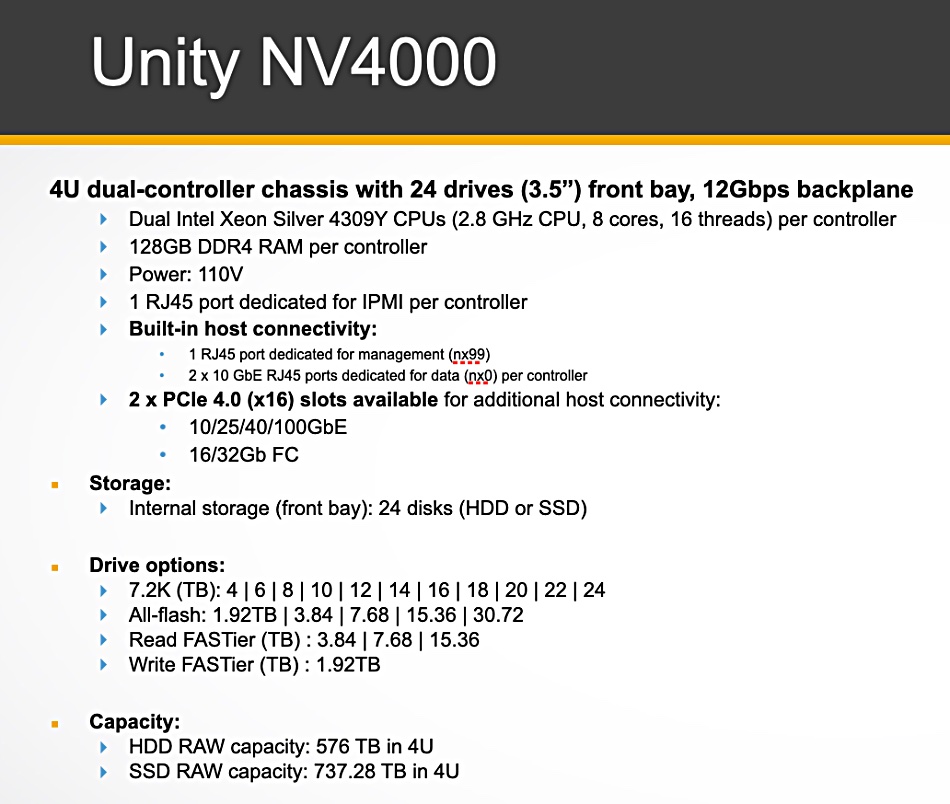

The NV4000 comes in a 4RU chassis with 24 front-mounted 3.5-inch drive bays. Each controller has dual Intel Xeon Silver 4309Y CPUs (2.8 GHz, 8 cores, 16 threads) and 128 GB of DDR4 RAM. There is a 2 Gbps backplane, and connectivity options include 10/25/40/100 GbE and 16/32 Gb Fibre Channel. The maximum flash capacity is 737.28 TB, while the maximum capacity with spinning disk is 576 TB. The highest-capacity HDD available is 24 TB, whereas Nexsan supplies SSDs with up to 30.72 TB.
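The two capacity maximums follow directly from the bay count and the largest drive of each type. The short Python sketch below reproduces the arithmetic, assuming all 24 bays hold the largest available drive and that the quoted figures are raw capacity with no RAID or spare overhead deducted. The constant and function names are illustrative, not Nexsan terminology.

```python
from decimal import Decimal

# Figures quoted in the article; names are illustrative only.
DRIVE_BAYS = 24
MAX_SSD_TB = Decimal("30.72")  # largest SSD Nexsan supplies
MAX_HDD_TB = Decimal("24")     # largest HDD available


def raw_capacity_tb(bays: int, drive_tb: Decimal) -> Decimal:
    """Raw capacity with every bay populated (assumes no RAID/spare overhead)."""
    return bays * drive_tb


flash_tb = raw_capacity_tb(DRIVE_BAYS, MAX_SSD_TB)
disk_tb = raw_capacity_tb(DRIVE_BAYS, MAX_HDD_TB)

print(f"All-flash maximum: {flash_tb} TB")
print(f"All-disk maximum:  {disk_tb} TB")
print(f"Flash-to-disk capacity ratio: {flash_tb / disk_tb}")
```

Decimal arithmetic is used so the result matches the published 737.28 TB figure exactly rather than picking up binary floating-point rounding.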

That disparity is set to widen as disk capacities approach 30 TB and SSD makers supply 61 TB and 122 TB drives. Typically these use QLC (4 bits/cell) flash. Phillips said: “We don’t support the QLC today. We are working with one of the hardware vendors and we’ll begin testing those very shortly.”

Nexsan has a deduplication feature in beta testing and is also testing Western Digital’s forthcoming HAMR disk drives.

Nexsan has around a thousand channel partners and between 9,000 and 10,000 customers. Partners and customers should welcome the NV4000 as a versatile edge, ROBO, and SMB storage workhorse.