Magneto-Resistive Random Access Memory (MRAM) has so far generally failed to replace SRAM because its Spin Transfer Torque (STT-MRAM) implementation is too slow and doesn’t last long enough. A new variant, Spin Orbit Torque MRAM (SOT-MRAM), promises to be faster, last longer and use less power.

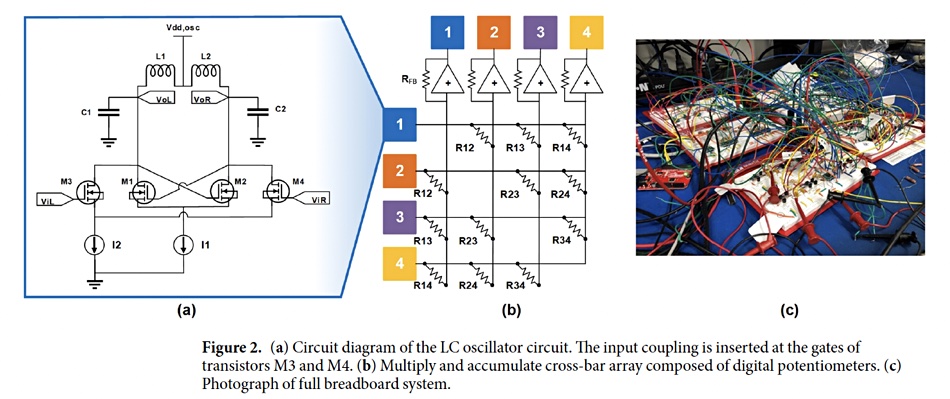

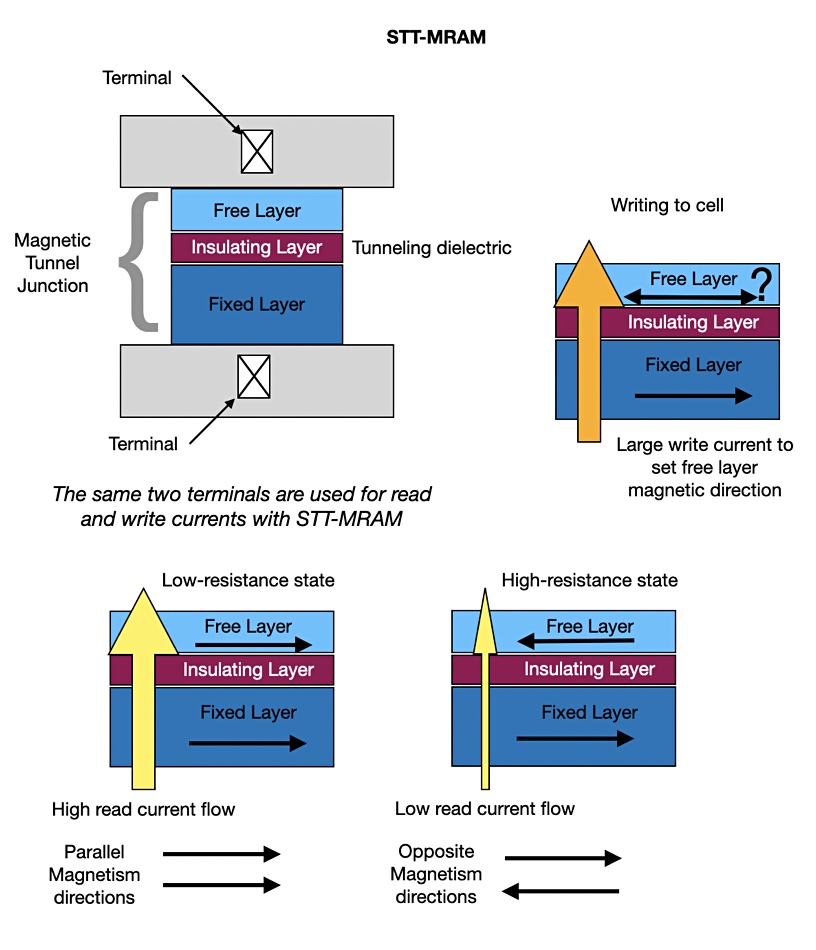

To better understand what’s going on, we have to delve into how STT-MRAM works. This is my understanding and I am neither a physicist nor a CMOS electrical engineer, so bear with me. STT-MRAM is based on a magnetic tunnel junction. This is a three-layer CMOS (Complementary Metal Oxide Semiconductor) device with a dielectric or partially insulating layer between two ferromagnetic plates or layers. The thicker layer has a fixed or pinned magnetic direction.

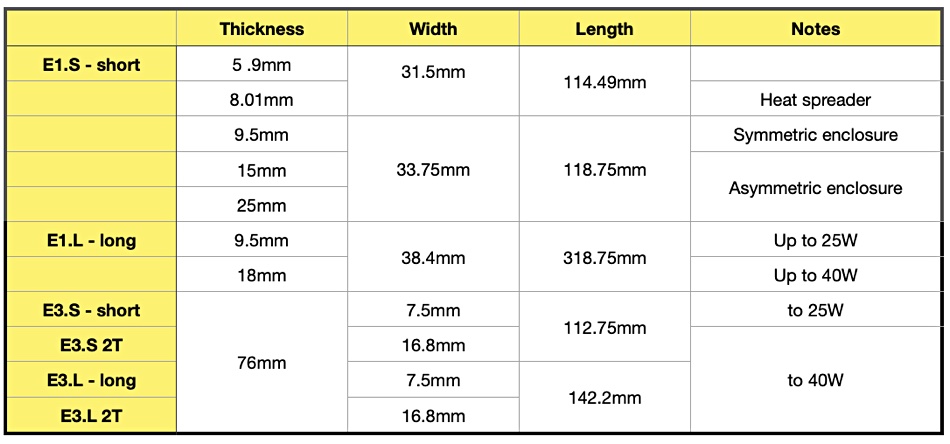

The upper and thinner layer is called a free layer and its magnetic polarity can be set either way. When the magnetic polarity of both layers is in sync (parallel) then the electrical resistance of the device is lower than when the polarities are opposite, as shown in the diagram above. High or low resistance signals binary one or zero. The resistance is made high or low with a write current, stronger than the resistance-sensing read current, sent through the device.
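To make the read and write behaviour concrete, here is a minimal toy sketch of a magnetic tunnel junction in Python. The resistance values, the class and method names, and the choice of which state counts as binary one are all assumptions made for illustration; they are not taken from any real device or datasheet.

```python
from dataclasses import dataclass

# Illustrative values only (assumptions); real devices vary widely.
R_PARALLEL_OHMS = 5_000.0       # assumed low-resistance state (polarities parallel)
R_ANTIPARALLEL_OHMS = 10_000.0  # assumed high-resistance state (polarities opposite)


@dataclass
class MagneticTunnelJunction:
    """Toy model: the fixed layer always points 'up' (+1); the free layer is +1 or -1."""

    free_layer: int = 1  # +1 = parallel to the fixed layer, -1 = antiparallel

    def resistance(self) -> float:
        # Parallel polarities give the lower resistance.
        return R_PARALLEL_OHMS if self.free_layer == 1 else R_ANTIPARALLEL_OHMS

    def write(self, bit: int) -> None:
        # Assumed convention: 0 -> parallel (low R), 1 -> antiparallel (high R).
        self.free_layer = 1 if bit == 0 else -1

    def read(self) -> int:
        # Compare the resistance with a midpoint reference to recover the bit.
        threshold = (R_PARALLEL_OHMS + R_ANTIPARALLEL_OHMS) / 2
        return 1 if self.resistance() > threshold else 0
```

Calling `write(1)` flips the free layer to the antiparallel state, and a later `read()` recovers the bit purely from the resistance, which is the whole trick behind the name.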

Electrons in the magnetic layers have spins (angular momentum) which can be in an up or down direction. The current sent through the fixed layer is spin-polarised so that the bulk of its electrons spin in one direction. Some of these travel, or rather tunnel, through the dielectric layer into the free layer and can change its magnetic polarity, and thus the device’s resistance. Hence the name, magneto-resistive RAM. The change persists without power, which is why MRAM is non-volatile, but repeated writes degrade the device’s tunnel barrier material and reduce its life.

STT-MRAM can deliver higher speed by using a larger write current, but that shortens the device’s endurance. Or it can deliver longer endurance at the cost of slower speed.

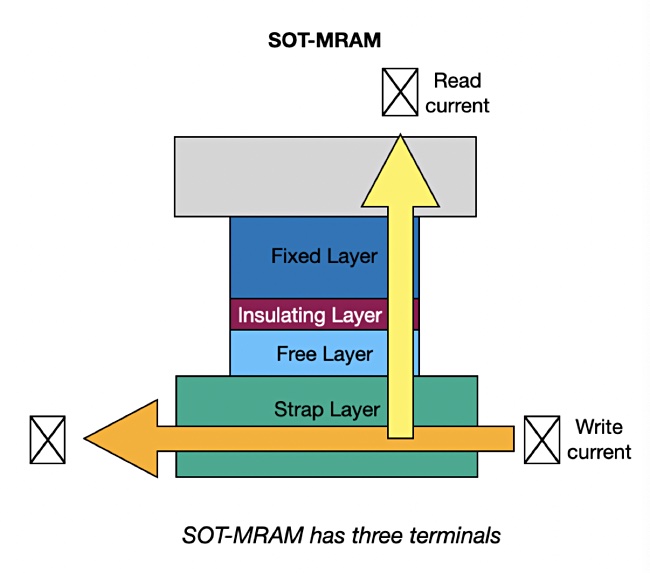

Proponents of SOT-MRAM say the problem is due to the write and read currents using the same path, from terminal to terminal, across the device. By separating the two currents you can raise both speed and endurance. But you still need to set the magnetic polarity of the free layer.

This is done by passing the write current through a so-called strap layer set adjacent to the free layer.

This means the device now has three terminals — a point to which we will return.
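The difference between the two write paths can be sketched as a toy comparison in Python. This is purely a bookkeeping model under stated assumptions: the class names and pulse counters are hypothetical, and it says nothing about real currents or wear rates. It only tracks which part of the cell each write pulse passes through.

```python
from dataclasses import dataclass


@dataclass
class SttCell:
    """Two-terminal toy cell: reads and writes share one path through the tunnel barrier."""

    bit: int = 0
    barrier_write_pulses: int = 0  # write pulses that crossed the tunnel barrier

    def write(self, bit: int) -> None:
        # The write current goes terminal to terminal, through the barrier.
        self.barrier_write_pulses += 1
        self.bit = bit

    def read(self) -> int:
        return self.bit


@dataclass
class SotCell:
    """Three-terminal toy cell: writes run along the strap layer, not through the barrier."""

    bit: int = 0
    barrier_write_pulses: int = 0  # stays at zero in this model
    strap_write_pulses: int = 0    # write pulses sent along the strap layer

    def write(self, bit: int) -> None:
        # The write current uses the strap layer next to the free layer.
        self.strap_write_pulses += 1
        self.bit = bit

    def read(self) -> int:
        return self.bit
```

Writing a thousand bits to each cell leaves `barrier_write_pulses` at 1,000 for the STT cell and at zero for the SOT cell, which is the intuition behind the claim that separating the paths spares the tunnel barrier.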

According to Bryon Moyer of Semiconductor Engineering (Semiengineering.com), writing requires either a special asymmetrically shaped strap layer or an externally applied magnetic field. Research is progressing into field-free switching using atomic-scale phenomena such as the Rashba effect, which is concerned with spin orbits and crystal asymmetry, and the Dzyaloshinskii–Moriya effect, which is related to magnetic vortices.

A particle can spin on its own axis or it can spin — orbit — around some other particle.

We can say that electron spin orbits represent the interaction of a particle’s spin with its motion inside an electric field, without actually understanding what that means. A “Nanoscale physics and electronics” scientific paper stated: “Spin orbit coupling (SOC) can be regarded as a form of effective magnetic field ‘seen’ by the spin of the electron in the rest frame. Based on the notion of effective magnetic field, it will be straightforward to conceive that spin orbit coupling can be a natural, non-magnetic means of generating spin-polarized electron current.”

It may be “straightforward” to CMOS-level electrical engineers and scientists but this writer is now operating way out of any mental comfort zone.

Moyer says researchers realised that with the right layering and combination of ferroelectric or ferrielectric materials, and with the right spin-index relationships, the magnetic symmetry can be broken to drive the desired [magnetic] orientation. In other words, you can use spin orbits to set the free layer’s magnetic field in the desired direction.

Whatever the method, the magnetic polarity of the free layer can be set and the tunnel junction’s resistance be read as with STT-MRAM.

The three-terminal point means that an SOT-MRAM cell requires an extra select transistor — one per terminal — and this makes an SOT cell bigger than an STT cell. Unless this conundrum can be solved, SOT-MRAM may be restricted to specific niche markets within the overall SRAM market.

It is likely to be three years or more — perhaps ten years — before any SOT-MRAM products will be ready for testing by customers. In the meantime organisations like Intel, the Taiwan Semiconductor Research Institute (TSRI) and Belgium’s IMEC are researching SOT technology. We’ll keep a watch on what’s going on.