Komprise's automated AI data pipelines can now detect and protect personally identifiable information (PII).

The unstructured data manager has a Smart Data Workflow Manager product, aimed at organizations that want to prevent the leakage of PII and other sensitive data. Komprise says IT teams fear the risks of sensitive data “lurking where it shouldn’t be” and that these risks are increasing as unstructured data grows. The popularity of GenAI is exacerbating the issue.

Komprise points out that storage admins are generally responsible for data governance and compliance but lack ways to do this systematically across their data estate. CEO Kumar Goswami stated: “The risk of sensitive data breaches is escalating and thereby paralyzing organizations from using AI. We are pleased to systematically reduce sensitive data risks so that our customers can improve their cybersecurity and provide data governance for AI ingestion with the new sensitive data detection and mitigation capabilities in Komprise Smart Data Workflow Manager.”

Co-founder, president, and COO Krishna Subramanian said in an interview with Blocks & Files earlier this month that this feature was coming. Smart Data Workflow Manager now includes:

- Standard PII detection: Select which PII data types to scan for, such as national IDs, credit card numbers, and email addresses. Multiple classifications are supported to identify multiple types of PII within any given file.

- Custom Sensitive Data Detection: Customers can find any text pattern in their data via both keyword and regular expression (regex) search to identify specific data formats such as employee IDs, machine or instrument IDs, product or project codes, or PHI data such as healthcare system-specific patient record IDs.

- Scans Sensitive Data in Place: Executes locally behind enterprise firewalls so sensitive data stays in place, unlike cloud-based data detection services.

- Remediate and Move: Once PII data is detected, users can set up a workflow to confine the data or move it to a safe location.

- Pre-Process for AI Ingest: Sensitive data detection can be a pre-process step for an AI ingest workflow to eliminate sensitive data leakage to AI.

- Ongoing Workflow: Users can set workflows to run periodically, so Komprise automatically finds and acts on any new sensitive data for ongoing detection, tagging and mitigation, with full audit capabilities.
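
To make the keyword and regex detection idea concrete, here is a minimal Python sketch of how a scanner might attach several classifications to one piece of text. It is not Komprise's implementation or API: the pattern set, the `EMP-` employee ID format, the keyword list, and the function names are all assumptions chosen for illustration, and the Luhn checksum is a common generic way to cut false positives on card-like numbers.

```python
import re

# Hypothetical patterns for illustration only; not Komprise's detection rules.
PATTERNS = {
    "email": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "credit_card": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
    # Made-up custom format standing in for a site-specific employee ID.
    "employee_id": re.compile(r"\bEMP-\d{6}\b"),
}

# Hypothetical keyword list, matched case-insensitively as substrings.
KEYWORDS = {"confidential", "patient record"}


def luhn_ok(candidate):
    """Return True if the digits in candidate pass the Luhn checksum."""
    digits = [int(c) for c in candidate if c.isdigit()]
    total = 0
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def classify(text):
    """Map each classification label to the matches found in text."""
    findings = {}
    for label, pattern in PATTERNS.items():
        matches = pattern.findall(text)
        if label == "credit_card":
            # Keep only card-like numbers with a valid checksum.
            matches = [m for m in matches if luhn_ok(m)]
        if matches:
            findings[label] = matches
    lowered = text.lower()
    hits = sorted(k for k in KEYWORDS if k in lowered)
    if hits:
        findings["keyword"] = hits
    return findings


if __name__ == "__main__":
    sample = ("Contact jane.doe@example.com, card 4111 1111 1111 1111, "
              "badge EMP-004217. Confidential.")
    print(classify(sample))
```

In a workflow of the kind described above, the labels returned for each file could drive the next step, for example tagging the file, moving it to a restricted location, or excluding it from an AI ingest set.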

The Komprise software maintains a full audit record of all data processed by any workflow, such as copying data to a location for ingestion by an AI or ML system.

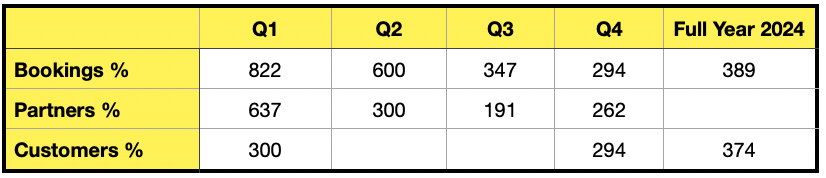

Komprise Smart Data Workflows, along with the new sensitive data detection and regex search, are currently in early access for customers and partners. They will be generally available by the end of Q1 as part of the Komprise Intelligent Data Management Platform.