Hammerspace claims it has set new records in the MLPerf Storage 1.0 benchmark, with its Tier 0 storage beating DDN's Lustre-based system by more than 16x at the same client count.

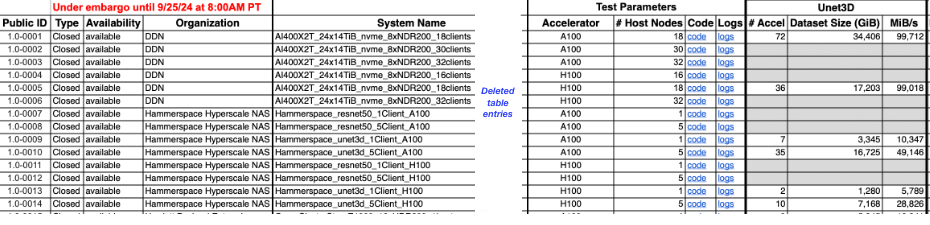

The MLPerf Storage benchmark is produced by MLCommons, and its v1.0 results were made public in September. The benchmark combines three workloads (3D-Unet, Cosmoflow, and ResNet50) with two types of simulated GPU (Nvidia A100 and H100) to give a six-way view of a storage system's ability to keep GPUs more than 90 percent busy with machine learning work. Hammerspace scored 5,789 MiB/sec on the 3D-Unet workload with 2x H100 GPUs, and 28,826 MiB/sec with 10x H100s.

DDN scored 99,018 MiB/sec with 36x H100s, using an EXAScaler AI400X2T system with 18 clients. MLCommons publishes the full benchmark table, which has a # Accel column giving the number of GPUs (accelerators) supported. An edited extract, with the DDN and Hammerspace entries, is shown below:

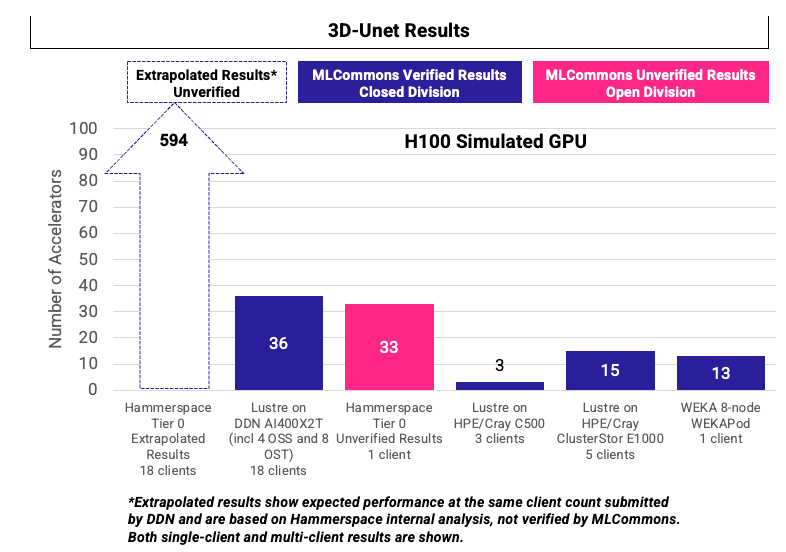

Since then, Hammerspace has refined its Global Data Platform technology to embrace locally attached NVMe SSDs in the GPU servers. It calls them Tier 0 storage and uses them as a front end to external, GPUDirect-accessed datasets, providing microsecond-level storage read and checkpoint write access to accelerate AI training workloads. It has now repeated its MLPerf Storage benchmark runs with this updated Tier 0 software and achieved significantly better performance, according to a Hammerspace chart of the results.

The Hammerspace results have been submitted but not verified by the MLCommons MLPerf organization and should be regarded as tentative.

Hammerspace says its single-client system running Tier 0 software supported 33 accelerators, compared with DDN's 36 for its 18-client system. Extrapolating to 18 clients, Hammerspace says its results would support 594x H100 accelerators, a great deal more than DDN, or anyone else for that matter.
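The headline numbers rest on simple per-client arithmetic. The minimal sketch below uses only the figures quoted above and assumes perfectly linear scaling, which is the same assumption behind Hammerspace's extrapolation. It reproduces the 594 figure and shows where the "more than 16x at the same client count" claim appears to come from: DDN's 36 accelerators across 18 clients works out to two per client, against Hammerspace's claimed 33 for a single client. The function names are hypothetical and purely illustrative.

```python
# Figures as reported in the article. Hammerspace's Tier 0 numbers are not yet
# verified by MLCommons, and its 18-client figure is an extrapolation.
DDN_CLIENTS = 18
DDN_ACCELERATORS = 36            # H100s supported in DDN's verified 18-client run
HS_ACCELERATORS_PER_CLIENT = 33  # Hammerspace's claimed single-client Tier 0 result


def accelerators_per_client(accelerators: int, clients: int) -> float:
    """Average number of simulated H100s kept busy per client system."""
    return accelerators / clients


def linear_extrapolation(per_client: int, clients: int) -> int:
    """Hypothetical helper: assumes throughput scales perfectly linearly with clients."""
    return per_client * clients


ddn_per_client = accelerators_per_client(DDN_ACCELERATORS, DDN_CLIENTS)
hs_at_18_clients = linear_extrapolation(HS_ACCELERATORS_PER_CLIENT, DDN_CLIENTS)
per_client_ratio = HS_ACCELERATORS_PER_CLIENT / ddn_per_client

print(f"DDN: {ddn_per_client:.1f} accelerators per client")
print(f"Hammerspace extrapolated to {DDN_CLIENTS} clients: {hs_at_18_clients} accelerators")
print(f"Per-client ratio: {per_client_ratio:.1f}x")
```

Note that the 16.5x ratio and the 594 total hold only if each added client delivers exactly the same result as the single tested client; that is the linear-scaling premise DDN disputes below as "extrapolated".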

David Flynn, founder and CEO of Hammerspace, stated: “Our MLPerf 1.0 benchmark results are a testament to Hammerspace Tier 0’s ability to unlock the full potential of GPU infrastructure. By eliminating network constraints, scaling performance linearly and delivering unparalleled financial benefits, Tier 0 sets a new standard for AI and HPC workloads.”

Hammerspace claims its MLPerf testing also showed that Tier 0-enabled GPU servers achieved 32 percent greater GPU utilization and 28 percent higher aggregate throughput compared to external storage accessed via 400GbE networking.

Overall, it claims that extrapolated results from the benchmark confirm that scaling GPU servers with Tier 0 storage multiplies both throughput and GPU utilization linearly, ensuring consistent, predictable performance gains as clusters expand.

DDN’s SVP for Products, James Coomer, told us: “We’re proud of our proven and validated performance results in the MLPerf Storage Benchmark, where the DDN data platform consistently sets new records for throughput and efficiency in real-world machine learning workloads. With the release last week of our next-generation A³I data platform, the AI400X3, that drives performance up to 150GB/s from a single 2RU appliance which supports performance of up to 150 simulated GPUs, we’re reinforcing our commitment to delivering innovative and high-performance data solutions.

“While we welcome innovation across the storage landscape, our results—unlike speculative or extrapolated claims—have been rigorously tested and verified by MLCommons, highlighting our commitment to transparency and excellence.”