A new chapter is opening in the all-flash storage array (AFA) world as QLC flash enables closer cost comparisons with hybrid flash/disk and disk drive arrays and filers.

There are now 14 AFA players, some dedicated and others with hybrid array/filer alternatives. They can be placed in three groups. The eight incumbents, long-term enterprise storage array and filer suppliers, are: Dell, DDN, Hitachi Vantara, HPE, Huawei, IBM, NetApp and Pure Storage. Pure is the newest incumbent and classed as such by us because of its public ownership status and sustained growth rate. It is not a legacy player, though, as it was only founded in 2009, just 14 years ago.

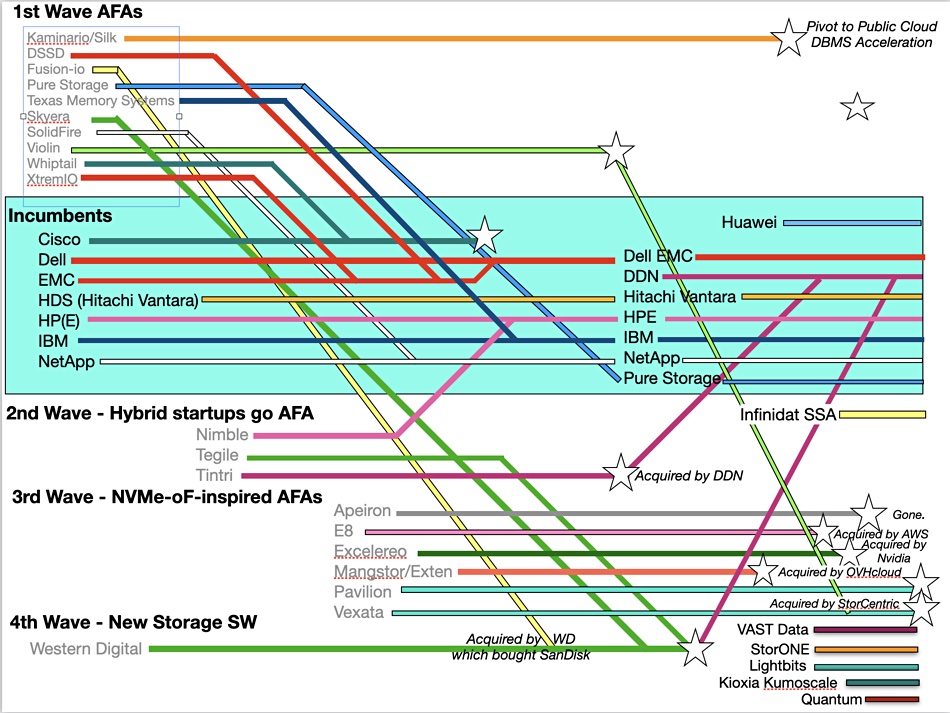

An updated AFA history graphic – we first used this graphic in 2019 – shows how these incumbents have adopted AFA technology both by developing it in-house and acquiring AFA startups. We have added Huawei to the chart as an incumbent; it is a substantial AFA supplier globally.

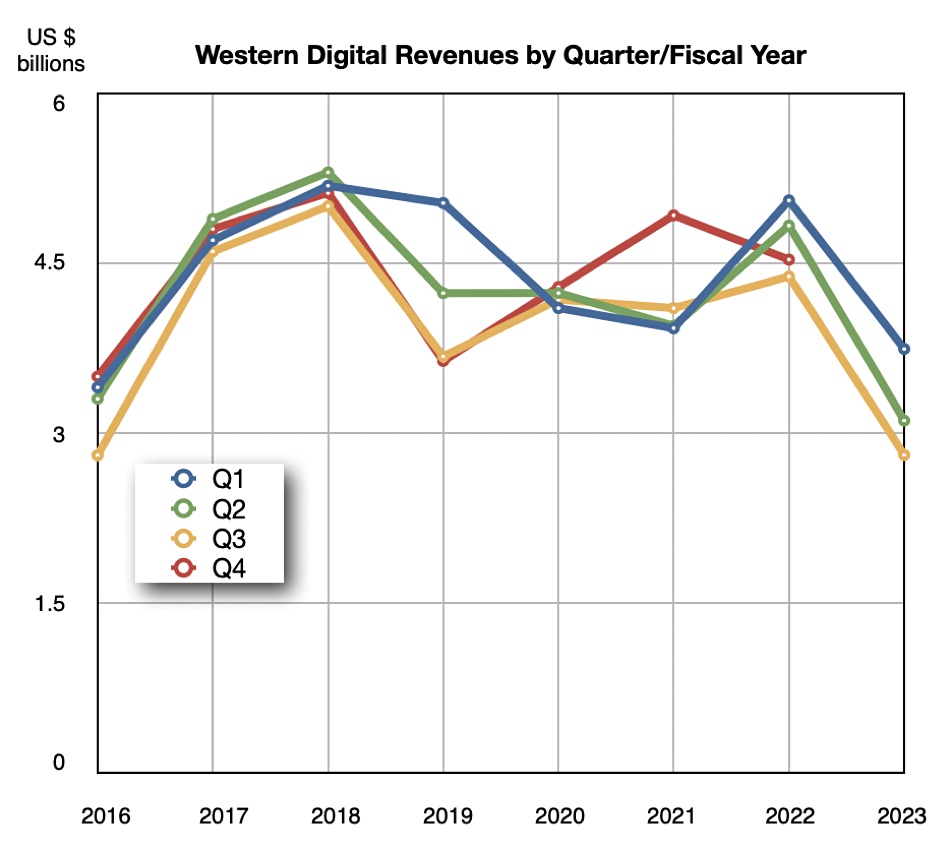

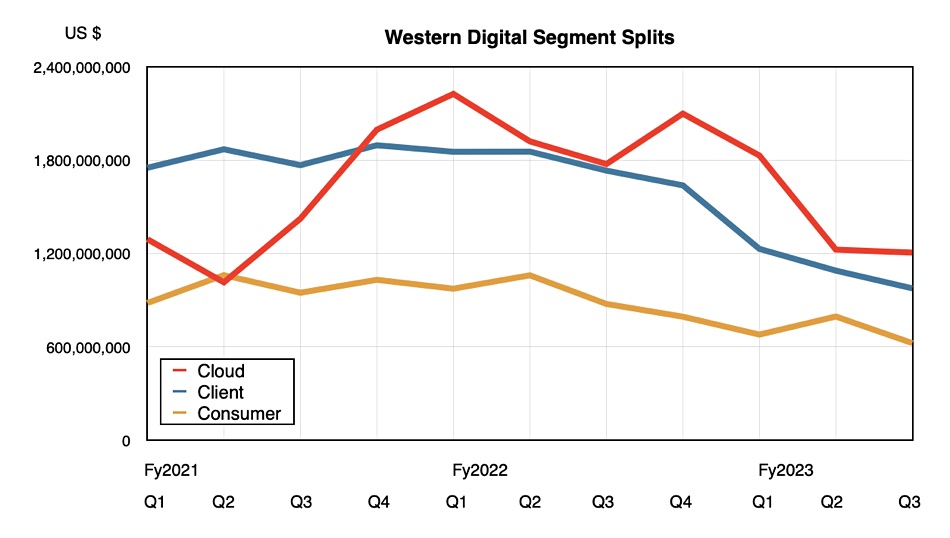

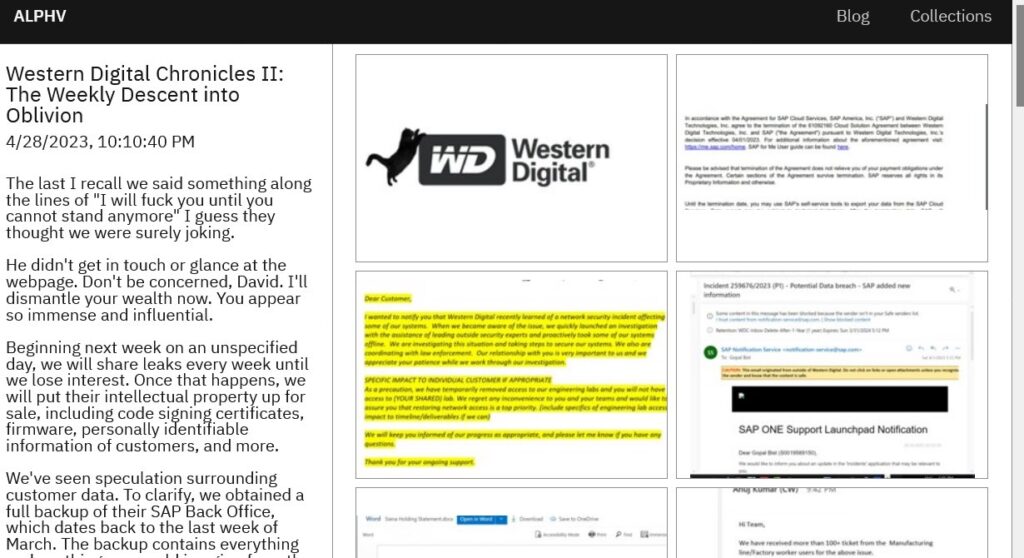

A second wave of hybrid array startups adopting all-flash technology – Infinidat, Nimble, Tegile and Tintri – has mostly been acquired, with HPE buying Nimble, Western Digital buying Tegile, and DDN ending up with Tintri. But Infinidat has grown and grown and added an all-flash SSA model to its InfiniBox product line.

Infinidat is of a similar age to Pure, being founded in 2010, and has unique Neural Cache memory caching technology which it uses to build high-end enterprise arrays competing with Dell EMC’s PowerMax and similar products. It has successfully completed a CEO transition from founder Moshe Yanai to ex-Western Digital business line exec Phil Bullinger, and has been growing strongly for three years. It’s positioned to become a new incumbent.

The third wave was formed of six NVMe-focused startups. They have all gone now as well, either acquired or crashed and burned. NVMe storage and NVMe-oF access proved to be technology features and not products as all the incumbents adopted them and basically blew this group of startups out of the water.

The fourth wave of AFA startups has three startup members and two existing players moving into the AFA space. All five are software-defined, use commodity hardware, and are different from each other.

VAST Data has a reinvented filer using a single tier of QLC flash positioned as being suitable for performance workloads, using SCM-based caching and metadata storage, and parallel access to scale-out storage controllers and nodes, with data reduction making its QLC flash good for capacity data storage as well. Its brand-new partnership with HPE gives it access to the mid-range enterprise market while it concentrates its direct sales on high-end customers.

StorONE is a more general-purpose supplier, with a rewritten and highly efficient storage software stack and a completely different philosophy about market and sales growth to VAST Data. VAST has taken $263 million and is prioritizing growth and more growth, while StorONE has raised around $30 million and is focused on profitable growth.

Lightbits is different again, providing a block storage array accessed by NVMe/TCP. It is relatively new, having been founded in 2015.

Kioxia is included in this group because it has steadily developed its Kumoscale JBOF software capabilities. The system supports OpenStack and uses Kioxia’s NVMe SSDs. Kioxia does not release any data about its Kumoscale sales or market progress but has kept on developing the software without creating much marketing noise about it.

Lastly, Quantum has joined this fourth wave group because of its Myriad software announcement. This provides a unified and scale-out file and object storage software stack. Its development was led by Brian Pawlowski, who has deep experience of NetApp’s FlashRay development and Pure Storage AFA technologies. He characterizes Quantum as a late mover in AFA software technology, aware of the properties and limitations of existing AFA tech, and crafting all-new software to fix them.

We have not included suppliers such as Panasas, Qumulo and WEKA in our list. Panasas has an all-flash system but is a relatively small player with an HPC focus. Scale-out filesystem supplier Qumulo also supports all-flash hardware but, in our view, is predominantly a hybrid multi-cloud software supplier. WEKA too is a software-focused supplier.

Two object storage suppliers support all-flash hardware – Cloudian and Scality – but the majority of their sales are on disk-based hardware. Scality CEO Jerome Lecat tells us he believes the market is not really there for all-flash object storage. These two players are not included in our AFA suppliers’ list as a result.

The main focus in the AFA market is on taking share from all-disk and hybrid flash/disk suppliers in the nearline bulk storage space. In general, AFA suppliers think that SSD capacity will continue to exceed HDD capacity, with 60TB drives coming before the end of the year, and they are confident they will continue to grow their businesses at the expense of the hybrid array suppliers. Some, like Pure Storage, are even predicting a disk drive wipeout. That prediction may come back to haunt them – or they could be laughing all the way to the QLC-powered flash bank.