Data protector ArcServe paid DCIG to review its UDP 9.0 backup product. The resulting study found that ArcServe UDP 9.0 offers organizations a clear edge in protecting against ransomware while tackling the persistent challenges of managing backup and recovery complexities. It hasn’t made the review public.

…

Disaster recovery specialist Continuity Center and Backblaze have announced Cloud Instant Business Recovery to help businesses of all sizes prepare for and recover from disaster – from ransomware to natural catastrophes. They say small and mid-sized businesses have historically been priced out of existing solutions designed for large enterprises with massive budgets and teams. Cloud Instant Business Recovery (Cloud IBR) instantly recovers Veeam backups from the Backblaze B2 Storage Cloud. The fully automated service deploys a recovery process through a simple web UI and, in the background, uses phoenixNAP’s Bare Metal Cloud servers to import Veeam backups stored in B2 and fully restore the customer’s server infrastructure.

…

DataOps.live – which enables companies to build, test and deploy applications on Snowflake – has raised $17.5 million in an A-round from Notion and Anthos, and is growing at speed. Its breakout fiscal 2023 saw 400 percent ARR growth.

…

Dell will be reselling Continuity’s StorageGuard, a security posture management solution that hardens storage and backup systems. StorageGuard scans customer environments to check that they are secure and follow all relevant vendor, industry and regulatory best practices.

…

Google has announced GA of its C3 machine series VM with gen 4 Xeon SP (Sapphire Rapids) processors and made it available to GCE and GKE customers. C3 uses DDR5 memory and is currently available in the highcpu (2GB/vCPU) memory configuration. Standard (4GB/vCPU) and highmem (8GB/vCPU) configurations, as well as local SSD (up to 12TB), will be available in the coming months. C3 VMs use Google’s latest Infrastructure Processing Unit (IPU) to offload networking, deliver high performance block-storage with Hyperdisk, and speed up ML training and inference with Intel AMX.

…

Hitachi Vantara says the Arizona Department of Water Resources has chosen its Pentaho Data Catalog to help mitigate the state’s water crisis. The state has centralized data collected from 330,000 distributed water resources, leading to better insights and improved water use for its 7 million residents. To effectively manage Arizona’s water supply, the Department of Water Resources collects, stores and analyzes reported water uses of nearly 6 trillion gallons per year across thousands of wells and surface water sources. By analyzing data against records of geolocation coordinates, depth to water and uses, the agency is better equipped to establish the adjudication of water rights with trusted, accurate data.

…

At its Innovative Data Infrastructure Forum (IDI Forum) 2023 in Munich, Huawei added three entry-level arrays and an entry-level protection array to its OceanStor line. The line consists of the 3000, 5000/6000 and 8000/18000 all-flash arrays (AFAs) and the 2000, 5000, 6000 and 18000 hybrid flash/disk arrays; the bigger the number, the bigger and more powerful the product. The newcomers are the 2000 AFA and the 2200 and 2600 hybrid arrays. It has also added an entry-level X300 to its OceanProtect products, augmenting the existing X6000, X8000 and X9000 models.

Huawei believes emerging big data and AI applications place higher demands on the parallel processing of diversified data. Cloud-native applications are becoming more prevalent in enterprise data centers, and reliable, high-performance container storage will be a necessity. The read/write bandwidth and I/O access efficiency of scale-out storage need to be significantly improved. An intelligent data fabric is required to implement a global data view and unified data scheduling across systems, regions, and clouds.

…

IDrive e2 announced the release of its on-premises object storage appliance at VeeamON 2023. It says the appliance will radically simplify managing, storing, and protecting data while allowing S3/HTTP access for any application, device or end user. A spokesperson told us: “You recently wrote about Object First, so we will compete with them, our pricing is available upon request but we will be more aggressive than Object First, and considering competitors like Wasabi or Backblaze, we are the first to launch such a device.”

…

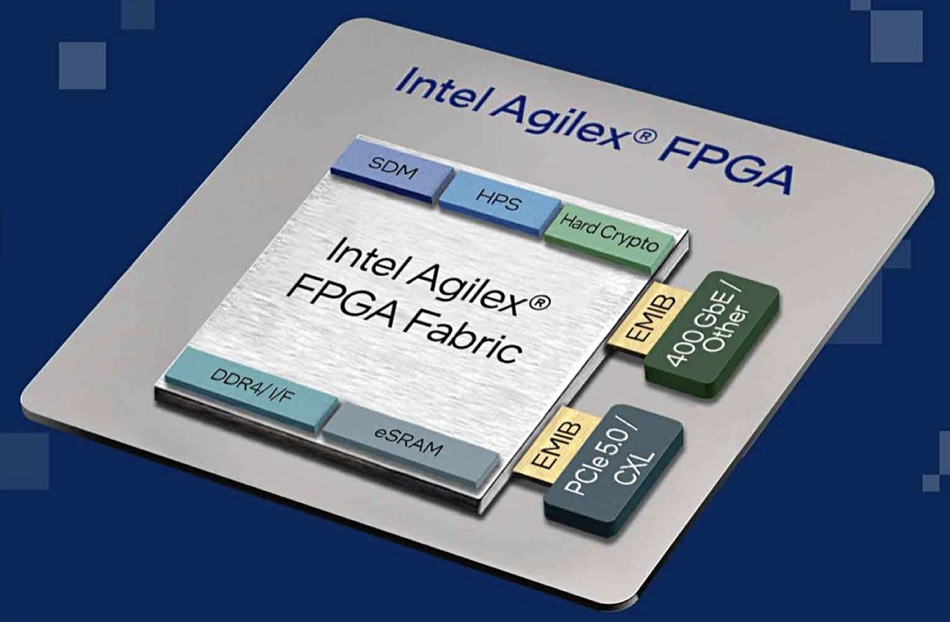

Intel is volume shipping the Agilex 7 FPGA with the R-Tile chiplet. This FPGA is the first with PCIe 5.0 and CXL capabilities and the only FPGA with hard intellectual property supporting these interfaces. The “hard intellectual property” term signifies that chip designers can’t significantly modify the digital IP cores involved. Intel says by using Agilex 7 with R-Tile, customers can seamlessly connect their FPGAs with processors, such as 4th Gen Intel Xeon Scalable ones, with the highest bandwidth processor interfaces to accelerate targeted datacenter and high-performance computing (HPC) workloads.

…

Kasten by Veeam has released its Kasten K10 v6 Kubernetes data protection software. It includes features to help customers scale cloud-native data protection more efficiently, better protect applications and data against ransomware attacks, and increase accessibility with new cloud native integrations. It now supports Kubernetes 1.26, Red Hat OpenShift 4.12, and a new built-in blueprint for Amazon RDS. The platform also has hybrid cloud support on GCP, cross-platform restore targets for VMware Tanzu environments, and new Cisco Hybrid Cloud CVD with Red Hat OpenShift and Kasten K10. There are new storage options for NetApp ONTAP S3 and Dell-EMC ECS S3.

…

Lenovo says it is the number 5 global player in storage, behind, we understand, Dell, HPE, NetApp and Pure Storage. It was number 8 in its previous fiscal year. Its storage revenues, inside its Infrastructure Solutions Group (ISG), were up 208 percent Y/Y in its Q4 FY22/23 results. Servers grew 29 percent while its SW unit grew 25 percent; storage strongly outgrew servers and SW. ISG made $2.2 billion in the quarter and $9.68 billion in the full year, up 37 percent, with the component server, storage and SW contributions not revealed.

…

Liqid has announced the successful delivery and acceptance of the largest composable supercomputers by the US Department of Defense (DoD). Liqid served as the prime contractor for the $32 million contract, delivering two systems to the Army Research Laboratory DoD Supercomputing Resource Center (ARL DSRC). These systems boast the largest composable GPU pools worldwide, with 360 Nvidia A100 GPUs.

…

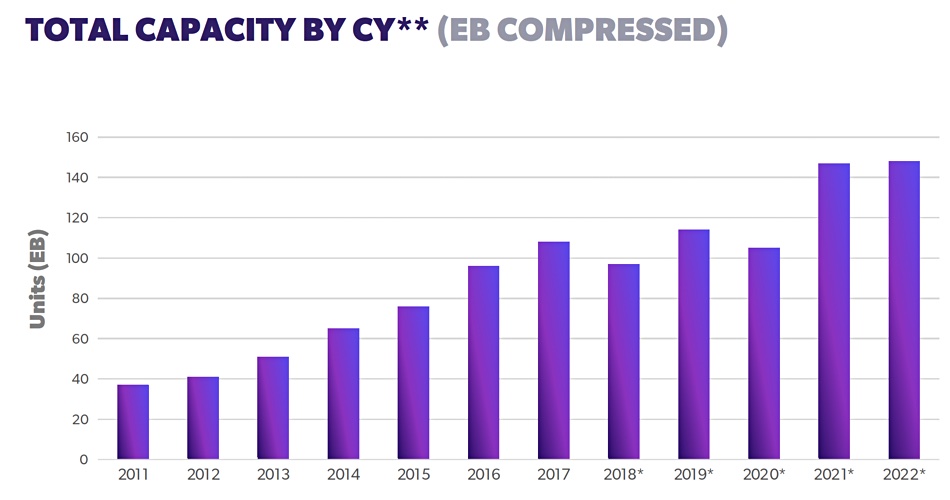

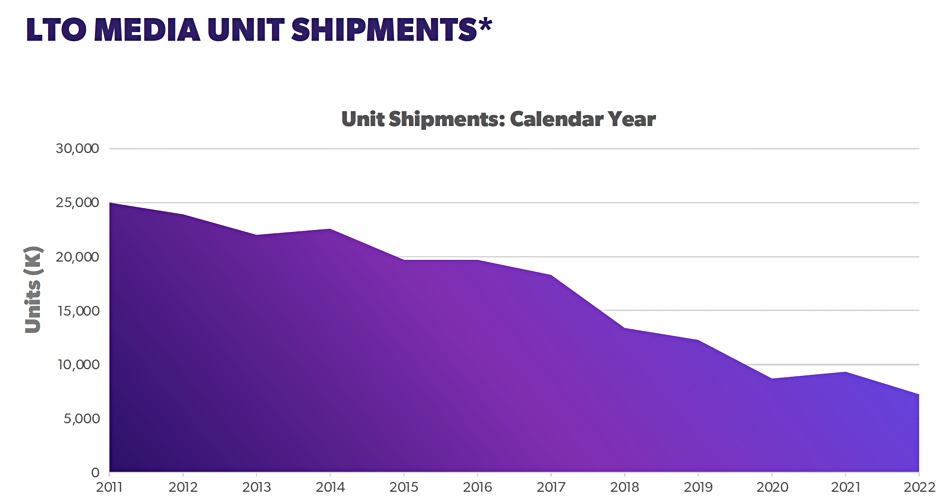

The LTO organization reports that a record 148.3EB of total compressed LTO tape capacity shipped in 2022, up a barely noticeable 0.5 percent over 2021. LTO says it’s a strong result. LTO-9 technology (45TB compressed) capacity shipments are demonstrating the most rapid capacity adoption rate since LTO-5. Tape media unit shipments dropped, continuing a long-term trend.

There are no numbers for tape media shipments but it looks like a rough 22 percent drop from 9 million cartridges in 2021 to some 7 million cartridges in 2022. On that basis, with a 22 percent unit shipment drop and a mere 0.5 percent capacity increase, we can say the tape market came close to declining in 2022 compared to 2021.
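
The arithmetic behind that reading can be sketched as follows. The cartridge counts are the rough estimates above, not reported figures, and the 2021 capacity is back-calculated from the 0.5 percent growth number, so the per-cartridge averages are illustrative only. Decimal units (1EB = 1,000,000TB) are assumed.

```python
# Rough LTO shipment arithmetic. Cartridge counts are the article's
# estimates, not figures reported by the LTO organization.
TB_PER_EB = 1_000_000  # assumption: decimal units

units_2021 = 9_000_000       # estimated cartridges shipped in 2021
units_2022 = 7_000_000       # estimated cartridges shipped in 2022
capacity_2022_eb = 148.3     # reported compressed capacity shipped in 2022
capacity_growth_pct = 0.5    # reported growth over 2021


def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


# 2021 capacity is derived from the growth figure, not reported directly here.
capacity_2021_eb = capacity_2022_eb / (1 + capacity_growth_pct / 100)

unit_change_pct = pct_change(units_2021, units_2022)
avg_tb_2021 = capacity_2021_eb * TB_PER_EB / units_2021
avg_tb_2022 = capacity_2022_eb * TB_PER_EB / units_2022

print(f"Unit shipment change: {unit_change_pct:.1f}%")         # -22.2%
print(f"Avg compressed TB per cartridge 2021: {avg_tb_2021:.1f}")  # 16.4
print(f"Avg compressed TB per cartridge 2022: {avg_tb_2022:.1f}")  # 21.2
```

On these estimates, fewer cartridges carried slightly more total capacity because the average compressed capacity per cartridge rose.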

…

TechCrunch reports that Microsoft has launched Fabric, an end-to-end data and analytics service (not to be confused with Azure Service Fabric). It centers around Microsoft’s OneLake data lake, but can also pull in data from Amazon S3 and (soon) Google Cloud Platform. It includes integration tools, a Spark-based data engineering platform, a real-time analytics platform, and, thanks to the newly improved Power BI, an easy-to-use visualization and AI-based analytics tool.

…

MSP data protector N-able is collaborating with the US Joint Cyber Defense Collaborative (JCDC) to help create a more secure global ecosystem and reduce security risk for MSPs and their customers.

…

Resilio will soon be launching a private beta for a new scale-out file transfer technology that it says will dramatically improve the speed of file transfers for data-intensive companies. It says internal Resilio Connect tests, using AWS and Azure, are regularly exceeding 100Gbps. Cloud scenarios tested include transferring a 1TB data set between Microsoft Azure regions in 90 seconds and transferring a 500GB data set in Google Cloud from London to Australia in 50 seconds.

Its automated scale-out technology features horizontal scaling with linear performance acceleration across multiple nodes in a cluster, collectively pooling Resilio agents to transfer and sync unstructured data in parallel. If an organization has 10 agents, each with a 10Gbps connection, files will transfer at an aggregate 100Gbps. And through custom WAN optimization, Resilio overcomes latency due to distance.
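
As a back-of-the-envelope check, the sketch below works out the ideal aggregate bandwidth under perfectly linear scaling and the average throughput implied by the two cloud scenarios. It assumes decimal units (1TB = 10^12 bytes) and averages over the whole transfer; the function names are illustrative and have nothing to do with Resilio’s software.

```python
# Throughput arithmetic for the quoted Resilio figures.
# Assumptions: decimal units, perfectly linear scaling, whole-transfer averages.
GB = 1e9   # bytes
TB = 1e12  # bytes


def aggregate_gbps(agents: int, per_agent_gbps: float) -> float:
    """Ideal aggregate bandwidth if every agent contributes its full link."""
    return agents * per_agent_gbps


def effective_gbps(size_bytes: float, seconds: float) -> float:
    """Average throughput in Gbps for moving size_bytes in the given time."""
    return size_bytes * 8 / seconds / 1e9


print(aggregate_gbps(10, 10))                    # 100
print(round(effective_gbps(1 * TB, 90), 1))      # 88.9 (1TB in 90 seconds)
print(round(effective_gbps(500 * GB, 50), 1))    # 80.0 (500GB in 50 seconds)
```

Averaged over the full transfer, the two quoted scenarios work out to roughly 89Gbps and 80Gbps; the announcement does not say which tests the 100Gbps-plus figure refers to.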

Companies interested in participating in Resilio’s closed beta may sign up here. A technical blog post with additional details is available here.

…

Data protector Rubrik has appointed Toby Keech as VP for UK and Ireland. He joins from Zscaler, where he headed up UK&I and MEA business units.

…

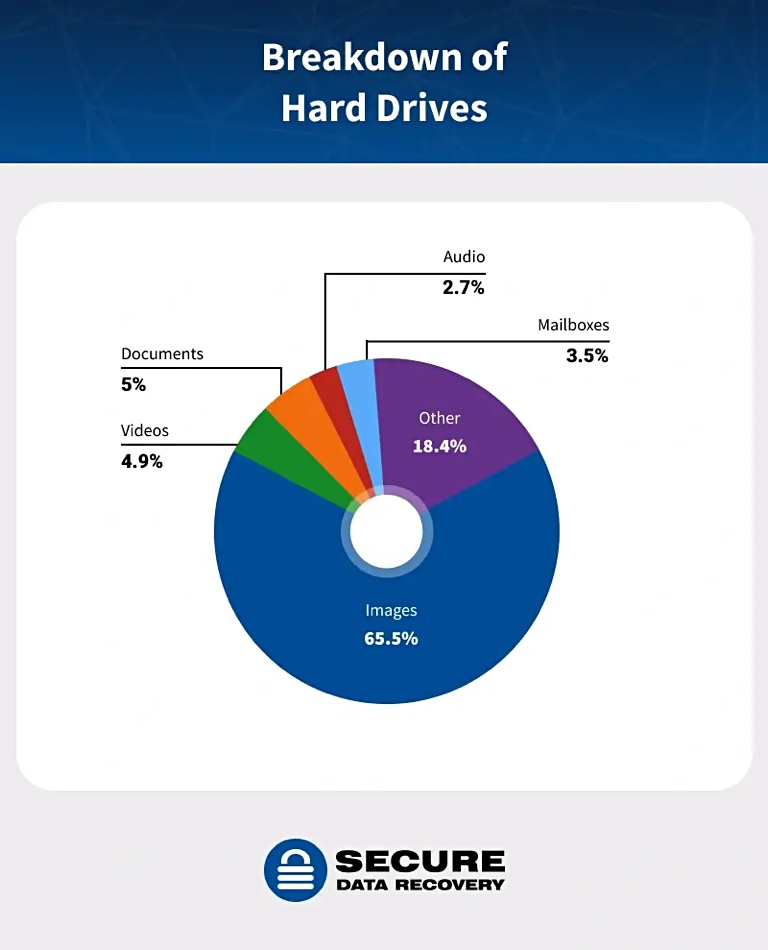

Secure Data Recovery purchased 100 discarded drives (for less than $100 total) to see what it could recover. Its engineers recovered data from 35 hard drives without repair (they found 31 hard drives in the sample were either damaged or encrypted). In total, the 35 unsanitized hard drives contained 5,786,417 files. All devices were listed as refurbished, used, or for parts. The release dates of the hard drives ranged from 1994 to 2022.
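
A quick tally of those numbers, as a sketch using only the figures quoted above. The per-drive average is a simple mean and says nothing about how the files were actually distributed across drives.

```python
# Tally of the Secure Data Recovery sample, using the quoted figures only.
purchased = 100
recovered = 35               # drives that yielded data without repair
damaged_or_encrypted = 31
files_recovered = 5_786_417

# Drives not covered by either category quoted above.
unaccounted = purchased - recovered - damaged_or_encrypted
recovered_share_pct = recovered * 100 / purchased
avg_files_per_drive = files_recovered / recovered

print(unaccounted)                  # 34
print(recovered_share_pct)          # 35.0
print(round(avg_files_per_drive))   # 165326
```

That leaves 34 drives outside the two quoted categories, and an average of about 165,000 files on each drive that yielded data.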

…

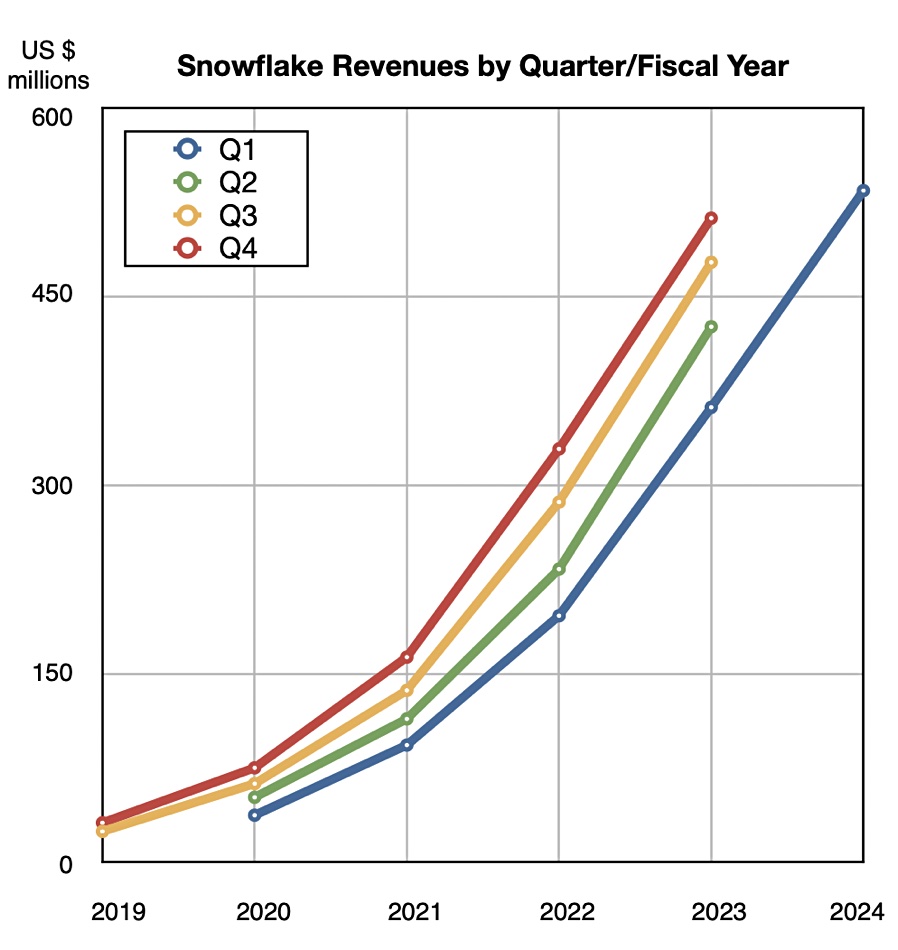

Snowflake revenue for Q1 fiscal 2024 was $623.6 million, representing 48 percent year-over-year growth. Product revenue for the quarter was $590.1 million, representing 50 percent year-over-year growth. The company now has 373 customers with trailing 12-month product revenue greater than $1 million and 590 Forbes Global 2000 customers. Net revenue retention rate was 151 percent as of April 30, 2023. Snowflake is acquiring Neeva, a search company that leverages generative AI and other innovations to allow users to query and discover data in new ways.

…

Swissbit Device Manager (SBDM) is the latest edition of Swissbit’s software tool for storage devices. It lets customers initiate firmware updates and gives them insight into the lifecycle status of Swissbit storage devices. It supports customers who are in the design-in stages, giving them flexibility for extensive testing and customization. A user-friendly app presents all the captured data and serves as a main interface for all Swissbit storage products. The tool is available as a free download from the Swissbit website for both Windows and Linux.

…

According to new data in the Veeam 2023 Ransomware Trends Report, one in seven organizations will see almost all (>80 percent) data affected as a result of a ransomware attack – pointing to a significant gap in protection. It says attackers almost always (93+ percent) target backups during cyber attacks and are successful in debilitating their victims’ ability to recover in 75 percent of those events, reinforcing the criticality of immutability and air gapping to ensure backup repositories are protected.