HPC parallel scale-out file system software supplier Panasas has decided that full scale adoption of the public cloud, with its PanFS software ported there, is not needed by its customers. The cloud can be a destination for backed up PanFS-held data or a cache to be fed – but that is all.

Update: GPUDirect clarification points added. 3 March 2023.

An A3 TechLive audience of analysts and hacks (reporters) was told by Panasas’s Jeff Whitaker, VP for product management and marketing, that the AI use case has become as important and compute-intensive as HPC, and is seen in the cloud, but: “We see smaller datasets in the cloud – 50TB or less. We’re not seeing large AI HPC workloads going to cloud.”

Data, he says, has gravity, and compute needs to be where the data is. That means, for Panasas, HPC datacenters such as the one at Rutherford Appleton Labs in the UK, one of its largest customers. Also, in its HPC workload area, compute, storage, networking hardware and software have to be closely integrated. That is not so feasible in the public cloud where any supplier’s software executes on the cloud vendor’s instances.

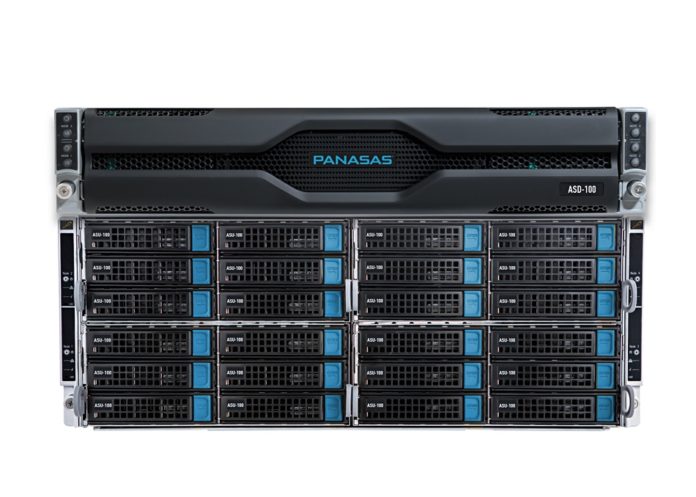

He says Panasas data sets need to be shared between HPC and AI/ML, and the Panasas ActiveStor/PanFS systems need to be able to serve data to both workloads. Its latest ActiveStor Ultra hardware can store metadata in fast NVMe SSDs, small files in slower SATA SSDs, and large files on disk drives, which can stream them out at a high rate – supporting both small file-centric AI and large file-centric HPC.

This is tiering by file type and not – as most others do it – tiering by file age and access likelihood.
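To make the distinction concrete, here is a minimal sketch of placement by file type, written in Python. It is not Panasas code: the `Tier`, `Item`, and `place` names are hypothetical, and the 1 MiB small-file cutoff is an assumption chosen purely for illustration, since the article gives no threshold.

```python
from dataclasses import dataclass
from enum import Enum


class Tier(Enum):
    """The three media classes described above."""
    NVME_SSD = "nvme_ssd"   # metadata
    SATA_SSD = "sata_ssd"   # small files
    HDD = "hdd"             # large, streamed files


# Hypothetical cutoff between "small" and "large" files (assumption).
SMALL_FILE_LIMIT = 1024 * 1024  # 1 MiB


@dataclass(frozen=True)
class Item:
    """Something to be stored: either metadata or file data of a given size."""
    name: str
    size_bytes: int
    is_metadata: bool = False


def place(item: Item, small_file_limit: int = SMALL_FILE_LIMIT) -> Tier:
    """Pick a tier from what the item is and how big it is.

    Note what is absent: no last-access time and no age. The decision
    depends only on the item's type and size.
    """
    if item.is_metadata:
        return Tier.NVME_SSD
    if item.size_bytes < small_file_limit:
        return Tier.SATA_SSD
    return Tier.HDD


if __name__ == "__main__":
    samples = [
        Item("inode-table", 4096, is_metadata=True),
        Item("training-image.jpg", 200 * 1024),
        Item("simulation-checkpoint.h5", 50 * 1024**3),
    ]
    for sample in samples:
        print(f"{sample.name}: {place(sample).value}")
```

An age-based tiering scheme would instead take a last-access timestamp as input and demote data as it cools; in this sketch a file's placement never changes unless its size does.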

Panasas can support cloud AI by being able to move small chunks of data, up to 2TB or so, from on-premises PanFS systems to load cloud file caches in, for example, AWS and Azure.

Panasas also supports the movement of older HPC datasets to the cloud for backup purposes. Its PanMove tool allows end-users to seamlessly copy, move, and sync data between all Panasas ActiveStor platforms and AWS, Azure, and Google Cloud object storage.

Whitaker said Panasas saw on-premises AI workloads as important but it has no plans in place to support Nvidia’s GPUDirect, which feeds file data directly to the GPUs that run many AI applications. This is in spite of GPUDirect support being in place for competing suppliers such as WekaIO, Pure Storage and IBM (Spectrum Scale). He suggests they are not actually selling much GPUDirect-supporting product.

Wanting to clarify this point, Whitaker told us: “We are very much selling into GPU environments, but our customers aren’t asking for GPUDirect. The performance they are achieving from our DirectFlow protocol is giving them a significant boost over standard file protocols without requiring an RDMA-based protocol such as GPUDirect. Due to this, we are meeting their performance and scale demands. GPUDirect is absolutely in-plan for Panasas. We just are finishing other engineering projects before completing GPUDirect.”

If Panasas needs to move GPUDirect from a roadmap possibility to a delivered capability, it could do so in less than 12 months. What Panasas intends to deliver later this year includes S3 primary support in its systems, Kubernetes support, and uploads from its on-prem systems to public cloud file caches, for example to AWS’s file cache, with its pre-warming capability and other functions that keep data access rates high.

But there is no intent to feed data to the file caches from backed-up Panasas data in cloud object stores. Our intuition, nothing more, says this restriction will not hold, and that Panasas will have to have a closer relationship with the public cloud vendors.

A final point. Currently Panasas ActiveStor hardware is made by Supermicro. Whitaker said Panasas is talking about hardware system manufacturing possibilities with large solution vendors. We suggested Lenovo but he wasn’t keen on that idea, mentioning Lenovo’s IBM (Spectrum Scale) connection. That leaves, we think, Dell and HPE. But surely not HPE with its Cray supercomputer stuff coming down market. So, we suggest, a Dell-Panasas relationship might be on the cards.

Whichever large solution vendor might be chosen, it could provide an extra channel to market for Panasas’s software. It could also help in large deals where the customer has an existing server supplier and would like to use that supplier’s kit in its HPC workloads as well.