Lakehouse shipper Databricks has updated its open-source Dolly ChatGPT-like large language model to make its AI facilities available for business applications without needing massive GPU resources or costly API use.

ChatGPT is a chatbot launched by OpenAI which is based on a machine learning Large Language Model (LLM) and generates sensible-seeming text in response to user requests. This has generated an extraordinary wave of interest despite the fact that it can generate wrong answers and make up sources for statements it wants to use. ChatGPT was trained using a large population of GPUs and input parameters; the GPT-3 (Generative Pretrained Transformer 3) model on which it is based used 175 billion parameters.

Databricks sidestepped these limitations to create its Dolly chatbot, a 12 billion parameter language model based on the EleutherAI pythia model family. Its creators write on a company blog: “This means that any organization can create, own, and customize powerful LLMs that can talk to people, without paying for API access or sharing data with third parties.”

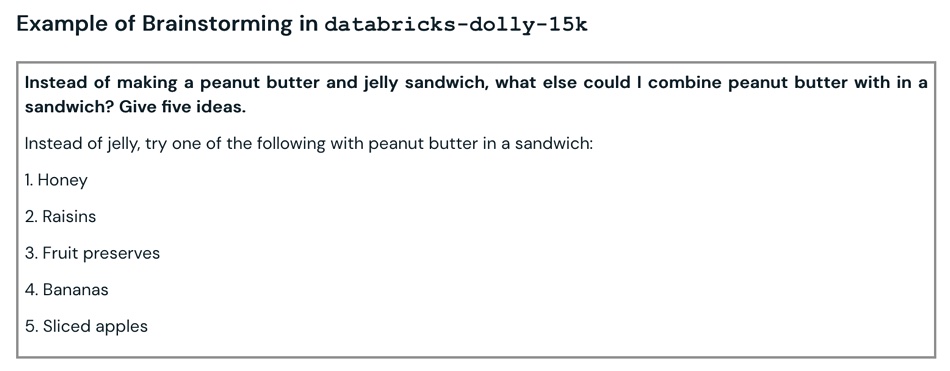

The Databricks team did this in two stages. In late March they released Dolly v1.0, an LLM trained using a 6 billion parameter model from Eleuther.AI. This was modified “ever so slightly to elicit instruction following capabilities such as brainstorming and text generation not present in the original model, using data from Alpaca.”

They say Dolly v1.0 shows how “anyone can take a dated off-the-shelf open source large language model (LLM) and give it magical ChatGPT-like instruction following ability by training it in 30 minutes on one machine, using high-quality training data.”

They open sourced the code for Dolly and showed how it can be re-created on Databricks, saying: “We believe models like Dolly will help democratize LLMs, transforming them from something very few companies can afford into a commodity every company can own and customize to improve their products.”

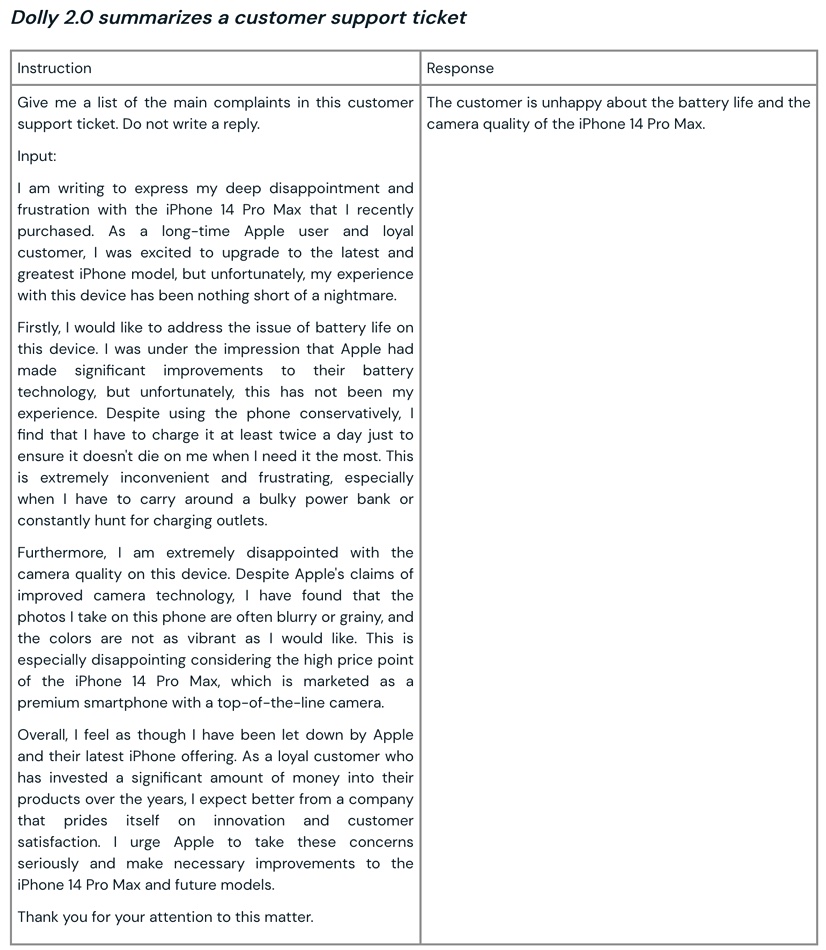

Now Dolly 2.0 has a larger model of 12 billion parameters – “based on the EleutherAI pythia model family and fine-tuned exclusively on a new, high-quality human generated instruction following dataset, crowdsourced among Databricks employees.” Databricks is “open-sourcing the entirety of Dolly 2.0, including the training code, the dataset, and the model weights, all suitable for commercial use.”

The dataset, databricks-dolly-15k, contains 15,000 prompt/response pairs designed for LLM instruction tuning, “authored by more than 5,000 Databricks employees during March and April of 2023.”

The OpenAI API dataset has terms of service that prevent users creating a model that competes with OpenAI. Databricks crowdsourced its own 15,000 prompt/response pair dataset to get around this additional limitation. Its dataset has been “generated by professionals, is high quality, and contains long answers to most tasks.” In contrast: “Many of the instruction tuning datasets released in recent months contain synthesized data, which often contains hallucinations and factual errors.”

To download Dolly 2.0 model weights, visit the Databricks Hugging Face page and visit the Dolly repo on databricks-labs to download the databricks-dolly-15k dataset. And join a Databricks webinar to discover how you can harness LLMs for your own organization.

+ Comment

A capability of Dolly-like LLMs is that they can write code, specifically SQL code. That could lead to non-SQL specialists being able to set up and run queries on the Databricks lakehouse without knowing any SQL at all.

This leads on to two thoughts: one, SQL devs could use it to become more productive and, two, you don’t need so many SQL devs. Dolly could reduce the need for SQL programmers in the Databricks world.

Extend that thought to Snowflake and all the other data warehouse environments and SQL skills could become a lot less valuable in future.