CXL – Compute Express Link – is an extension of the PCIe bus outside a server’s chassis, originally based on the PCIe 5.0 standard. There are four versions:

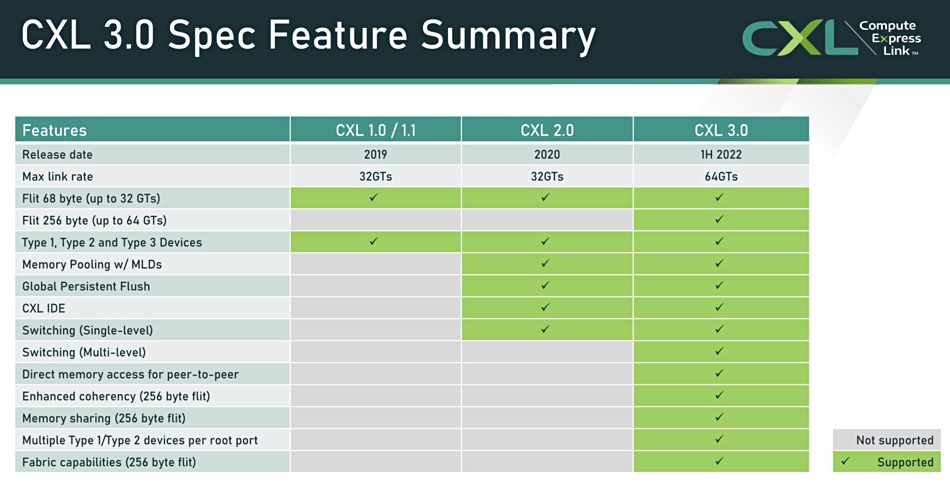

- CXL v1, released in March 2019, enables server CPUs to access shared memory on local accelerator devices with a cache-coherent protocol. The use case is memory expansion.

- CXL v1.1 enables interoperability between the host processor and an attached CXL memory device. The use case is still memory expansion.

- CXL 2.0 provides for memory pooling between a CXL memory device and more than one accessing host. It enables cache coherency between a server CPU host and three device types.

- CXL 3.0 uses PCIe 6.0 and doubles per-lane bandwidth to 64 gigatransfers/sec (GT/sec). It supports multi-level switching and enables more memory access modes than CXL 2.0, providing greater sharing flexibility and more memory sharing topologies.
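
To put the per-lane figure in context, here is a rough back-of-the-envelope sketch in Python. It assumes a x16 link (a link width chosen purely for illustration), treats each transfer as one bit per lane, and ignores encoding, error-correction and protocol overhead, so the results are raw upper bounds rather than achievable throughput. The function name is hypothetical.

```python
def raw_link_bandwidth_gbytes(gt_per_sec: float, lanes: int) -> float:
    """Raw one-direction bandwidth in GB/s.

    Assumes one bit per transfer per lane and no encoding or
    protocol overhead, so this is an upper bound only.
    """
    return gt_per_sec * lanes / 8  # 8 bits per byte


# Assumed x16 link width for both generations (illustrative only).
pcie5_x16 = raw_link_bandwidth_gbytes(32, 16)  # PCIe 5.0: 32 GT/sec per lane
pcie6_x16 = raw_link_bandwidth_gbytes(64, 16)  # PCIe 6.0: 64 GT/sec per lane

print(pcie5_x16, pcie6_x16)  # prints "64.0 128.0"
```

Under these assumptions, the doubling of the per-lane rate carries straight through to the raw link bandwidth.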

There are three device types in the CXL 2.0 standard:

- Type 1 devices are I/O accelerators with caches, such as SmartNICs. They use the CXL.io and CXL.cache protocols to communicate with the host processor’s DDR memory.

- Type 2 devices are accelerators fitted with their own DDR or HBM (High Bandwidth Memory). They use the CXL.io, CXL.cache, and CXL.memory protocols to share host memory with the accelerator and accelerator memory with the host.

- Type 3 devices are just memory expander buffers or pools and use the CXL.io and CXL.memory protocols to communicate with hosts.
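
The relationship between device types and protocols listed above can be captured in a small lookup table. The following Python sketch is only an illustration of that mapping; the names `Protocol`, `DEVICE_PROTOCOLS` and `classify` are hypothetical and do not come from the CXL specification or any real library.

```python
from enum import Enum


class Protocol(Enum):
    IO = "CXL.io"
    CACHE = "CXL.cache"
    MEMORY = "CXL.memory"


# Device type -> set of protocols it uses, as described in the list above.
DEVICE_PROTOCOLS = {
    1: frozenset({Protocol.IO, Protocol.CACHE}),                   # caching I/O accelerators
    2: frozenset({Protocol.IO, Protocol.CACHE, Protocol.MEMORY}),  # accelerators with own memory
    3: frozenset({Protocol.IO, Protocol.MEMORY}),                  # memory expanders and pools
}


def classify(protocols) -> int:
    """Return the device type whose protocol set matches exactly."""
    wanted = frozenset(protocols)
    for device_type, supported in DEVICE_PROTOCOLS.items():
        if supported == wanted:
            return device_type
    raise ValueError("no CXL device type uses exactly these protocols")


print(classify([Protocol.IO, Protocol.MEMORY]))  # prints "3"
```

All three types use CXL.io; what distinguishes them is whether they add CXL.cache, CXL.memory, or both.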

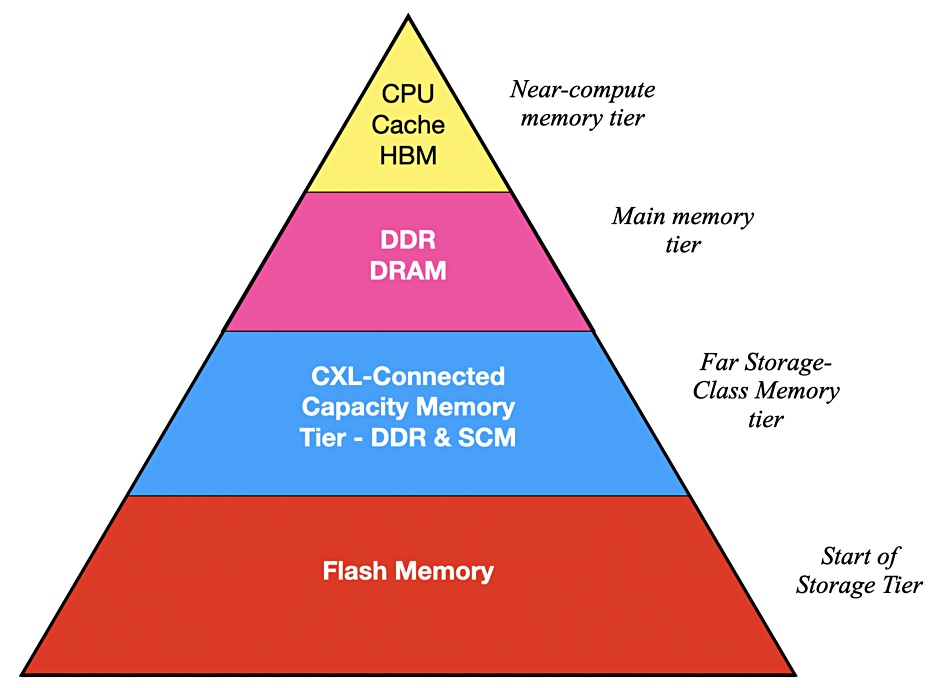

A CXL 2.0 host may have its own directly connected DRAM as well as the ability to access external DRAM across the CXL 2.0 link. Access to such external DRAM will be slower than local DRAM access, by a matter of nanoseconds, and system software will be needed to bridge this gap.

A CXL fabric controller can talk to Single Logical Devices (SLDs) or Multi-Logical Devices (MLDs). In a CXL 2.0 domain multiple hosts can be connected to a single CXL device, and it can be useful to divide the device’s resources among multiple hosts. A supporting MLD can be virtually separated into up to 16 logical devices (LDs), each with its own LD-ID.
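
As a rough illustration of how an MLD's capacity might be carved up among hosts, here is a minimal Python sketch. It is a toy bookkeeping model, not how a fabric controller is actually implemented: the class name, the GiB granularity, the host labels and the choice of numbering LD-IDs from 0 to 15 are all assumptions made for the example. Only the limit of 16 logical devices comes from the text above.

```python
from dataclasses import dataclass, field

MAX_LDS_PER_MLD = 16  # limit stated in the text


@dataclass
class MultiLogicalDevice:
    capacity_gib: int
    # ld_id -> (host name, size in GiB); hypothetical bookkeeping structure
    assignments: dict = field(default_factory=dict)

    def allocate(self, host: str, size_gib: int) -> int:
        """Create one logical device for a host and return its LD-ID."""
        if len(self.assignments) >= MAX_LDS_PER_MLD:
            raise RuntimeError("MLD already exposes 16 logical devices")
        used = sum(size for _, size in self.assignments.values())
        if size_gib <= 0 or used + size_gib > self.capacity_gib:
            raise ValueError("not enough free capacity")
        # Assumption: LD-IDs are handed out lowest-free-first from 0 to 15.
        ld_id = next(i for i in range(MAX_LDS_PER_MLD) if i not in self.assignments)
        self.assignments[ld_id] = (host, size_gib)
        return ld_id


mld = MultiLogicalDevice(capacity_gib=512)
first = mld.allocate("host-a", 128)
second = mld.allocate("host-b", 64)
print(first, second)  # prints "0 1"
```

Each call hands one host its own logical device, and a seventeenth request is refused regardless of how much capacity remains.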