At Flash Memory Summit 2023, Frore Systems showed off a 64TB U.2 SSD cooled by its AirJet Mini, which removes 40W of heat without bulky heat sinks, fans or liquid cooling.

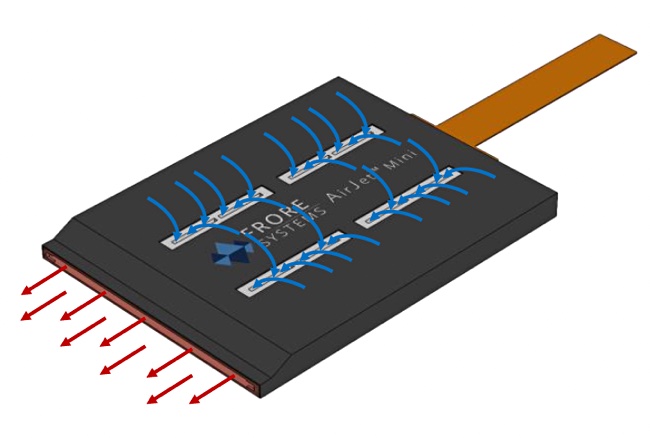

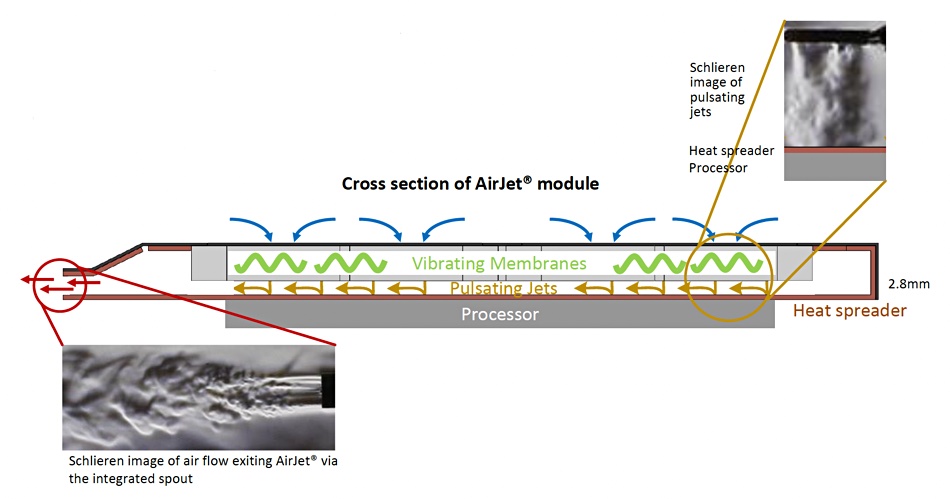

AirJet Mini is a self-contained, solid-state, active heat sink module that's silent, thin and light. It measures 2.8mm x 27.5mm x 41.5mm and weighs 11g. It removes 5.2W of heat at a 21dBA noise level while consuming a maximum of 1W of power. AirJet Mini generates 1,750 Pascals of back pressure, which Frore claims is 10x higher than a fan's. That enables thin, dust-proof devices that can run faster because excess heat is carried away.

Think of the AirJet Mini as a thin wafer or slab that sits on top of a processor or SSD. It cools the device by drawing in air, passing it over a heat spreader in physical contact with the device, and then ejecting it through another outlet. Alternatively, AirJet Mini can be connected to its target via a copper heat exchanger.

Inside the AirJet Mini are tiny membranes that vibrate ultrasonically, generating the necessary airflow without a fan. Air enters through vents in the top surface and is moved as pulsating jets through the device and out through a side-mounted spout.

AirJet is scalable: more heat can be removed by adding more chips. Each AirJet Mini removes roughly 5W, so two chips remove about 10W, three about 15W, and so on. The more powerful AirJet Pro removes 10.5W of heat at 24dBA while consuming a maximum of 1.75W of power.
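The linear scaling described above lends itself to a quick sizing calculation. What follows is a back-of-envelope sketch, not a Frore tool: it assumes per-chip ratings simply add, uses the 5.2W Mini and 10.5W Pro figures quoted in this article (rather than the rounded 5W), and ignores any derating for chip spacing, ambient temperature or airflow. The function and constant names are hypothetical.

```python
import math

# Nominal per-module heat-removal ratings quoted in the article, in watts.
# Assumption: ratings simply add when modules are combined (no derating).
AIRJET_MINI_W = 5.2   # AirJet Mini: 21dBA, max 1W power draw
AIRJET_PRO_W = 10.5   # AirJet Pro: 24dBA, max 1.75W power draw


def modules_needed(heat_load_w: float, per_module_w: float) -> int:
    """Return the smallest module count whose combined rating covers heat_load_w."""
    if per_module_w <= 0:
        raise ValueError("per_module_w must be positive")
    if heat_load_w <= 0:
        return 0
    # Round up: a fractional module is not an option.
    return math.ceil(heat_load_w / per_module_w)


def combined_capacity_w(count: int, per_module_w: float) -> float:
    """Return the total nominal heat removal of `count` identical modules."""
    return count * per_module_w


if __name__ == "__main__":
    for load_w in (5, 10, 15, 40):
        mini = modules_needed(load_w, AIRJET_MINI_W)
        pro = modules_needed(load_w, AIRJET_PRO_W)
        print(f"{load_w}W load: {mini} x Mini or {pro} x Pro")
```

Under these assumptions a 40W load works out to eight Minis or four Pros; real designs would need Frore's datasheet figures and thermal testing.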

AirJet can cool processors in thin-and-light notebooks as well as SSDs, letting them run faster without overheating. Running faster produces more heat, which the AirJet Mini removes.

OWC built a portable SSD-based storage device in a 3.5-inch carrier as a demonstration and exhibited it at FMS 2023. Inside the Mercury Pro casing are eight 8TB M.2 2280 SSDs, each with an attached AirJet Mini. Sequential write bandwidth is between 2.2GBps and 2.6GBps. We don't know how it would perform without the Frore cooling slabs, though.
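A rough consistency check, assuming the 40W figure at the top of this article refers to this eight-drive OWC unit and that each drive's AirJet Mini delivers its full quoted 5.2W rating at no more than 1W of power:

$$
\begin{aligned}
8 \times 8\,\text{TB} &= 64\,\text{TB} \\
8 \times 5.2\,\text{W} &= 41.6\,\text{W} \approx 40\,\text{W} \\
8 \times 1\,\text{W} &= 8\,\text{W} \quad \text{(maximum combined cooling power draw)}
\end{aligned}
$$

On those assumptions, the eight modules together have just enough nominal capacity for the quoted 40W.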

Speed may not be the only benefit, however: a similar-sized Mercury Pro U.2 Dual has an audible fan, whereas Frore's cooling does away with the noise and needs less electricity.

We could see notebook, gaming system and portable SSD builders using Frore's membrane cooling technology to make their devices more powerful without noisy fans, bulky heat sinks or liquid cooling.

OWC has not committed to making this a product. Get a Frore AirJet Mini datasheet here.