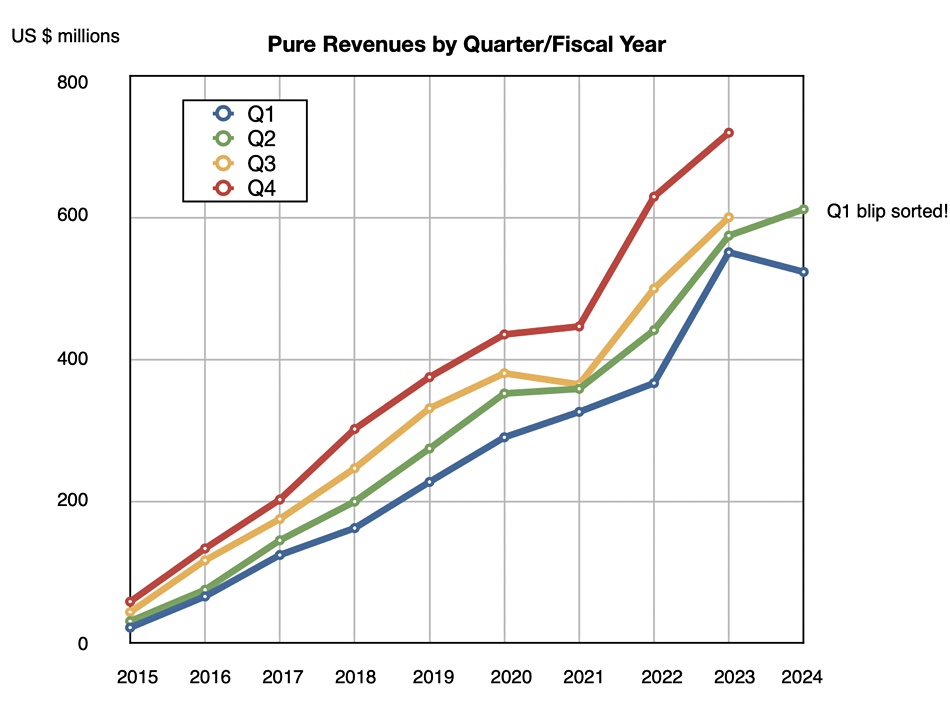

In spite of a revenue decline in the first quarter of fiscal 2024, Pure has bounced back with positive growth in its second quarter.

Update. Dell storage decline corrected to 3 percent from 11 percent (which was ISG decline). 5 Sep 2023.

The company reported revenues of $688.7 million for the quarter ended August 6, a rise of 6.5 percent year-over-year that surpassed projections. It posted a loss of $7.1 million, compared with a $10.9 million profit in the same period a year ago.

CEO Charlie Giancarlo said: “We are pleased with our financial results this quarter. While the macro environment continued to be challenging, we outpaced our competitors and saw strong growth in our strategic investments, particularly in FlashBlade//S, FlashBlade//E and Evergreen//One… I have never been more confident in our long-term growth strategy or in our opportunity to lead this market.”

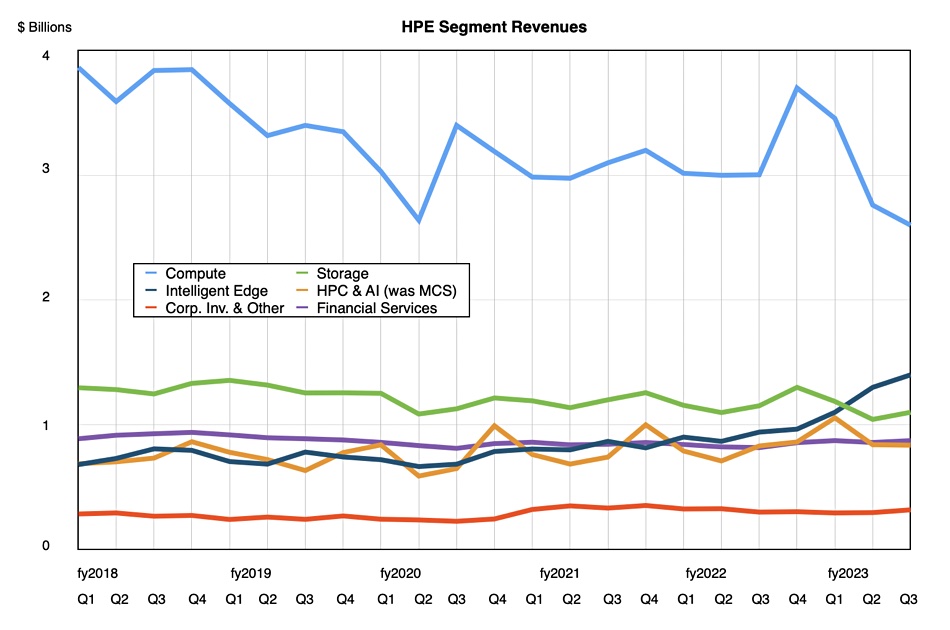

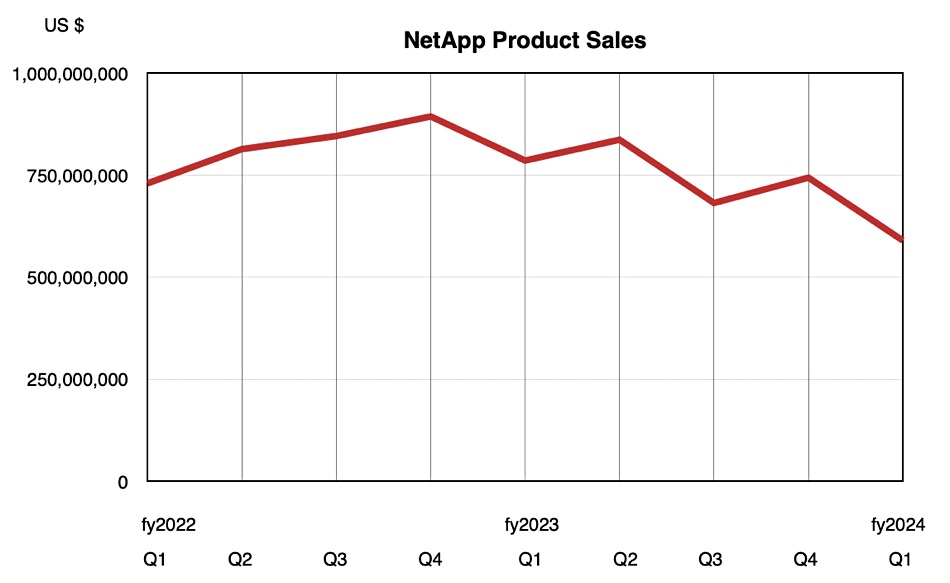

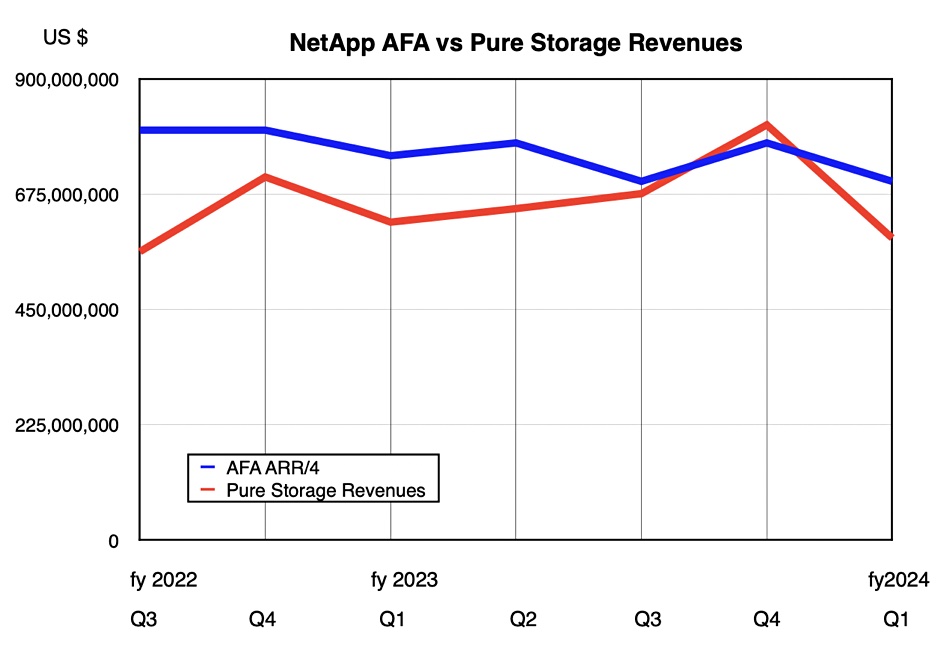

Indeed, when compared to its competitors, Pure exhibited strong performance. Dell’s storage results showed a 3 percent decrease to $4.2 billion, NetApp fell by 10 percent to $1.43 billion, and HPE experienced a 4.5 percent decline, settling at $1.1 billion.

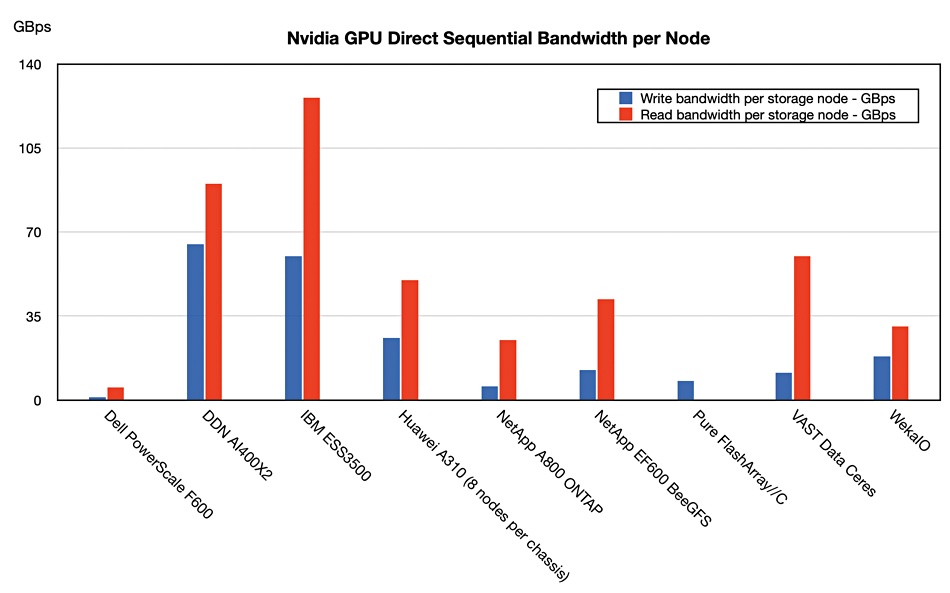

Q2 also saw record sales for Pure’s scale-out FlashBlade unified file+object products, including a significant deal (minimum $10 million) for its FlashBlade//S product designed for generative AI work. Sales of the QLC NAND-based FlashBlade//E have grown faster than those of any previous product launched by Pure.

Subscription services revenue grew 24 percent year-over-year to $288.9 million. Annual recurring revenue (ARR) reached $1.2 billion, a 27 percent increase. Sales of the Evergreen//One consumption-as-a-service subscription doubled year-over-year. The global customer base expanded by 325 this quarter, surpassing 12,000, and now includes 59 percent of the Fortune 500, up from 56 percent in the previous quarter. That implies roughly 15 additional Fortune 500 customers.
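The Fortune 500 figure can be checked with a few lines of arithmetic. The sketch below uses only the percentages quoted above; the function and variable names are illustrative. Because the published shares are rounded to whole percentages, the true count could differ by a few companies.

```python
# Rounded Fortune 500 shares quoted in the article (whole percentages).
FORTUNE_500_SIZE = 500
SHARE_NOW_PCT = 59
SHARE_PREV_PCT = 56


def implied_customers(share_pct: int, list_size: int = FORTUNE_500_SIZE) -> int:
    """Approximate number of list members implied by a rounded percentage."""
    return round(share_pct * list_size / 100)


customers_now = implied_customers(SHARE_NOW_PCT)
customers_prev = implied_customers(SHARE_PREV_PCT)
added_customers = customers_now - customers_prev

print(f"Implied Fortune 500 customers: {customers_prev} -> {customers_now}")
print(f"Implied additions this quarter: {added_customers}")
```

Each rounded percentage can hide up to half a point, or 2.5 companies, so the result is an estimate rather than a reported count.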

CFO Kevan Krysler said: “We were very pleased with record sales across our FlashBlade portfolio, and doubling sales of our Evergreen//One subscription offering this quarter. With our Purity software working directly with raw flash, we have established substantial differentiated advantages and business value for our customers, while at the same time expanding our margins.”

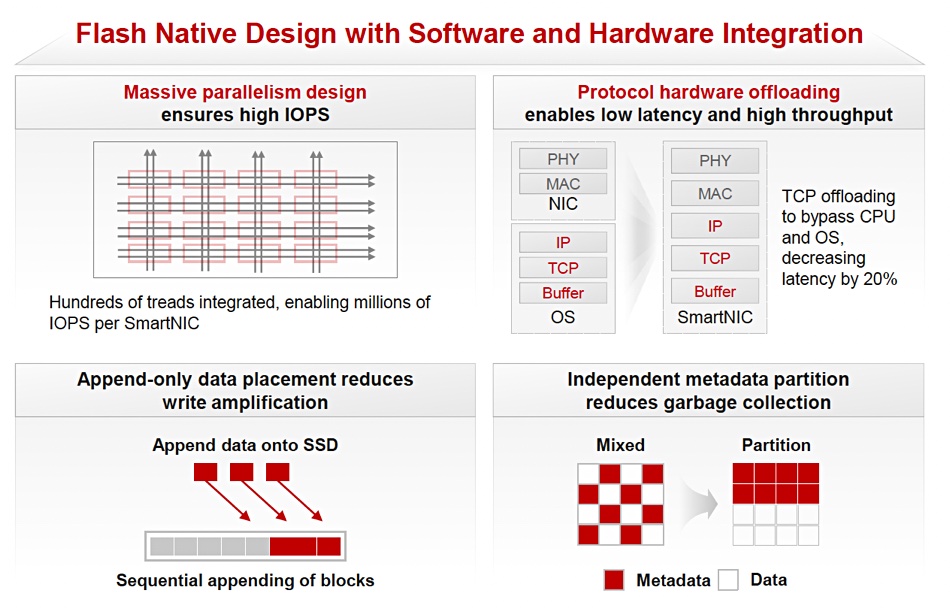

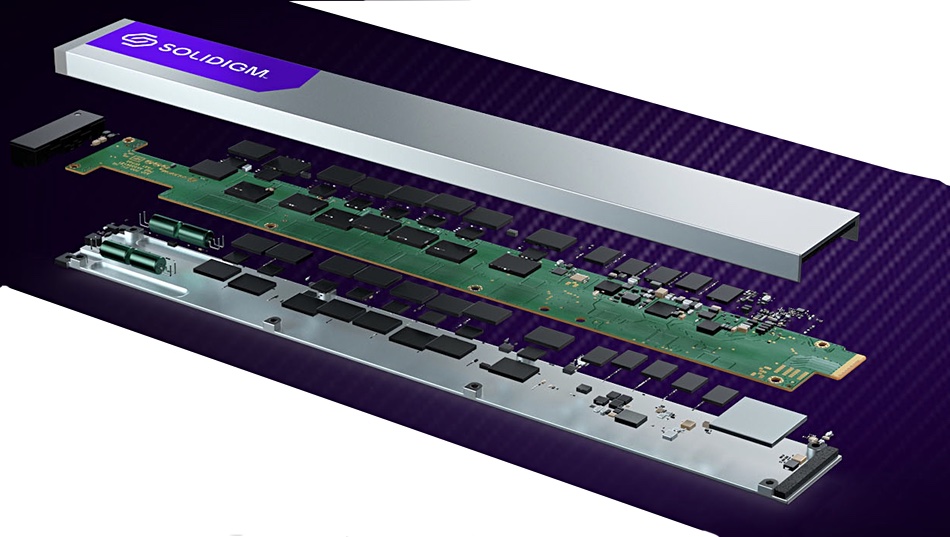

Pure’s competitors use off-the-shelf SSDs, in contrast to Pure’s proprietary direct flash module (DFM) drives. Krysler said: “The majority of the capacity we now ship is based on QLC (4 bits/cell) raw flash,” which should give Pure a pricing advantage over competitors using more expensive TLC (3 bits/cell) commercial SSDs.

“US revenue for Q2 was $495 million and International revenue was $194 million,” he added, implying that Pure has growth opportunities outside the US.
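To put the geographic split in proportion, here is a rough calculation from the two rounded figures Krysler gave. The variable names are illustrative, and the shares are only as precise as the rounded inputs.

```python
# Rounded Q2 revenue figures quoted by the CFO, in millions of US dollars.
us_revenue_musd = 495.0
intl_revenue_musd = 194.0

total_musd = us_revenue_musd + intl_revenue_musd
us_share = us_revenue_musd / total_musd
intl_share = intl_revenue_musd / total_musd

print(f"Total: ${total_musd:.0f} million")
print(f"US share: {us_share:.1%}")
print(f"International share: {intl_share:.1%}")
```

On these numbers, a little over seven-tenths of revenue came from the US, which is the basis for the reading that international markets offer room to grow.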

Pure’s enterprise business and Evergreen//One both surpassed expectations, contributing to the reversal of Q1’s revenue decline. According to Krysler: “That’s a testament to our field really adjusting to our customers’ buying behavior.”

Financial summary

- Gross margin: 70.7 percent vs 70.1 percent in Q1

- Operating cash flow: $101.6 million

- Remaining performance obligations: $1.89 billion, up 26 percent Y/Y

- Free cash flow: $46.5 million

- Total cash, cash equivalents & marketable securities: $1.2 billion

Regarding the Portworx Kubernetes storage business, Giancarlo said: “Portworx had a good quarter, so we’re very pleased with the progress overall of Portworx. I would say that the enterprise market for cloud native applications, for stateful cloud-native applications, has probably progressed a bit slower in the last year than we had expected early on.

“But our expectation is that 5 to 10 years from now, all applications will be designed in a cloud native with containers and Kubernetes. So we’re very confident about the future… We’re #1 in that space, and we expect that to continue.”

Disk dying

CTO Rob Lee reiterated Pure’s view on flash replacing disk drives in the earnings call: “Disk is – well, a dead technology spinning, so to speak.” He said Pure’s DFM gave it a competitive lead over SSD-using competitors such as Dell, HPE and NetApp. “We’ve got a three to five-year structural and sustained competitive advantage over, frankly, the rest of the field that I think is trapped on SSD technology.”

IBM FlashSystems use proprietary IBM flash drives and Pure may face stronger competition there.

Giancarlo added: “The last refuge for hard disks now is in the secondary and tertiary tier. And now we’re able to reach price parity with them at a procurement cost and yet have much lower total cost of ownership and be smaller and be more reliable.

“There’s no other markets that are going to hold revenue for hard disks that flash won’t penetrate. And what that means is just lesser revenue and therefore, lesser investment in ongoing development of hard disks. That’s also going to be a problem for the vendors. So it’s unfortunate. I don’t hold any malice. But similar to markets in the past, you’re just – when these transitions take place, CDs over vinyl or DVDs over VHS, there’s just no stopping progress.”

AI

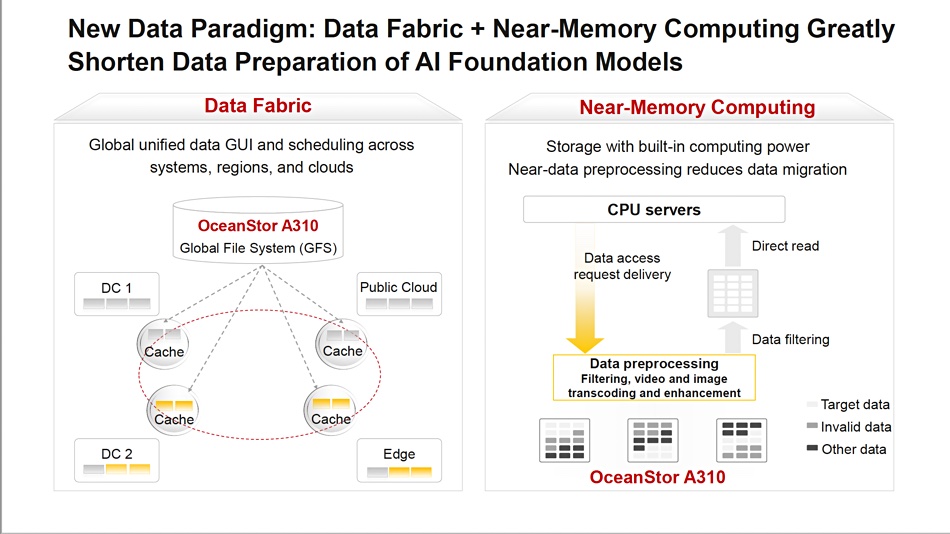

Pure has more than 100 customers using its products in the traditional AI and newer generative AI fields. Giancarlo said: “AI systems are typically greenfield. So we’re not generally replacing. What we are competing with are solely all-flash systems. Hard [disk drive] systems just can’t provide the kind of performance necessary for a sophisticated AI environment.”

Older datasets stored on disk have a growing need to be made accessible for AI processing and Pure hopes customers will move these datasets to its faster flash storage. Lee said: “That’s where we see a tremendous opportunity for us with especially in our FlashArray//C line.”

The outlook for next quarter is for revenues of $760 million, 12.4 percent higher than a year ago. Krysler said the guidance “assumes continued strong subscription revenue growth fueled by our Evergreen//One subscription services.”
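A growth percentage and a current figure together imply the year-ago base. The sketch below backs it out for the Q3 guidance and for the reported Q2 revenue, using only numbers quoted in this article. The helper name is illustrative, and the results are approximations derived from rounded growth rates, not reported prior-year figures.

```python
def implied_prior_year(current_musd: float, growth_pct: float) -> float:
    """Back out the year-ago figure from a current value and its Y/Y growth."""
    return current_musd / (1 + growth_pct / 100)


# Q3 guidance: $760 million, 12.4 percent higher than a year ago.
q3_prior_musd = implied_prior_year(760.0, 12.4)

# Q2 reported: $688.7 million, up 6.5 percent year-over-year.
q2_prior_musd = implied_prior_year(688.7, 6.5)

print(f"Implied year-ago Q3 revenue: ${q3_prior_musd:.1f} million")
print(f"Implied year-ago Q2 revenue: ${q2_prior_musd:.1f} million")
```

The guided growth rate is nearly double the 6.5 percent reported for Q2, which fits the company's claim that subscription growth is accelerating.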

Giancarlo added: “We’re expecting stabilization through the end of the year and hopefully an improvement towards the end of the year beginning of next.”