Interview: Here is the second part of our interview with Mainline Information Systems storage architect Rob Young, following part 1. We talked about proprietary vs standard SSD formats, high-speed cloud block storage, CXL, file vs block, single-tier vs multi-tier arrays, and more.

Blocks & Files: What advantages does proprietary flash hold versus commercial off the shelf?

Rob Young: Pure [Storage] demonstrates the advantages in its DirectFlash management of its custom modules, eliminating garbage collection and write amplification with zero over-provisioning. With zero over-provisioning you get what you paid for. VAST Data, with its similar scheme, works with cheap (in their words) COTS QLC drives. Their stellar algorithms, with wear-level tracking of cell history, are such a light touch that their terms of service will replace a worn-out QLC drive for 10 years. Likewise, we read that VAST is positioned to go from working with the 1,000 erase cycles of a QLC cell to the 500 erase cycles of PLC when PLC becomes a thing.

What is an advantage of custom design? The ability, like Pure, to create very large modules (75 TB currently) and stack a lot of data in 5 units of rack space. The drives are denser and more energy-efficient. IBM’s custom FCMs are very fast (low latency is a significant FCM custom advantage) and have onboard, transparent, zero-overhead compression. Custom compression at the module level provides less of an advantage these days: Intel incorporated QAT tech and compression is happening at the CPU now. In development time, QAT is a recent 2021 introduction. At one point, Intel had separate compression cards for storage OEMs. I don’t want to talk about that.

Let’s briefly consider what some are doing with QLC. Pure advertises 2 to 4 ms IO (read) response time with their QLC solution. In 2023 is that a transactional solution? No, not a great fit. This is a tier2 solution – backup targets, AI/ML, Windows shares, massive IO and bandwidth – a great fit for their target audience.

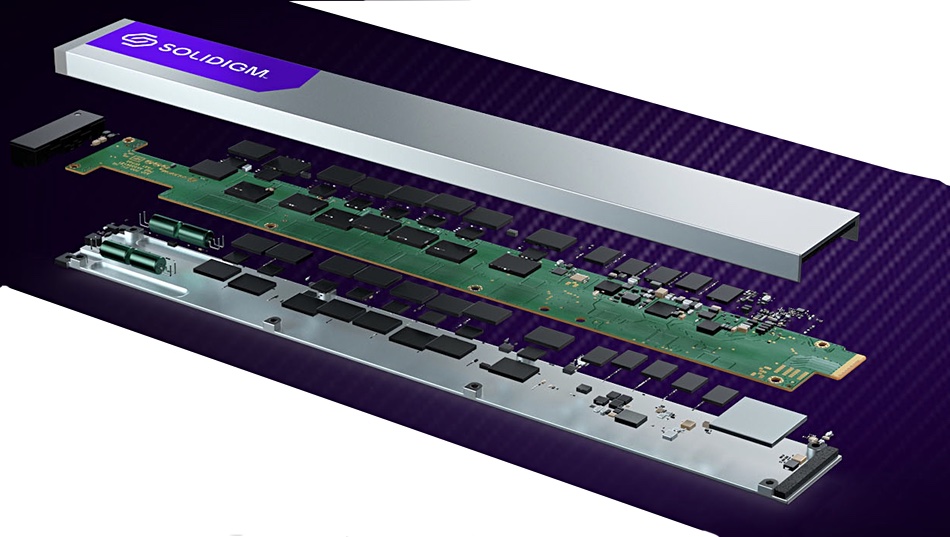

So, when we discuss some of these solutions, keep in mind that you still need something for transactional workloads and there are few one-size-fits-all solutions. Now, having said that, look at the recent Solidigm announcement of the D5-P5336. They are showing a 110 µs small random read, described as TLC performance in a QLC form factor. That’s 5x faster than the QLC VAST is currently using. Surely a game changer for folks focused on COTS solutions.

Blocks & Files: Do very high-speed block array storage instances in the cloud using ephemeral storage, in a similar way to Silk and Volumez, represent a threat to on-premises block arrays?

Rob Young: Volumez is a very interesting newcomer. We see that InterSystems is an early showcase customer adopting Volumez for its cloud-based IRIS analytics. What is interesting about Volumez is the control plane that lives in the cloud.

On-prem storage growth is stagnant while clouds are climbing. These solutions are no more of a threat to on-prem than the shift that is organically occurring.

Secondly, Volumez is a Kubernetes play. Yes, next-gen dev is focused on K8s, but many enterprises are like aircraft carriers and slow to pivot. There are a lot of applications that will be traditional 3-tier VM stack for a long time. With cloud costs typically higher than on-prem, it wouldn’t surprise me to see Volumez customers at some point do common-sense things like keeping the test/dev footprint on-prem and production in a cloud provider. Production resides in the cloud in some cases just to feed nearby analytics, plus it is a smaller footprint than test/dev. Cloud mostly owns analytics from here on; the great scale of the shared cloud almost seals that deal.

Blocks & Files: How might these ephemeral storage-based public cloud storage instances be reproduced on-premises?

Rob Young: Carmody tells us an on-prem version of Volumez will happen. Like Volumez, AWS’s VSA also uses ephemeral storage. How will it happen? JBOF on high-speed networks. It might take a while to become common, but suppliers are about today.

Cloud has a huge advantage in network space. Enterprise on-prem lags cloud in that regard, with differing delivery mechanisms (traditional 3-tier VMs and separate storage/network). What Volumez and AWS VSA have in common is that there is no traditional storage controller as we know it.

VAST Data D-nodes are pass-thru storage controllers; they do not cache data locally. VAST Data gets away with that because all writes are captured in SCM (among other reasons). Glenn Lockwood wrote a very good piece in February 2019 describing VAST Data’s architecture. What is interesting is the discussion in the comments, where the speculation is that the pass-thru D-node is an ideal candidate to transition to straight fabric-attached JBOF.

Just prior to those comments, Renen Hallak (VAST co-founder) spoke at Storage Field Day about a potential re-appearance of Ethernet drives. At that point the pass-thru storage controller is no longer there. It’s just getting started, with limited enterprise choices, but folks like VAST Data, Volumez and others should drive fabric-based JBOF adoption. Server-less infrastructure is headed to controller-less. I went off the rails, pie-in-the-sky, a bit here, but what we can anticipate is that several storage solutions become controller-free, with control planes in the cloud, and easily pivot back and forth between cloud and on-prem. For all the kids in the software pool, “hardware is something to tolerate” is the attitude we are seeing from a new storage generation.

Blocks & Files: How might block arrays use CXL, memory pooling and sharing?

Rob Young: Because it is storage, a CXL-based memory pool would be a great fit. The 250 nanosecond access time for server-based CXL memory? There are many discussions on forums about that. From my perspective, it might be a tough sell in the server space if local memory is 3-4x faster than fabric-based memory. However, as mentioned above, 250 ns of added overhead on IO traffic is not a concern. You could see where 4, 6, 8 or 16 PowerMAX nodes sharing common CXL memory would be a huge win in cost savings and allow for many benefits of cache sharing across Dell’s PowerMAX and other high-end designs.
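To put rough numbers on that point, here is a minimal arithmetic sketch. It assumes the 250 ns figure is simply added once to each IO, and it borrows IO response times quoted elsewhere in this interview (110 µs, 300 µs, 2 ms) purely as illustrative inputs. The function name `added_overhead_pct` is made up for this example.

```python
# Illustrative arithmetic only: how much one fixed 250 ns CXL hop adds to a storage IO.
CXL_ADDED_NS = 250  # added access time quoted in the interview

# IO response times mentioned elsewhere in the interview, in microseconds.
io_latencies_us = {
    "110 us QLC small random read": 110,
    "300 us array IO": 300,
    "2 ms QLC array read": 2000,
}


def added_overhead_pct(io_us: float, added_ns: float = CXL_ADDED_NS) -> float:
    """Return the added latency as a percentage of the original IO time."""
    return (added_ns / 1000.0) / io_us * 100.0


for label, io_us in io_latencies_us.items():
    print(f"{label}: +{added_overhead_pct(io_us):.3f}%")
```

Under these assumptions the added latency stays well under 1 percent of every IO, whereas server memory that is 3-4x slower than local DRAM pays that penalty on each access.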

Blocks & Files: Should all block arrays provide file storage as well, like Dell’s PowerStore and NetApp’s ONTAP do? What are the arguments for and against here?

Rob Young: I touched on a bunch of that above. The argument against is the demarcation of functions, limiting the blast radius. It isn’t a bad thing to be block only. If you are using a backup solution that has standalone embedded storage, that is one thing. But if you as a customer are expected to present NFS shares as backup targets, either locally at the client or to the backup servers themselves, you must have separation. As mentioned, Pure has now moved file into its block arrays: unified block and file.

Traditionally, legacy high-end arrays have been block only (or bolt-on NFS with dubious capability). Infinidat claims 15 percent of its customers use their Infinibox as file only, and 40 percent as block and file. The argument for is that there is a market for it, if we look at Infinidat. Likewise, Pure now becomes like the original block/file NetApp. There is a compelling business case to combine if you can, or to use the same vendor with block on one set of arrays and file on another. Smaller shops and limited budgets combine also.

The argument against it is to split the data into different targets (high-end for prod, etc.) and a customer’s architectural preferences prevail. There is a lot of art here, no “one way” to do things. Let me point out a hidden advantage and a caution regarding other vendors. Infinidat uses virtual MAC addressing (as does Pure, I believe) for IP addressing in NAS. On controller reboot via outage or upgrade, the hand-off of that IP to another controller is nearly instant and transparent to the switch. It avoids gratuitous ARPs. One of the solutions mentioned here (and there is more than one) typically takes 60 seconds for IP failover due to ARP on hand-off. This renders NFS shares for ESXi datastores problematic for the vendor I am alluding to, and they aren’t alone. Regarding the 15 percent of Infinidat’s customers that are NFS/SMB only: a number of those customers are using NFS for ESXi. How do we know that? Read Gartner’s Peer Insights, it is a gold mine.

Blocks & Files: How might scale-out file storage, such as VAST Data, Qumulo and PowerScale be preferred to block storage?

Rob Young: Simple incremental growth. Multi-function. At the risk of contradiction, Windows Shares, S3 and NFS access in one platform (but not backup!)

Great SMB advantages of same API/interface, one array as file, other as block. More modern features including built-in analytics at no additional charge in VAST and in Qumulo. In some cases, iSCSI/NFS transactional IO targets (with caveats mentioned.) Multiple drive choices in Qumulo and PowerScale. NLSAS is quite a bit cheaper – still – take a dive on the fainting couch! For some of these applications you want cheap and deep. Video capture is one of them. Very unfriendly to deduplication and compression.

In VAST Data’s case, you will be well poised for the coming deluge. Jeff Denworth at VAST wrote a piece, “The Legacy NAS Kill Shot,” basically pointing out that high bandwidth is coming with serious consequences. That article has bugged me ever since I read it; I perseverated on it far too long. He’s prescient, but like Twain quipped: “it is difficult to make predictions, particularly about the future.” What we can say is that AI/ML shows that a truly disaggregated solution that shares everything, with no traffic cop, is advantageous.

But high bandwidth is coming as the entire stack gets fatter pipelines, not just for AI but in general. As PCIe5, 64 Gbit FC, 100 Gbit Ethernet and higher-performing servers become common, traditional dual-controller arrays will strain under bandwidth bursts. Backup and databases are pathological IO applications; they will be naughty, bursting to much higher reads/writes. Noisy neighbor (and worse behavior) is headed our way at some point.
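As a back-of-the-envelope illustration of that strain, the sketch below counts how many fully bursting links it takes to reach a controller's bandwidth ceiling. The link speeds are nominal per-direction figures, and the 25 GB/s controller ceiling is a made-up number for illustration, not a figure for any real array.

```python
import math

# Nominal per-direction throughput in GB/s; real-world figures will be lower.
LINKS_GBPS = {
    "64 Gbit FC port": 6.4,
    "100 Gbit Ethernet port": 100 / 8,
    # PCIe 5.0: 32 GT/s per lane, 128b/130b encoding, 4 lanes.
    "PCIe 5.0 x4 device": 32 * 4 * (128 / 130) / 8,
}

# Hypothetical ceiling for one controller (assumption, for illustration only).
CONTROLLER_CEILING_GBPS = 25.0


def ports_to_saturate(controller_gbps: float, port_gbps: float) -> int:
    """Smallest number of fully bursting ports that reaches the controller ceiling."""
    return math.ceil(controller_gbps / port_gbps)


for name, gbps in LINKS_GBPS.items():
    n = ports_to_saturate(CONTROLLER_CEILING_GBPS, gbps)
    print(f"{name}: {gbps:.2f} GB/s, {n} bursting link(s) reach the ceiling")
```

With these assumed numbers, a couple of bursting 100 Gbit Ethernet clients are enough to reach the ceiling, which is the noisy-neighbor scenario described above.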

Blocks & Files: How do you view the relative merits of single-tiered all-flash storage versus multi-tiered storage?

Rob Young: Phil Soran, founder of Compellent, in Eden Prairie, Minnesota, came up with auto-tiering; what a cool invention – but it now has a limited use case.

No more tiers. I get it and it is a successful strategy. I’ve had issues with multi-tier in banking applications at month-end. Those blocks that have long since cooled off to two tiers lower are suddenly called to perform again. Those blocks don’t instantly migrate to more performant layers. That was a tricky problem. It took an Infinidat to mostly solve the tiering challenge. Cache is for IO, data is for backing store. Getting the data into a cache layer quickly (or having it already there) is key, and they can get ahead of calls for IO.
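The month-end problem can be reproduced with a toy model. This is a minimal sketch of a naive heat-based tiering loop, not Compellent's or any vendor's actual algorithm. The tier latencies, the `hot_threshold` value and all names are assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical per-tier read latencies in microseconds (tier 0 is fastest).
TIER_LATENCY_US = [100, 500, 5000]


@dataclass
class Block:
    tier: int = 0
    hits: int = 0  # reads since the last rebalance


def read(block: Block) -> int:
    """Serve a read from whatever tier the block sits on right now."""
    block.hits += 1
    return TIER_LATENCY_US[block.tier]


def rebalance(blocks: list[Block], hot_threshold: int = 10) -> None:
    """Periodic background job: promote hot blocks one tier, demote idle ones one tier."""
    for b in blocks:
        if b.hits >= hot_threshold:
            b.tier = max(0, b.tier - 1)
        elif b.hits == 0:
            b.tier = min(len(TIER_LATENCY_US) - 1, b.tier + 1)
        b.hits = 0


month_end = [Block() for _ in range(4)]

# Two quiet rebalance cycles: the month-end blocks cool off two tiers.
rebalance(month_end)
rebalance(month_end)

# Month-end arrives: every read pays bottom-tier latency until the next cycle.
burst = [read(b) for b in month_end for _ in range(10)]
print(max(burst))          # 5000

rebalance(month_end)
print(month_end[0].tier)   # 1: promoted only one tier, and only after the burst
```

In this model the whole burst is served at bottom-tier latency, and the blocks only move up one tier after the work is already done. That is why getting data into cache ahead of the IO matters more than migrating it afterwards.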

Everyone likes to set it and forget it with stable, consistently performant all-flash arrays. IBM provides tiering in its FS/V7K series and it has its advantages. Depending on budget and model, you can purchase a layer of SCM drives and hot-up portions of your hottest data to that tier0 SCM layer from tier1 NVMe SSD/FCM. The advantage here is that the “slower” tier1 still gives very good performance on tier0 misses. There is a clear use case for tiering as SCM/SLC is still quite expensive. Also, there is your lurker: let’s put IBM FS9000 IO at less than 300 µs but more than Infinidat SSA. Additionally, tiering is still a good fit for data consumers that can tolerate slower retrieval times, like video playback (I’m referring to cheap and slow NLSAS in the stack).