Dell has devised a reference architecture for a combined data lake/data warehouse, built from third-party partner software plus its own server, storage, and networking hardware and software.

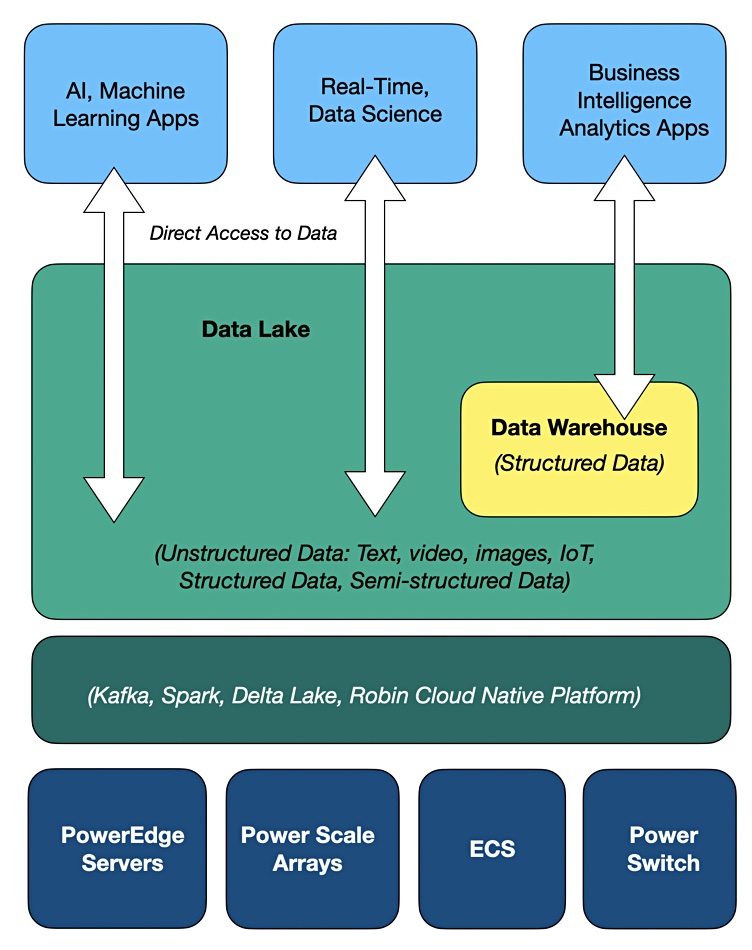

Like Databricks, Dremio, SingleStore, and Snowflake, Dell envisages a single data lakehouse construct. The concept is that you have a single, universal store with no need to run extract, transform and load (ETL) processes to get raw data selected and put into the proper form for use in a data warehouse. It is as if there is a virtual data warehouse inside the data lake.
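To make the "virtual data warehouse inside the data lake" idea concrete, here is a toy sketch of schema-on-read in plain Python. It is not Dell's or any vendor's implementation. The record layout, the `SALES_SCHEMA` columns, and the function names are all hypothetical. Raw, mixed records stay in the "lake" untouched, and a warehouse-style typed view plus a BI-style aggregate are computed at query time instead of being produced by a separate ETL copy.

```python
import json
from collections import defaultdict

# Hypothetical raw lake contents: semi-structured JSON lines landed as-is,
# with no ETL step to clean, type, or copy them into a separate warehouse.
RAW_LAKE = [
    '{"store": "north", "amount": "19.99", "ts": "2022-06-27"}',
    '{"store": "south", "amount": "5.00", "ts": "2022-06-27", "coupon": "X1"}',
    '{"store": "north", "amount": "12.01", "ts": "2022-06-28"}',
    '{"sensor": "t-17", "celsius": 21.5}',  # an IoT reading in the same lake
]

# Hypothetical warehouse-style schema, applied at read time (schema-on-read).
SALES_SCHEMA = {"store": str, "amount": float, "ts": str}


def sales_view(raw_lines):
    """Yield typed sales rows, skipping records that don't fit the schema."""
    for line in raw_lines:
        record = json.loads(line)
        if not all(col in record for col in SALES_SCHEMA):
            continue  # not a sales record; it stays in the lake unchanged
        yield {col: cast(record[col]) for col, cast in SALES_SCHEMA.items()}


def revenue_by_store(raw_lines):
    """A BI-style aggregate computed directly over the raw data."""
    totals = defaultdict(float)
    for row in sales_view(raw_lines):
        totals[row["store"]] += row["amount"]
    return {store: round(total, 2) for store, total in totals.items()}


print(revenue_by_store(RAW_LAKE))
```

The same raw records remain available to other workloads, such as an ML job reading the sensor data, which is the point of keeping one store rather than a lake plus a copied warehouse.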

Chhandomay Mandal, Dell’s director of ISG solution marketing, has written a blog about this, saying: “Traditional data management systems, like data warehouses, have been used for decades to store structured data and make it available for analytics. However, data warehouses aren’t set up to handle the increasing variety of data — text, images, video, Internet of Things (IoT) — nor can they support artificial intelligence (AI) and Machine Learning (ML) algorithms that require direct access to data.”

Data lakes can, he says. “Today, many organizations use a data lake in tandem with a data warehouse – storing data in the lake and then copying it to the warehouse to make it more accessible – but this adds to the complexity and cost of the analytics landscape.”

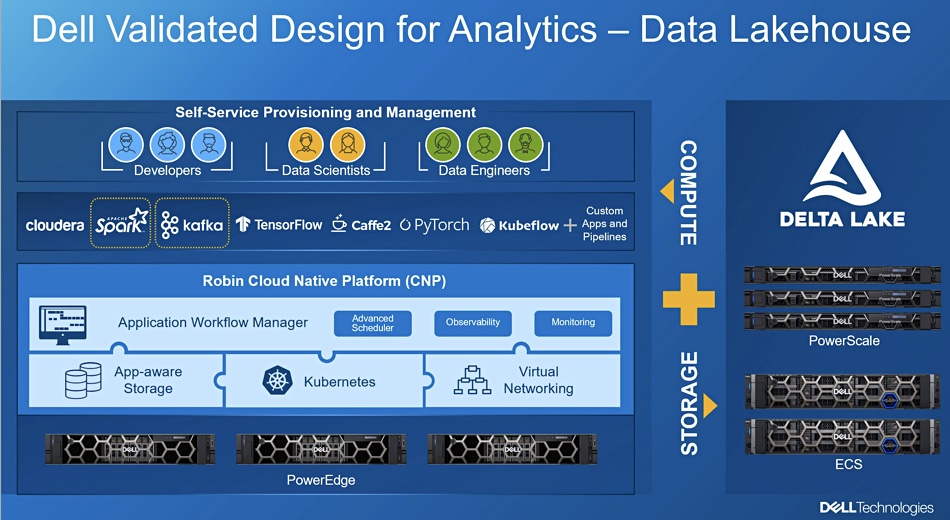

What you need, Dell argues, is one platform to do it all, and its Validated Design for Analytics – Data Lakehouse aims to provide it, supporting business intelligence (BI), analytics, real-time data applications, data science, and machine learning. It is based on PowerEdge servers, PowerScale scale-out file storage, ECS object storage, and PowerSwitch networking. The system can be housed on-premises or in a colocation facility.

The component software includes the Robin Cloud Native Platform, Apache Spark (an open-source analytics engine), and Apache Kafka (an open-source distributed event streaming platform), together with Delta Lake. Delta Lake, the open-source storage layer created by Databricks, is built on top of Apache Spark, and Dell uses it in its own data lakehouse design.
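A key Delta Lake idea is that a table is a set of Parquet data files plus a `_delta_log/` directory of ordered JSON commit files (named `00000000000000000000.json`, `00000000000000000001.json`, and so on), each listing actions such as `add` and `remove`. Readers rebuild the current table by replaying those commits in order. The toy sketch below shows only that replay logic; the in-memory `DELTA_LOG` and the file names are hypothetical, and real logs also carry `metaData`, `protocol`, and `commitInfo` actions plus Parquet checkpoints, all omitted here. It is not the Delta Lake library API.

```python
import json

# Hypothetical commits, modelled loosely on Delta Lake's _delta_log:
# version number -> JSON action lines, applied in version order.
DELTA_LOG = {
    0: ['{"add": {"path": "part-000.parquet"}}',
        '{"add": {"path": "part-001.parquet"}}'],
    1: ['{"add": {"path": "part-002.parquet"}}'],
    # Version 2: a compaction rewrites two small files into one.
    2: ['{"remove": {"path": "part-000.parquet"}}',
        '{"remove": {"path": "part-001.parquet"}}',
        '{"add": {"path": "part-003.parquet"}}'],
}


def snapshot(log, version=None):
    """Return the set of live data files as of `version` (latest if None)."""
    live = set()
    for v in sorted(log):
        if version is not None and v > version:
            break  # stop replaying once past the requested version
        for line in log[v]:
            action = json.loads(line)
            if "add" in action:
                live.add(action["add"]["path"])
            elif "remove" in action:
                live.discard(action["remove"]["path"])
    return live


print(sorted(snapshot(DELTA_LOG)))             # current table
print(sorted(snapshot(DELTA_LOG, version=1)))  # "time travel" to version 1
```

The first call returns `['part-002.parquet', 'part-003.parquet']` and the second `['part-000.parquet', 'part-001.parquet', 'part-002.parquet']`. Because a commit is only visible once its log file exists, readers see either all of a commit's changes or none of them, which is how a log like this lets warehouse-style transactional semantics sit on top of plain files in a lake.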

Dell is also partnering with Rakuten-acquired Robin.IO and its Kubernetes platform, which builds on open-source Kubernetes.

Dell recently announced an external table access deal with Snowflake and says this validated data lakehouse design complements it. Presumably, Snowflake external tables could reference data held in the Dell data lakehouse.

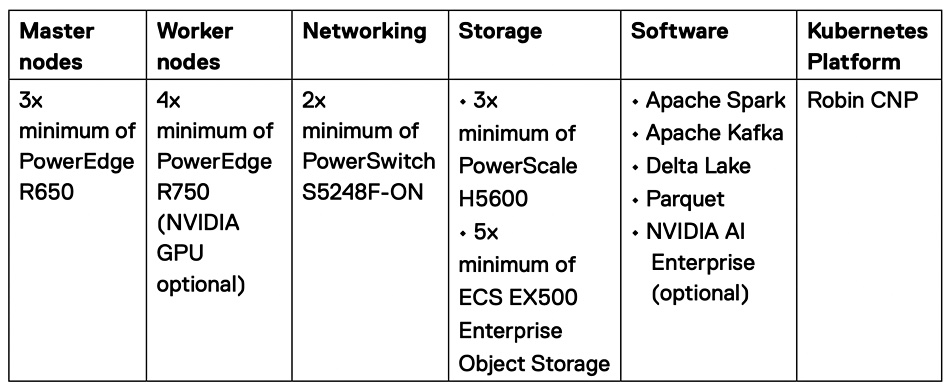

As the Dell graphic above shows, things start to look complicated. A Dell Solution Brief contains more information, along with this table:

Clearly this is not an off-the-shelf system; it needs careful investigation, component selection, and sizing before you cut a deal with Dell.

Interestingly, HPE has a somewhat similar product, Ezmeral Unified Analytics. It also uses Databricks' Delta Lake technology, Apache Spark, and Kubernetes. HPE is running its Discover event this week, with plenty of news expected. Perhaps the timing of Dell's announcement is no accident.