Asigra, Coraid, Gartner and Toshiba supply a clutch of storage news to start the week, with better backup pricing, block and mobile phone storage and a supplier-ranking look at business analytics and data warehousing.

There are updates from AOMEI, BackBlaze, Fujitsu and StorageOS, followed by CxO hires by Pivot3 and Pure Storage.

Asigra goes unlimited

Backup supplier Asigra has introduced an unlimited-use subscription licensing model for managed service providers (MSPs), saying the existing pay-as-you-grow model is broken.

It says the new scheme should let MSPs accurately forecast backup software licensing costs for customers with mixed environments.

Asigra bases the unlimited-use pricing on the service provider’s previous year’s usage plus a jointly-agreed growth factor for the term of the agreement. Usage is based on capacity, virtual machines, physical machines, sockets, users, and other measures. There are no unexpected pricing uplifts during the term for the service provider, meaning predictable Asigra-based costs.

David Farajun, CEO of Asigra, says: “This first-of-its-kind cloud backup software licensing approach fixes the broken pay-as-you-go model, which has become a sore point for service providers globally due to unplanned pricing spikes as backup volumes surge.”

The increased usage during the term is taken into account when setting the next term’s unlimited use pricing.

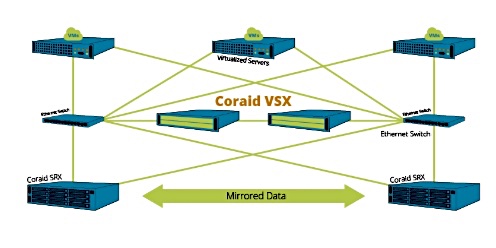

Coraid extends Ethernet block storage scalability

ATA-over-Ethernet (AoE) storage vendor Coraid has updated its VSX product to v8.1.0.

VSX is a diskless storage appliance that provides network storage virtualisation. It uses Coraid’s SRX storage appliances to create an elastic storage system. SRX is Coraid’s EtherDrive software, which provides Ethernet block storage and runs on commodity servers.

AoE is said by Coraid to have a 10x price/performance advantage over Fibre Channel and iSCSI block storage. A VSX with three years of software starts at $4,687.

Gartner’s business analytics MQ sees split market

Gartner’s 2019 Magic Quadrant for Data Management Solutions for Analytics (DMSA) report shows a two-way divide. There is just one visionary vendor, MarkLogic, with a cluster of eight leaders and a second cluster of ten niche players. There are no challengers.

The report mentions an increasingly split market, and implies the market is maturing, saying: “Disruption slows as cloud and non-relational technology take their place beside traditional approaches, the leaders extend their lead, and distributed data approaches solidify their place as a best practice for DMSA.”

Snowflake is the only recent vendor to make the jump into the leaders’ quadrant, having been ranked as a challenger a year ago. Google was a visionary then, and is now also in the leaders’ quadrant. Others, like Cloudera and Hortonworks, are stuck in the niche players’ box.

Google will ship you a full copy of the report if you register.

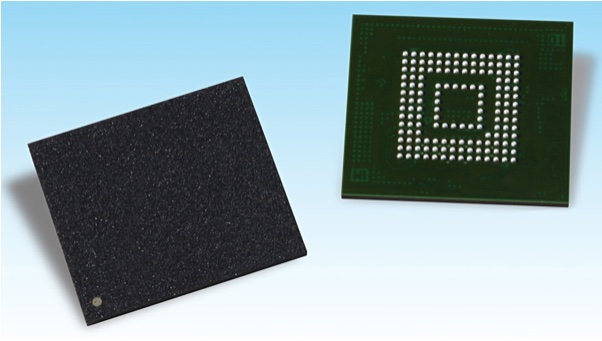

Toshiba’s mobile phone SSD goes faster

Toshiba is sampling 96-layer 3D NAND UFS v3.0 storage. UFS (Universal Flash Storage) is embedded storage for mobile devices. Toshiba’s device has 128GB, 256GB and 512GB capacities.

The UFS v3.0 standard has been issued by JEDEC and specifies an 11.5 x 13mm package.

Toshiba’s mobile phone SSD-like product contains flash memory and a controller which carries out error correction, wear levelling, logical-to-physical address translation, and bad-block management.

It says the new device has a theoretical interface speed of up to 11.6Gbit/s per lane, with two lanes providing 23.2Gbit/s. Sequential read and write performance of the 512GB device are improved by approximately 70 per cent and 80 per cent, respectively, over previous generation devices.

These complied with the JEDEC UFS v2.0 standard, topped out at 256GB capacity, and used 64-layer 3D NAND. The maximum data rate was 1.166Gbit/s. Toshiba does not supply public sequential read and write performance numbers for either its old or new UFS devices.
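The interface arithmetic quoted for the new device can be checked with a short Python sketch. It is only an illustration: the function names are hypothetical, and the byte conversion is a raw divide-by-eight that ignores line-encoding and protocol overhead, so it is an upper bound and not a figure supplied by Toshiba.

```python
def aggregate_gbit_per_s(per_lane_gbit_per_s: float, lanes: int) -> float:
    """Theoretical interface speed across all lanes, in Gbit/s."""
    return per_lane_gbit_per_s * lanes


def raw_gbyte_per_s(gbit_per_s: float) -> float:
    """Raw conversion from Gbit/s to GB/s (8 bits per byte).

    Assumption: encoding and protocol overhead are ignored, so real
    payload throughput will be lower than this value.
    """
    return gbit_per_s / 8


if __name__ == "__main__":
    # Figures from the article: 11.6Gbit/s per lane, two lanes.
    total = aggregate_gbit_per_s(11.6, 2)
    print(f"{total:.1f} Gbit/s aggregate, {raw_gbyte_per_s(total):.2f} GB/s raw")
```

Two lanes at 11.6Gbit/s give the 23.2Gbit/s aggregate quoted, which is roughly 2.9GB/s before any overhead is subtracted.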

Shorts

AOMEI’s Partition Assistant for Windows PC and Server helps in managing hard disks and partitions. Users can resize, move, extend, merge and split partitions. It has been revved to V8.0 with a new user interface and a modernised look and feel.

A Backblaze Cloud Backup v6.0 update enables users to save Snapshots directly to B2 Cloud Storage from their backup account and retain the Snapshots as long as they wish.

Fujitsu has updated one of its four hyperconverged infrastructure (HCI) products, the Integrated System PRIMEFLEX for VMware vSAN, to run SAP applications like HANA. It was first introduced in 2016. Fujitsu provides no hardware details of the refreshed system, merely saying it’s been optimised for SAP. We’re trying to find out more.

Startup StorageOS, which produces cloud native storage for containers and the cloud, has achieved Red Hat Container Certification. A StorageOS Node container turns an OpenShift node into a hyper-converged storage platform, with features like high availability and dynamic volume provisioning. StorageOS is now available through the Red Hat Container Catalog to provide persistent storage for containers.

People

All the ‘P’s this week:-

Rance Poehler has joined HCI vendor Pivot3 as its VP for global sales and chief revenue officer. He joins from Dell Technologies, where he was VP of worldwide sales for cloud client computing, an $8800m business.

Sudhakar Mungamoori has become senior director for product marketing at storage processor startup Pliops. His previous billet was at Toshiba Memory America, where he worked on Kumoscale.

Pure Storage has hired Robson Grieve as its Chief Marketing Officer. He was previously CMO at software analytics company New Relic, and before that, was an SVP and CMO at Citrix.