In this article we explore Storage-Class-Memory (SCM) and explain why it is ready for enterprise IT big time in 2019.

Setting the scene

In terms of access speed, DRAM is the gold standard for holding the data and code that CPUs and their caches work on. Everything else – NAND, disk and tape – is slower but much cheaper.

Artificial intelligence, graph-processing, bio-informatics and in-memory database systems all suffer from memory limitations. They have large working data sets and their run time is much faster if these data sets are all in memory.

Working data sets are getting larger and need more memory space yet memory DIMM sockets per CPU are limited to 12 slots on 2-socket X86 systems and 24 on larger systems.

Memory-constrained applications are throttled by a DRAM price and DIMM socket trap: the more you need the more expensive it gets. You also need more CPUs to work around the 12 DIMM slots per CPU limitation.

A 2016 ScaleMP white paper sums this up nicely. “A dual-socket server with 768GB of DRAM could cost less than $10,000, a quad-socket E7 system with double the capacity (double the cores and 1.5TB memory) could cost more than $25,000. It would also require 4U of rack space, as well as much more power and cooling.”

Storage Class Memory tackles price-performance more elegantly than throwing expensive socketed CPUs and thus more DRAM at the problem.

SCM, or persistent memory (P-MEM) as it is sometimes called, treats fast non-volatile memory as DRAM and includes it in the memory space of the server. Access to data in that space, using the memory bus, is radically faster than access to data in local, PCI-connected SSDs, direct-attach disks or an external array.

Bulking and cutting

It is faster to use the entire memory space for DRAM only. But this is impractical and unaffordable for the vast majority of target applications, which are limited by memory and not by compute capacity. SCM is a way to bulk out server memory space without the expense of an all-DRAM system.

In the latter part of 2018, mainstream enterprise hardware vendors adopted NVMe solid state drives and Optane DIMMs. This month Intel released its Cascade Lake Xeon CPUs with hardware support for Optane. These NVMe drives and Optane DIMMs can be used as storage class memory, and this indicates to us that 2019 will be the year that SCM enters enterprise IT in a serious way.

Background

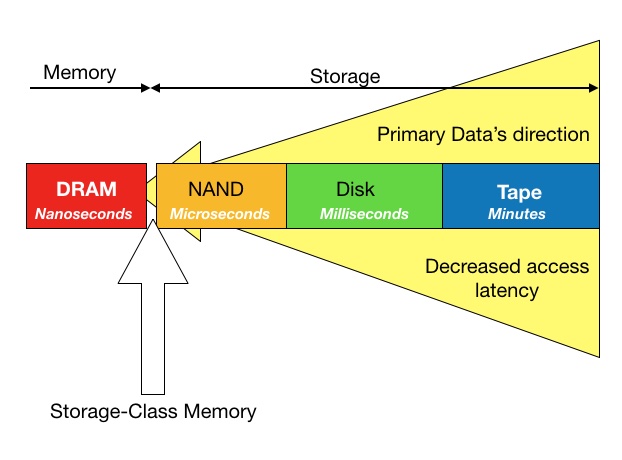

IT system architects have always sought to get primary data access latencies as low as possible while recognising server DRAM capacity and cost constraints. A schematic diagram sets the scene:

A spectrum of data access latency is largely defined by the media used. Coincidentally, the slower the media the older the technology. So, access latency decreases drastically as we move from right to left in the above diagram.

Access type also changes as we move in the same direction: storage media is block-addressable, and memory is byte-addressable. A controller software/hardware stack is typically required to convert the block data to the bytes that are stored in the media. This processing takes time – time that is not needed when reading and writing data to and from memory with Load and Store instructions.
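To make the difference concrete, here is a minimal Python sketch that is not tied to any real driver or device. The `BlockDevice` class, its 4,096-byte block size and the helper function names are illustrative assumptions. It shows that changing one byte on a block-addressable medium means reading a whole block, modifying it and writing it back, whereas byte-addressable memory takes a single store.

```python
BLOCK_SIZE = 4096  # assumed block size, for illustration only


class BlockDevice:
    """Toy block-addressable store: whole blocks are the only unit of I/O."""

    def __init__(self, num_blocks):
        self._data = bytearray(num_blocks * BLOCK_SIZE)
        self.bytes_transferred = 0  # counts traffic to and from the media

    def read_block(self, index):
        start = index * BLOCK_SIZE
        self.bytes_transferred += BLOCK_SIZE
        return bytearray(self._data[start:start + BLOCK_SIZE])

    def write_block(self, index, block):
        if len(block) != BLOCK_SIZE:
            raise ValueError("a block device only accepts whole blocks")
        start = index * BLOCK_SIZE
        self._data[start:start + BLOCK_SIZE] = block
        self.bytes_transferred += BLOCK_SIZE


def update_byte_on_block_device(dev, offset, value):
    """Read-modify-write: the work a controller stack does on our behalf."""
    index, within = divmod(offset, BLOCK_SIZE)
    block = dev.read_block(index)
    block[within] = value
    dev.write_block(index, block)


def update_byte_in_memory(mem, offset, value):
    """Byte-addressable memory: one store touches one byte."""
    mem[offset] = value


dev = BlockDevice(num_blocks=4)
update_byte_on_block_device(dev, offset=5000, value=0x2A)

mem = bytearray(4 * BLOCK_SIZE)
update_byte_in_memory(mem, offset=5000, value=0x2A)

print(dev.bytes_transferred)  # bytes moved to change a single byte
```

In this toy model the block path moves 8,192 bytes (one block read plus one block written) to change a single byte, which is the overhead that Load and Store access avoids.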

From tape to flash

Once upon a time, before disk drives were invented, tape was an online medium. Now it is offline and it can take several minutes to access archival backup data.

Disks, with latencies measured in milliseconds, took over the online data storage role and kept it for decades.

Then along came NAND and solid state drives (SSDs). The interface changed from the SATA and SAS protocols of the disk drive era as SSD capacities rose from GBs to TBs. Now the go-to interface is NVMe (Non-Volatile Memory express), which uses the PCIe bus in servers as its transport. SSDs using NVMe have access latencies as low as 100 microseconds.

In 2018, the network protocols used for SSDs in all-flash arrays are starting to change from Fibre Channel and iSCSI to NVMe using Ethernet (RoCE, iWARP, NVMe TCP), Fibre Channel (NVMe FC) and InfiniBand, with access latencies of 120 – 200 microseconds.

But this is still slower than DRAM, where access latency is measured in nanoseconds.

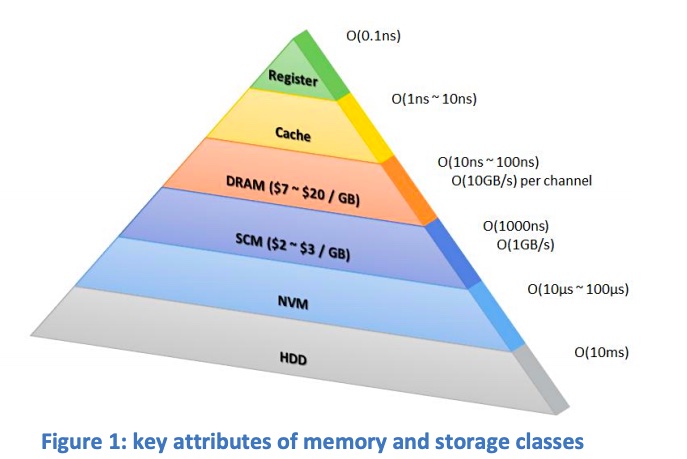

We can illustrate this hierarchy with a chart from ScaleMP (it’s from 2016, so the example costs are out of date).

The NVMe section refers to NVMe SSDs. DRAM is shown with 10 – 100 nanosecond access latency, roughly three orders of magnitude faster than the 100 microseconds or so of an NVMe NAND SSD.

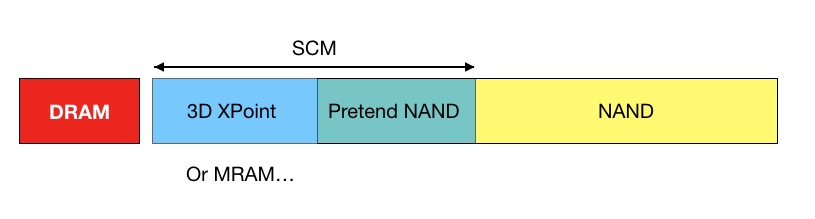

To occupy the same memory space as DRAM, slower media must be presented, somehow, as fast media. Also, block-addressable media such as NAND need to be presented with a byte-addressable interface. Hardware and/or software is required to mask the differences in speed and media access pattern. If NAND is used for SCM it is, in effect, NAND pretending to be memory, and presents as quasi-DRAM.

SCM media and software candidates

There are five SCM media/software possibilities:

- 3D XPoint (Intel and Micron)

- Intel Memory Drive Technology (DRAM + Optane + ScaleMP)

- ME200 NAND SSD + ScaleMP SW MMU (Western Digital)

- Z-SSD (Samsung)

- MRAM, PCM, NRAM and STT-RAM.

3D XPoint and Optane

Intel’s 3D XPoint is a 2-layer implementation of a Phase Change Memory (PCM) technology. It is built at an Intel Micron Flash Technologies foundry and the chips are used in Intel Optane SSDs and are soon to feature in DIMMs.

Optane has a 7-10μs latency. Intel’s DC P4800X Optane SSD has capacities of 375GB, 750GB and 1.5TB, runs at up to 550,000/500,000 random read/write IOPS, has sequential read/write bandwidth of up to 2.4/2.0GB/sec, and can sustain 30 drive writes per day. That endurance is significantly higher than a typical NAND SSD’s.

Intel introduced the DC P4800X in March 2017, along with Memory Drive technology to turn it into SCM. It announced Optane DC Persistent Memory DIMMs in August 2018, with 128, 256 and 512GB capacities and a latency at the 7μs level.

Intel has announced Cascade Lake AP (Advanced Performance) Xeon CPUs that support these Optane DIMMs. Cascade Lake AP will be available with up to 48 cores and support for 12 DDR4 DRAM channels, and will come as a multi-chip package in 1- or 2-socket configurations.

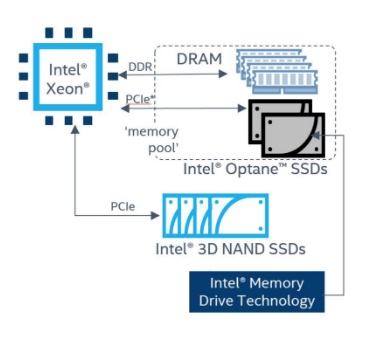

Intel Memory Drive Technology

Intel Memory Drive Technology (IMDT) is a software-defined memory (SDM) product – actually vSMP MemoryONE licensed from ScaleMP.

According to Intel, IMDT is optimized for up to 8x system memory expansion over installed DRAM capacity and provides ultra-low latencies and close-to-DRAM performance for SDM operations. It is designed for high-concurrency and in-memory analytics workloads.

A section of the host server’s DRAM is used as a cache and IMDT brings data into this DRAM cache from the Optane SSDs when there is a cache miss. Data is pre-fetched and loaded into the cache using machine learning algorithms. This is similar to a traditional virtual memory paging swap scheme – but better, according to Intel and ScaleMP.
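The caching idea can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how IMDT is implemented: the `DramCache` class is hypothetical, eviction is plain least-recently-used, and a simple "fetch the next pages" rule stands in for the machine learning prefetcher, whose details Intel and ScaleMP do not describe here.

```python
from collections import OrderedDict


class DramCache:
    """Toy LRU page cache in DRAM, in front of a slower backing store."""

    def __init__(self, capacity_pages, prefetch_depth=2):
        self.capacity = capacity_pages
        self.prefetch_depth = prefetch_depth
        self.pages = OrderedDict()  # page number -> page contents
        self.hits = 0
        self.misses = 0

    def _insert(self, page, data):
        """Place a page in the cache, evicting the least recently used."""
        if len(self.pages) >= self.capacity:
            self.pages.popitem(last=False)
        self.pages[page] = data

    def read(self, page, backing):
        if page in self.pages:
            self.hits += 1
            self.pages.move_to_end(page)  # mark as most recently used
            return self.pages[page]

        # Cache miss: fetch the page from the slow store (the Optane SSD).
        self.misses += 1
        data = backing[page]
        self._insert(page, data)

        # Assumed stand-in for the predictive prefetcher: pull in the
        # next few pages on the guess that access is sequential.
        for ahead in range(1, self.prefetch_depth + 1):
            nxt = page + ahead
            if nxt in backing and nxt not in self.pages:
                self._insert(nxt, backing[nxt])
        return data


# A dict plays the role of the SSD: 16 pages of dummy data.
backing = {n: f"page-{n}" for n in range(16)}
cache = DramCache(capacity_pages=8, prefetch_depth=2)

for n in range(12):  # a purely sequential scan
    cache.read(n, backing)

print(cache.hits, cache.misses)
```

With a sequential scan like this one, only every third read goes to the slow store. A real prefetcher earns its keep on access patterns that are harder to predict than this.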

IMDT will not support Optane DIMMs. Stephen Gabriel, Intel’s data centre communications manager, said: “It doesn’t make sense for Optane DC persistent memory to use IMDT, because it’s already memory.”

Intel’s Optane DIMMs have a memory bus interface instead of an NVMe drive’s PCIe interface. This means that SCM software has to be upgraded to handle byte-addressable instead of block-addressable media.

ScaleMP’s MemoryONE can’t do that at the moment but the company told us that the software will be fully compatible and support Optane DIMM “when it will become available”.

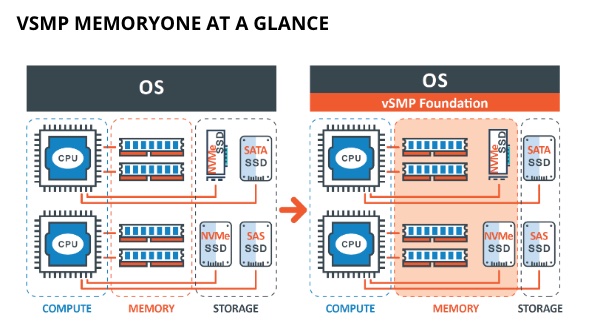

ScaleMP MemoryONE

ScaleMP combines X86 servers into a near-supercomputer by aggregating their separate memories into a single pool with its vSMP software. This is available in ServerONE and ClusterONE forms.

ServerONE aggregates multiple, industry-standard, x86 servers into a single virtual high-end system. ClusterONE turns a physical cluster of servers into a single large system combining the computing-power, memory and I/O capabilities of the underlying hardware and running a single instance of an operating system.

With MemoryONE two separate pools of ‘memory’ in a single server are combined. We say ‘memory’ because one pool is DRAM and the other is a solid state store that is made to look like DRAM by the MemoryONE software.

A bare metal hypervisor functions as a software memory management unit and tells the guest Linux variant operating systems that the available memory is the sum of actual DRAM and the alternate solid state media, either NAND or 3D XPoint.

Intel and Western Digital use ScaleMP’s software, and other partnerships may be in development.

MemoryONE SCM cost versus all-DRAM cost

Let us suppose you need a system with 12TB of memory but only the compute power of two Xeon SP CPUs. An all-DRAM system still requires an 8-socket Xeon SP configuration, with each CPU’s 12 DIMM slots populated with 128GB DIMMs. This adds up to 1.5TB per socket.

ScaleMP tells us that 128GB DIMMs cost, on average, around $25/GB. These DIMMs require a high-end processor – with an ‘M’ part number – which has a price premium of $3,000.

The math says the DRAM for a 1.5TB socket costs $41,400 inclusive of the CPU premium (1,536GB x $25/GB + $3,000) and the entire 8 sockets’ worth will cost $331,200.

The cost is much lower if you use MemoryONE and a 1:8 ratio of DRAM to NAND or Optane. For a 12TB system, the Intel Memory Drive Technology (IMDT) manual says you need 1.5TB of DRAM plus 10 units of the 1.5TB Optane SSDs. (ScaleMP explains that the larger total has to do with decimal to base-2 conversion, and some caching techniques.)

If you don’t need the computing horsepower of an 8-socket system, you could have a 2-socket Xeon SP system, with each CPU supporting 768GB of DRAM (12 x 64GB) – and reach 12TB of system memory with IMDT, using 15TB of Optane capacity (10 x 1500GB P4800X). Also you avoid the $3,000 ‘M’ part premium.

You save the price of six Xeon SP processors, the ‘M’ tax on the two Xeons you do buy, along with sundry software licenses, and the cost difference between 12TB of high-cost DRAM ($307,200 using 128GB DIMMs) vs. 1.5TB of commodity DRAM ($15,360 using 64GB DIMMs) and 10 x P4800X 1.5TB SSDs with the IMDT SKU, which cost $6,580 each.

In total you would pay $81,160 for 12TB of memory (DRAM+IMDT), a saving of $250,040 compared to 12TB of DRAM on an 8 socket Xeon SP machine. Also, if you opt to do this with a dual-socket platform you pay less for the system itself. So you are looking at even greater savings.
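The arithmetic behind these totals is easy to reproduce. The short Python sketch below uses only the prices quoted above. The function and constant names are our own, and the figures cover memory, Optane SSDs and the ‘M’ part premium only, not the CPUs, chassis or software licences.

```python
# Prices as quoted in the article (2018/2019 figures).
PREMIUM_DRAM_PER_GB = 25       # $/GB for 128GB DIMMs
M_PART_PREMIUM = 3_000         # $ premium per 'M' part CPU
COMMODITY_DRAM_TOTAL = 15_360  # $ for 1.5TB built from 64GB DIMMs
OPTANE_SSD_PRICE = 6_580       # $ per 1.5TB P4800X with the IMDT SKU


def all_dram_cost(sockets=8, dimms_per_socket=12, dimm_gb=128):
    """DRAM plus 'M' premium for an all-DRAM configuration."""
    per_socket_gb = dimms_per_socket * dimm_gb  # 1,536GB per socket
    per_socket = per_socket_gb * PREMIUM_DRAM_PER_GB + M_PART_PREMIUM
    return sockets * per_socket


def imdt_cost(optane_ssds=10):
    """Commodity DRAM plus Optane SSDs for the DRAM+IMDT configuration."""
    return COMMODITY_DRAM_TOTAL + optane_ssds * OPTANE_SSD_PRICE


dram_only = all_dram_cost()
with_imdt = imdt_cost()
saving = dram_only - with_imdt

print(f"All-DRAM:  ${dram_only:,}")
print(f"DRAM+IMDT: ${with_imdt:,}")
print(f"Saving:    ${saving:,}")
```

The quoted $15,360 for 1.5TB of 64GB DIMMs implies a commodity DRAM price of $10/GB, against $25/GB for the 128GB parts.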

ScaleMP and Netlist HybriDIMMs

In July 2018 Netlist, an NV-DIMM developer, announced an agreement with ScaleMP to develop software-defined memory (SDM) offerings using Netlist’s HybriDIMM.

HybriDIMM combines DRAM and existing NVM technologies (NAND or other NVMe-connected media) with “on-DIMM” co-processing to deliver a significantly lower cost of memory. Host servers recognise it as a standard LRDIMM, without BIOS modifications. Netlist is aiming for a product launch in 2019.

Netlist states: “PreSight technology is the breakthrough that allows for data that lives on a slower media such as NAND to coexist on the memory channel without breaking the deterministic nature of the memory channel. It achieves [this] by prefetching data into DRAM before an application needs it. If for some reason it can’t do this before the applications starts to read the data, it ensures the memory channels integrity is maintained while moving the data [onto] DIMM.”

As noted above, ScaleMP’s MemoryONE will be fully supportive of non-volatile Optane and NAND DIMMs.

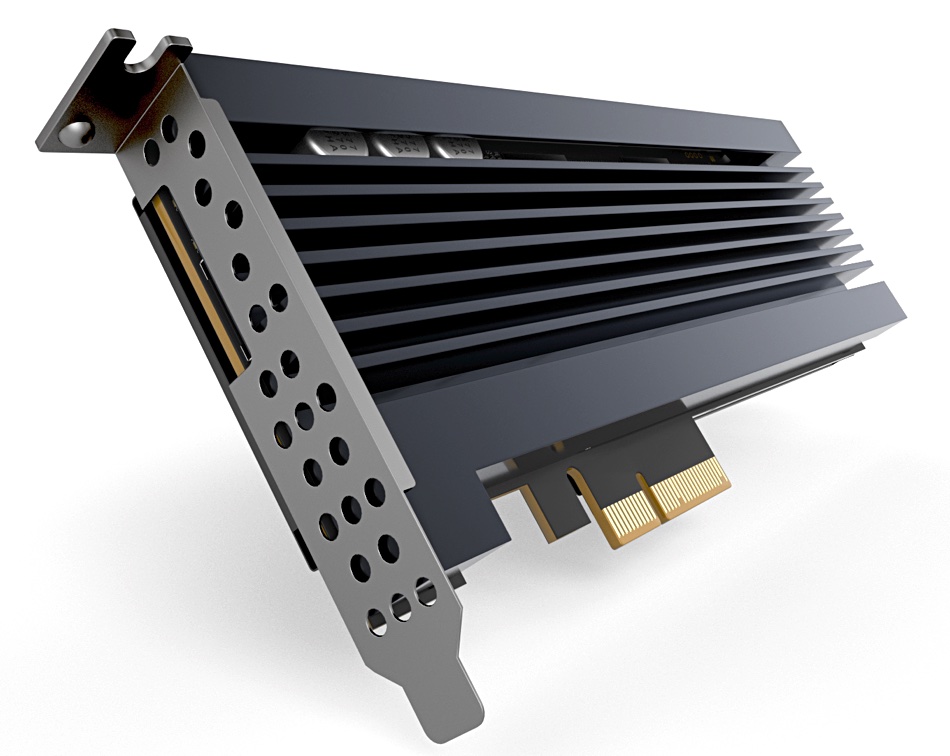

Western Digital Memory Expansion drive

Western Digital’s SCM product is the ME200 Memory Expansion drive. This is an optimised Ultrastar SN200 MLC (2bits/cell) SSD, plus MemoryONE software.

This is like the Intel IMDT product but uses cheaper NAND rather than Optane.

Western Digital says a server with DRAM + ME200 + MemoryONE performs at 75 – 95 per cent of an all-DRAM system and is less expensive.

- Memcached has 85-91 per cent of DRAM performance with a 4-8x memory expansion from the ME200

- Redis has 86-94 per cent DRAM performance with 4x memory expansion

- MySQL has 74-80 per cent DRAM performance with 4-8x memory expansion

- SGEMM has 93 per cent DRAM performance with 8x memory expansion

We have not seen any Optane configuration performance numbers, but it is logical to infer that the alternative using Optane SSDs instead of NAND will perform better and cost more.

However, Western Digital claims the ME200 route gets customers up to “three times more capacity than the Optane configuration”.

Eddy Ramirez, Western Digital director of product management, says there is “not enough compelling performance gain with Optane to justify the cost difference.”

Samsung Z-SSD

Z-NAND is, basically, fast flash. Samsung has not positioned this as storage-class memory but we think it is a good SCM candidate, given Intel and Western Digital initiatives with Optane and fast flash.

Samsung’s SZ985 Z-NAND drive has a latency range of 12-20μs for reads and 16μs for writes. It has an 800GB capacity and runs at 750,000/170,000 random read/write IOPS. Intel’s Optane P4800X runs at 550,000/500,000, so its write performance is much faster, but the Samsung drive has about 36 per cent more read performance (750,000 versus 550,000 IOPS).

The SZ985’s sequential bandwidth is up to 3.1 GB/sec for reads and writes, with the P4800X offering 2.4/2.0 GB/sec. Again Samsung beats Intel.

The SZ985 is rated at 30 drive writes per day, the same as the Optane P4800X. For the 750GB P4800X that equates to 41PB written during its warranted life.
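The 41PB figure can be checked from the drive-writes-per-day rating. The sketch below assumes a five-year warranty period, which is not stated in this article, and uses decimal units (1PB = 1,000,000GB). The function name is our own.

```python
def petabytes_written(capacity_gb, dwpd, warranty_years, days_per_year=365):
    """Total data written over the warranty period, in decimal petabytes."""
    total_gb = capacity_gb * dwpd * days_per_year * warranty_years
    return total_gb / 1_000_000  # 1PB = 1,000,000GB in decimal units


# 750GB drive, 30 drive writes per day, assumed five-year warranty.
pbw = petabytes_written(capacity_gb=750, dwpd=30, warranty_years=5)
print(f"{pbw:.1f} PB written")
```

Under those assumptions the result is a little over 41PB, in line with the figure quoted for the 750GB P4800X.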

With this performance it is a mystery why Samsung is not making more marketing noise about its Z-SSD. And it is a puzzle why ScaleMP and Samsung are not working together. It seems a natural fit.

Samsung provided a comment, saying: “Samsung is working with various software and hardware partners to bring Z-SSD technology to the market, including some partners that are considering Z-SSD for system memory expansion. We can’t discuss engagements with specific partners at the moment.”

Watch this space.

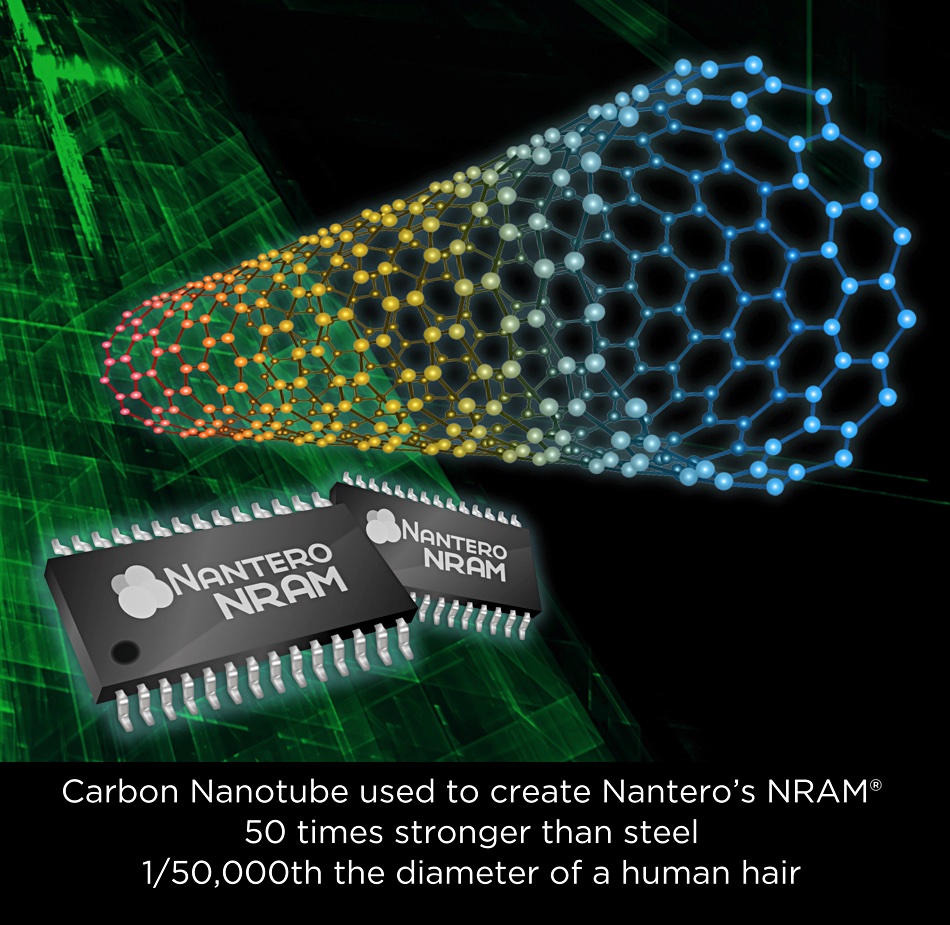

MRAM, PCM, NRAM and STT-RAM

Magnetic RAM and STT-RAM (Spin Transfer Torque RAM) are the same thing: non-volatile and byte-addressable memory using spin-polarised currents to change the magnetic orientation, and hence bit value, of a cell.

Crocus, Everspin and STT are the main developers here and they claim their technology has DRAM speed and can replace DRAM and NAND, plus SRAM and NOR, and function as a universal memory.

But no semiconductor foundry has adopted the chip design for mass production and consequently the technology is unproven as practical SCM.

PCM is Phase-Change Memory and it is made from a chalcogenide glass material whose state is switched from crystalline to amorphous and back again by an electric current. The two states have differing resistance values, which are detected by a different electric current and provide the two binary values needed: 0 and 1.

This is another prove-it technology with various startups and semiconductor foundries expressing interest. Micron, for example, demonstrated PCM tech ten years ago. But no real technology is available yet.

NRAM (Nanotube RAM) uses carbon nanotubes and is the brainchild of Nantero. Fujitsu has licensed the technology, which is said to be non-volatile, DRAM-like in speed, and to possess huge endurance. This is another yet-to-be-proven technology.

Summary

For NAND to succeed as SCM, software is required to mask the speed difference between it and DRAM and provide byte addressability. ScaleMP MemoryONE software provides this functionality and is OEMed by Intel and Western Digital.

Samsung’s exceptional Z-SSD should be an SCM contender – but we discount this option until the company gives it explicit backing.

For now, Optane is the only practical and deliverable SCM-only media available. It has faster-than-NAND characteristics, is available in SSD and DIMM formats and is supported by MemoryONE.

Cascade Lake AP processors will be generally available in 2019, along with Optane DIMMs. This combination provides the most realistic SCM componentry available thus far. We can expect server vendors to produce SCM-capable product configurations using this CPU and Optane DIMMs.

The software, hardware and supplier commitment elements are all in place for SCM systems to be adopted by enterprises for memory-constrained applications in 2019 and escape the DRAM price and DIMM slot limitation traps.