NetApp has announced a stripped-down, hot-box EF series all-flash array with double the performance of its predecessor.

Suggested EF600 workloads include Oracle databases, SQL Server, real-time analytics, and high-performance computing applications on top of a BeeGFS parallel file system.

NetApp said the EF600 offers a leading price/performance ratio for these workloads, especially compared to SAS-based all-flash arrays, but has not revealed pricing.

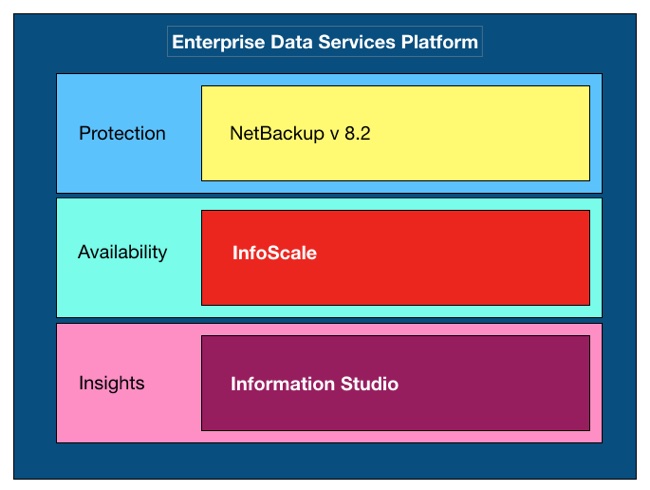

EF block storage arrays sit below NetApp’s ONTAP AFF unified file and block arrays in general power, and have a stripped-down set of software features.

The EF570 was launched in September 2017, two years after the 2015-launched EF560 which it replaced. Now, two years later, we have its replacement.

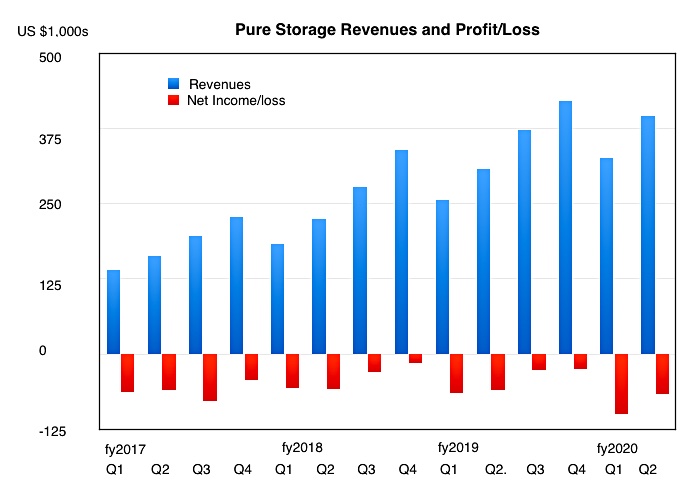

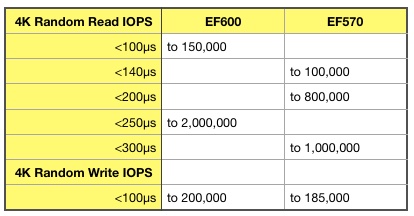

The new top-end EF600 all-flash array, with its end-to-end NVMe storage, is twice as fast as the EF570, at 2 million IOPS. The EF570 supported NVMe-over-Fabrics access by host servers but didn’t have an NVMe path to the drives.

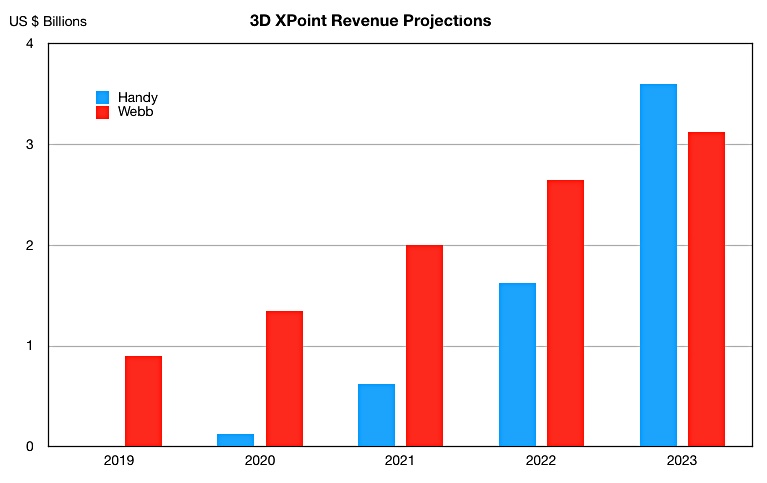

That meant the EF570 could output up to 21GB/sec while the EF600 cranks up to 44GB/sec. The EF600 also sustains lower latencies at higher IOPS than the EF570, particularly for random reads.

Oddly, the EF600 supports only up to 360TB of raw capacity from the 24 drives in its 2U chassis, while the EF570 supported up to 1.8PB from its 120 drives, spread across a 2U base chassis and four expansion shelves. We have asked NetApp why the EF600 has lower capacity and no expansion capability.
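A quick back-of-envelope check on those capacity figures may help. The sketch below assumes decimal units (1PB = 1,000TB) and that each quoted maximum has every slot filled with the same size of drive; neither assumption is confirmed by NetApp, and the function and dictionary names are purely illustrative.

```python
# Figures quoted in the article; decimal units assumed (1PB = 1000TB).
ARRAYS = {
    "EF600": {"raw_tb": 360, "drives": 24},
    "EF570": {"raw_tb": 1800, "drives": 120},
}


def tb_per_drive(raw_tb, drives):
    """Average raw capacity per drive slot, in TB."""
    return raw_tb / drives


for name, spec in ARRAYS.items():
    per_drive = tb_per_drive(spec["raw_tb"], spec["drives"])
    print(f"{name}: {per_drive:.0f}TB per drive")
```

On these numbers both arrays work out to about 15TB per drive, which suggests the capacity gap comes from the drive count (24 versus 120) rather than from smaller drives.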

Simplified choices

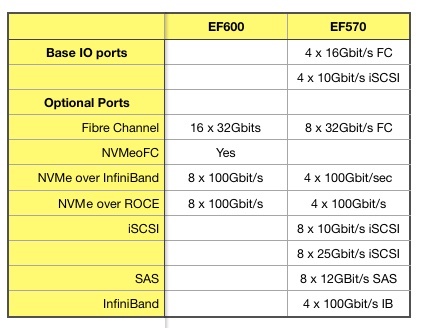

The EF600 has a greatly simplified set of IO port choices compared to the EF570:

Basically, 16Gbit/s Fibre Channel, SAS and 10 and 25Gbit/s iSCSI are canned, with the EF600 supporting NVMe over Fabrics (using Fibre Channel, InfiniBand or Ethernet) or 32Gbit/s Fibre Channel only. Mellanox provides the 100Gbit/s network controllers needed for NVMe across InfiniBand or Ethernet (RoCE).

Another aspect of EF600 simplification over the EF570 is host OS support. The EF600 supports hosts running SUSE Linux Enterprise Server only. In contrast, the EF570 supported IBM AIX, CentOS, Windows Server, Oracle Enterprise Linux and Solaris, VMware ESX and both Red Hat and Ubuntu Linux as well. All gone now and, again, we have asked why.

The EF600 supports SANtricity Snapshot and volume copy, but not SANtricity thin provisioning, synchronous and asynchronous mirroring or Cloud Connector, which the EF570 did. We have asked NetApp why it has removed these options.

There’s a NetApp blog about the EF600 which points readers to a product webpage and that leads on to a data sheet.

NetApp query answers

Blocks & Files gratefully received answers from NetApp to the questions we asked, and here they are:

Blocks & Files; The EF600’s maximum raw capacity is 360TB while the EF570’s is 1.8PB. Why is this, please?

NetApp; The reason for this is contained in question #2; 24 drives maximum limits the max capacity possible. There is no software architectural limit; as larger SSDs are integrated, the max raw capacity will increase.

Blocks & Files; The EF600 supports 24 drives with no expansion shelves while the EF570 supports 120, via 4 expansion shelves. Why does the EF600 support far fewer drives?

NetApp; The EF600 is focused on extreme low latency and high performance, which are most efficiently accomplished within a single enclosure.

Blocks & Files; According to a data sheet, the EF600 supports SANtricity Snapshot and volume copy, but not SANtricity thin provisioning, synchronous and asynchronous mirroring or Cloud Connector. Why not, please?

NetApp; The initial release of the EF600 is laser-focused on very low latency and high performance as mentioned. Additional features are planned for future releases.

Blocks & Files; The EF600 supports only one host OS: SUSE Linux Enterprise Server. The EF570 supports IBM AIX, CentOS, Windows Server, Oracle Enterprise Linux and Solaris, VMware ESX and both Red Hat and Ubuntu Linux as well. Why the slimmed-down host OS choice, please?

NetApp; The current narrow OS matrix is solely due to the state of support for NVMe in the operating systems. SLES 12 SP4 is the first enterprise-ready OS for NVMe-oF that supports Asymmetric Namespace Access (ANA) which is required for high availability I/O paths. There are other OSes not far behind that can be certified when they are available.

Blocks & Files; Does the EF600 have different CPUs than the EF570? Is that why it can do 2 million IOPS instead of the EF570’s 1 million IOPS?

NetApp; The EF600 does have newer generation processors, but there are many innovations for performance in the product.

Blocks & Files; If so, is that part of the reason why the EF600 can sustain lower latencies for a greater number of IOPS?

NetApp; There are improvements in the NVMe devices’ latency in addition to product latency improvements.

Blocks & Files; Do the Mellanox network controllers also help with the lower latency and more sustained IOPS numbers?

NetApp; We were not sure if this question was about the host adapters or the controller HICs (host interface cards). Mellanox is a great partner, enabling us to deliver a robust RDMA-enabled solution for both EF600 and EF570. For the EF600 we are seeing the same latency and IOPS improvements across the interfaces, regardless of whether NVMe/FC vs. NVMe/IB or NVMe/RoCE is in use.