Editor’s Note: This blog by storage industry veteran Hubbert Smith begins with a brief history of SLC-MLC-TLC-QLC and then gives a forecast of what to expect from QLC. It pertains mostly to server/cloud SSDs.

History

SLC – single level cell stores one bit, so a cell needs only two charge levels. A full charge of 1.8V is a one and zero charge is a zero.

MLC – multi level cell stores two bits. A cell needs four charge levels to store two bits: 1.8V, 1.2V, 0.6V and 0V.

TLC – triple level cell stores three bits. A cell needs eight charge levels to store three bits; spread evenly from 0V to 1.8V, they sit only about 0.26V apart: 1.8V, 1.54V, 1.29V, 1.03V, 0.77V, 0.51V, 0.26V and 0V.

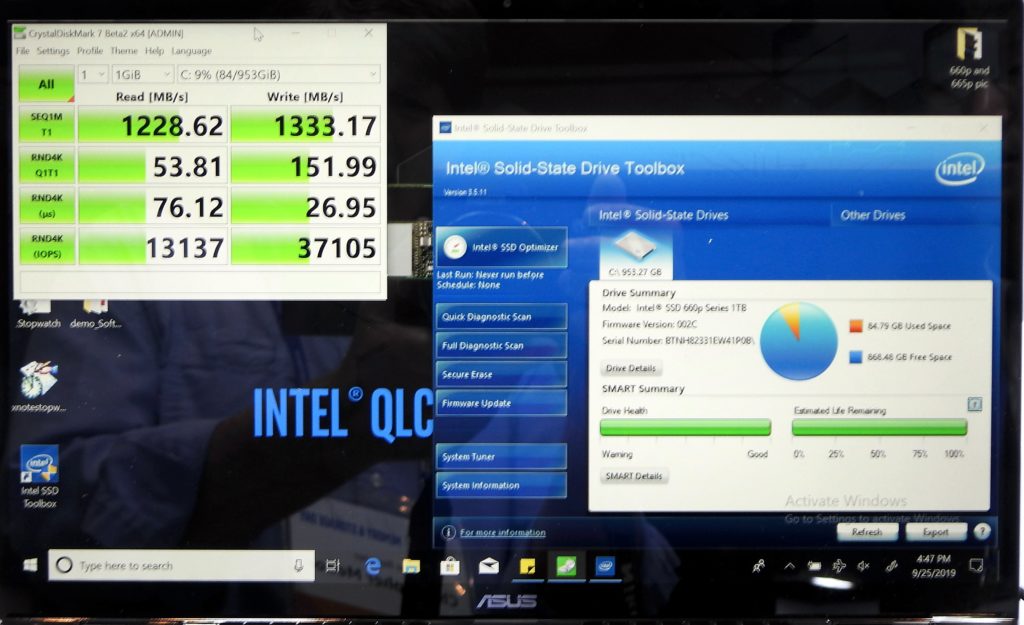

QLC – quad level cell stores four bits. A cell needs sixteen charge levels… you get the idea. The voltage gaps between levels are even tighter, so reads are even more error prone.
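To make the shrinking margins concrete, here is a small Python sketch. It assumes, purely for illustration, that every cell type shares the same 0V to 1.8V window used in the examples above, with levels spaced evenly; real NAND uses different, vendor-specific voltages. The names `charge_levels` and `level_spacing` are hypothetical helpers, not part of any library.

```python
# Illustrative model only: assumes the same 0V-1.8V window used in the
# examples above, with charge levels spread evenly across it.
MAX_VOLTAGE = 1.8  # volts, the illustrative full-charge value from the text

CELL_TYPES = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def charge_levels(bits_per_cell: int) -> int:
    """A cell storing n bits must distinguish 2**n charge levels."""
    return 2 ** bits_per_cell


def level_spacing(bits_per_cell: int, max_voltage: float = MAX_VOLTAGE) -> float:
    """Gap between adjacent levels when 2**n levels share one voltage window."""
    return max_voltage / (charge_levels(bits_per_cell) - 1)


if __name__ == "__main__":
    for name, bits in CELL_TYPES.items():
        print(f"{name}: {charge_levels(bits):2d} levels, "
              f"~{level_spacing(bits):.2f} V between levels")
```

Run as a script, it reports gaps of about 1.80V, 0.60V, 0.26V and 0.12V for SLC, MLC, TLC and QLC: each extra bit doubles the number of levels that must fit in the window, so the distance between neighbouring levels shrinks sharply.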

Let’s look at the capacity and the value of the NAND and SSD:

- Compared to SLC, MLC doubles capacity, adding 100 per cent more bits per cell.

- Compared to MLC, TLC adds 50 per cent more bits per cell.

- Compared to TLC, QLC adds only 25 per cent more bits per cell.

Diminishing returns

QLC offers only 25 per cent more capacity than TLC, yet with every generation the industry accepts slower performance and poorer write endurance in exchange for more bits per cell. With each step buying a smaller capacity gain for those same sacrifices, QLC shows diminishing returns.

Additionally, NAND is ugly, and QLC NAND is a whole new level of ugly. Here’s why.

NAND cells are less than perfect, and firmware goes through all sorts of contortions to identify and correct media errors. SSD data retention relies on the electrical charge stored in each cell, and given enough time that charge leaks away. Recharging cells is one of the many maintenance tasks handled by firmware: while an SSD is powered, the firmware refreshes cell charges every 30 to 60 days.
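The refresh described above can be pictured as a periodic sweep over blocks. The Python sketch below is a deliberately simplified, hypothetical model: the `Block` type, the `refresh` function and the 45-day threshold (picked from the 30 to 60 day range) are assumptions for illustration, and real firmware policies are vendor-specific and more involved.

```python
from dataclasses import dataclass

# Hypothetical refresh policy: the 45-day threshold is an assumption picked
# from the 30-to-60-day range mentioned in the text; real firmware varies.
REFRESH_INTERVAL_DAYS = 45


@dataclass
class Block:
    block_id: int
    last_programmed_day: int  # day the block was last written or refreshed


def blocks_due_for_refresh(blocks, today, interval=REFRESH_INTERVAL_DAYS):
    """Return blocks whose stored charge is older than the refresh interval."""
    return [b for b in blocks if today - b.last_programmed_day >= interval]


def refresh(blocks, today, interval=REFRESH_INTERVAL_DAYS):
    """Rewrite stale blocks, restoring full charge by resetting their age.

    A real SSD would read, error-correct and rewrite the data to a fresh
    block; here that whole step is modeled as updating the timestamp.
    """
    stale = blocks_due_for_refresh(blocks, today, interval)
    for b in stale:
        b.last_programmed_day = today
    return [b.block_id for b in stale]


if __name__ == "__main__":
    drive = [Block(0, 0), Block(1, 20), Block(2, 50)]
    print(refresh(drive, today=60))  # only block 0 is 45+ days old
```

Note that nothing in this sweep runs while the drive is unpowered; the model only captures the maintenance that happens while power is available.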

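What happens when an SSD has no power source to refresh cell charges? No power means no recharge. Sooner or later the electrons drift away, the cell’s charge dissipates and data loss occurs. And because QLC packs sixteen levels into the same voltage window, a QLC cell needs to lose far less charge than an SLC or TLC cell before it reads back as the wrong value.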

QLC promise and reality

The promise of QLC is very different from the reality of QLC. Some systems vendors are using QLC to drive down cost per GB. Inside a system, where drives stay powered and firmware can keep refreshing cells, this is likely workable.

Some memory vendors are also pitching QLC for cold storage; these folks are naively over-selling QLC, because cold-storage drives sit unpowered for long stretches with nothing to refresh their cells.

Humble advice

- Stick to proven TLC. Let someone else save a nickel and learn hard lessons.

- Consider SLC for systems where data sets are small but overwrite rates are high. (Its endurance is far higher than that of MLC, TLC and QLC.)

Note: Consultant and patent holder Hubbert Smith (LinkedIn) has held senior product and marketing roles with Toshiba Memory America, Samsung Semiconductor, NetApp, Western Digital and Intel. He is a published author and a past board member and workgroup chair of the Storage Networking Industry Association, and has played a significant role in changing the storage industry with data centre SSDs and enterprise SATA disk drives.