Actifio has released a major update of its eponymous copy data manager software.

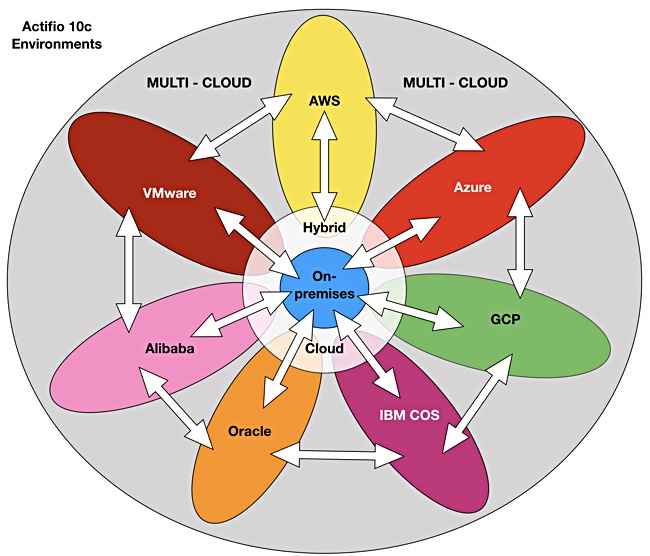

Actifio 10c focuses on three ‘Cs’: cloud, containers and copy data. The company emphasises backup as a means of getting data into its orbit and using it for archive, disaster recovery, migration and copy data provisioning within and between public clouds and on-premises data centres.

CEO Ash Ashutosh said in a press briefing in Silicon Valley last week: “Copy data begins with backup. And goes all the way to archive.” He said cloud backup is traditionally a low-cost data graveyard but Actifio “provides instant access and re-use.”

Warming to his theme, Ashutosh added: “The disaster recovery workload is just metadata,” claiming 10c offers single-click DR. In this worldview, virtual machine recovery, migration and disaster recovery are just other forms of copy data management.

Peter Levine, general partner at Andreessen Horowitz, the venture capital firm which has invested in Actifio, said in the same briefing that “where software eats the world, data eats software.” Actifio is “in the exact right place – hybrid cloud,” he said. “Hybrid and multi-cloud have to work together… to move data seamlessly between all these repositories… It was ahead of its time but the time has now grown into Actifio – [which is] right on the cusp of cracking open a new layer in the software stack.”

Mostly cloudy

10c backs up on-premises data to object storage in the cloud and supports seven public clouds: Alibaba, AWS, Azure, GCP, IBM COS, Oracle and VMware.

Actifio’s objects can be used to instantly recover virtual machines. However, at this point, only AWS, Azure, GCP and IBM COS are supported for direct-to-cloud backups of on-premises VMware virtual machines.

The 10c product stores database backups in the cloud in their native format and can clone them. Actifio positions this as a facility for in-cloud test and development. Developers and testers can request fresh clones from within tools such as Jenkins, Git, Maven, Chef or Ansible, via a 10c API. The Actifio software then sends database clones from these backups to the testers’ containers running in the AWS, Azure, GCP and IBM COS clouds.

10c also brings simple wizards for SAP HANA, SAP ASE, Db2 and MySQL database backup and recovery, plus external snapshot support for Pure Storage and IBM Storwize arrays.

Actifio’s objects are self-describing – they carry their metadata within them, which aids their movement between clouds. Ashutosh said: “You can’t scale with a separate object metadata database.” He noted object storage supplier Cloudian, for example, uses a Cassandra database for metadata.

10c speed

The 10c speed angle is strengthened by Actifio’s ability to create and provision 50TB clones of an Oracle database from a 17TB object, and deliver them to five test developers as virtual object copies in eight minutes. It can deliver five production copies, in block format, in 13 minutes. (An IBM/ESG document describes this test.) Actifio said Oracle RAC’s own procedures would take 90 minutes at best and possibly days to produce five block-based copies.
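
To put those figures side by side, here is a rough back-of-envelope calculation in Python. It uses only the numbers quoted above. The derived ratios are illustrative, and describing 50TB against 17TB as a "size ratio" is our assumption: the article does not say how the two sizes relate.

```python
# Figures quoted in the article; everything derived from them is illustrative.
CLONE_TB = 50               # size of each provisioned Oracle clone
SOURCE_OBJECT_TB = 17       # size of the object the clones are built from
COPIES = 5                  # clones delivered in the test
VIRTUAL_COPY_MINUTES = 8    # five virtual object copies
BLOCK_COPY_MINUTES = 13     # five production copies in block format
RAC_BEST_CASE_MINUTES = 90  # Actifio's best-case figure for Oracle RAC procedures

# Total clone capacity presented to users across the five copies.
logical_tb = CLONE_TB * COPIES

# Assumption: treat clone size over object size as a simple size ratio.
size_ratio = CLONE_TB / SOURCE_OBJECT_TB

# Best-case speed-up for block copies, using Actifio's own comparison figure.
block_speedup = RAC_BEST_CASE_MINUTES / BLOCK_COPY_MINUTES

print(f"{logical_tb}TB of clones presented from a {SOURCE_OBJECT_TB}TB object")
print(f"size ratio about {size_ratio:.2f}:1")
print(f"block copies about {block_speedup:.1f}x faster than the RAC best case")
```

On these numbers, the block-format copies come out roughly seven times faster than the best case Actifio quotes for Oracle RAC.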

An on-premises cache can be used to speed up self-service on-premises recoveries and lower cloud egress charges. The device uses SSD storage and can cache reads from and writes to cloud object storage to increase overall IO speed.
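
The general idea of a local cache in front of object storage can be sketched in a few lines of Python. This is not Actifio's implementation. The class names, the write-through policy and the least-recently-used eviction are all assumptions made for illustration, and the "cloud" here is an in-memory stand-in that simply counts reads.

```python
from collections import OrderedDict


class FakeObjectStore:
    """Hypothetical stand-in for a cloud bucket; counts reads (egress)."""

    def __init__(self):
        self.blobs = {}
        self.reads = 0

    def get(self, key):
        self.reads += 1
        return self.blobs[key]

    def put(self, key, data):
        self.blobs[key] = data


class LocalCache:
    """Hypothetical on-premises cache: read-through, write-through, LRU."""

    def __init__(self, store, capacity):
        self.store = store
        self.capacity = capacity
        self.entries = OrderedDict()  # least recently used entry first

    def _remember(self, key, data):
        self.entries[key] = data
        self.entries.move_to_end(key)
        while len(self.entries) > self.capacity:
            self.entries.popitem(last=False)  # evict least recently used

    def read(self, key):
        if key in self.entries:           # cache hit: no cloud read
            self.entries.move_to_end(key)
            return self.entries[key]
        data = self.store.get(key)        # cache miss: one cloud read
        self._remember(key, data)
        return data

    def write(self, key, data):
        self.store.put(key, data)         # assumption: write-through
        self._remember(key, data)


store = FakeObjectStore()
cache = LocalCache(store, capacity=2)
cache.write("vm-backup-1", b"block-a")
cache.read("vm-backup-1")
cache.read("vm-backup-1")
print("cloud reads so far:", store.reads)
```

In this sketch, repeated recoveries of recently written or recently read data are served locally, which is where the saving in egress charges would come from.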

Actifio partners

Actifio 10c is generally available in the first quarter of 2020. The enhancements in Actifio 10c will also be available in deployments of Actifio GO, the company’s multi-cloud copy data management SaaS offering, as well as Actifio’s Sky and CDX products.

Actifio has more than 3,600 enterprise customers in 38 countries. Hitachi and NEC are big resellers in Japan and Lenovo is also a reseller. IBM resells Actifio’s software as its Virtual Data Pipeline, and this competes somewhat with IBM’s own Recover software. There is no partnership with HPE or NetApp but, Ashutosh says: “We’re friendly with Dell EMC.”

Actifio will sell its software in the SMB market through resellers.