Kioxia America

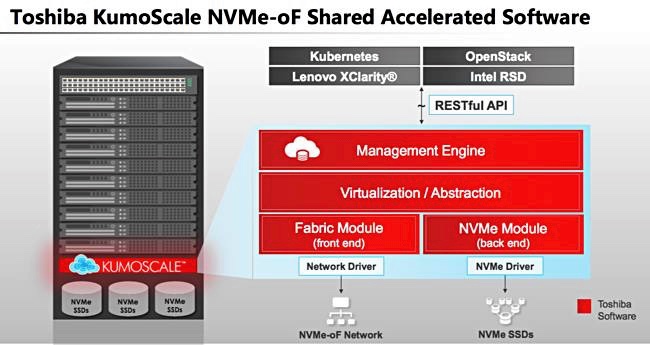

Kioxia America has added snapshots and clones to its KumoScale all-flash storage system.

It has done this by adding Kubernetes CSI-compliant snapshots and clones to the NVMe-over-Fabrics-based KumoScale software. This means faster backup for large, stateful containerised applications such as databases. Database operations generally have to be quiesced during a backup. A snapshot takes seconds, so it releases the database for normal operation more quickly than backup methods that copy database records or their changes.

KumoScale snapshotting works with the CSI-compatible snapshot feature of Kubernetes v1.17. Users can incorporate snapshot operations in a cluster-agnostic way and take application-consistent snapshots of Kubernetes stateful sets directly from the Kubernetes command line.
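As an illustration of what driving CSI snapshots and clones from the Kubernetes command line involves, here is a minimal Python sketch that builds the relevant manifests. It assumes the beta snapshot API (`snapshot.storage.k8s.io/v1beta1`) that shipped with Kubernetes v1.17 and a CSI driver supporting snapshots and cloning. The PVC `db-data`, the StorageClass `kumoscale` and the VolumeSnapshotClass `kumoscale-snapclass` are hypothetical placeholders, not names taken from Kioxia's documentation.

```python
import json

# Hypothetical names throughout: "db-data", "kumoscale" and "kumoscale-snapclass"
# are placeholders, not KumoScale defaults.
SNAPSHOT_API = "snapshot.storage.k8s.io/v1beta1"  # beta snapshot API in Kubernetes v1.17


def volume_snapshot(name: str, pvc: str, snapshot_class: str) -> dict:
    """VolumeSnapshot object asking the CSI driver to snapshot an existing PVC."""
    return {
        "apiVersion": SNAPSHOT_API,
        "kind": "VolumeSnapshot",
        "metadata": {"name": name},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc},
        },
    }


def pvc_from_source(name: str, storage_class: str, size: str, source: dict) -> dict:
    """PVC pre-populated from a snapshot (restore) or from another PVC (clone)."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "storageClassName": storage_class,
            "accessModes": ["ReadWriteOnce"],
            "resources": {"requests": {"storage": size}},
            "dataSource": source,
        },
    }


snap = volume_snapshot("db-data-snap", "db-data", "kumoscale-snapclass")

# Restore: a new PVC populated from the snapshot.
restore = pvc_from_source(
    "db-data-restore", "kumoscale", "100Gi",
    {"apiGroup": "snapshot.storage.k8s.io", "kind": "VolumeSnapshot", "name": "db-data-snap"},
)

# Clone: a new PVC populated directly from an existing PVC (core API group, so no apiGroup).
clone = pvc_from_source(
    "db-data-clone", "kumoscale", "100Gi",
    {"kind": "PersistentVolumeClaim", "name": "db-data"},
)

# kubectl accepts JSON as well as YAML, so the output can be piped to `kubectl apply -f -`.
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [snap, restore, clone]}, indent=2))
```

Kubernetes requires a clone to live in the same namespace and StorageClass as its source and to be at least as large. An application-consistent snapshot still depends on the database being quiesced or flushed before the VolumeSnapshot is created.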

Separately, Kioxia has rebranded all the old Toshiba Memory Corp. consumer products with the Kioxia name, covering microSD/SD memory cards, USB memory sticks and SSDs. These are for use with smartphones, tablets, PCs, digital cameras and similar devices.

Qumulo gets cosy with Adobe

Scale-out filesystem supplier Qumulo is working with Adobe so that video editing and production staff working from home can collaborate on work previously done in a central location.

Two Adobe software products are involved: Premiere Pro and After Effects. The two companies say the combination enables collaborative teams to create and edit video footage using cloud storage, with the same levels of performance, access and functionality as workstations in the studio.

You can register for a Qumulo webinar to find out more.

Samsung develops 160+ layer 3D NAND

Samsung has accelerated the development of its 160-plus layer 3D NAND, a string-stacked arrangement of two 80-plus layer chips, Korea’s ET News reports. This will be Samsung’s seventh 3D NAND generation in its V-NAND product line.

Samsung’s sixth-generation V-NAND is a 100+ layer chip (the precise layer count is a secret) which started sampling in the second half of 2019. There is no expected sampling date yet for the 160+ layer chip. But it looks like Samsung wants to stay a generation ahead of its competitors, and thereby have lower costs in $/TB terms.

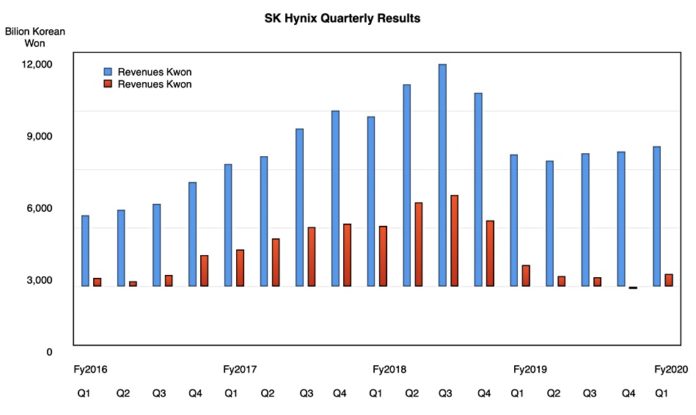

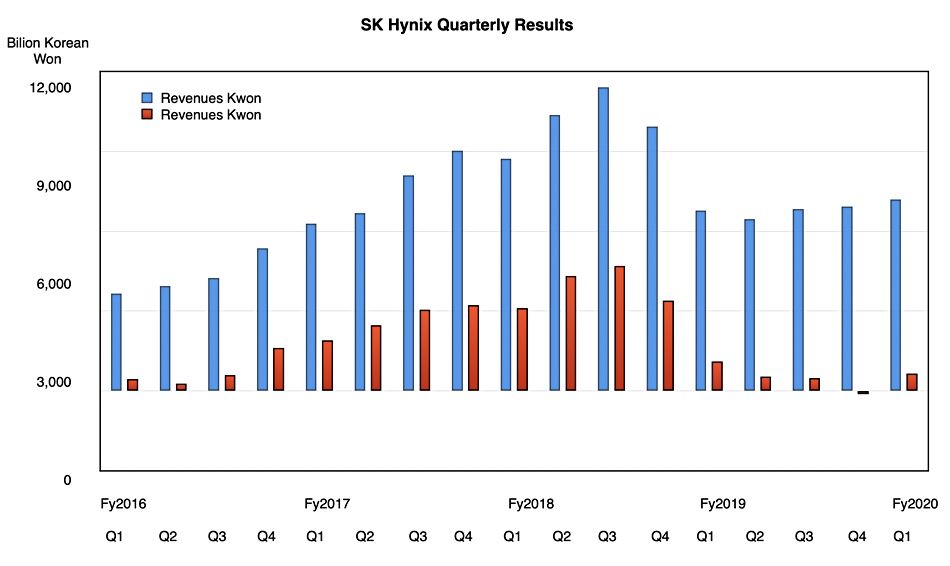

China’s Yangtze Memory Technology Corporation announced this month that it is sampling string-stacked 128-layer 3D NAND. SK hynix should sample a 128-layer chip by the end of 2020.

WekaIO launches Weka AI

WekaIO, a vendor of fast filesystems, talks of AI distributed across edge, data centres (core) and the public cloud, with a multi-stage data pipeline running across these locations. It says each stage within this AI data pipeline has distinct storage IO requirements: massive bandwidth for ingest and training; mixed read/write handling for extract, transform, load (ETL); ultra-low latency for inference; and a single namespace for entire data pipeline visibility.

Naturally, Weka says its AI offering meets all these varied pipeline stage requirements and delivers fast insights at scale.

Weka AI is a framework of customisable reference architectures (RAs) and software development kits (SDKs), developed with technology alliance partners such as Nvidia, Mellanox and others. The company said systems engineered with partners ensure that Weka AI will provide data collection, workspace and deep neural network (DNN) training, simulation, inference, and lifecycle management for the AI data pipeline.

Weka claims its filesystem can deliver more than 73 GB/sec bandwidth to a single GPU client. You can check out a datasheet to get more information.
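To put the 73 GB/sec claim in perspective, the sketch below does the back-of-envelope arithmetic for streaming datasets of a few sizes to one client. The dataset sizes are illustrative assumptions, decimal units are used (1 TB = 1,000 GB), and protocol and application overhead is ignored. Because Weka says "more than" 73 GB/sec, the computed times are the slowest its claim would allow.

```python
# Weka's "more than 73 GB/sec" single-client figure, treated here as a floor.
CLAIMED_GB_PER_SEC = 73


def seconds_to_read(dataset_gb: float, gb_per_sec: float = CLAIMED_GB_PER_SEC) -> float:
    """Idealised transfer time: size divided by bandwidth, with no overheads."""
    return dataset_gb / gb_per_sec


# Illustrative dataset sizes: 1 TB, 10 TB and 100 TB in decimal units.
for size_gb in (1_000, 10_000, 100_000):
    print(f"{size_gb / 1000:>6.0f} TB -> {seconds_to_read(size_gb):8.1f} s")
```

At the claimed rate a terabyte takes about 13.7 seconds, so a 100 TB training set would stream in under 23 minutes.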

Veritas says dark data causes CO2 emissions

Data protector and manager Veritas says storing cold, infrequently-accessed data on high-speed storage makes the global warming crisis worse. This so-called dark data sits on fast flash or disk drives and so consumes energy that it doesn’t actually need.

Veritas claims that, on average, 52 per cent of all data stored by organisations worldwide is ‘dark’, because those responsible for managing it have no idea of its content or value.

The company estimates that 6.4 million tonnes of CO2 will be unnecessarily pumped into the atmosphere this year as a result. It cites an IDC forecast that the amount of data the world stores will grow from 33ZB in 2018 to 175ZB by 2025. Applying the 52 per cent figure implies that, unless people change their habits, there will be 91ZB of dark data in five years’ time.
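The 91ZB figure follows from applying Veritas’ 52 per cent dark-data share to IDC’s 2025 forecast. A minimal Python check, assuming (as Veritas’ projection does) that the share stays constant over time:

```python
# Veritas estimate of the share of stored data that is "dark".
DARK_SHARE_PERCENT = 52
# IDC forecast of total data stored worldwide, as cited by Veritas.
FORECAST_ZB = {2018: 33, 2025: 175}


def dark_data_zb(total_zb: int, share_percent: int = DARK_SHARE_PERCENT) -> float:
    """Dark data in ZB, assuming a constant dark share (integer maths, then one division)."""
    return total_zb * share_percent / 100


for year, total in FORECAST_ZB.items():
    print(f"{year}: {total} ZB stored -> {dark_data_zb(total):.1f} ZB dark")
```

For 2025 this gives 175 × 0.52 = 91.0ZB, matching the figure above.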

Veritas’ announcement says we should explore its Enterprise Data Services Platform to get more information on data protection in the world of dark data – but there’s no specific information there linking it to decreasing dark data to reduce global warming.

Shorts

Databricks is hosting the Hackathon for Social Good as part of the Spark + AI Summit virtual event on June 22-26. The data analytics vendor is encouraging participants to focus their project on one of three issues: providing greater insights into the COVID-19 pandemic, reducing the impact of climate change, or driving social change in their community.

Enterprises whose office workers access critical data now face having these people, sometimes thousands of them, work from home, by default using relatively insecure internet links to reach this sensitive data. They can set up virtual private networks (VPNs) to provide secure links, but this adds complexity. FileCloud says its software gives home workers seamless access to on-premises file shares with VPN-level security, but without a VPN.

The software uses common working folders. It has built-in ransomware protection, anti-virus and smart data leak protection, and there is no need to change file access permissions.

DBM Cloud Systems, which automates data replication with metadata, has joined Pure Storage’s Technical Alliance Partner program. That means DBM’s Advanced Intelligent Replication Engine (AIRE) is available for Pure Storage customers to replicate and migrate petabyte-scale data directly to Pure Storage FlashBlade, from most object storage platforms, including AWS, Oracle, Microsoft and Google.

In-memory real-time analytics processing platform GigaSpaces has announced its v15.5 software release. The upgrade doubles performance overall and introduces ElasticGrid, a cloud-native orchestrator, which the company claims is 20 per cent faster than Kubernetes.

Igneous has updated its DataDiscover and DataFlow software services.

DataDiscover provides a global catalog of all of a customer’s files across its on-premises and public cloud stores. A new LiveView feature provides real-time insight into files by type, group, access time, keyword and so forth. LiveViews (reports) can be shared with other users, taking account of their permissions, and help users find files faster.
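Igneous has not said how LiveView is implemented. The Python sketch below only illustrates the general idea of a by-type, by-access-time view over a file tree, using the standard library on a single local directory. `catalog_by_type` and its `min_idle_days` parameter are hypothetical names; a real global catalog would index many NAS and cloud stores rather than walk one filesystem on demand.

```python
import os
import tempfile
import time
from collections import defaultdict


def catalog_by_type(root: str, min_idle_days: float = 0.0) -> dict:
    """Hypothetical helper: group files under `root` by extension, counting files and bytes.

    If `min_idle_days` > 0, only files not accessed for at least that long are counted.
    Note that many filesystems are mounted with relatime/noatime, so atime may be stale.
    """
    cutoff = time.time() - min_idle_days * 86400
    summary = defaultdict(lambda: {"files": 0, "bytes": 0})
    for dirpath, _dirnames, filenames in os.walk(root):
        for fname in filenames:
            path = os.path.join(dirpath, fname)
            try:
                st = os.stat(path)
            except OSError:
                continue  # file vanished or is unreadable; skip it
            if min_idle_days > 0 and st.st_atime > cutoff:
                continue  # accessed too recently for this view
            ext = os.path.splitext(fname)[1].lower() or "<none>"
            summary[ext]["files"] += 1
            summary[ext]["bytes"] += st.st_size
    return dict(summary)


# Demo on a throwaway directory tree.
with tempfile.TemporaryDirectory() as tmp:
    for name, data in {"a.txt": b"hello", "b.TXT": b"abc", "sub/c.csv": b"xy"}.items():
        path = os.path.join(tmp, name)
        os.makedirs(os.path.dirname(path), exist_ok=True)
        with open(path, "wb") as fh:
            fh.write(data)
    demo = catalog_by_type(tmp)

print(demo)
```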

DataFlow is a migration tool. It supports new NAS devices and cloud filesystems or object stores without vendor lock-in. Data can be moved between on-premises and public cloud environments, whether it is stored as NFS or SMB files or as S3 objects, and NFS and SMB files can be moved to an S3 object store.
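Moving NFS or SMB files into an S3 object store means translating hierarchical file paths into flat object keys. The hypothetical helper below sketches one common convention (the path relative to the share root, joined with `/`, under an optional prefix). It says nothing about how DataFlow actually names objects, and it ignores the permissions and timestamps a real migration tool would also need to carry across.

```python
from pathlib import PurePosixPath, PureWindowsPath


def object_key(share_root: str, file_path: str, prefix: str = "", windows: bool = False) -> str:
    """Hypothetical mapping from a file on an NFS (POSIX) or SMB (Windows) share to an S3 key.

    Key = optional prefix + path relative to the share root, always joined with "/",
    the conventional delimiter in S3 keys. Raises ValueError if the file is outside the share.
    """
    path_cls = PureWindowsPath if windows else PurePosixPath
    relative = path_cls(file_path).relative_to(path_cls(share_root))
    key = "/".join(relative.parts)
    prefix = prefix.strip("/")
    return f"{prefix}/{key}" if prefix else key


print(object_key("/mnt/nfs/projects", "/mnt/nfs/projects/2020/render/shot01.exr", prefix="archive"))
print(object_key(r"Z:\media", r"Z:\media\clips\take 3.mov", windows=True))
```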

Nutanix HCI software has been certified to run with Avid Media Composer video editing software and the Avid MediaCentral media collaboration platform. Nutanix says it is the first HCI-powered system to be certified for Avid products.

Verbatim is launching a range of M.2 2280 internal SSDs, delivering high speeds and low power consumption for desktop, ultrabook and notebook client upgrades.