Company profile: Unstructured data manager Igneous claims it can manage masses of data so large that competitors are incapable of keeping up.

Igneous was founded in 2013 in Seattle and has taken in $67.5m in funding, including a $25m C-round in March 2019. The company focuses on petabyte-scale unstructured data backup, archive and storage, with a public cloud backend.

The initial product involved an on-premises scale-out ARM-powered appliance. Since then it has evolved into a data management supplier, based on commodity hardware. The core NAS backup morphed into a data management services offering and the company developed API-level integration with Pure Storage’s FlashBlade, Isilon OneFS systems and Qumulo Core filers.

Now it offers UDMaaS (unstructured data management as a service) covering file and object data, and providing data protection, movement and discovery. Cloud backends for tiering off data include the big three: AWS, Azure and GCP.

Customers use Igneous to discover low access-rate files and move them off primary storage to lower-cost tiers, and also to back up files. Many other suppliers can do this but they don’t scale so well, or so claims Christian Smith, an Igneous exec with a l-o-n-g title – VP Product, Solutions, Content, Marketing and Customer Success.

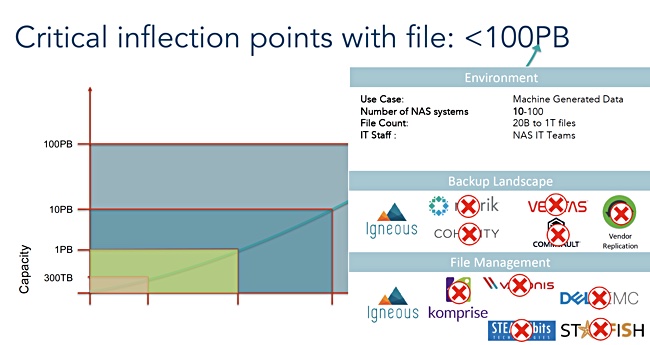

He told a Silicon Valley press briefing last week that Igneous’s DataDiscover and DataProtect backup and archive as-a-service offerings run rings round competitors at the 10PB to 100PB level, citing Cohesity, Commvault, Dell EMC, Komprise, Starfish, STEALTHbits, Varonis and Veritas.

Break points

According to Smith, there are break points as file populations scale past 300TB, then 1PB, and 10PB. Beyond 10PB, the limitations of other file management and backup suppliers are exposed. File manager STEALTHbits runs out of steam in the 300TB to 1PB region – 100 million to 1 billion files. Backup vendors Cohesity and Rubrik and file management firm Varonis start flagging as the file population grows from 1PB to 10PB – 1 billion to 40 billion files.

Above 10PB and up to 100PB, with 20 billion to 1 trillion files, data protectors Commvault and Veritas find life difficult, as do file management products from Dell EMC, Komprise and Starfish.

Coping with trillion-level file populations

Smith said Igneous’s software handles file populations in the trillions. At that size a simple scan of the source file systems can take days unless your software is designed to scale out to the performance required.

DataDiscover scans source filers using API access, or SMB and NFS protocols. It can also scan object stores using S3 and scans up to 30 billion files in a day. The scan uses scale-out, stateless, virtual machines, which run up to 400 files/sec when looking at flash-based storage. Disk is slower.
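To put the scan figures in proportion, here is a back-of-the-envelope sketch in Python. It assumes the 400 files/sec figure applies to each scanner VM and that scale-out is perfectly linear; neither assumption was confirmed at the briefing, and the function names are ours.

```python
import math

SECONDS_PER_DAY = 24 * 60 * 60


def aggregate_rate(files_per_day: float) -> float:
    """Files per second needed to scan files_per_day within 24 hours."""
    return files_per_day / SECONDS_PER_DAY


def scanners_needed(files_per_day: float, files_per_sec_per_vm: float) -> int:
    """Scanner VMs required, assuming (hypothetically) linear scale-out."""
    return math.ceil(aggregate_rate(files_per_day) / files_per_sec_per_vm)


if __name__ == "__main__":
    rate = aggregate_rate(30e9)        # 30 billion files in a day
    vms = scanners_needed(30e9, 400)   # assumed per-VM rate on flash
    print(f"{rate:,.0f} files/sec in aggregate, about {vms} scanner VMs")
```

On those assumptions, 30 billion files a day works out to roughly 347,000 files/sec in aggregate, or something like 869 scanner VMs running flat out.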

Smith said: “We wrote our own scanner. User space agent talks direct to NFS/SMB and there are no kernel interrupts. It’s heavily multi-threaded and we dynamically reallocate threads in process.”

The end result is a searchable InfiniteIndex which can contain trillions of file entries. It holds 20GB-40GB of compressed data for a 10PB file system scan. A 40 billion file entry index would take up 200GB. The scan process is managed remotely by Igneous.

Files moved by DataFlow to lower-cost storage are compressed and the network traffic is shaped to maximise bandwidth usage and move data at line speed.

Parallel ingests, costs and chunking

Igneous uses parallel ingests of data and the ingested data is chunked to combine many files into a single large object, which reduces PUT costs in the public clouds. Smith said it costs “5c per 1,000 puts into Glacier”. A petabyte of data and 1MB files has an AWS PUT cost of $537 with Igneous. This would be $50,000 without Igneous’s chunking.
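The PUT arithmetic can be sketched as follows. The 5c per 1,000 PUTs price is the figure Smith quoted; the 100MB chunk size is our assumption, chosen because it roughly reproduces his numbers, and is not a confirmed Igneous parameter. Binary units (PiB, MiB) are also an assumption.

```python
PUT_PRICE_PER_1000 = 0.05  # dollars per 1,000 PUTs, as quoted by Smith

MIB = 2**20
PIB = 2**50


def put_cost(total_bytes: int, object_bytes: int) -> float:
    """Dollar cost of uploading total_bytes as objects of object_bytes each."""
    puts = -(-total_bytes // object_bytes)  # integer ceiling division
    return puts * PUT_PRICE_PER_1000 / 1000


if __name__ == "__main__":
    per_file = put_cost(PIB, MIB)        # one PUT per 1MB file
    chunked = put_cost(PIB, 100 * MIB)   # assumed 100MB chunks
    print(f"per-file: ${per_file:,.0f}  chunked: ${chunked:,.0f}")
```

With these inputs the per-file cost comes to about $53,700 and the chunked cost to about $537, in line with the rounded figures Smith gave.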

Smith noted AWS Glacier Deep Archive is $12/TB/year and AWS Direct Connect is $1,620/month with 10GbitE. Prices will drop, he said: “At some point Glacier and the Azure equivalent will be free and you will pay per-transaction.”

Files that need to be deleted are instantly marked as deleted. File expiration in the public cloud has a cost that is measured against the chunk’s resulting storage cost, Smith said. “Only when the cost of expiration is less than the storage cost is the chunk compacted.”
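Smith's rule can be read as a simple cost comparison. The sketch below is our interpretation, not Igneous's code: the `Chunk` fields, the retention horizon and the rewrite cost are all hypothetical inputs, and real cloud pricing has more terms (retrieval fees, minimum storage durations) than are modelled here.

```python
from dataclasses import dataclass


@dataclass
class Chunk:
    live_bytes: int      # bytes belonging to files still retained
    deleted_bytes: int   # bytes belonging to files marked as deleted


def should_compact(chunk: Chunk,
                   price_per_byte_month: float,
                   months_remaining: float,
                   rewrite_cost: float) -> bool:
    """Compact only if rewriting costs less than storing the dead bytes."""
    wasted_storage_cost = (chunk.deleted_bytes
                           * price_per_byte_month
                           * months_remaining)
    return rewrite_cost < wasted_storage_cost


if __name__ == "__main__":
    price = 1e-12  # about $1/TB/month, i.e. the $12/TB/year quoted
    mostly_dead = Chunk(live_bytes=10_000_000, deleted_bytes=90_000_000)
    mostly_live = Chunk(live_bytes=99_000_000, deleted_bytes=1_000_000)
    # rewrite_cost here is a single PUT at 5c per 1,000 (hypothetical)
    print(should_compact(mostly_dead, price, 12, 0.00005))
    print(should_compact(mostly_live, price, 12, 0.00005))
```

The effect is that a chunk with only a few deleted files is left alone, since rewriting it would cost more than carrying the dead bytes.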

Igneous monitors latency when scanning and moving files off primary storage and throttles operations if latency lengthens past a set point. The target for data moves can be on-premises object storage or the three main public clouds. Igneous software runs on commodity hardware or appliances and also in the three big public clouds.
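Igneous did not say how its throttling works. One common way to do this kind of thing is to back off quickly when latency crosses the set point and ramp up slowly otherwise; the sketch below shows that approach with hypothetical names and limits, purely as an illustration.

```python
def next_worker_count(current: int,
                      latency_ms: float,
                      set_point_ms: float,
                      max_workers: int = 64) -> int:
    """Return the worker count to use for the next interval.

    Halve the workers when observed filer latency exceeds the set point,
    otherwise add one, staying within 1..max_workers.
    """
    if latency_ms > set_point_ms:
        return max(1, current // 2)
    return min(max_workers, current + 1)


if __name__ == "__main__":
    workers = 16
    # Hypothetical latency samples from the primary filer, in milliseconds
    for sample in [8.0, 12.0, 25.0, 30.0, 9.0]:
        workers = next_worker_count(workers, sample, set_point_ms=20.0)
        print(f"latency {sample:5.1f} ms -> {workers} workers")
```

Backing off faster than ramping up keeps the scanner or mover from fighting production workloads for the filer's attention.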

Users run single-click archive processes through a central management portal, which also handles data recovery from backups or the archive.

Igneous has 40 to 60 customers, mostly large enterprises. There are 75 staff, with 32 in engineering. The company plans a tentative expansion into Europe in 2020 via resellers.

Igneous and its investors are banking on enterprises turning to its services as file populations grow. At the moment Igneous appears to have a clear run but how quickly can competitors scale up their software in response? This will determine Igneous’s chances for long-term success.