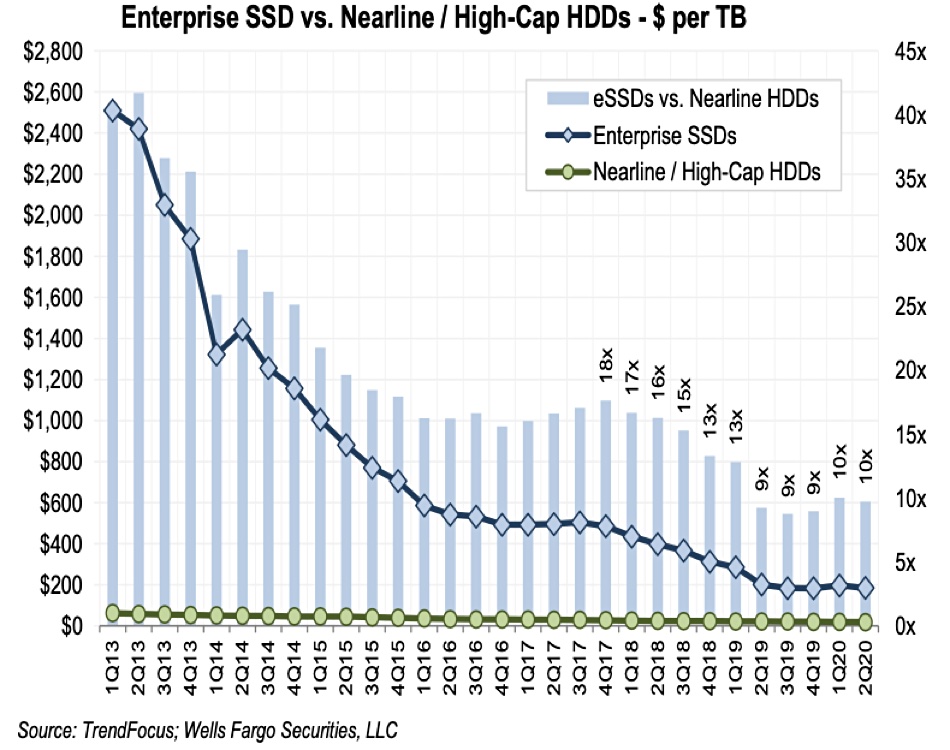

Nearline drives remain the sweet spot in the HDD market – and a key reason is that the 10x price premium that enterprise SSDs command remains stubbornly high and is not closing.

Update: Kioxia enterprise SSD market share data added. 28 August 2020.

SSDs are cheaper to operate than disk drives, needing less power and cooling, and are much faster to access. They are widely expected to mass-replace hard drives for mainstream enterprise workloads – at some point. But when? Wells Fargo analyst Rakers thinks demand for enterprise SSDs will soar when their price drops to no more than five times that of nearline HDDs. However, there is little sign of this happening in current price trends.

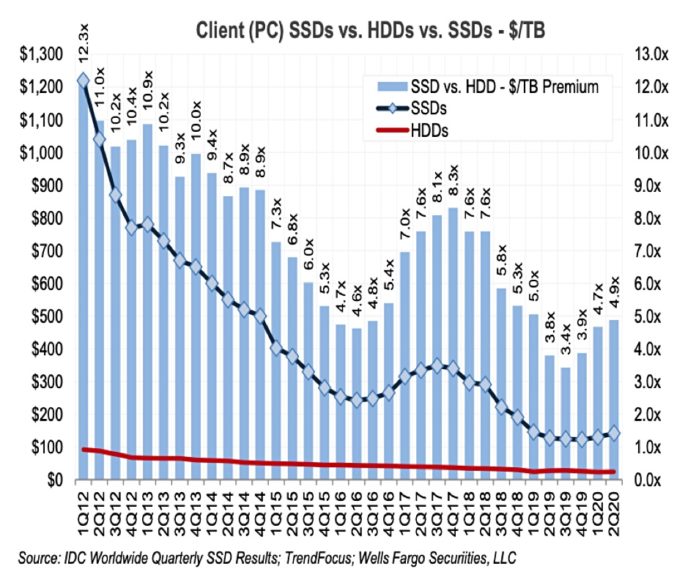

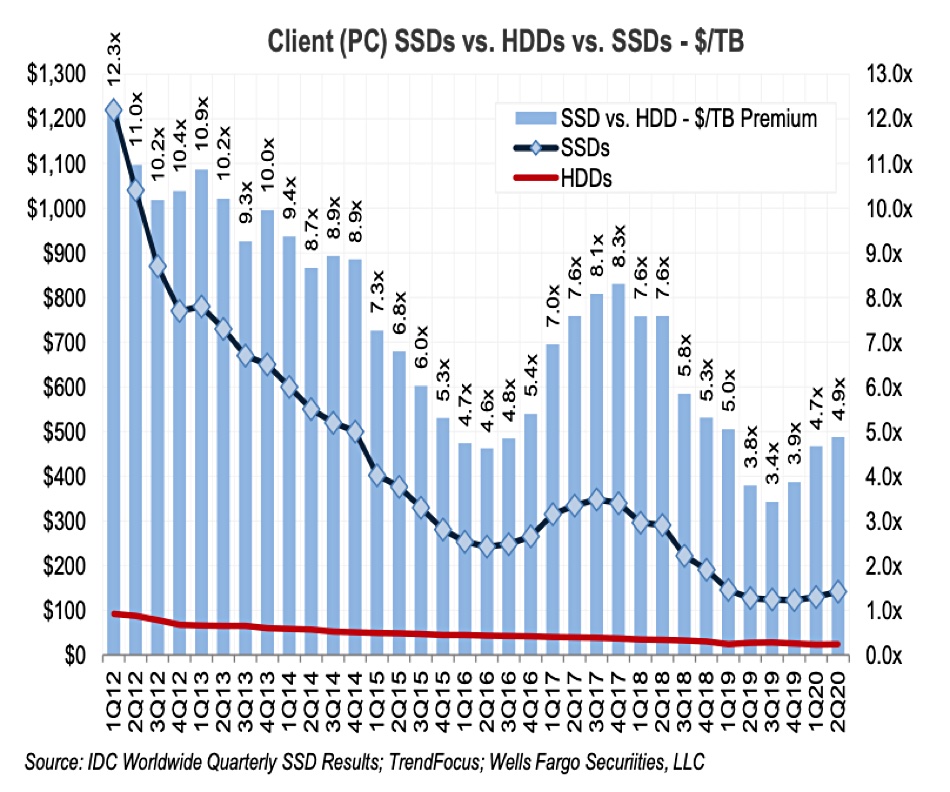

By contrast, SSDs are killing disk drives in the PC and nearline device storage market.

Rakers has pored over the Q2 2020 SSD and HDD numbers collated by TrendForce, a market research firm. Nearline disk drive capacity shipped, he reveals, was about 165.6 EB, almost seven times greater than the enterprise SSD total. Enterprise SSDs cost about $185/TB and nearline HDDs cost about $19/TB, meaning enterprise SSDs carry a 9.7x price premium in $/TB terms. And that premium is staying constant as this chart shows:

Rakers estimates the client SSD price premium over client HDDs is 4.9x. A chart shows how the client SSD price premium has dropped since 2012:

Why the different price premiums? We think this is in part related to capacity. Client storage drives are typically sub-5TB devices and speed of access is prized more than capacity. Also, low-access rate client backup data can be stored in the public cloud. Demand for larger capacity client drives is falling because of these two factors.

Enterprises and hyperscalers have large volumes of nearline storage data and per-drive capacities are now growing to 16TB, 18TB and – soon – 20TB. Even greater capacities are coming down the line. This means the $/TB cost of nearline drives will continue to fall, keeping pace with the declining $/TB cost of enterprise SSDs.
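For readers who want to check the arithmetic, here is a minimal Python sketch that recomputes the enterprise price premium and capacity ratio from the TrendForce figures quoted above. The final calculation is our own illustration, not Rakers': it assumes the nearline HDD price stays at $19/TB (which the article suggests it will not) and asks what enterprise SSD price would then meet his five-times threshold. All variable and function names are hypothetical.

```python
# Figures quoted in the article (TrendForce Q2 2020 data, via Rakers).
ENTERPRISE_SSD_USD_PER_TB = 185.0
NEARLINE_HDD_USD_PER_TB = 19.0
NEARLINE_HDD_EB_SHIPPED = 165.6
ENTERPRISE_SSD_EB_SHIPPED = 24.3

# Rakers' suggested tipping point: SSD price at most 5x the HDD price.
TARGET_PREMIUM = 5.0


def premium(ssd_usd_per_tb: float, hdd_usd_per_tb: float) -> float:
    """Return the SSD price as a multiple of the HDD price, in $/TB terms."""
    return ssd_usd_per_tb / hdd_usd_per_tb


price_premium = premium(ENTERPRISE_SSD_USD_PER_TB, NEARLINE_HDD_USD_PER_TB)
capacity_ratio = NEARLINE_HDD_EB_SHIPPED / ENTERPRISE_SSD_EB_SHIPPED

# Illustrative assumption: the HDD price is frozen at today's level.
threshold_ssd_price = TARGET_PREMIUM * NEARLINE_HDD_USD_PER_TB

print(f"Enterprise SSD price premium: {price_premium:.1f}x")
print(f"Nearline HDD vs enterprise SSD capacity shipped: {capacity_ratio:.1f}x")
print(f"SSD price needed for a 5x premium: ${threshold_ssd_price:.0f}/TB")
```

On these numbers the premium works out to roughly 9.7x and the capacity ratio to roughly 6.8x. If nearline $/TB keeps falling as expected, the SSD price needed to hit a 5x premium falls with it, which is why the gap is not closing.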

Market share

Samsung led the overall NAND SSD market in 2Q 2020 in revenue share with 31 per cent. The Kioxia-Western Digital joint fab actually shipped more – with the two companies collectively taking 33 per cent revenue share. Micron, SK Hynix and Intel had 13.7 per cent, 12 per cent and 11 per cent, respectively.

TrendForce estimates 13.872 million enterprise SSDs shipped in the second quarter, up 84 per cent year on year. Capacity shipped was circa 24.3 EB, up 116 per cent and accounting for about a quarter of all shipped flash capacity. PCIe enterprise SSDs accounted for 15.2 EB, up 179 per cent year on year and 62.6 per cent of the total enterprise SSD capacity. SATA accounted for around 5.5 EB and SAS 3.7 EB.
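The interface split can be sanity-checked the same way. This short Python sketch uses only the capacity figures quoted above, with hypothetical variable names. The per-interface numbers are rounded estimates, so they are not expected to sum exactly to the reported total.

```python
# Enterprise SSD capacity shipped in Q2 2020, in exabytes, as quoted above.
INTERFACE_EB = {"PCIe": 15.2, "SATA": 5.5, "SAS": 3.7}
REPORTED_TOTAL_EB = 24.3


def percent_shares(capacity_by_interface: dict, total_eb: float) -> dict:
    """Return each interface's share of total capacity, in per cent."""
    return {name: eb / total_eb * 100 for name, eb in capacity_by_interface.items()}


interface_shares = percent_shares(INTERFACE_EB, REPORTED_TOTAL_EB)
summed_eb = sum(INTERFACE_EB.values())

for name, share in interface_shares.items():
    print(f"{name}: {share:.1f} per cent")

# The rounded components can differ slightly from the reported total.
print(f"Sum of interfaces: {summed_eb:.1f} EB vs reported {REPORTED_TOTAL_EB} EB")
```

PCIe comes out at about 62.6 per cent, matching the quoted figure, with SATA and SAS at roughly 22.6 and 15.2 per cent.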

Enterprise SSD revenue in Q2 was about $4.5bn, up 95 per cent Y/Y. Supplier capacity market shares were – roughly – Samsung at 38 per cent, Intel at 24 per cent, SK Hynix at 15 per cent, Western Digital at 12 per cent and Micron at 3.5 per cent. Kioxia said its market share was 5.5 per cent.

There were some 67.45 million client SSDs shipped, bringing in around $3.9bn. Client disk drive revenue was about $1.24bn. There was about 27.4 EB of client SSD capacity shipped, slightly more than the 24.3 EB of enterprise SSD capacity shipped.

Supplier unit shipment market shares were Western Digital at nearly 25 per cent, Samsung at about 22 per cent, SK Hynix at 12.5 per cent, Kioxia at 12 per cent and Micron at six per cent.