Fungible, a California composable systems startup, claims its technology will save $67 out of every $100 of data centre total cost of ownership on network, compute and storage resources.

The company this week launched its first product, a data processing unit (DPU) that functions as a super-smart network interface card. Its ambitions are sky-high, namely to front-end every system resource with its DPU microprocessors, offloading security and storage functions from server CPUs.

Pradeep Sindhu, Fungible CEO, issued a boilerplate launch announcement: “The Fungible DPU is purpose built to address two of the biggest challenges in scale-out data centres – inefficient data interchange between nodes and inefficient execution of data-centric computations, examples being the computations performed in the network, storage, security and virtualisation data-paths.”

“These inefficiencies cause over-provisioning and underutilisation of resources, resulting in data centres that are significantly more expensive to build and operate. Eliminating these inefficiencies will also accelerate the proliferation of modern applications, such as AI and analytics.”

“The Fungible DPU addresses critical network bottlenecks in large-scale data centres,” said Yi Qun Cai, VP of cloud networking infrastructure at Alibaba. “Its TrueFabric technology enables disaggregation and pooling of all data centre resources, delivering outstanding performance and latency characteristics at scale.”

Third socket

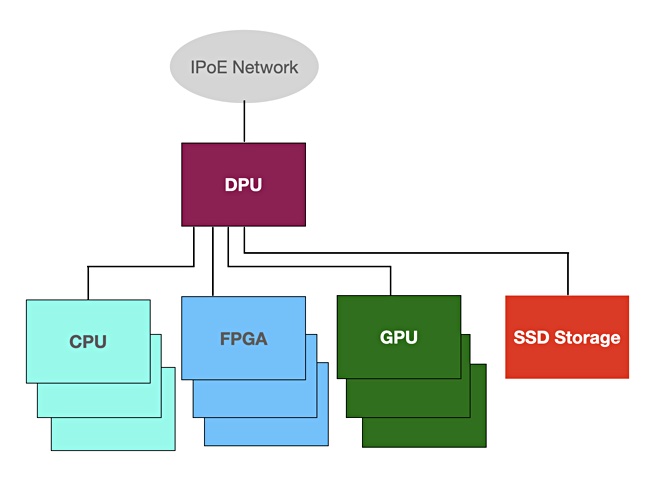

The Fungible DPU acts as a data centre fabric control and data plane to make data centres more efficient by lowering resource wait times and composing server infrastructures dynamically. Fungible claims its DPU acts as the ‘third socket’ in data centres, complementing the CPU and GPU, and delivering unprecedented benefits in performance per unit power and space. There are also reliability and security gains, according to the company.

Fungible says its DPU reduces total cost of ownership (TCO) by 4x for network resources, 2x for compute and up to 5x for storage, for an overall cost reduction of 3x.
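To see how the per-category claims could roll up into the headline figure, here is a rough sketch of the arithmetic. The 30/40/30 split of spending between network, compute and storage is purely an illustrative assumption, as are the function and variable names; Fungible has not published the cost mix behind its 3x number here. Only the reduction factors come from the company's claims.

```python
# Hypothetical spending mix per $100 of TCO. These shares are an
# illustrative assumption, not figures published by Fungible.
ASSUMED_MIX = {"network": 30.0, "compute": 40.0, "storage": 30.0}

# Reduction factors as claimed by Fungible (storage is "up to" 5x).
CLAIMED_FACTORS = {"network": 4.0, "compute": 2.0, "storage": 5.0}


def reduced_cost(mix, factors):
    """Return the cost remaining after dividing each category by its factor."""
    return sum(cost / factors[name] for name, cost in mix.items())


def overall_factor(mix, factors):
    """Return the blended reduction factor across all categories."""
    return sum(mix.values()) / reduced_cost(mix, factors)


def savings_per_100(factor):
    """Return dollars saved per $100 of spend for a given overall factor."""
    return 100.0 * (1.0 - 1.0 / factor)


if __name__ == "__main__":
    remaining = reduced_cost(ASSUMED_MIX, CLAIMED_FACTORS)
    blended = overall_factor(ASSUMED_MIX, CLAIMED_FACTORS)
    print(f"remaining cost per $100: ${remaining:.2f}")
    print(f"blended reduction: {blended:.2f}x")
    print(f"saving at exactly 3x: ${savings_per_100(3.0):.2f}")
```

Under that assumed mix the blended reduction comes out just shy of 3x, and an exact 3x reduction corresponds to the roughly $67 saved per $100 that Fungible quotes. A different mix would give a different blended figure, which is why the split matters when weighing the claim.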

As a composable systems supplier, Fungible will compete with Liqid, HPE Synergy, Dell EMC’s MX7000, and DriveScale. As a DPU supplier it competes with Nebulon and Pensando. The compute-on-storage and composable systems suppliers say they are focusing on separate data centre market problems. Not so, says Fungible. The company argues that this is all one big data-centric compute problem – and that it has the answer.

The technology

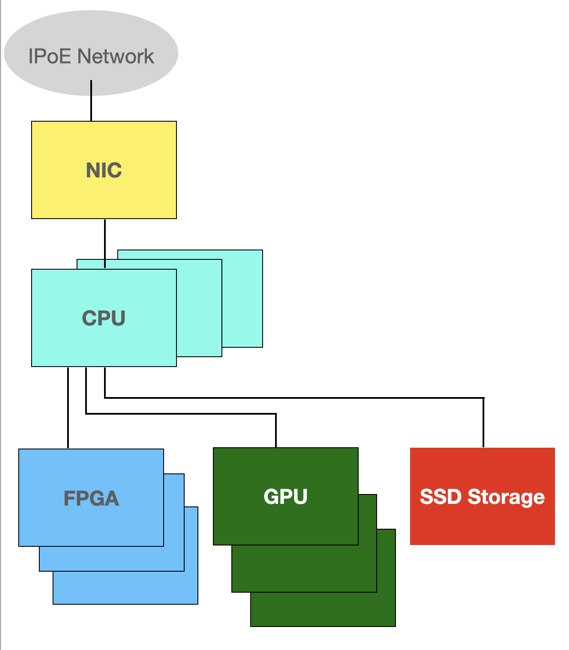

According to Fungible, today’s data centres use server CPUs as traffic cops, but CPUs are bad at inter-node, data-centric communications.

The Fungible DPU acts as the data centre traffic cop and has a high-speed, low-latency network, which it calls TrueFabric, that interconnects the DPU nodes.

This fabric can scale from one to 8,000 racks.

The Fungible DPU is a microprocessor, programmable in C, with a specially designed instruction set and architecture making it super-efficient at dealing with the context-switching involved in handling inter-node operations.

These operations move data and metadata between data centre server CPUs, FPGAs, GPUs, NICs and NVMe SSDs.

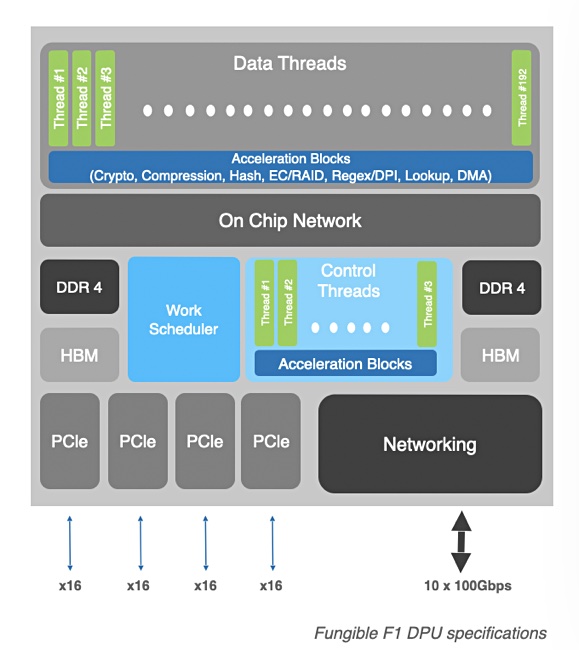

The DPU microprocessor has a massively multi-threaded design with tightly-coupled accelerators, an on-chip fabric and huge bandwidth – four PCIe buses, each with 16 lanes, and 10 x 100Gbit/s Ethernet ports. The DPU runs the TPC-H decision support benchmarks three to 100 times faster than an x86 processor, Fungible says.

There are two versions of the DPU. The 800Gbit/s F1 is for front-ending high-performance applications such as a storage target, analytics, an AI server or security appliance. The lower-bandwidth 200Gbit/s S1 is for more general use such as bare metal server virtualisation, node security, storage initiator, local instance storage and network function virtualisation (NFV).

Both are customisable through C programming, and no change to application software is needed for DPU adoption. Both are available now to Fungible design partners wanting to build hyper-disaggregated systems.