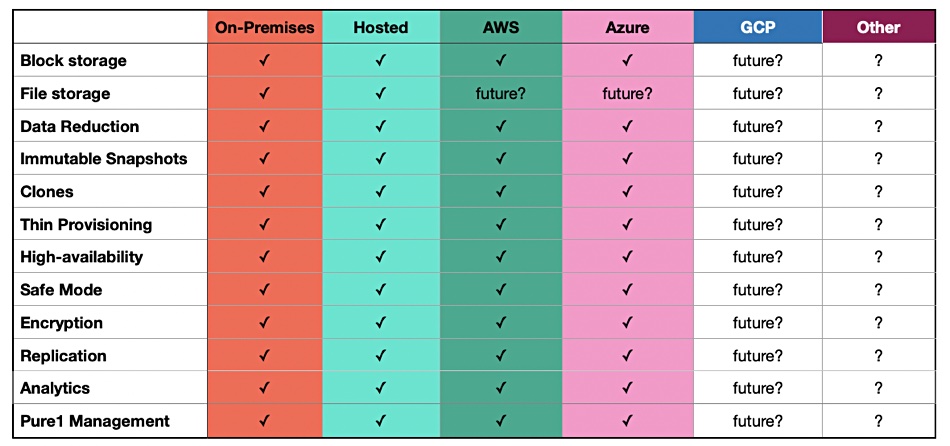

StorONE is running a technology preview of its TRU S1 software installed on Azure.

The company chose Azure cloud over AWS because of customer demand, StorONE marketing head George Crump told us in a briefing last week.

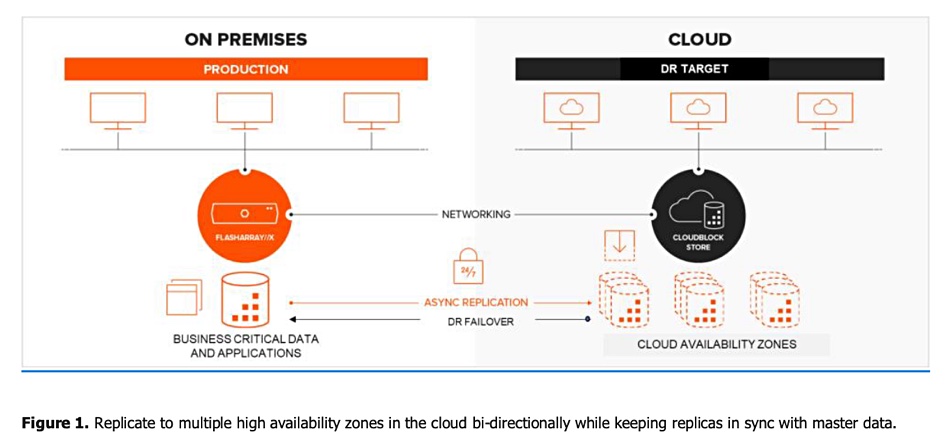

An Azure S1 instance could be a disaster recovery facility for an on-premises StorONE installation. The GUI and functionality are identical to the on-premises StorONE array. It will be interesting to compare StorONE in Azure with Pure’s Cloud Block Store, which is also available in Microsoft’s cloud. The software might become generally available around May.

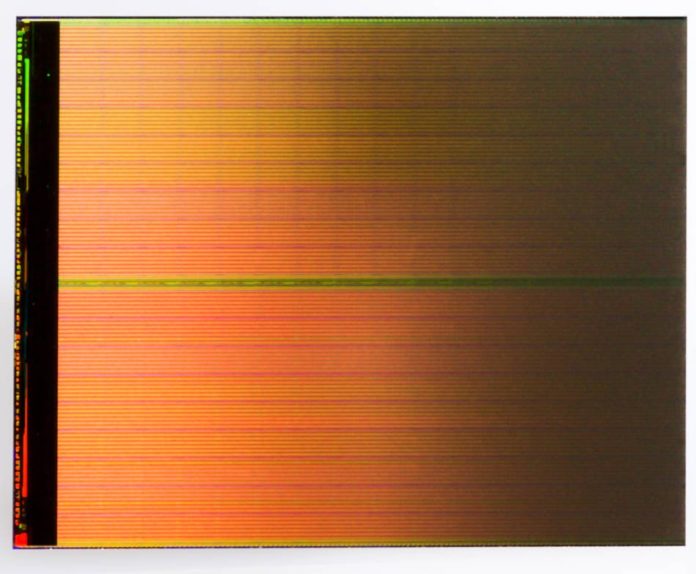

StorONE was founded in 2011, raised $30m in a single funding round in 2012 and promptly went into development purdah for six years. It announced TRU S1 software in 2018. This was described as enterprise-class storage and ran on clusterable Supermicro server hardware with Western Digital 24-slot SSD chassis.

Since then, StorONE has supported a high-performance Optane Flash Array, with Optane and QLC NAND SSDs, as well as a mid-performance Seagate hybrid SSD/HDD array. Crump told us that although all-flash arrays occupy the performance high ground, the Seagate box is a “storage system for the rest of us with a mid-level CPU, affordability and great performance. … 2.5PB for $311,617 is incredible”.

“Seagate originally designed the box for backup and archive. We make it a mainstream, production workload-ready system.” StorONE’s S1 software provides shared flash capacity for all hybrid volumes. Crump said the flash tier is “large and affordable – 100TB, for example – and typically priced 60 per cent less than our competitors.”

The sequential writes to the disk tier provide faster recall performance. Overall the hybrid AP Exos 5U84 system delivers 200,000 random read/write IOPS.

According to Crump, competitor systems slow down above 55 per cent capacity usage, and StorONE doesn’t: “We can run at 90 per cent plus capacity utilisation.” This is because StorONE spent its six-year development purdah completely rewriting and flattening the storage software stack to make it more efficient.

Speeding RAID rebuild

Crump noted two main perceived disadvantages of hybrid flash/disk storage: slow RAID rebuilds, and performance. Failed disk drives with double-digit TB capacities can take days to rebuild in a RAID scheme that writes the recovered data to a hot spare drive, for example. That means a second disk failure could occur during the rebuild and destroy data, forcing a recovery from backups.

StorONE’s vRAID protection feature uses erasure coding and has data and parity metadata striped across drives. There is no need for hot spare drives. A failed disk means that the striped data on that disk has to be recalculated, using erasure coding, and rewritten to the remaining drives in the S1 array.

Crump said: “We read and write data faster. We compute parity faster. It’s the sum of the parts.”

S1 software uses multiple disks for reading and writing data in parallel, and writes sequentially to the target drives. In a 48-drive system, vRAID reads data from the 47 surviving drives simultaneously, calculates parity, and then writes simultaneously to those same 47 drives.
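The general idea of rebuilding from surviving drives rather than from a hot spare can be sketched in a few lines. StorONE has not published vRAID's internals, so the sketch below is an assumption-laden illustration: it uses single XOR parity (the simplest erasure code) with rotating parity placement, and the function names `xor_blocks`, `build_stripes` and `rebuild` are hypothetical. It shows only how a lost chunk is recomputed from the chunks that survive, and leaves out where the recovered chunks are rewritten.

```python
from functools import reduce


def xor_blocks(blocks):
    """XOR a list of equal-length byte strings together, byte by byte."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def build_stripes(data_chunks, n_drives):
    """Lay chunks out as stripes of (n_drives - 1) data chunks plus one
    XOR parity chunk, rotating the parity position from stripe to stripe.
    Each stripe is a dict mapping drive index -> chunk."""
    width = n_drives - 1
    if len(data_chunks) % width != 0:
        raise ValueError("chunk count must be a multiple of n_drives - 1")
    stripes = []
    for s, start in enumerate(range(0, len(data_chunks), width)):
        group = iter(data_chunks[start:start + width])
        parity = xor_blocks(data_chunks[start:start + width])
        parity_drive = s % n_drives
        stripes.append({
            d: parity if d == parity_drive else next(group)
            for d in range(n_drives)
        })
    return stripes


def rebuild(stripes, failed_drive):
    """Recompute every chunk the failed drive held by XOR-ing the chunks
    on all surviving drives in the same stripe."""
    recovered = []
    for stripe in stripes:
        survivors = [chunk for d, chunk in stripe.items() if d != failed_drive]
        recovered.append(xor_blocks(survivors))
    return recovered


# Illustrative data: six 4-byte chunks spread over four drives.
chunks = [bytes([i] * 4) for i in range(1, 7)]
stripes = build_stripes(chunks, n_drives=4)
lost = [stripe[2] for stripe in stripes]      # what drive 2 was holding
rebuilt = rebuild(stripes, failed_drive=2)    # recomputed from drives 0, 1, 3
print(rebuilt == lost)
```

Because every stripe's survivors sit on different drives, the reads for different stripes can proceed in parallel, which is the property the article attributes to vRAID.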

Crump told us: “We have the fastest rebuild in the industry; a 14TB disk was rebuilt in 1 hour and 45 minutes.” This was tested in a dual-node Seagate AP Exos 5U84 system with 70 x 14TB disks and 14 SSDs. The disks were 55 per cent full.
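Those figures imply a rough aggregate rebuild rate, which can be worked out as below. This is back-of-the-envelope arithmetic, not a StorONE measurement. It assumes decimal units (1TB = 1,000GB), and because the article does not say whether only the used 55 per cent or the whole disk was rebuilt, it computes both cases. The per-disk figure further assumes the work is spread evenly across the 69 surviving hard drives.

```python
DISK_TB = 14                           # capacity of the failed disk
FILL_FRACTION = 0.55                   # disks were 55 per cent full
REBUILD_SECONDS = (1 * 60 + 45) * 60   # 1 hour 45 minutes
SURVIVING_DISKS = 70 - 1               # assumption: all other HDDs share the work


def rebuild_rate_gb_per_s(capacity_tb, fraction_rebuilt, seconds):
    """Average data rate needed to rebuild the given fraction of a disk."""
    return capacity_tb * 1000 * fraction_rebuilt / seconds


used_only = rebuild_rate_gb_per_s(DISK_TB, FILL_FRACTION, REBUILD_SECONDS)
whole_disk = rebuild_rate_gb_per_s(DISK_TB, 1.0, REBUILD_SECONDS)
per_disk_mb_per_s = used_only * 1000 / SURVIVING_DISKS

print(f"used data only: {used_only:.2f} GB/s")
print(f"whole disk:     {whole_disk:.2f} GB/s")
print(f"per surviving disk (used data only): {per_disk_mb_per_s:.1f} MB/s")
```

Under the used-data-only assumption, the aggregate works out to a little over 1.2GB/sec, or under 20MB/sec per surviving disk, which is well within what a single hard drive can sustain sequentially.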

Failed SSDs can be rebuilt in single-digit minutes. The fast rebuilds minimise a customer’s vulnerability to data loss due to a second drive failure overlapping the rebuild from a first drive failure.
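The effect of a shorter rebuild window on exposure to a second failure can be estimated with a simple model. Everything in the sketch below beyond the 1 hour 45 minute rebuild time and the 70-disk configuration is an assumption made for illustration: the 1 per cent annualised failure rate is hypothetical, drive failures are treated as independent with a constant rate, and the three-day comparison figure simply stands in for a rebuild that takes "days".

```python
import math

HOURS_PER_YEAR = 8760
ASSUMED_AFR = 0.01          # hypothetical annualised failure rate per drive
SURVIVING_DISKS = 69        # 70 HDDs minus the failed one


def p_second_failure(window_hours, n_drives=SURVIVING_DISKS, afr=ASSUMED_AFR):
    """Probability that at least one of n_drives fails within the window,
    assuming independent drives with a constant (exponential) failure rate."""
    rate_per_hour = -math.log(1.0 - afr) / HOURS_PER_YEAR
    return -math.expm1(-n_drives * rate_per_hour * window_hours)


fast = p_second_failure(1.75)      # 1 hour 45 minutes, from the article
slow = p_second_failure(3 * 24)    # illustrative three-day rebuild

print(f"fast rebuild window: {fast:.6f}")
print(f"slow rebuild window: {slow:.6f}")
print(f"exposure ratio:      {slow / fast:.1f}x")
```

For windows this short the probability is almost proportional to the window length, so the ratio between the two cases is driven mainly by the ratio of rebuild times rather than by the assumed failure rate.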

Crump said StorONE has continued hiring during the pandemic, and that CEO Gal Naor’s ambition is to build the first profitable data storage company to emerge in the last 12 years.