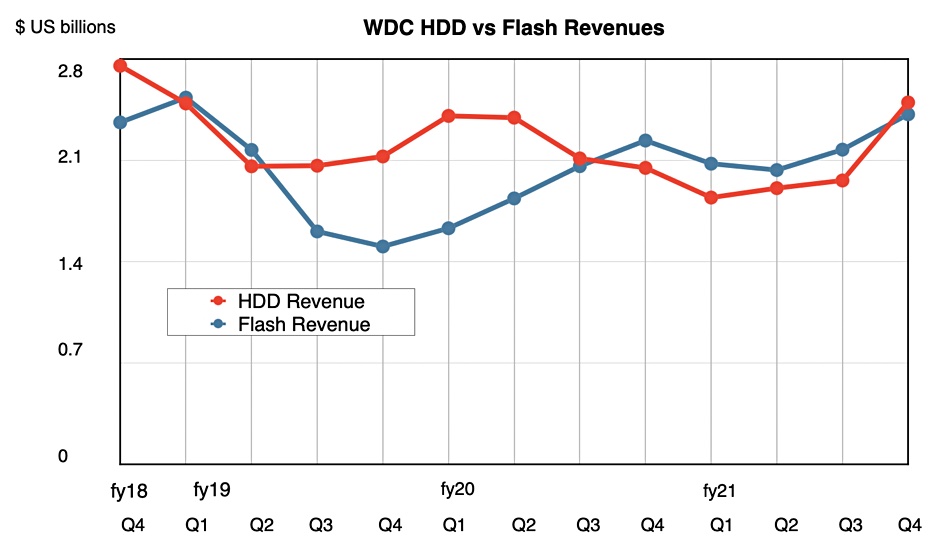

18TB nearline disk drives led Western Digital’s revenue charge in its beat-and-raise fiscal 2021 fourth quarter, with gross margin improvements helping profits more than triple year-on-year.

Revenues were $4.9 billion in the quarter ended 2 July, up 15 per cent on a year ago, with profits 216 per cent higher than a year ago at $622 million. Full-year revenues were $16.9 billion, an increase of just one per cent over fiscal 2020. That still produced profits of $821 million, a fine turnaround from last year’s $250 million loss.

CEO David Goeckeler enthused in his statement: “I am extremely proud of the outstanding execution our team exhibited as we achieved another quarter of strong revenue, gross margin and EPS results above expectations.” He also mentioned WD’s “unique ability to address two very large and growing markets” — referring to flash and disk drives.

In fact, believe it or not, disk drive revenues overtook flash revenues this quarter:

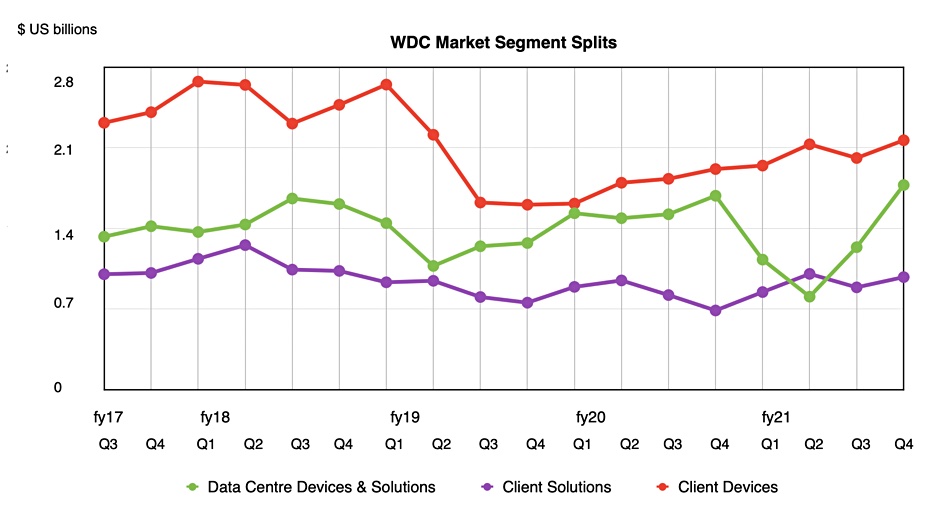

Sector views

The increase was led by WD’s data centre business which, at $1.7 billion, grew faster than its client solutions ($977M) and client devices ($2.1B) businesses, as a second chart shows:

The client devices sector saw broad-based strength across nearly every product category, with better-than-expected demand for notebook and desktop HDDs as well as flash-based solutions. It also saw robust demand for gaming, smart video, automotive and industrial applications.

In the data center devices & solutions segment, WD shipped a record of more than 104EB of capacity enterprise hard drives, a 49 per cent sequential increase. The 18TB energy-assisted hard drive was the leading capacity point, accounting for nearly half of its capacity enterprise shipments. Enterprise SSD demand strengthened, with stronger-than-expected sales of NVMe SSDs, as WD completed a qualification at another cloud titan.

Client solutions experienced stronger-than-seasonal demand, resulting in sequential growth for both HDD and flash-based solutions.

WD had ceded nearline high-capacity disk market share to Seagate over the last few quarters, and it now appears to be regaining the lost ground. Wells Fargo analyst Aaron Rakers said Seagate shipped 101.4EB of nearline capacity and Toshiba around 34.2EB, which makes WD, with more than 104EB, the leading nearline shipper in the quarter.

Financial summary:

- Gross margin — 31.8 per cent vs 26.4 per cent in prior quarter;

- EPS — $1.97 vs $0.63 in prior quarter;

- Operating expenses — $790M vs $713M in prior year;

- Operating activity cash flow — $994M vs $172M a year ago;

- Free cash flow — $792M vs $261M a year ago;

- Cash and cash equivalents — $3.4 billion.

Earnings call

In the earnings call Goeckeler confirmed the high-cap disk drive recovery: “The [revenue] upside was primarily driven by record demand for our capacity enterprise hard drives.”

He amplified this by saying: “We had our highest organic sequential revenue growth in the last decade, driven by the successful ramp of our 18-terabyte energy-assisted hard drive, growing cloud demand, a recovery in enterprise spending and, to a lesser extent, cryptocurrency driven by Chia.”

The gross margin increase was helped by lower costs. CFO Bob Eulau said: “We had very good cost takedowns in Q4 on both the hard drive side and on the flash side.”

Guidance for the next quarter is tempered by concerns about the COVID pandemic’s effect on supply chains in Asia, so the forecast sequential rise is modest. WD expects revenues between $4.9 billion and $5.1 billion. The $5 billion midpoint compares to $3.9 billion a year ago, a 28 per cent rise. Let the good year-on-year comparisons roll.