WEBINAR: We all want flexible, cheap storage in the face of fast-growing data volumes. Blink and there’s more. A hundred thousand times more every second according to some estimates.

So making sure that data storage works efficiently for every company is central to any enterprise IT strategy worth its salt. But doing that requires a comprehensive understanding of what’s required, coupled with the right storage solution to meet a specific business need.

StorPool says it has a fresh, ground-breaking approach to the conundrum that scopes the newest developments in block, file and object storage. The company has just published its ‘2024 Block Data Storage Buyer’s Guide’, a free reference resource designed to provide clear, practical, and proven guidance for IT practitioners and business leaders with the responsibility for deploying all types of enterprise-grade data storage solutions.

Find out more by joining our webinar on 25th January 4pm GMT/11am ET/8am PT. You’ll hear StorPool experts Alex Ivanov (Product Lead) and Marc Staimer (President, Dragon Slayer Consulting) explore the key challenges for organizations looking to upgrade their block data storage solutions in 2024, and share their insight into how to tackle real-world storage problems.

Alex and Marc will advise on how to avoid common pitfalls when devising an effective storage strategy and empower data storage buyers to compare and contrast different vendor block data storage systems while building a suitable infrastructure able to handle any amount of data growth on the horizon.

Deluged by data in 2024? This is one you really should take a look at. Sign up to watch the webinar here and we’ll send you a reminder when it’s time to log on.

Sponsored by StorPool.

Effective storage strategies for a new year

Storage news ticker – January 12

Google Cloud has eliminated data egress fees for customers that quit its service. Amit Zavery, Head of Platform for Google Cloud, blogged: “Google Cloud customers who wish to stop using Google Cloud and migrate their data to another cloud provider and/or on premises, can take advantage of free network data transfer to migrate their data out of Google Cloud.” Google Cloud customers still pay data egress (data transfer costs) when, for example, “data moves from a Cloud Storage bucket to the Internet.”

…

InfluxData, which has developed the time series database InfluxDB, has achieved AWS Data and Analytics Competency status in the Data Analytics Platforms and NoSQL/New SQL categories. InfluxDB connects with AWS services for developers to collect time series data, create data pipelines, and analyze data in real-time.

…

MAN Energy Solutions, a solutions provider in the maritime, power, and industrial sectors, has integrated the InfluxData real-time data analytics platform across its connected equipment operations. InfluxDB Cloud is the core of its MAN CEON cloud platform, a pivotal component in the company’s digital service and sustainability strategy to achieve fuel reductions in marine and power engines through the use of real-time data.

…

Kinetica, which supplies a real-time database for analytics and generative AI, announced the availability of a Quick Start for deploying natural language to SQL on enterprise data. This is for organizations that want ad-hoc data analysis on real-time, structured data using an LLM that accurately and securely converts natural language to SQL and returns quick, conversational answers. This offering makes it fast and easy to load structured data, optimize the SQL-GPT LLM, and begin asking questions of the data using natural language. This announcement follows a series of GenAI developments which began last May when Kinetica became the first analytic database to incorporate natural language into SQL.

…

Object First, the provider of Ootbi (Out-of-the-Box-Immutability), the ransomware-proof backup storage appliance purpose-built for Veeam, announced its partnership with Prodatix, a Veeam Certified Architect company specializing in data management, to offer Ootbi as their primary backup storage solution for Veeam.

…

Phison told us what it’s demonstrating at CES in Las Vegas:

- Phison PS5031-E31T: Low-Power PCIe 5.0 controller with a 4-channel DRAMless design offering up to 10.8 GBps performance and 8 TB maximum capacity

- Phison PS5026-E26 Max14um: For increased capacity and accelerated performance, the PCIe 5.0 E26 controller with I/O+ Technology is the most powerful device in its class, with 14 GBps+ sustained sequential read performance and over 1,000 MBps in all PCMark 10 and 3DMark Storage Tests – a world first!

- Phison PS5027-E27T: The next generation in low-power, high-performance PCIe Gen4 SSD in a 2230 form factor optimized to reduce game load times in portable gaming devices like Steam Deck, Ally, and Legion Go

- Phison PS2251-21 (U21): The world’s first USB4 single-chip solution suited for small portable devices in conventional and unconventional form factors with up to 4 GBps performance in the palm of your hand.

…

The SNIA’s Networking Storage Forum is presenting on the topic “Everything You Wanted to Know About Throughput, IOPs, and Latency But Were Too Proud to Ask” on February 7 at 10am PT. Collectively, these three terms are often referred to as storage performance metrics. Performance can be defined as the effectiveness of a storage system to address I/O needs of an application or workload. Different application workloads have different I/O patterns, and with that arises different bottlenecks so there is no “one-size fits all” in storage systems. These storage performance metrics help with storage solution design and selection based on application/workload demands. Register here.

…

Block storage startup Volumez has a customer and partnership relationship with Anodot, which produces an AI-driven business monitoring product. Anodot is using Volumez to deliver persistent block storage on AWS for its large farm of PostgreSQL instances. It is also incorporating the Volumez SaaS service into its product portfolio and distribution channels.

…

Software RAID supplier Xinnor and E4 Computer Engineering, an HPC European System Integrator, have established a partnership to deliver enhanced storage capabilities to customers who require fast storage performance to run high performance computing simulations and Artificial Intelligence workflows. E4 has integrated xiRAID, Xinnor’s software RAID designed for modern NVMe SSDs, into its storage offerings, which are based on the BeeGFS parallel file system.

NAND in 2023: Layer counts rise and QLC advances

It was a roller coaster year for NAND flash storage as shipments sank then recovered as a cyclical downturn reversed. Suppliers increased cost effectiveness by extending 3D layer counts and using QLC flash, but looking at PLC and other extreme bits-per-cell counts proved disappointing.

The year started with NAND fabricators experiencing depressed revenues as a supply glut met muted demand. They cut production then saw demand start rising again as customers used up their existing inventory.

NAND foundry operators cut $/GB production costs by using more layers in their 3D NAND to build higher capacity dies. We saw a steady flow of layer count increases during the year, starting at the 218-layer level.

- March: SK hynix presented a >300-layer NAND paper at a conference, Kioxia and Western Digital have 218-layer NAND

- May: Micron builds 232-layer QLC SSD

- August: SK hynix introduces 321-layer NAND, Samsung has 300-layer NAND coming with 430 layers after that

- November: YMTC first to ship 232-layer QLC NAND

- December: Micron debuts 232-layer workstation SSD

All the suppliers moved to have the NAND control logic fabricated separately and added to the bottom or top of the die.

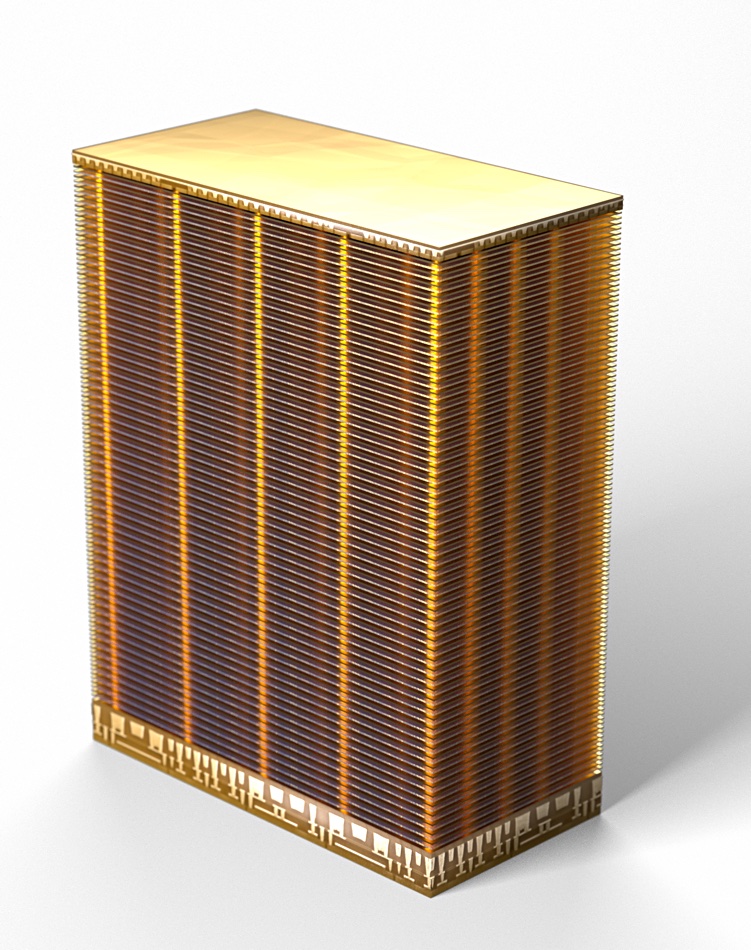

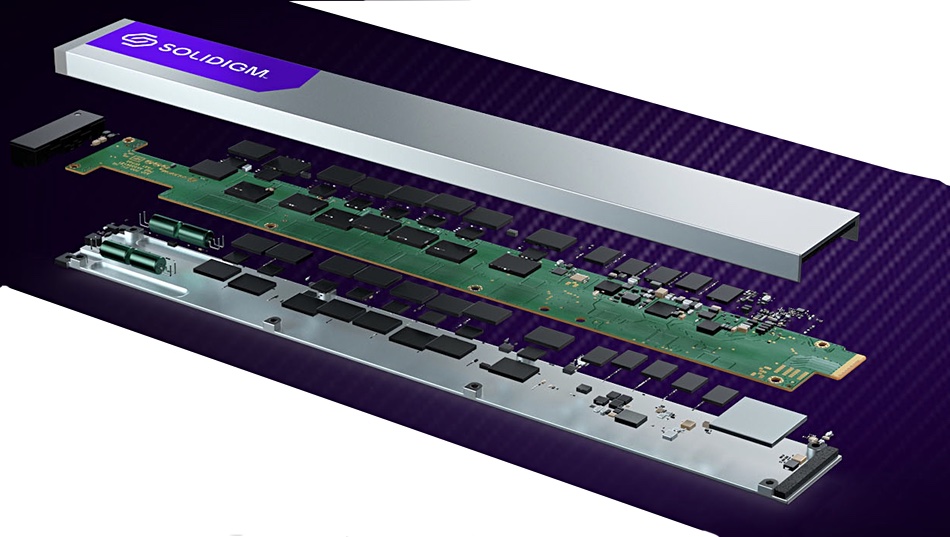

The standard SSD used TLC (3 bits/cell) flash but suppliers such as SK hynix subsidiary Solidigm and YMTC focused on providing QLC (4 bits/cell) NAND, and compensating for its lower write cycle endurance with over-provisioning and better write cycle management.

Storage array suppliers such as DDN, NetApp, and VAST Data took advantage of QLC flash to offer higher all-flash array capacities at a lower cost/TB and so were better able to compete with disk drive arrays.

There were no supplier announcements about penta-level cell (PLC, 5 bits/cell) flash and beyond in 2023, following 2022 forays. These bits-per-cell counts seem utterly impractical as cryogenic cooling is needed for the 6 and 7 bits/cell tech, and write endurance and read speed would both be increasingly low.

SSD capacities rose in the year, exceeding disk drive capacities, with Solidigm leading the way. It introduced a 61.44 TB capacity SSD, the D5-P5336, using QLC flash. Other SSD suppliers are shipping drives with maximum capacities around 30 TB. These are still larger than disk drives, which reached 24 TB with conventional recording and 28 TB with slower write shingled recording technology by year end, courtesy of Western Digital. This year we’ll probably see 60+ TB SSDs from other suppliers as well as Solidigm.

The EDSFF form factors started to make an appearance but not to the extent of killing off the classic M.2 (gumstick) and U.2 (2.5-inch bay) ones.

One piece of flash industry news that did not happen was Western Digital buying or merging with its NAND joint venture partner Kioxia. SK hynix, a Kioxia investor via Bain Consortium, killed that idea off by objecting to it.

Western Digital is now going to separate its disk drive and NAND fab/SSD businesses this year.

China’s YMTC managed to overcome US technology export obstacles and produce 232-layer NAND in the year. It would not be surprising if it announced 300+ layer technology this year, along with Kioxia and Western Digital, which are both currently at the 218-layer level with their BiCS 8 technology. These three suppliers lag Micron, Samsung, and SK hynix. We also expect Kioxia and Western Digital to announce a BiCS 9 NAND initiative this year and reach the 300-layer level.

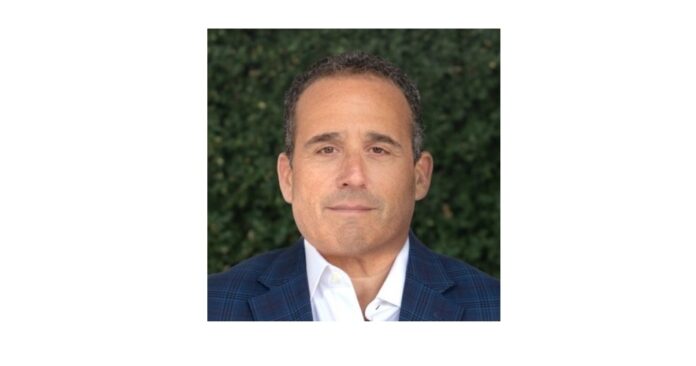

Veeam lays off 300 despite market share growth

Data protection outfit Veeam has reportedly laid off around 300 employees, according to one caught up in the redundancies.

In a post on LinkedIn, Denise Jongenelen, a senior campaign account manager, said she and “roughly 300 of my colleagues” were “eliminated” as part of an “organizational restructure”. She thanked the company for the “relationships, professional growth and experiences” during her five-plus years on board.

B&F asked Veeam to comment on the number of redundancies, and chief operating officer Matthew Bishop sent us a statement:

“2023 was Veeam’s best ever year in terms of market share – now number 1 in the global market – growth and profitability. Like any successful company, during annual planning Veeam makes decisions to prioritize investment areas reflecting the evolution of the business and the market. We don’t publicly disclose confidential business plans but we can share we’re ramping up hiring in some areas, transitioning some roles to new teams, and retiring other roles. Our primary focus today is providing the best possible support to those Veeam employees impacted by the changes and assisting them to find their next career opportunity.”

The company laid off 200 employees in March last year. After that adjustment, Veeam’s total staff amounted to more than 5,000 employees.

Veeam, which has more than 450,000 customers, was bought by Insight Partners for $5 billion in 2020. It operates as an on-premises application and system backup supplier, is moving into SaaS application protection via a deal with Cirrus for Microsoft 365 and Azure, and is investing in startup Alcion. Veeam also protects customers from ransomware and other cyber threats.

The business competes with traditional data protection players such as Commvault, Veritas, Rubrik, and Cohesity, as well as Druva and HYCU, which are active in the Backup-as-a-Service and SaaS application protection space.

Commvault is publicly owned whereas Cohesity, Druva, HYCU, Rubrik, and Veeam are not. There is a logjam building up as the backers of these companies look to get a return on the cash they invested. A Veeam IPO could deliver a good exit for Insight Partners and the company’s financial situation would need to look impressive, so trimming expenses is perhaps not surprising.

Where did the VCs invest storage startup dollars in 2023? (Clue: AI)

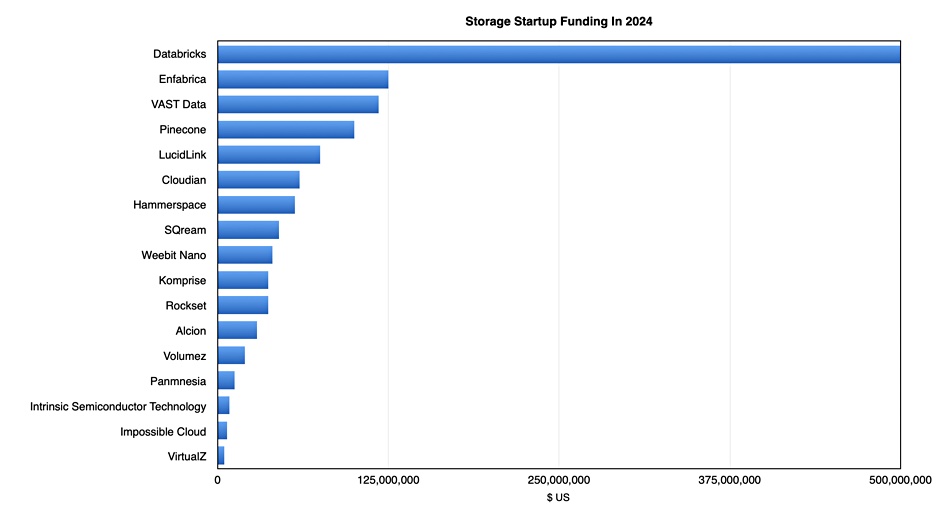

Venture Capitalists invested $1.3 billion in 17 storage startups in 2023, yet the bulk of that cash injection went to just four businesses that are weaving AI into their product portfolio.

Update. Volumez fund raise corrected from $12.5 million to $20 million. 12 January 2024.

Some 38.6 percent went to Databricks in a huge $500 million infusion, its ninth or I-round of VC funding, taking its total raised to more than $4 billion. Three other companies raised $100 million or more: Enfabrica – $125 million, VAST Data – $118 million, and Pinecone – $100 million.

Notice the common factor:

- Databricks is a data lakehouse supplier eagerly chasing the gen AI (generative Artificial Intelligence) data storage and supply business.

- Enfabrica is an AI interconnect chip startup, developing an Accelerated Compute Fabric switch chip to combine and bridge PCIe/CXL and Ethernet fabrics.

- VAST Data provides fast and scalable all-flash storage roughly at the cost of disk, with an AI data management and execution superstructure being layered onto it.

- Pinecone is developing a vector database for AI applications.

That means $843 million of the entire year’s storage startup funding went into just four businesses, all focused on AI: three software startups and one hardware business.
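The top-four arithmetic can be checked with a short sketch. The round sizes are the ones quoted above; the total is assumed to be the rounded $1.3 billion headline figure, so shares computed this way may differ slightly from percentages based on the unrounded total.

```python
# Round sizes in $ million, as quoted in the article.
top_four = {
    "Databricks": 500.0,
    "Enfabrica": 125.0,
    "VAST Data": 118.0,
    "Pinecone": 100.0,
}

# Assumption: the rounded $1.3 billion headline total, not the exact sum.
TOTAL_2023_MILLION = 1300.0

top_four_total = sum(top_four.values())
top_four_share = top_four_total / TOTAL_2023_MILLION * 100

print(f"Top four raised ${top_four_total:.0f} million")
print(f"That is about {top_four_share:.1f} percent of the rounded total")
```

On these assumptions the four AI-focused businesses account for roughly two thirds of the year’s recorded funding.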

And the rest

The fifth largest recipient was LucidLink, which is developing large file collaboration software technology, and which pulled in a C-round worth $75 million.

Twelve year-old object storage supplier Cloudian raised $60 million in a late-stage F-round, five years after a $94 million E-round.

David Flynn’s data orchestrating startup Hammerspace jacked up its funding total with $56.7 million in the year as the business rolled out a slew of business development announcements. These included an AI reference architecture.

GPU-focused relational database supplier SQream raised $45 million in a C-round to help its AI/ML-focused software business.

Resistive RAM storage hardware technology developer Weebit Nano announced a $40 million injection to boost HW development and trade.

Data management specialist Komprise is the equal tenth startup in our list, adding $37 million to its coffers in a D-round, with database developer Rockset also enlarging its bank account by $37 million, with a B-round extension, supported by $7 million in debt financing.

Alcion’s investors gave it $29 million in two stages; an $8 million seed round followed a few months later by a $21 million A-round. It is developing backup software for SaaS applications. Alcion’s founders previously founded the Veeam-acquired Kasten, a Kubernetes-orchestrated container backup company.

Cloud-native and cloud-orchestrated block storage software startup Volumez raised $20 million in an A-round. It has been quiet for a few months but announced a customer and partnership relationship with Anodot, an AI-powered business and cloud optimization supplier in December.

Korean CXL hardware startup Panmnesia raised $12.5 million, and is only the third hardware-based startup in our group, along with Enfabrica and Weebit Nano. Intrinsic Semiconductor Technology is a fourth HW startup, and attracted $8.7 million in the form of a VC round and a grant. It is developing a ReRAM chip, like Weebit.

That totals $213.1 million of hardware-focused startup investment, just 16.7 percent of the total invested in storage startups in 2023. Truly software is eating hardware in the storage startup world.

Germany’s Impossible Cloud raised $7 million in a seed round for its decentralized Web3 storage, while IBM mainframe data access startup VirtualZ had $4.9 million given to it by seed round investors.

The total storage startup investment we recorded for 2023, $1.3 billion, is less than half of the $3.1 billion invested in the sector in 2022. Investing fashion moves on and AI is all the rage now. But $1.3 billion isn’t small change. Also, storage is a pretty mature industry, as shown by the relatively small amount of funding going to array and chip-level technology. The bulk of the money is going into the storage AI interface area. We’ll see if that continues throughout 2024.

StorMagic says it’s happy living on the edge

StorMagic sells its virtual SAN product to edge IT sites and SMBs, not enterprise datacenters, and has no ambitions to go beyond these particular on-premises markets.

Although the virtual SAN technology coming from this software-defined storage supplier is always part of a hyperconverged infrastructure (HCI) setup, StorMagic does not class itself as an HCI supplier. That’s because the company does not supply a hypervisor, and its SvSAN software works with the vSphere, Hyper-V, and KVM hypervisors. Its product is an HCI component, not an HCI system.

Bruce Kornfeld, chief marketing and product officer, briefed Blocks & Files on StorMagic’s market situation and strategies. The company was co-founded in Bristol, England, by CEO Hans O’Sullivan and CTO Chris Farey in 2011. O’Sullivan resigned in 2020 and Dan Beer became the CEO. Kornfeld told us that SvSAN supports x86 servers and only needs two of them to work as a high-availability clustered block storage system at edge sites, employing a remote witness to help with failover. The two servers can be mismatched in performance and configuration; SvSAN will still work.

The remote witness is a third server system-level application that selects one of the pair of servers at an edge site to function as the main server when the network link between the two edge site servers is broken. StorMagic can support thousands of edge sites and its minimal server hardware requirements increasingly help lower costs as edge site numbers grow. Kornfeld said: “With StorMagic, it’s just two servers, one virtual CPU, one gigabyte of RAM, one gigabit Ethernet, and away you go.”
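The witness’s tie-break role can be sketched as follows. This is a generic two-node-plus-witness illustration, not StorMagic’s implementation. The function name, node names, and the `preferred` policy are all hypothetical.

```python
def nodes_allowed_to_serve(nodes, link_up, witness_sees, preferred):
    """Return the set of node names that may keep serving I/O.

    nodes        -- names of the two edge-site servers
    link_up      -- True if the two servers can still reach each other
    witness_sees -- node names the remote witness can currently reach
    preferred    -- node favoured when the witness reaches both
                    (hypothetical policy, assumed for illustration)
    """
    if link_up:
        # Servers are in contact, so no tie-break is needed.
        return set(nodes)
    candidates = set(nodes) & set(witness_sees)
    if not candidates:
        # Nobody can prove it is the survivor; serving could split the data.
        return set()
    if preferred in candidates:
        return {preferred}
    # Only the non-preferred node is visible to the witness.
    return {sorted(candidates)[0]}


site = ("node-a", "node-b")
print(nodes_allowed_to_serve(site, False, {"node-a", "node-b"}, "node-a"))
```

The point of the design is that at most one server keeps serving once the inter-server link is broken, which avoids the two copies diverging.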

SvSAN software is mature and proven, with StorMagic having more than 2,000 customers, with tens of thousands of sites, in more than 70 countries worldwide. It competes with VMware’s vSAN, Nutanix, and Scale Computing, which also sells hardware and software to edge sites and SMBs.

Kornfeld said of competition with Scale Computing: “[There’s] some, but they’re not the biggest competitor that we still have in the market [which] is VMware vSAN. We run into Scale Computing; they do a really good job, they’ve got a great solution there. What they’ve done is they’ve built their own hypervisor, which is good for many customers, because they just want their own thing and not have to worry about it. But a lot of customers aren’t ready to make that jump to learn something new. They want to be integrated into the environment they have. And we support Microsoft, VMware, and an open KVM as well. We tell our customers whatever hypervisor you want, we’re going to support you with a rock-solid hyperconverged storage solution.”

Competition with vSAN is being helped by Broadcom’s acquisition of VMware and its channel changes. Kornfeld told us: “With what’s happening with VMware and Broadcom, we’re starting to see even more desire from customers to use non-VMware hypervisors. So we think Hyper-V as well as KVM solutions will start to see more play.

“There are thousands and thousands of VARs around the world that sell to SMBs that now won’t have Broadcom channel partner status. So we’re getting a lot of inquiries from them.”

A major issue with Broadcom concerns VMware licensing. Kornfeld said: “They did a cold, hard stop on perpetual licensing … They’re forcing everyone to subscription. And we have a perpetual model that customers love. It’s low cost, you pay for the software once, you pay us annually for some support.

“We have a very low cost, perpetual license model,” he said. “It starts at $1,500 … It’s a great low-cost way for VARs to build solutions.”

We asked Kornfeld about support for Arm-based server hardware, and he said: “Our software is portable to Arm. And that is something that we debate here and there because at the edge … Arm is really starting to gain hold. But that’s something we haven’t tackled yet. That’s on the future to-do list.”

AI at the edge is not one of those customer requirements that StorMagic needs to support directly. Kornfeld said: “We are seeing AI driving significantly more data at the edge and at SMB sites … Many customers can’t wait to send data from the edge to the cloud to go and run analytics [there] and then sending answers back to the edge. We’re seeing it for sure. But our software doesn’t have to change to support it. They can put a GPU in any server they want. They can run any application, any analytics application they choose, whatever vertical market they’re in. And our product still works.”

However, AI could be used inside the SvSAN product, as Kornfeld revealed: “Without cornering me on release dates, I’ll just say that is an area that we’re looking at inside of our product, right?”

Our impression is that StorMagic’s main current marketing activity is capitalizing on Broadcom’s enterprise and datacenter focus for VMware and its channel reorganization. It can realistically hope for a sales boost from refugee vSphere edge and SMB channel partners, and to expand its worldwide sales footprint. Broadcom’s loss might just be StorMagic’s gain.

Storage news ticker – January 10

Data at Rest (DAR) storage systems provider CDSG, with its DIGISTOR self-encrypting SSD, says the US’s National Security Agency (NSA) has certified its Citadel C Series Pre-Boot Authentication (PBA), powered by Cigent, which is now on the Commercial Solutions for Classified (CSfC) storage component list. The CSfC-certified drive provides a full pre-boot single layer of security to ensure sensitive data cannot be accessed, even if adversaries have physical access to the systems or media. The DIGISTOR PBA product line is now streamlined to focus on Citadel C Series while adding the Citadel C-PBA self-encrypting drive to its lineup.

…

Databricks and MIT Technology Review released a report looking at data intelligence and generative AI priorities across industries, including financial services, retail, and manufacturing. “Bringing breakthrough data intelligence to industries” reveals technology leaders’ (CIOs, CTOs, CDOs) generative AI adoption plans, challenges, and needs.

- Personalization and customer experience is the top use case for generative AI in the UK across industries.

- The UK leads the pack in EMEA when it comes to taking advantage of streaming data for real-time analytics, with 70 percent of respondents deeming this “important” compared to 67 percent across EMEA.

- Globally, energy and telecoms organizations are the fastest in shifting to a platform that enables the adoption of emerging technologies. 73 percent of respondents deem this important in the next two years, compared to 60 percent in healthcare.

- Financial services organizations are the most keen on adopting open source technologies to take advantage of the latest innovations, with 82 percent stating that this will be a priority in the next two years.

…

Wells Fargo analyst Aaron Rakers says Dell has started to see increased signs of positive pull-through for unstructured storage and servers. He says Dell is not yet calling a bottom/recovery in traditional server demand, and the company does not believe traditional workloads are migrating to AI-enabled servers. Rakers’ industry conversations lead him to continue to anticipate increasing/materializing signs of a recovery in traditional server spend as we move past Q1 2024.

…

Data lakehouse supplier Dremio announced a partnership with Carahsoft Technology Corp, the Trusted Government IT Solutions Provider. Carahsoft will serve as Dremio’s Master Government Aggregator, forming the company’s complete cloud and software portfolio for Government, Defense, Intelligence, and Education. The service is available through Carahsoft’s reseller partners and NASA Solutions for Enterprise-Wide Procurement (SEWP), Information Technology Enterprise Solutions – Software 2 (ITES-SW2), National Association of State Procurement Officials (NASPO), ValuePoint, National Cooperative Purchasing Alliance (NCPA), and OMNIA Partners contracts.

…

HPE is acquiring Juniper Networks in an all-cash $14 billion deal, with the transaction closing in late 2024 or early 2025. Analyst Aaron Rakers tells subscribers that, with Juniper, HPE’s portfolio will be weighted toward higher-growth, higher-margin businesses with large free cash flow potential. The Networking segment will increase from 18 percent of HPE’s total revenue to 31 percent and contribute up to 56 percent of HPE’s total EBIT. The acquisition brings complementary capabilities to deliver next-generation AI-native networking thanks to Juniper’s Mist AI portfolio (complement to Aruba Central/Intelligent Edge strategy). The acquisition expands HPE’s total addressable market into datacenter networking, firewalls, and routers, and grows Juniper’s footprint in datacenter and cloud providers.

…

Danish SaaS data protection company Keepit has secured $40 million in refinancing from HSBC Innovation Banking in partnership with the Export and Investment Fund of Denmark (EIFO). The debt facilities have been instrumental in fortifying the company’s offerings for future sustainability and mean the company can continue its growth strategy at full throttle. Keepit will use the new capital to sustain the current growth trajectory. It also frees up capital for further investments in international expansion, hiring, business operations, and product development as Keepit continues to scale and build out its platform for SaaS data protection. This follows Keepit’s $30 million Series A funding round in 2020, and a total of $22 million debt financing in 2022.

…

Nodeum has a freely downloadable white paper looking at its Data Mover for unstructured data workloads in HPC. Data Mover is designed to control any data movements including task execution, filtering, priority management, workload manager integration, data integrity, storage connectivity, hook service, and user management and interfaces. Get the paper here.

…

American workwear brand Carhartt has consolidated multiple legacy backup tools with Rubrik Security Cloud to try to improve cyber resilience. After moving to Rubrik, Carhartt says it realized more than 50 percent in monthly cost savings, while significantly strengthening its data security capabilities. Prior to Rubrik, after an upgrade of a critical application failed, Carhartt admins discovered that application data hadn’t been backed up, forcing the team to reconstruct more than two weeks of data manually. The Carhartt team also discovered malware in backups from its legacy tools, resulting in weeks of searching data sets to manually complete the investigation. Carhartt now uses numerous Rubrik products including Anomaly Detection, Sensitive Data Monitoring, Threat Hunting, as well as its integration with Microsoft Sentinel. Read the Carhartt case study to find out more.

…

File system supplier WekaIO has received certification for an Nvidia DGX BasePOD reference architecture built on DGX H100 GPUs. It says this advances its journey to DGX SuperPOD certification. Nilesh Patel, chief product officer at WekaIO, said: “With our DGX BasePOD certification completed, our DGX SuperPOD certification is now in progress. With that will come an exciting new deployment option for WEKA Data Platform customers. Watch this space.”

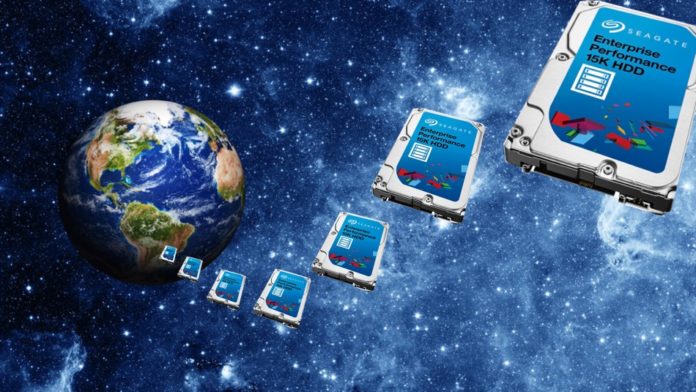

Nearline disk drive market showing signs of recovery

A sequential rise in nearline disk units and capacity shipped signals a market recovery, according to Wells Fargo analyst Aaron Rakers.

Analyst Trendfocus issued preliminary shipment numbers for 2023’s fourth quarter with between 27.6 million and 29.7 million HDDs delivered, flat sequentially and down 21 percent year-on-year. Total capacity shipped declined 6 percent year-over-year to 212 EB but increased 8 percent quarter-on-quarter.

That increase did not come from the desktop and consumer electronics (CE) sector, where unit shipments of 17-18 million slumped 28 percent from 24.1 million a year ago and declined 6 percent from the 18.5 million shipped in the third quarter of 2023. The fourth quarter total was divided between 3.5-inch desktop and CE drives, with around 10 million units, and approximately 8 million 2.5-inch mobile and CE drives.

Trendfocus estimates that there were almost 10 million nearline drives – 3.5-inch mass capacity devices – shipped in the quarter. Rakers calculated this to mean a 6 percent year-on-year decrease but a 12 percent quarter-on-quarter increase. Trendfocus’s nearline capacity shipped estimate for the quarter was around 159 EB, up 19 percent sequentially and down only 2 percent year-on-year.

Nearline capacity shipped declined 51 percent year-over-year in the second quarter and 41 percent in the third quarter, so a mere 2 percent decline in the fourth quarter is a significant change. It looks like nearline capacity shipped is going to start rising more strongly, with unit shipments following in its wake.
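A rough sketch of what these figures imply per drive follows. It treats “almost 10 million” as exactly 10 million units and uses decimal units (1 EB = 1,000,000 TB). Both are assumptions, so the results are approximations, not Trendfocus numbers.

```python
# Fourth-quarter 2023 nearline figures quoted in the article.
nearline_units_million = 10.0   # assumption: "almost 10 million" taken as 10
nearline_capacity_eb = 159.0
units_growth_qoq = 0.12         # 12 percent quarter-on-quarter
capacity_growth_qoq = 0.19      # 19 percent quarter-on-quarter

TB_PER_EB = 1_000_000           # decimal units

# Average capacity of a nearline drive shipped in the quarter.
avg_tb_per_drive = (nearline_capacity_eb * TB_PER_EB) / (nearline_units_million * 1_000_000)

# If capacity grows faster than units, average drive size must have grown.
avg_capacity_growth = (1 + capacity_growth_qoq) / (1 + units_growth_qoq) - 1

print(f"Average nearline drive: about {avg_tb_per_drive:.1f} TB")
print(f"Implied growth in average drive capacity: {avg_capacity_growth * 100:.1f} percent Q/Q")
```

Capacity shipped growing faster than units shipped is consistent with buyers moving to higher-capacity drives.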

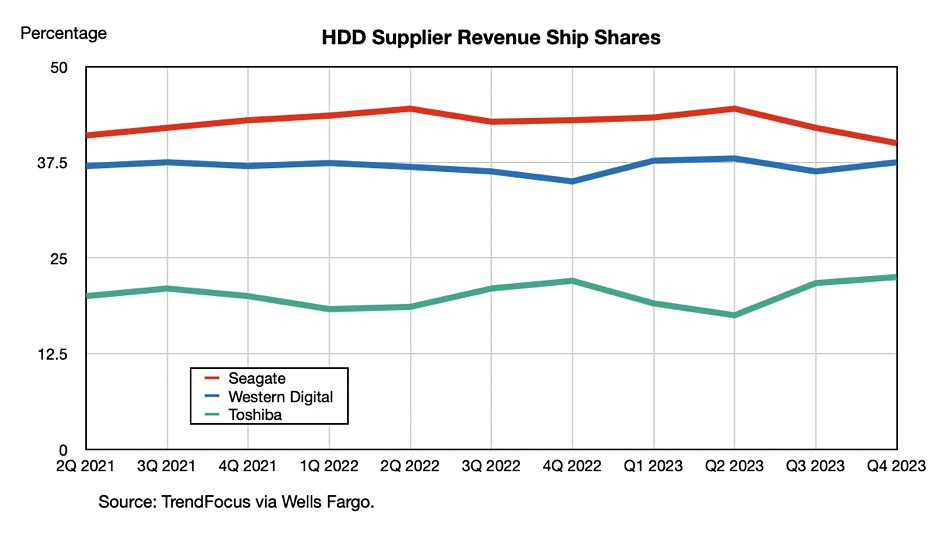

The unit shipment shares between the three HDD suppliers in the fourth quarter were:

- Seagate: 40 percent, down 2 percent Q/Q

- Western Digital: 37.5 percent, up 1.2 percent Q/Q

- Toshiba: 22.5 percent, up 0.8 percent Q/Q

A unit shipment share chart shows leader Seagate slipping in the past two quarters:

Juniper Networks kit that HPE will get if $14B buy clears

HPE will inherit a raft of Ethernet storage networking kit and more should the proposed purchase of Juniper Networks get the approval of shareholders and regulators. Our sister site The Register covered the news earlier today and here we look at the networking specialist’s portfolio.

Juniper supplies data center switches that support NVMe/RoCE v2 workloads. There are two Juniper switch product lines: the EX campus and branch switches and the QFX data center switches. Juniper also has Apstra network management software.

The EX line consists of:

- EX2300 – 1RU

- EX2300 Multigigabit – 1RU

- EX2300-C – 1RU

- EX3400 – 1RU

- EX4100

- EX4100 Multigigabit – 1RU

- EX4100F – 1RU

- EX4300 – 1RU

- EX4300 Multigigabit – 1RU

- EX4400 – 1RU

- EX4400 Multigigabit – 1RU

- EX4400-24X – 1RU

- EX4600 – 1RU

- EX4650 – 1RU

- EX9200 – 5, 8 or 16RU

- EX9250 – 1 or 3RU

The QFX series switches have 100G and 400G speeds and support Priority Flow Control (PFC) and Explicit Congestion Notification (ECN). NVMe/TCP is supported by Juniper’s EVPN-VXLAN offering. Juniper’s Apstra network management product can manage and provision VXLAN tunnels for storage traffic with telemetry data signalling IO throughput.

The QFX line looks like this:

- QFX5100

- QFX5110 – leaf, 1RU

- QFX5120 – spine, leaf, top of rack, 1RU

- QFX5130 – leaf, top of rack, 1RU

- QFX5200 – leaf, top of rack, to 100GbE, 1RU

- QFX5210 – leaf, top of rack, to 100GbE, 2RU

- QFX5220 – leaf, top of rack, 1RU (QFX5220-32CD), 4RU (QFX5220-128C)

- QFX5230 – spine, to 400GbE, 2RU

- QFX5700 – spine and leaf, to 400GbE, 5RU

- QFX10000 – spine and core switches

- QFX10002 – 2RU, fixed configuration

- QFX10008 – 13RU

- QFX10016 – 21RU

There is a lot of overlap and the hardware is sometimes identical; the EX4650 and QFX5120-48Y share the same box and the same chipset is used in the EX4600 and QFX5100.

Apstra is the management software. It has multi-vendor support and provides intent-based networking. This means customer admin staff specify the network topology, VLANs, desired capacity, redundancy requirements, access rules, etc., and the Apstra software translates this into device-specific configuration and policies.

HPE would also acquire limited Fibre Channel networking with Juniper, as its QFX3500 switch supports native FC ports as well as Ethernet ports. It can act as an FCoE to FC gateway or as an FCoE transit device. All FCoE traffic must travel in a VLAN dedicated to transporting only FCoE traffic. Only FCoE interfaces should be members of an FCoE VLAN. Ethernet traffic that is not FCoE or FIP traffic must travel in a different VLAN. In effect the FC traffic is a guest on a Juniper network and HPE will not gain native FC storage networking. There is more information to be found here.
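The three FCoE VLAN rules above can be expressed as a small consistency check. The Python sketch below is a hypothetical model, not Juniper tooling: the `Vlan` structure, the traffic labels, and the interface names are assumptions made for illustration.

```python
from dataclasses import dataclass, field

# Traffic classes permitted inside a dedicated FCoE VLAN.
FCOE_TYPES = {"fcoe", "fip"}


@dataclass
class Vlan:
    vlan_id: int
    is_fcoe: bool                                        # is this VLAN dedicated to FCoE?
    traffic: set[str] = field(default_factory=set)       # e.g. {"fcoe", "fip", "ip"}
    members: dict[str, bool] = field(default_factory=dict)  # interface name -> is an FCoE interface


def check_vlan(vlan: Vlan) -> list[str]:
    """Return a list of rule violations for one VLAN (empty if compliant)."""
    problems = []
    if vlan.is_fcoe:
        # An FCoE VLAN may carry only FCoE and FIP traffic.
        extra = vlan.traffic - FCOE_TYPES
        if extra:
            problems.append(f"VLAN {vlan.vlan_id}: non-FCoE traffic {sorted(extra)} in FCoE VLAN")
        # Only FCoE interfaces should be members of an FCoE VLAN.
        for ifname, is_fcoe_if in sorted(vlan.members.items()):
            if not is_fcoe_if:
                problems.append(f"VLAN {vlan.vlan_id}: {ifname} is not an FCoE interface")
    else:
        # FCoE traffic must not appear outside a dedicated FCoE VLAN.
        stray = vlan.traffic & FCOE_TYPES
        if stray:
            problems.append(f"VLAN {vlan.vlan_id}: FCoE traffic {sorted(stray)} outside an FCoE VLAN")
    return problems


good = Vlan(100, True, {"fcoe", "fip"}, {"port-a": True})
bad = Vlan(200, True, {"fcoe", "ip"}, {"port-b": False})
mixed = Vlan(300, False, {"ip", "fcoe"})
for v in (good, bad, mixed):
    print(v.vlan_id, check_vlan(v))
```

Here `good` passes, `bad` breaks two rules (ordinary IP traffic and a non-FCoE member interface), and `mixed` carries FCoE traffic in an ordinary VLAN.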

HPE would gain cross-selling and up-selling capability with Aruba edge customers getting Juniper product pitches, and Juniper customers receiving Aruba pitches. Apstra would probably get extended to manage Aruba devices. Currently they are managed with Aruba Networking Central. We can expect gateways between the two and a possible subsequent merger.

The Juniper products will most likely have a GreenLake subscription business model added as well.

In acquiring Juniper, HPE would also get a stronger competitive stance versus Dell’s PowerSwitch LAN networking line. This HPE-Juniper deal could possibly spark a Dell network supplier acquisition in the future. No doubt Cisco is watching developments with interest too.

Recovering Cirata grows bookings, aims for cash flow break-even

Cirata, the renamed and recovering WANdisco, issued a preliminary trading update for the quarter ended December 31 showing bookings growth as it gets its business back on track.

The company has two lines of business, supplying replication-based active data integration (DI) and application lifecycle management (ALM) software to enterprise customers. It has increased its cash balance to $18.2 million from the guided $16 million. Sales bookings in the fourth quarter (Q4) were $2.7 million, up from $2.2 million a year ago and $1.7 million in Q3, meaning 23 percent growth year-on-year. Third quarter bookings of $1.7 million were sequentially higher than Q2 but lower than the $1.9 million reported for Q2 2022. This is despite some slippage of sales completion from Q2 to Q3 and again from Q3 to Q4.
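As a quick check on the growth figures, the short Python sketch below recomputes the percentage changes from the bookings numbers quoted above (in millions of dollars). The function and variable names are ours, chosen for illustration.

```python
def pct_change(new: float, old: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100


# Bookings in $ million, as quoted in the trading update.
q4_2023 = 2.7
q4_2022 = 2.2
q3_2023 = 1.7

yoy = pct_change(q4_2023, q4_2022)   # year-on-year growth
seq = pct_change(q4_2023, q3_2023)   # sequential (Q3 to Q4) growth

print(f"Q4 year-on-year growth: {yoy:.0f}%")   # prints 23%
print(f"Q4 sequential growth:   {seq:.0f}%")   # prints 59%
```

The year-on-year figure matches the 23 percent cited, while the sequential jump from Q3 works out at roughly 59 percent.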

CEO Stephen Kelly said: “FY2023 has been an eventful year for all Cirata stakeholders, a near collapse of the business followed by a herculean effort to rebuild from the ground up. The March 9 announcement represented an existential crisis. Against all odds, the turnaround is well underway. Our Q3 and our Q4 reflect the first steps of a company coming back ‘off the canvas’. Sequential growth in bookings through Q2, Q3, Q4 and transacting with companies such as GM, NatWest and Experian amplify the progress this company has made since the dark days after March 9.”

WANdisco revealed its gross sales mis-reporting and AIM stock market suspension on March 9 last year. This was attributed to a single and so far unidentified senior sales executive who nearly brought the company down.

Kelly has reorganized sales, with three CROs reporting to him. Rich Baker has been appointed as CRO International from being SVP Global Sales, Chris Cochran, who was VP for Global Alliances, is now CRO North America, and Justin Holtzinger, previously SVP Customer Success and Engineering, is now CRO for the ALM business. Kelly refers to his reorganization as removing a layer of management. Previously there was one single CRO and SVP for Global Sales, Frank Moser, reporting to the CEO.

In videotaped remarks, Kelly said Cirata had not focused on its ALM products in the past and that side of the business had flatlined. He wants to see growth from it in 2024.

Kelly said: “We completed the FY23 Turnaround Plan and now shift gears into the FY24 Growth Plan. We are getting into our stride, but this is minimum table stakes – we need to drive a much higher quality execution in our Go-to-Market model. The new structure of our sales organization with the leadership of Rich, Chris, and Justin reflects a flatter, sharper, more customer focused Cirata.” There will be improved visibility around pipeline build, predictability, and closure rates, claims Kelly, who’s hoping for significantly improved pipeline conversion.

He added: “With go to market resources, what we’ve struggled to do in the past … is working with customers on sales cycles. But what we’ve failed to do well is actually close them predictably. And there’s been slippage.” The sales reorg flattens the structure “so there’s only one level of management between myself, the chief executive, and the individual salesperson or the sales consultant.”

“The turnaround plan we shared with the investors during the summer is substantially completed … We’ve got ourselves ready, and match fit for FY24,” Kelly added. “We want to drive high quality growth with marquee Fortune 500 companies, and that’s my personal aspiration.”

From now, it’s all about sales growth, with a cash flow break-even target for the end of this year. CFO Ijoma Maluza said Cirata should be “generating positive cash as we move into 2025.”

Cirata is aiming for global market leadership in its product sector by 2028.

The company said we should expect the preliminary FY2023 results update between mid-March and mid-April 2024. It will provide full year 2024 guidance for both bookings and year-end cash balance in the earlier of the Q1 Trading Update or the FY23 preliminary results. An in-depth review and commentary of the Turnaround Plan will be provided in the FY23 results.

Discover the ‘2024 Block Data Storage Buyer’s Guide’

Webinar: We consume data, and we create it – exponentially. The expectation is that we will be using 180 zettabytes of data globally by 2025.

This means that what we do with data, how we store and access it, is a vitally important part of enterprise IT strategy. A comprehensive understanding of the optimal approach to data storage for any business or organization is essential, and so too is ensuring the right storage solution is in place to meet specific business needs.

Join our webinar on 25th January at 4pm GMT/11am ET/8am PT to hear how StorPool can offer an up-to-date approach based on the latest block storage, file storage and object data storage technologies. The company has just published its ‘2024 Block Data Storage Buyer’s Guide’, a free reference resource that provides clear, practical, and proven guidance for IT practitioners and business leaders with the responsibility for deploying all types of enterprise-grade data storage solutions.

StorPool experts Alex Ivanov (Product Lead) and Marc Staimer (President, Dragon Slayer Consulting) will use the webinar to explore the key challenges for organizations looking to upgrade their block data storage solutions in 2024 and share insight on how to tackle real-world storage problems. They’ll also offer tips on how to avoid common pitfalls when devising an effective storage strategy and comparing and contrasting different vendor block data storage systems.

Sign up to watch our ‘Radical Practical Approach to Buying Block Data Storage’ webinar on 25th January here and we’ll send you a reminder when it’s time to log in.

Sponsored by StorPool.

Micron unveils compact and power-efficient laptop memory module

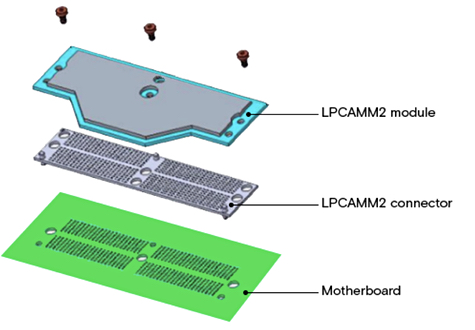

Micron has launched a smaller and faster notebook computer memory module that draws less electrical power than the SODIMMs currently used and is plugged, not soldered, onto a motherboard.

This means higher notebook performance, longer battery life, and more component freedom for motherboard designers. The LPCAMM2 (Low Power Compression-Attached Memory Module) is physically smaller than a SODIMM (Small Outline Dual In-line Memory Module) and based on LPDDR5 (Low Power Double Data Rate 5) DRAM. It has a shorter trace to the motherboard than a SODIMM because it is compressed against a motherboard connector instead of using soldered pins. The device is dual-channel with a 128-bit-wide interface.

Praveen Vaidyanathan, VP and GM of Micron’s Compute Products Group, said: “The LPCAMM2 product … will deliver best-in-class performance per watt in a flexible, modular form factor. This first-of-its-kind product will enhance the capabilities of AI-enabled laptops, whose memory capacity can be upgraded as technology and customer needs evolve.”

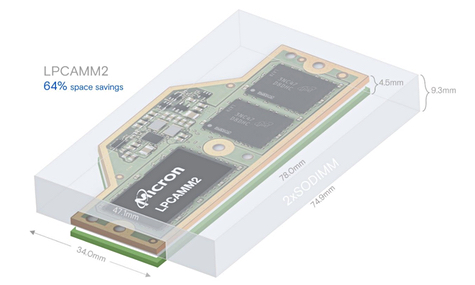

SODIMMs provide Synchronous Dynamic Random Access Memory (SDRAM) to a host computer. Micron provides 8-128 GB capacity SODIMMs built from 16 Gb to 64 Gb DDR5 SDRAM (Synchronous DRAM) devices with four or eight internal memory bank groups. They have a 4,800-6,400 megatransfers per second (MTps) data rate and can be dual-stacked to increase capacity. Micron’s LPCAMM2 package delivers a 64 percent volume space saving compared to a dual-stacked SODIMM module.

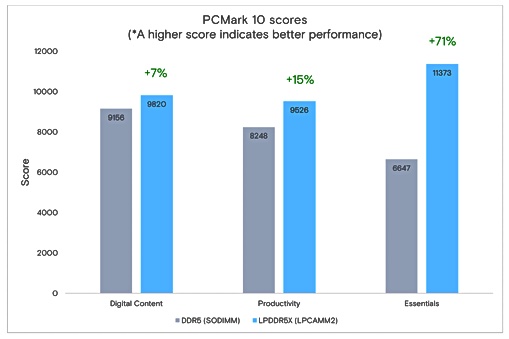

The LPCAMM2 data rate is 7,500 MTps compared to current SODIMM speeds of 5,600 MTps. Micron says LPCAMM2 speed is expected to increase to 8,500 MTps in 2025, when SODIMMs generally will rise to 6,400 MTps, and to 9,600 MTps in 2026, compared to the 7,200 MTps expected from SODIMMs then.
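The roadmap numbers translate into a fairly constant relative lead. The Python sketch below works through the arithmetic; the period labels and function names are ours, and the peak-bandwidth figure is a theoretical ceiling that assumes the 128-bit width mentioned earlier, not a measured result.

```python
# (LPCAMM2 MTps, SODIMM MTps) per period, as quoted by Micron.
roadmap = {
    "current": (7500, 5600),
    "2025": (8500, 6400),
    "2026": (9600, 7200),
}


def advantage_pct(lpcamm2_mtps: float, sodimm_mtps: float) -> float:
    """How much faster LPCAMM2 is than SODIMM, as a percentage."""
    return (lpcamm2_mtps / sodimm_mtps - 1) * 100


def peak_gb_per_s(mtps: float, bus_bits: int) -> float:
    """Theoretical peak bandwidth: transfers per second times bytes per transfer."""
    return mtps * 1e6 * bus_bits / 8 / 1e9


for period, (lpcamm2, sodimm) in roadmap.items():
    print(f"{period}: LPCAMM2 is {advantage_pct(lpcamm2, sodimm):.1f}% faster")

# Assumes a 128-bit-wide module; real-world throughput will be lower.
print(f"LPCAMM2 theoretical peak at 7,500 MTps: {peak_gb_per_s(7500, 128):.0f} GB/s")
```

On these figures LPCAMM2 stays roughly a third faster than SODIMM in each period (about 33.9, 32.8, and 33.3 percent respectively).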

This MTps speed advantage enables faster notebook application speeds. Micron provided PC Mark 10 scores comparing LPCAMM2 to SODIMMs:

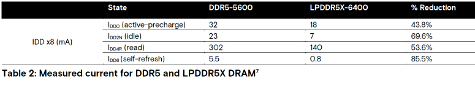

Micron provided power consumption comparisons with DDR5 5600 SODIMMs as well.

JEDEC has specified the CAMM2 standard, and we can expect other memory suppliers, such as Samsung, to launch CAMM2 products. We can expect capacities to head towards 128 GB per module and possibly beyond that to 192 GB.

Micron’s LPCAMM2 products give laptop PC users the ability to upgrade their system memory configuration. LPCAMM2 DRAM uses less power than SODIMMs, provides faster performance, is physically smaller and is upgradeable. What is not to like?

Bootnote

Dell introduced its then-proprietary CAMM technology with its Precision 7670 laptop product in November 2022, and JEDEC then defined its CAMM2 standard based on it, during 2023. The JESD318 CAMM2 standard is available for download from the JEDEC website and CAMM2 could replace SODIMM technology. There is more LPCAMM2 information here and in a Micron blog.