Analysis. VAST Data is getting ready to launch its DataEngine software, providing automated pipeline operations to make AI faster and more responsive to events. We referenced a VAST Data Platform white paper in writing this article. It’s long – 100 pages – but well worth a read.

Update: VAST DataEngine compute uses the Data Platform’s controller/compute nodes (C-nodes). 13 Aug 2024. VAST Co-founder says Cosmos event is not (just) about DataEngine. 15 Aug 2024.

The DataEngine is VAST’s latest layer of software on its Data Platform. This platform decouples compute logic from system state and has a DASE (Disaggregated and Shared-Everything Architecture) design, in which a VAST array consists of x86-based controller nodes (C-nodes) that link to data-storing all-flash D-nodes across an InfiniBand or RoCE networking fabric running at 200/400Gbps. The C-node and D-node software can run in shared industry-standard servers.

The controller software has been ported to Nvidia’s Arm-powered BlueField-3 DPUs (Data Processing Units). These are located in the D-node storage enclosures and also the storage controllers. In general, VAST’s containerized VastOS software runs in stateless multi-protocol servers, and these include the C-nodes and BlueField-3 DPUs. The VAST array BlueField-3 DPUs can be linked to similar DPUs in Nvidia GPU servers.

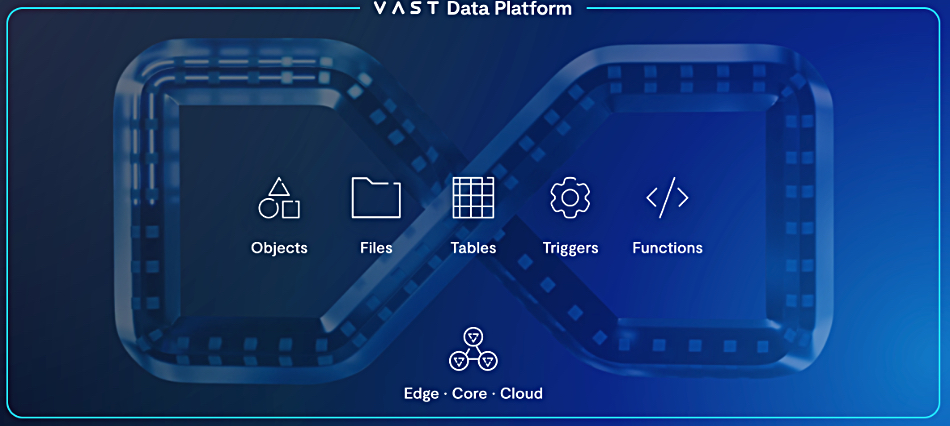

VAST has built an AI-focused data infrastructure Data Platform stack atop its DASE hardware/software DataStore base:

- DataCatalog – metadata about every file and object in the system

- DataBase – transactional data warehouse

- DataSpace – global namespace

- DataEngine – AI pipeline infrastructure operations

VAST tells us that the VAST DataBase (the table management and storage bits) runs on the VAST cluster’s C-nodes. The VAST Catalog is a table in the VAST DataBase that holds the namespace metadata, which is extracted periodically from a snapshot. So that runs across the C-nodes too.

VAST should not be viewed as a typical storage array supplier – focussed on stored data block, file and object I/O, with its arrays at the beck and call of system and application servers to which they ship read data and from which they receive write data.

VAST’s data infrastructure stack contains elements which traditionally run on servers. It has blurred the boundary between storage array and application/system servers and is now a hybrid storage hardware/software and AI data processing infrastructure software business. Looked at another way, VAST provides an AI infrastructure software stack with an integrated multi-protocol storage array base – its very own idea of a converged infrastructure – with compute (Dell, HPE, Intel, Lenovo, Supermicro + AWS, Azure and GCP compute instances) and networking (Arista, Cisco, HPE, Nvidia-Mellanox) partners.

Regard the DataEngine as server-level and not storage array-level software. It is an engine – with a self-starter, since it can trigger events itself – that executes and orchestrates AI pipeline events and functions without an AI developer having to explicitly code and locate routines within the AI stack. Its scope is an organization’s entire VAST installation, across both distributed on-premises and public cloud environments.

Its operation is based around event triggers and functions – both are stored as elements in the VAST DataStore. Any data I/O event in the VAST DataStore can be an event trigger – meaning any create, read, update or delete, known by the unlovely acronym CRUD. For example, a new .jpg file stored in a Photos folder could trigger a metadata gathering operation. Other events could be a new row in a VAST table (this includes new Kafka topics, since they are stored as table rows), internal counters reaching set points, or triggered functions completing.
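To make the trigger idea concrete, here is a minimal Python sketch of matching a storage event against trigger rules. It is not VAST's API: the `Event` and `Trigger` classes, their field names, the function names, and the glob-style path patterns are all hypothetical, chosen only to illustrate how a CRUD event might be mapped to the functions it should start.

```python
from dataclasses import dataclass
from fnmatch import fnmatch


# Hypothetical model of a DataStore I/O event; not VAST's actual schema.
@dataclass(frozen=True)
class Event:
    op: str    # one of "create", "read", "update", "delete"
    path: str  # path of the file or object the event touched


# Hypothetical trigger rule: fire `function` when both the operation
# and the glob-style path pattern match.
@dataclass(frozen=True)
class Trigger:
    op: str
    pattern: str
    function: str

    def matches(self, event: Event) -> bool:
        return event.op == self.op and fnmatch(event.path, self.pattern)


def functions_for(event: Event, triggers: list[Trigger]) -> list[str]:
    """Return the names of all functions whose trigger matches the event."""
    return [t.function for t in triggers if t.matches(event)]


triggers = [
    Trigger(op="create", pattern="/Photos/*.jpg", function="gather_metadata"),
    Trigger(op="delete", pattern="/Photos/*", function="update_index"),
]

new_photo = Event(op="create", path="/Photos/beach.jpg")
print(functions_for(new_photo, triggers))  # ['gather_metadata']
```

In this sketch a read of the same file matches no rule, so nothing runs. A real system would also need triggers on table rows, counters and function completion, which are left out here.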

When an event trigger takes place, the DataEngine will execute a function according to set event trigger rules. These rules could specify the execution environment – such as the fastest processing hardware or the lowest-cost hardware. A function is made up of one or more elements, each of which “defines the execution requirements of a given function, say a GPU-powered inference engine performing facial recognition.”

There is a global workflow optimization engine which “will choose which function to perform a task based on cost. The VAST DataEngine also keeps information on the cost, CPU resources, and execution time of each function each time it’s run, and uses these factors to run functions in the optimal location each time.”
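The white paper does not say how the optimization engine weighs these factors, so the following Python sketch is only one plausible reading. It records cost, CPU time and elapsed time per run, then picks the location with the lowest weighted average score. The class names, the location labels, the sample figures and the weighting scheme are all assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


# Hypothetical record of one past run; field names are illustrative only.
@dataclass(frozen=True)
class RunRecord:
    cost: float          # monetary cost of the run
    cpu_seconds: float   # CPU time consumed (recorded, unused in the score below)
    wall_seconds: float  # elapsed execution time


class RunHistory:
    """Keeps per-(function, location) run statistics."""

    def __init__(self) -> None:
        self._runs: dict[tuple[str, str], list[RunRecord]] = defaultdict(list)

    def record(self, function: str, location: str, run: RunRecord) -> None:
        self._runs[(function, location)].append(run)

    def best_location(self, function: str, weight_cost: float = 1.0,
                      weight_time: float = 0.0) -> str:
        """Pick the location with the lowest weighted average score.

        The linear weighting of cost and elapsed time is an assumption.
        """
        scores = {
            loc: mean(weight_cost * r.cost + weight_time * r.wall_seconds
                      for r in runs)
            for (fn, loc), runs in self._runs.items()
            if fn == function
        }
        if not scores:
            raise KeyError(f"no recorded runs for {function!r}")
        return min(scores, key=scores.get)


history = RunHistory()
# Made-up sample figures, purely for demonstration.
history.record("face_inference", "on_prem_gpu", RunRecord(0.40, 12.0, 3.0))
history.record("face_inference", "cloud_cpu", RunRecord(0.10, 90.0, 45.0))

print(history.best_location("face_inference"))  # cloud_cpu
print(history.best_location("face_inference",
                            weight_cost=0.0, weight_time=1.0))  # on_prem_gpu
```

With the default weights the cheaper location wins. Shifting the weight to elapsed time flips the choice, which mirrors the "fastest hardware" versus "lowest-cost hardware" rules mentioned earlier.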

The execution requirements “would include hardware and location dependencies for functions orchestrated by the VAST DataEngine, or simply the execution method for functions performed by the cloud or other services outside the VAST DataEngine’s compute environment.”

The DataEngine operates on a so-called VAST Computing Environment and this has the global workflow optimization engine “that responds to event triggers, figures out the best place to run each instance of each function, and calls the services that perform those functions, from scraping metadata to matching millions of genome segments.”

It also has an “execution and orchestration engine that manages the containers that deliver the function’s services.”

An example shows how this could work. “A workflow may start by scraping the embedded metadata out of the incoming files into The VAST Catalog. An event trigger may then detect that a subset of those files are – photographs taken in Las Vegas over the New Year’s weekend – and cause an inexpensive inference function to run on those files and identify a smaller subset of images that include the image of a red car. Those files are subjected to more extensive processing to ultimately return the license plate number for a hit-and-run.”
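The workflow in that quote is a cascade: cheap checks run first so that expensive processing only sees a small subset. A minimal Python sketch of the pattern follows. The `Photo` fields stand in for what real metadata scraping and inference would produce, and every name and value here is made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


# Hypothetical stand-in for a stored photo; the boolean and plate fields
# replace what real inference models would compute.
@dataclass(frozen=True)
class Photo:
    name: str
    city: str
    has_red_car: bool
    plate: str


Stage = Callable[[Photo], bool]


def cascade(photos: Iterable[Photo], stages: list[Stage]) -> Iterator[Photo]:
    """Yield photos passing every stage.

    all() stops at the first failing stage, so later (more expensive)
    stages only see survivors of the earlier ones.
    """
    for photo in photos:
        if all(stage(photo) for stage in stages):
            yield photo


expensive_calls = []  # tracks which photos reached the costlier stage


def in_las_vegas(photo: Photo) -> bool:
    # Cheap: stands in for a catalog metadata lookup.
    return photo.city == "Las Vegas"


def shows_red_car(photo: Photo) -> bool:
    # Pricier: stands in for an inference function.
    expensive_calls.append(photo.name)
    return photo.has_red_car


photos = [
    Photo("a.jpg", "Reno", True, "REN-001"),
    Photo("b.jpg", "Las Vegas", False, "LV-002"),
    Photo("c.jpg", "Las Vegas", True, "LV-003"),
]

hits = [p.plate for p in cascade(photos, [in_las_vegas, shows_red_car])]
print(hits)             # ['LV-003']
print(expensive_calls)  # ['b.jpg', 'c.jpg']
```

The Reno photo never reaches the costlier stage, which is the point of ordering stages from cheapest to most expensive.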

The VAST Execution Environment “provides the computing resources for the VAST Data Platform to perform its work, and for the VAST DataEngine to process user functions. The VAST Data Platform can orchestrate all of its computing functions across a single pool of servers optimizing their utilization.”

Before the VAST DataEngine, VAST clusters ran all the code for a C-node or D-node in a single container, plus one container for the cluster’s VMS management service. There are multiple service modules within each container, of course. Now the VAST Execution Environment uses the DataEngine as the control plane orchestrator of Kubernetes pod(s) that run VAST containers alongside customer-provided containers that train deep learning models and, for example, infer which photos contain faces and recognize whose face it is for a social media tag.

The VAST Execution Environment orchestrates user-provided containers along with the VAST containers that provide all the services of the VAST Data Platform, from rebuilding erasure-coded data after an SSD failure to processing SQL queries and running the DataEngine’s optimization engine itself. VAST containers will also run functions users create with VAST’s Python toolkit in an AWS Lambda-like environment.
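The interface of VAST's Python toolkit is not described in the material we have seen, so the sketch below only shows what "Lambda-like" usually implies: a single entry point that receives an event payload and returns a result. The `handler(event, context)` shape follows AWS Lambda's Python convention. The payload keys and the returned fields are hypothetical.

```python
import json


def handler(event: dict, context: object = None) -> dict:
    """Lambda-style entry point: takes an event payload, returns a result.

    The "path" key and the "action" values are assumptions for this sketch.
    """
    path = event.get("path", "")
    is_photo = path.lower().endswith((".jpg", ".jpeg"))
    return {"path": path, "action": "gather_metadata" if is_photo else "ignore"}


# Simulate the platform invoking the function for a newly created file.
result = handler({"op": "create", "path": "/Photos/beach.jpg"})
print(json.dumps(result))
```

The function holds no state between calls, which is what lets an orchestrator run it on whichever node is available.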

We understand that part of the VAST DataEngine that will be shipped, the query engine, today runs an embedded Spark cluster across a subset of the C-nodes, with a customer allocating a pool for the Engine. That’s how the Kafka-compatible event broker and event triggers will run. Customers will be able to run functions on C-nodes. In the future VAST may be able to orchestrate heavyweight functions running on some other compute resource.

There is much more to learn about the DataEngine, such as metadata scraping, PII detection and ransomware anomaly detection. Read the whole white paper linked above if you’re interested.

VAST is preparing a DataEngine marketing blast, with a Cosmos Online event in October where, we’re told, viewers can witness AI in action and see how world-class organizations have built VAST-powered AI data pipelines. VAST co-founder Jeff Denworth commented: “You’re a bit askew from what we’re looking to announce.”