Slow and intermittent network connections are apparently no barrier to massive file transfers with the latest software update from Arcitecta.

Data manager and orchestrator Arcitecta’s Mediaflux Livewire software has been updated to cope better with unreliable and low-bandwidth network connections. Mediaflux logically combines disparate unstructured data stores – silos – into a single namespace covering files and objects stored on SSDs, disk, or tape, on-premises or in the public cloud. It has a metadata database and an integrated, parallelized Livewire data mover for cross-tier and cross-site transfers over TCP/IP. Arcitecta claims that, with the latest Mediaflux Livewire release, customers can securely and reliably transfer massive file volumes at “light speed” around the globe. (See bootnote.)

Jason Lohrey, founder and CEO of Arcitecta, enthused in a statement: “High-performance transmission solutions should make it easy and reliable for people and systems to move data to the right place at the right time, whether for sharing with colleagues or transmitting elsewhere for analysis. High-speed data transmission is a crucial component of modern data management and storage fabrics to enable a global mesh of managed data.”

Livewire supports both streamed data sources, such as incoming network data pipes, and traditional file-based data sources. It was initially designed for high-performance, high-latency networks, including wide-area networks (WANs) with latencies exceeding 200 milliseconds. Now it can cope with unreliable networks as well. Lohrey said: “With the enhancements to Mediaflux Livewire, we have addressed the most demanding challenges at both ends of the spectrum – from the slowest and most difficult networks to the highest-performance networks. Mediaflux Livewire manages it all as part of an end-to-end data management solution.”

Livewire nodes can be scaled out to increase bandwidth and have reached the 65TB/hour level, with storage management at each end of the link. The software features auto-performance tuning, retry capabilities, and data checksums on transmission and storage for fault detection and recovery.
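The pairing of per-chunk checksums with automatic retries can be illustrated with a minimal Python sketch. It assumes a hypothetical `channel` callable standing in for a network link, a tiny chunk size chosen purely for readability, and SHA-256 as the checksum; Arcitecta has not said which checksum or chunking scheme Livewire actually uses.

```python
import hashlib
from typing import Callable, List

CHUNK_SIZE = 4  # hypothetical: tiny chunks so the example stays readable


def split_chunks(data: bytes, size: int = CHUNK_SIZE) -> List[bytes]:
    """Split a byte stream into fixed-size chunks (the last may be shorter)."""
    return [data[i:i + size] for i in range(0, len(data), size)]


def send_with_retry(chunk: bytes, channel: Callable[[bytes], bytes],
                    max_retries: int = 5) -> bytes:
    """Send one chunk, verify its SHA-256 digest on arrival, retry on failure."""
    expected = hashlib.sha256(chunk).digest()
    for _ in range(max_retries + 1):
        try:
            received = channel(chunk)
        except ConnectionError:
            continue  # link dropped mid-send: retransmit
        if hashlib.sha256(received).digest() == expected:
            return received  # checksum matches: chunk arrived intact
        # checksum mismatch: corrupted in flight, so loop and retransmit
    raise RuntimeError("chunk could not be delivered intact")


def transfer(data: bytes, channel: Callable[[bytes], bytes]) -> bytes:
    """Move data chunk by chunk and reassemble it at the receiving end."""
    return b"".join(send_with_retry(c, channel) for c in split_chunks(data))


class FlakyChannel:
    """Hypothetical lossy link: every 3rd send drops, every 5th corrupts a byte."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self, chunk: bytes) -> bytes:
        self.calls += 1
        if self.calls % 3 == 0:
            raise ConnectionError("simulated dropout")
        if self.calls % 5 == 0:
            return bytes([chunk[0] ^ 0xFF]) + chunk[1:]  # flip bits in first byte
        return chunk


if __name__ == "__main__":
    link = FlakyChannel()
    payload = b"hello, livewire world"
    assert transfer(payload, link) == payload
    print(f"delivered intact after {link.calls} send attempts")
```

The retry loop only costs extra sends when something actually goes wrong; on a clean link each chunk is sent once and checked once.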

Note that 65TB/hour sustained for 24 hours would transfer 1.56PB of data, and Arcitecta would need just 15.4 hours to transfer 1PB. This puts it firmly in the high-speed data mover territory occupied by Zettar and Vcinity. Zettar needs 29 hours to transfer 1PB across 5,000 miles, while Vcinity transferred a petabyte across 4,350 miles in 23 hours, 16 minutes. It would be fascinating to see a speed test between the three suppliers.
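The comparison arithmetic is easy to check. This short Python sketch assumes decimal units (1PB = 1,000TB) and derives average rates for Zettar and Vcinity from the times quoted above. The article gives no transfer distance for Arcitecta’s 65TB/hour figure, so only raw rates are compared here.

```python
TB_PER_PB = 1_000  # assumption: decimal units, 1 PB = 1,000 TB


def volume_pb(rate_tb_per_hour: float, hours: float) -> float:
    """Petabytes moved at a sustained rate over a given number of hours."""
    return rate_tb_per_hour * hours / TB_PER_PB


def hours_per_pb(rate_tb_per_hour: float) -> float:
    """Hours needed to move one petabyte at a sustained rate."""
    return TB_PER_PB / rate_tb_per_hour


def rate_tb_per_hour(pb: float, hours: float) -> float:
    """Average rate implied by moving `pb` petabytes in `hours` hours."""
    return pb * TB_PER_PB / hours


if __name__ == "__main__":
    print(f"Arcitecta, 24h at 65TB/h: {volume_pb(65, 24):.2f} PB")
    print(f"Arcitecta, hours per PB:  {hours_per_pb(65):.1f} h")
    print(f"Zettar implied rate:      {rate_tb_per_hour(1, 29):.1f} TB/h")
    print(f"Vcinity implied rate:     {rate_tb_per_hour(1, 23 + 16 / 60):.1f} TB/h")
```

On these figures Zettar averages roughly 34.5TB/hour and Vcinity roughly 43TB/hour, against Arcitecta’s claimed 65TB/hour.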

Arcitecta claims customers with lower-bandwidth networks, especially relative to the size of the data they need to transmit, can keep large amounts of data synchronized between sites and transmit data in both directions, despite low network bandwidth and poor reliability. It attributes this to new features added to Livewire:

- Adaptive compression, which automatically tunes the compression of any file or file portion to reduce the amount of data transmitted and so speed up transfers over low-bandwidth network connections.

- Reliable packet retransmission when connections fail on high-latency networks, without impacting throughput, even on 100GbE or faster networks.

We asked how this is possible, and Lohrey explained: “The error handling adds no measurable overhead to the speed of transmission. If there are continuous network dropouts, including no network at all, then overall performance will be impacted. However, the reliability of the transmission will not be affected – the data will eventually get there.”

“The point is that we added this level of resilience in a manner that does not affect the transmission speed when the networks are available – that’s not a guarantee. It should also be noted that there are significant amounts of parallelism – if you have 20 parallel connections, and one drops out, then you will have 19/20ths of the throughput for that brief period in which the one connection is unavailable. You can also choose to send the same traffic through different nodes/routes in parallel – if someone cuts the network on one route and the network is still available on another route (e.g. there are multiple redundant cross-continental fiber cables, and someone cuts one), then there will be no reduction. The cost is N times the amount of data transmitted, (N-1)/N of it not needed unless there is an incident.”
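Lohrey’s two resilience figures, the partial throughput loss when one of several parallel streams drops and the N-fold cost of duplicating traffic over N routes, can be expressed directly. This is a minimal sketch that assumes load is spread evenly across streams; the function names are hypothetical, and exact fractions keep the results readable.

```python
from fractions import Fraction
from typing import Tuple


def throughput_during_dropout(streams: int, dropped: int) -> Fraction:
    """Share of aggregate throughput left while `dropped` of `streams` are down.
    Assumes traffic is spread evenly across the parallel streams."""
    if streams <= 0 or not 0 <= dropped <= streams:
        raise ValueError("need streams > 0 and 0 <= dropped <= streams")
    return Fraction(streams - dropped, streams)


def average_throughput(streams: int, dropped: int,
                       outage_s: int, window_s: int) -> Fraction:
    """Time-averaged share over a window that contains one brief outage."""
    lost = 1 - throughput_during_dropout(streams, dropped)
    return 1 - lost * Fraction(outage_s, window_s)


def redundancy_cost(routes: int) -> Tuple[int, Fraction]:
    """Identical traffic over N routes: (bytes multiplier, redundant share)."""
    if routes <= 0:
        raise ValueError("routes must be positive")
    return routes, Fraction(routes - 1, routes)


if __name__ == "__main__":
    print(throughput_during_dropout(20, 1))  # 19/20
    print(average_throughput(20, 1, 2, 60))  # 599/600
    print(redundancy_cost(3))                # (3, Fraction(2, 3))
```

With 20 streams, a two-second outage on one of them within a one-minute window costs about 0.17 percent of average throughput, whereas triple-route redundancy sends three times the data, two-thirds of it normally unused.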

Arcitecta claims Livewire’s new features significantly reduce the volume of network traffic between sites and dramatically increase performance over low-bandwidth connections. We asked for more information about the adaptive compression and Lohrey replied: “Any available compression method can be utilized. We have not invented a new method of compression. We have optimized the pipeline with the available methods.”

“Not everything is compressible (you would be hard-pressed to further compress images compressed with wavelet-based compression), but there are many things that will compress, and there are things that will partially compress. That process should be completely transparent to the person, or system, that is transmitting the data. The transmission system should tailor the method of compression in response to the data being transmitted at any point in time.”

“This is what we have done – we have treated the data stream as unknown, and the system adapts the compression in response to the stream of bytes it is being presented with. The amount of effort put into the compression will automatically vary depending on the transmission rate of the network. The compression is highly parallel (just like the transmission). It also builds a predictive model of what will be compressible and what will not be. By looking at the data as a stream of bytes (not files), we can adapt the compression on the fly and squeeze the maximum compression out of any sequence of bytes.”
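Lohrey describes compression that treats the data as an unknown byte stream, scales effort with network speed, and predicts what will compress. The Python sketch below shows one way those three ideas can fit together. It is not Arcitecta’s implementation: it substitutes the standard `zlib` module for “any available compression method”, uses an exponential moving average as a stand-in for the predictive model, assumes hypothetical link-speed thresholds for choosing the compression level, and leaves out the parallelism Lohrey mentions.

```python
import os
import zlib

RAW, ZLIB = b"\x00", b"\x01"  # one-byte header saying how a frame is encoded


class AdaptiveCompressor:
    """Hypothetical sketch of adaptive, content-agnostic stream compression.
    Not Arcitecta's implementation; zlib stands in for any available codec."""

    def __init__(self, link_mbps: float, skip_threshold: float = 0.95,
                 alpha: float = 0.3) -> None:
        # Effort scales with link speed: slow links justify more CPU per byte
        # saved. These thresholds are illustrative assumptions only.
        if link_mbps < 100:
            self.level = 9
        elif link_mbps < 1_000:
            self.level = 6
        else:
            self.level = 1
        self.skip_threshold = skip_threshold  # predicted ratio above this: skip
        self.alpha = alpha                    # weight of the newest observation
        self.predicted_ratio = 0.5            # prior guess: data compresses 2:1

    def _observe(self, ratio: float) -> None:
        # Exponential moving average of compressed size / original size.
        self.predicted_ratio = ((1 - self.alpha) * self.predicted_ratio
                                + self.alpha * ratio)

    def encode(self, chunk: bytes) -> bytes:
        if self.predicted_ratio > self.skip_threshold:
            # Recent bytes were incompressible: probe a small sample cheaply
            # before spending full effort on the whole chunk.
            sample = chunk[:1024]
            probe = len(zlib.compress(sample, 1)) / max(len(sample), 1)
            self._observe(probe)
            if probe > self.skip_threshold:
                return RAW + chunk
        packed = zlib.compress(chunk, self.level)
        self._observe(len(packed) / max(len(chunk), 1))
        if len(packed) >= len(chunk):
            return RAW + chunk  # compression did not help: send as-is
        return ZLIB + packed


def decode(frame: bytes) -> bytes:
    """Receiver side: read the header byte and undo compression if needed."""
    header, body = frame[:1], frame[1:]
    return zlib.decompress(body) if header == ZLIB else body


if __name__ == "__main__":
    codec = AdaptiveCompressor(link_mbps=50)
    text = b"the quick brown fox " * 200              # highly compressible
    noise = [os.urandom(4096) for _ in range(10)]     # incompressible
    for chunk in [text, *noise, text]:
        frame = codec.encode(chunk)
        assert decode(frame) == chunk
        print(frame[:1].hex(), len(chunk), "->", len(frame) - 1)
```

After a run of random bytes the predictor stops spending full effort on them, yet a compressible chunk arriving later still passes the cheap probe and gets compressed again.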

Livewire maintains a virtual representation of which files are at each site, allowing changes to be identified and transmitted without having to ask whether those files are in sync. Livewire keeps this virtual image indefinitely and at all locations.

Lohrey tells us: “We have been presented with situations where the networks are so slow, and minimal, that it’s impossible to find out what you need to transmit and transmit data – the act of asking has latency and consumes bandwidth that negatively impacts transmission performance. In some cases, impossible. To solve this problem, we completely removed the need to ask by the sender maintaining a model of what is at the receiver, so it never has to ask what needs to be transmitted – that is a significant form of compression.”
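The idea of the sender keeping a model of the receiver, so it never has to ask what needs sending, can be sketched as a local map from path to content hash. This minimal Python version is hypothetical: `ReceiverModel` and its methods are illustrative names, and it assumes, as Lohrey says of Livewire’s retransmission, that data sent will eventually arrive, so the model is updated when data is sent rather than when the receiver confirms it.

```python
import hashlib
from typing import Dict, List, Tuple


def digest(data: bytes) -> str:
    """Content fingerprint used to detect changed files."""
    return hashlib.sha256(data).hexdigest()


class ReceiverModel:
    """Hypothetical sender-side mirror of the receiver's contents (path -> hash)."""

    def __init__(self) -> None:
        self.known: Dict[str, str] = {}

    def plan(self, local: Dict[str, bytes]) -> Tuple[List[str], List[str]]:
        """Work out what to send and delete from local state alone: no round trip."""
        to_send = sorted(p for p, data in local.items()
                         if self.known.get(p) != digest(data))
        to_delete = sorted(p for p in self.known if p not in local)
        return to_send, to_delete

    def commit(self, local: Dict[str, bytes],
               to_send: List[str], to_delete: List[str]) -> None:
        """Record the receiver's new state once changes are handed to a
        transport that is assumed to deliver eventually."""
        for p in to_send:
            self.known[p] = digest(local[p])
        for p in to_delete:
            del self.known[p]


if __name__ == "__main__":
    model = ReceiverModel()
    site = {"a.txt": b"alpha", "b.txt": b"beta"}
    send, delete = model.plan(site)
    model.commit(site, send, delete)  # initial sync: both files sent
    site["b.txt"] = b"BETA"           # local edit
    site["c.txt"] = b"gamma"          # new file
    del site["a.txt"]                 # deletion
    print(model.plan(site))           # (['b.txt', 'c.txt'], ['a.txt'])
```

The sender never queries the remote site; the cost is keeping the model accurate, which is why reliable eventual delivery matters to this design.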

“The ultimate compression is not to transmit data at all, but rather to transmit the (much smaller) instructions that reproduce the data at the other side. That is a topic for a future conversation.”

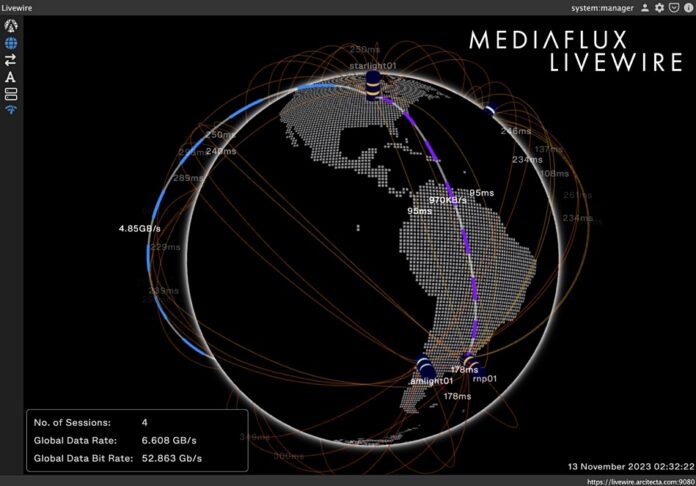

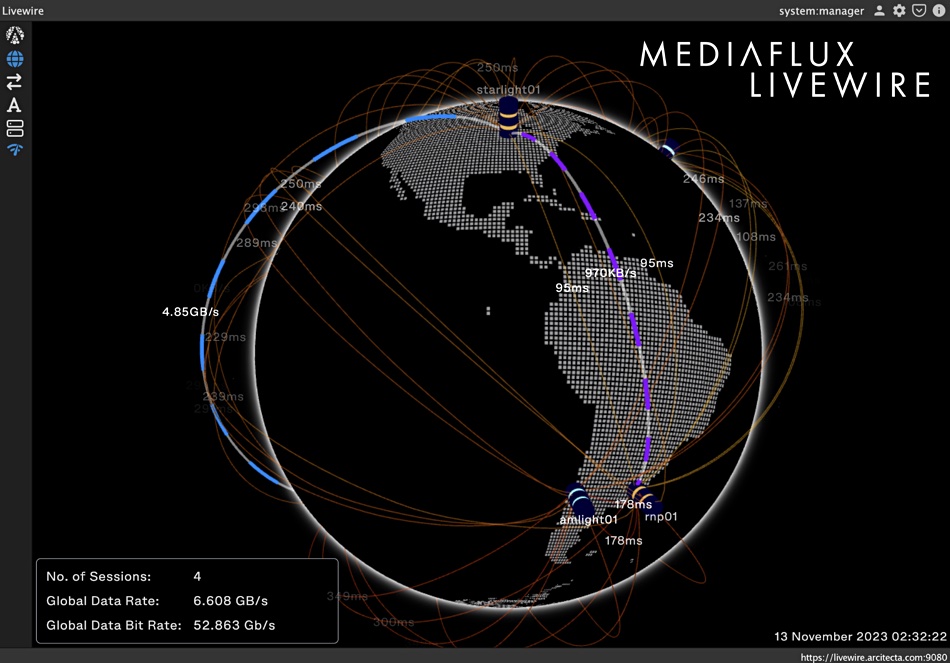

Livewire, according to Arcitecta, monitors network performance and transmissions with a 3D worldview of sites, ping times, and network traffic to clearly see point-to-point flows, the aggregate bandwidth, and whether there are any network connectivity issues. In real time, users can visualize the layout, flow rates, and network health for geolocated data endpoints. This, it claims, is unique.

Lohrey told us: “For low bandwidth/high-latency connections, we are building a ‘digital twin’ of what is at the other side to eliminate the synchronization network overhead. Who else is doing that?”

“We know what data is in any site that is accessible through a federation of servers and distributed queries that can be qualified by any form of metadata you can conceive of – others are likely to be doing that, but few will have the depth of metadata that we support. We have an integrated layer with maximized transmission for high-latency networks (for low through to high bandwidth networks) as part of the data fabric. Everyone else is a separate non-integrated product, as far as I can tell.”

“That integrated ecosystem includes geocoded 3D visualisation of the transmission end-points. We put that on a 2.5 meter high LED wall at Supercomputing 24 – it drew a lot of attention from the networking people who said ‘finally, they could see their global networks’. No one said, ‘I’ve seen that before’. Our data fabric is interconnected with our own, award-winning high-speed networking. That is unique.”

The firm boasted that Mediaflux Livewire was awarded “Most Complete Architecture” in the International Data Mover Challenge (DMC) at Supercomputing Asia 2024 (SCA24), held February 19–22 in Sydney, Australia.

Mediaflux Livewire is generally available as a standalone product or as part of the Mediaflux platform. Pricing is based on the number of concurrent users.

Bootnote

Regarding “light speed,” Jason Lohrey told us: “Our ability to digitally transmit data over vast distances is limited by the speed of light (2.998×10⁸ meters per second in a vacuum). If we had perfect photo-couplers and electronics that had zero latency, then transmitting a single datum between Melbourne in Australia and New York in the United States – approximately 16,662 kilometers – would take around 56 milliseconds one way, or double that for the return journey – a theoretical asymptote of 112 milliseconds. Of course, the electronics and photo-couplers are not perfect, so a return trip between those two cities currently takes around 232 milliseconds. To illustrate the effect of that latency: at that rate, if you were to wait for acknowledgment, you could type fewer than five characters per second.”
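The bootnote’s figures follow from dividing distance by the speed of light. This Python check uses the exact SI value of c rather than the rounded 2.998×10⁸, and the keystroke estimate assumes, as the quote does, that each character waits one full round trip for acknowledgment before the next is sent.

```python
C_M_PER_S = 299_792_458         # speed of light in a vacuum (exact SI value)
MELBOURNE_NEW_YORK_KM = 16_662  # distance given in the bootnote


def one_way_ms(distance_km: float, speed_m_per_s: float = C_M_PER_S) -> float:
    """Minimum one-way latency in milliseconds: distance / speed."""
    return distance_km * 1_000 / speed_m_per_s * 1_000


def acked_chars_per_second(rtt_ms: float) -> float:
    """Keystrokes per second if each must wait for a round-trip acknowledgment."""
    return 1_000 / rtt_ms


if __name__ == "__main__":
    one_way = one_way_ms(MELBOURNE_NEW_YORK_KM)
    print(f"theoretical one-way:     {one_way:.1f} ms")      # 55.6 ms
    print(f"theoretical round trip:  {2 * one_way:.1f} ms")  # 111.2 ms
    print(f"acked chars/s at 232 ms: {acked_chars_per_second(232):.1f}")  # 4.3
```

The exact round trip comes to about 111ms, slightly under the 112ms obtained by doubling the rounded 56ms figure, and the measured 232ms is roughly twice that theoretical floor.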