There’s data moving and there’s high-performance computing data moving, which needs to shift massive data sets at high speed with minimal interference from transmission protocol chatter. We’ve previously written about Vcinity, a privately owned company which works in this space, and now we’d like to compare and contrast it with another HPC data mover: Zettar.

Update: Table updated 21, 22, 23, 24 February 2024 and Zettar footnote added 24 February 2024.

The two companies have been operating in the specialized HPC area where large data sets need to be moved between HPC centers that can be on opposite sides of an ocean or continent. Normal TCP/IP only gets to use around 30 percent of a WAN link’s bandwidth; it is a chatty protocol with lots of network metadata messages flowing back and forth and no parallelism, unless you use multiple links and have some kind of parallelising front end box.

The demand for high-speed data movers is becoming evident in enterprises with generative AI training which relies on Large Language Model data sets, and also with larger amounts of data being used in cloud file-based collaboration services – think CTERA, Nasuni and Panzura.

There is already high-speed data moving tech available on the market that was developed for feeding data to Nvidia GPUs: GPUDirect uses RDMA to get data sent directly from storage drives in a server or array, bypassing the host CPU and its memory. However, this technology is proprietary to Nvidia – so no good to Intel or AMD GPUs – and is also file-based.

B&F understands that more generalized high-speed data moving technology is needed. Vcinity and Zettar provide an informative glimpse into the high-speed data moving world that is entering enterprise computing. We also envisage that DPUs such as Nvidia’s BlueField and Intel’s IPU could become host devices for data moving software.

Zettar

Zettar was founded by CEO Chin Fang in 2008 and has raised some $225,000 in grant funding from the US National Science Foundation, a trifling amount in storage startup land. It works with academic and national scientific bodies and has notched up impressive data moving speed. For example, it claims to have pumped 1 PB of data across a 5,000-mile (8,046km) distance in 29 hours at a 94 percent bandwidth utilization level.

Users see a ZX NFS appliance at either end of the Ethernet or InfiniBand link and these appliances can be clustered into a swarm of nodes, providing parallelism and scale-out functionality with a peer-to-peer relationship across the link. The proprietary and patented technology achieves latency-and-network-error insensitive TCP-based data transfers. The Zettar system can also transfer S3-compliant objects. It can send and receive data simultaneously, and support multiple users (tenants), each with their own read and write area.

Zettar’s software can handle the transfer of lots of small files through to very large ones. It can transfer a fixed set of files and objects but also send streaming data, appending it to a destination file. A scientific paper, High Performance Data Transfer for Distributed Data Intensive Sciences, can tell you more about Zettar’s technology. For example it tells us that Zettar’s technology “has the ability to manage and to use raw flash storage devices distributed across the cluster nodes directly. Thus it takes full advantage of such devices’ performance potential, without paying the overhead of a local file system and, possibly, the overhead of a parallel file system for aggregating distributed flash devices. … ZX can aggregate all flash storage devices across all nodes into a scale-out data transfer buffer.”

It is partnering with Intel, Nvidia and others. Zettar is working with Intel on IPU (Infrastructure Processing Unit) support. Four IPUs can be aggregated into two dual-node clusters, one at either end of a link. A demonstrated transfer rate is basically 100 Gbps line rate.

Vcinity

Vcinity was founded ten years later than Zettar, in 2018, by CEO Harry Carr, who used it as a vehicle to buy Bay Microsystems’ high-speed networking assets that year. Carr was also Bay Microsystems’ President and CEO.

The VDAP (Virtual Data Access Platform) technology is based on using an RDMA tunnel inside an IP network. IP packets flow between UDP ports. Clients mount NFS/SMB endpoints at each end of the link. There can be 3 to 8 links between the endpoints, with data striped across them. VDAP is lossless and speed scales with available bandwidth, with Vcinity supporting scale out to multiple 100 Gbps links.
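To make the idea of striping data across several links concrete, here is a minimal Python sketch. It is a generic round-robin illustration under our own assumptions, not Vcinity's implementation: the function names `stripe` and `reassemble`, the fixed chunk size and the sequence-number tagging are all hypothetical.

```python
# Generic round-robin striping sketch. This is NOT Vcinity's VDAP code;
# the names, chunk size and sequence tagging are illustrative assumptions.

def stripe(data: bytes, num_links: int, chunk_size: int) -> list[list[tuple[int, bytes]]]:
    """Split data into fixed-size chunks and deal them out to links in turn.

    Each chunk is tagged with its sequence number so the receiver can
    restore the original order regardless of which link delivers first.
    """
    if num_links < 1 or chunk_size < 1:
        raise ValueError("num_links and chunk_size must be positive")
    lanes: list[list[tuple[int, bytes]]] = [[] for _ in range(num_links)]
    for seq, offset in enumerate(range(0, len(data), chunk_size)):
        lanes[seq % num_links].append((seq, data[offset:offset + chunk_size]))
    return lanes


def reassemble(lanes: list[list[tuple[int, bytes]]]) -> bytes:
    """Merge chunks from all links back into one byte string by sequence number."""
    chunks = sorted(chunk for lane in lanes for chunk in lane)
    return b"".join(payload for _, payload in chunks)


payload = bytes(range(256)) * 10          # 2,560 bytes of sample data
lanes = stripe(payload, num_links=4, chunk_size=64)
restored = reassemble(lanes)
print(len(lanes), "links,", len(lanes[0]), "chunks on the first link")
print("round trip intact:", restored == payload)
```

With equal-sized chunks each link carries roughly the same share of the data, which is why aggregate speed can scale with the number of links in a scheme like this.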

Vcinity’s VDAP moved a 10 GB dataset across a 3,600 mile distance in 60 seconds, we’re told. It also moved 1PB @97 Gbps over 4,350 miles in 23 hours 16 minutes, which Vcinity claimed beat Zettar. This used a 100 Gbps WAN with 70 ms round trip time.
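The two 1 PB claims can be put on the same footing by working out the average rate each one implies. The Python sketch below does that arithmetic; it assumes a decimal petabyte (10^15 bytes), which neither company's claim specifies, and the helper name `average_gbps` is ours.

```python
# Average throughput implied by the two quoted 1 PB transfers.
# Assumption: 1 PB means 10**15 bytes (decimal); the claims do not say.

PETABYTE_BYTES = 10**15


def average_gbps(num_bytes: int, hours: float) -> float:
    """Return the mean transfer rate in gigabits per second."""
    bits = num_bytes * 8
    seconds = hours * 3600
    return bits / seconds / 1e9


zettar_gbps = average_gbps(PETABYTE_BYTES, 29)             # 29 hours
vcinity_gbps = average_gbps(PETABYTE_BYTES, 23 + 16 / 60)  # 23 hours 16 minutes

print(f"Zettar:  {zettar_gbps:.1f} Gbps average")
print(f"Vcinity: {vcinity_gbps:.1f} Gbps average")
```

Under that assumption the averages come out at about 76.6 Gbps for Zettar and about 95.5 Gbps for Vcinity. The Vcinity figure is a little under the quoted 97 Gbps, which may reflect a different measurement basis. Dividing the Zettar average by its quoted 94 percent utilization would suggest a usable link capacity of roughly 81.5 Gbps, but that is our inference, not a figure supplied by Zettar.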

Vcinity’s footprint expands beyond the HPC space and it has enterprise clients in the Federal, power and utilities, oil and gas, telecommunications, healthcare, financial services, and transportation markets. AWS, Dell, GCP, IBM, Intel, Hammerspace, Snowflake and Teradata all work with Vcinity. Asked if it had arrangements with Egnyte, CTERA, Nasuni and Panzura, COO and Chief Product Officer Russ Davis said Vcinity had deals with three of the four. It is also present in the AWS and Azure marketplaces and works with Ubercloud in the engineering simulation market.

Regarding Hammerspace, Carr told us: “They are the fastest thing in the West, I think at the moment, from a filesystem perspective.”

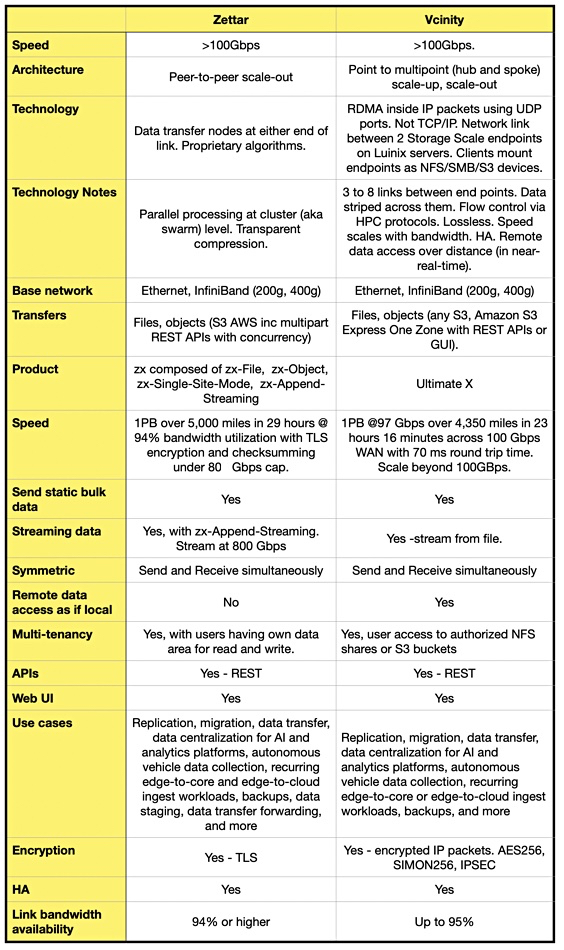

A table compares the Zettar and Vcinity offerings:

Davis said of Zettar: “They’re good. They’re fast. They’ve been focused on just the HPC world. … They’re an up and comer without a doubt, right. They can move data fast, [just] not as fast as we do.”

Carr said that Vcinity was out of the hardware business, but you still need front-end link hardware to go fast. “If you look at us, a typical VM will do a couple of gigabits per second across the LAN. Bare metal will double up that to 4, 5 Gbps. But if you want to go 10 or above, you need a piece of hardware. So we use … FPGA boards and Intel-based FPGAs that somebody could buy through distribution.” He added: “While you can choose hardware assist (like an easy-to-acquire FPGA), you are not required to use a variety of hardware for the technology to function, which is the case for Zettar.”

He noted that, with AWS: “They have this capability called F1. … It’s basically an FPGA instance that you can upload firmware to. So, even if you’re in the cloud, if you want 10 gig and above, we would just connect to an f1 instance and AWS and away you go. And that’s something that we’ve actually done a lot of testing with AWS.”

Vcinity was also AWS’ launch partner when it introduced the S3 Express One Zone storage tier.

Zettar footnote

Zettar CEO Chin Fang says Zettar does point-to-point data moving and not traffic aggregation, in which data from multiple source points is combined across a single link. He tells me: “regarding Vcinity’s claim of Transferring 1 Petabyte Across the U.S. in Just Over 23 hours/23 hours @100Gbps / ~2,800 Miles … it’s crucial to note that their achievement involves traffic aggregation across three pairs of data sources and destinations, as depicted in their Technology Brief, please see the picture below. This signifies traffic aggregation rather than a straightforward single-source, single-destination point-to-point transport.”

In his mind it’s important to distinguish between traffic aggregation and point-to-point data transport. He says: “Achieving high point-to-point speeds (single data source and single data destination) is exceedingly challenging. Vcinity’s actual capability in the context of point-to-point data transport is, at best, approximately 33.3Gbps, calculated as 100Gbps divided by 3.”
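Fang's figure is simple division, and it can be extended to show what it would mean for a 1 PB transfer. The Python sketch below follows his assumption that the 100 Gbps link is shared equally between three source-destination pairs; the decimal petabyte (10^15 bytes) and the variable names are our own assumptions.

```python
# Per-pair share of an aggregated link, following Fang's equal-split assumption.
# Assumption: 1 PB means 10**15 bytes (decimal).

LINK_GBPS = 100   # aggregate link rate cited by Fang
PAIRS = 3         # source/destination pairs, per Fang's reading of the brief

per_pair_gbps = LINK_GBPS / PAIRS

# Time for a single pair to move 1 PB on its own at that per-pair rate.
petabyte_bits = 10**15 * 8
hours_for_1pb = petabyte_bits / (per_pair_gbps * 1e9) / 3600

print(f"Per-pair rate: {per_pair_gbps:.1f} Gbps")
print(f"1 PB over one pair: {hours_for_1pb:.1f} hours")
```

On these assumptions a single pair runs at about 33.3 Gbps and would need about 66.7 hours to move 1 PB by itself. This only restates Fang's argument in numbers; it is not a measurement of either product.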