Jimmy Tam is Peer Software’s CEO and reckons Peer’s multi-master replication is something that cloud file data services gateways can’t match.

Tam talked about this in a briefing call and claimed the gateway folks, companies like CTERA, Nasuni, and Panzura, rely on having a single central copy of data to which all edge sites defer; their single source of truth, their master data file, so to speak. Maintaining this concept grows increasingly difficult as the amount of data involved increases, from tens to hundreds of petabytes and on to the exabyte level, he went on to claim.

Tam said: “How do you handle that scale? Number one, you need to create a scale-out system, right? Just like all the storage suppliers have a scale-out systems; like Dell PowerScale is a scale-out system. NetApp also has a scale-out system.”

“We have adapted our technology to accommodate scale out from a distributed system perspective. So, as our storage partner scales out, our software scales out so that we can put more and more agents to match those subsets of their scale-out architecture. And then we’re parallel processing that to distribute their distributed storage into multiple sites.”

These gateways want to replace filers in Tam’s view, but they are not primary storage suppliers: “[When] writing data [at] the edge, what we need is an overlay on top of the original storage companies that we really trust, like Dell PowerScale, previously Isilon, NetApp, Nutanix Files; we need an overlay on top of them, because we need a distributed system to make sure that the data as we wrote it to the edge gets propagated to the central sites or the cloud or wherever else we want it.”

He says that level of scale defeats restoring an affected live system from a tape backup, asking rhetorically: “Imagine if ransomware hits? Am I going to be restoring 100 petabytes? How long will that take?”
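Tam's rhetorical question can be given a rough number. The following is a back-of-the-envelope sketch only: the function name `restore_days` and the 10 GB/s sustained restore rate are illustrative assumptions, not figures from Tam or Peer Software, and real restores would also be limited by media handling, indexing, and verification.

```python
def restore_days(dataset_petabytes: float, throughput_gbytes_per_sec: float) -> float:
    """Days needed to stream a dataset back at a sustained throughput.

    Uses decimal units: 1 PB = 10**15 bytes, 1 GB = 10**9 bytes.
    """
    total_bytes = dataset_petabytes * 10**15
    seconds = total_bytes / (throughput_gbytes_per_sec * 10**9)
    return seconds / 86_400  # seconds in a day


# Hypothetical case: 100 PB restored at an assumed, sustained 10 GB/s.
days_needed = restore_days(100, 10)
print(f"{days_needed:.1f} days")
```

Even at that assumed rate, the restore runs for months rather than hours, which is the point Tam is making about failing over to a live replica instead.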

Peer with GFS v6.0 can provide replication that enables a live failover to a hot standby site, Tam claimed, saying: “It starts at how do you accommodate to move that much data every single day and that’s where the scale-out architecture, the optimizations and network traffic, as well as ability to multiple parallel process that replication across multiple sites” comes in.

Tam claims the cloud storage gateway suppliers can’t match this either: “because, ultimately, the cloud storage gateway companies are cloud storage gateways. They’re not primary storage. And at this scale, petabytes and petabytes and petabytes scale, [customers are] realising the cloud storage gateways weren’t meant to write a massive amount of files quickly, every single day.”

Tam does not want Peer to replace filers. He wants Peer GFS to move data between filers, to augment their operation, and has struck deals with filer suppliers to do this.

Multiple masters

He thinks that internationally distributed enterprises will have moving master files. A media company may create a project file set in one location and it then follows the sun and moves to another location and then another, all the while with edge sites and remote workers needing to access it.

Another part of this media business could have a separate project with its own master file set, which also moves due to project workflow needs. There are multiple master data sets and their locations change. Tam says that the Peer Global File System (GFS) has been built to handle this situation.

He said: “The concept of one datacenter, one main source of truth; that’s out the window just because how we’ve changed and adapted, especially through COVID.”

“Our customers want multi-master systems, because you never know where the source of truth is going to be the next day, because people want a very flexible workforce, where you’re going to pull teams of people together, based on expertise. If the expertise [is] in London, even though we’re a USA headquartered company, why don’t we create the centre of truth in London for that project? Hopefully, they’re doing that.”

“And then the company say, hey, we have another project coming out. The best person for that’s out of Tokyo. So let’s create Tokyo as main centre of truth for that project. And then another project spins up in New York, right. Just as the workflows are becoming much more fluid, and you’re creating pods of excellence, centres of excellence everywhere, the storage infrastructure and architecture has to be fluid and flexible to accommodate that as well.”

The idea of having a single data center of truth in a public cloud can’t cope. Whatever happens anywhere around the world, in all the traditional cloud storage gateway edge sites, gets loaded up to the single centre of truth in the cloud. So when Tokyo comes online, after Turin goes away, it’s there up in the Amazon cloud ready and waiting for you. What Tam is saying is that’s just too slow. Datasets are so huge now that you cannot do that. What you have to do instead is keep a constant amount of replication going on between the various edge sites, because you cannot have a single centre because it simply can’t keep up.

Tam said: “That’s exactly it. When you make the cloud the source of truth, you’ve done that for simplicity’s sake, to accommodate the IT administrator, but you’re not serving the customer.”

He said: “We see the cloud as what they were originally meant for. Number one is going to be its powerful elasticity. So I’m creating all this data in these multi-master systems on premises everywhere. The cloud is going to be my ultimate repository for my backup and my archives, to make sure that I have everything stored in one place and have it right. That is my final and full copy of everything.”

Peer’s replication supports a hybrid on-premises to public cloud environment.

An AWS Storage blog, “Create a cross-platform distributed file system with Amazon FSx for NetApp ONTAP”, says “most organizations would like to enjoy the benefits of the cloud while leveraging their existing on-premise file assets to create a highly resilient hybrid enterprise file share. This can bring the challenging requirement of having different storage systems that exist at the edge, in the data center, and AWS to work together seamlessly, even in the face of disaster events.”

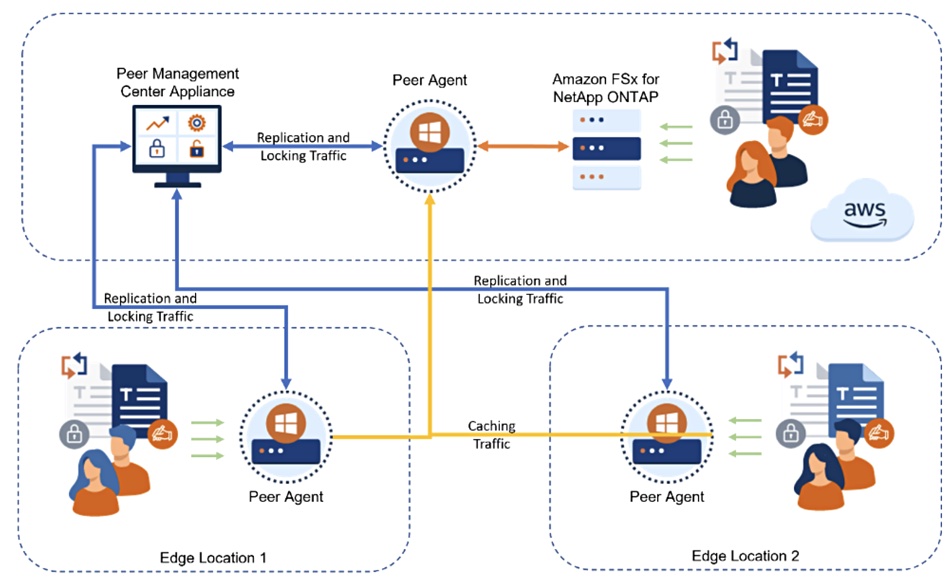

Blogger Randy Seamans writes: “I explore how Peer Software’s Global File Service (“PeerGFS”) allows customers to access files from the edge, data centers, and AWS through the use of cross-platform file replication, synchronization, and caching technologies. I also explore how to prevent version conflicts across active-active storage systems through integrated distributed file locking. I utilize Amazon FSx for NetApp ONTAP as the repository of record in AWS and Windows SMB, NetApp, Nutanix, or Dell storage for the on-premises edge and data center storage.”

He covers four situations:

- File caching from FSx for ONTAP to on-premises edge Windows file storage

- Distributed file system between on premises, edge, and data center storage with FSx for ONTAP

- Continuous data protection and high availability from on-premises storage to FSx for ONTAP

- Migration from on-premises storage to FSx for ONTAP

Caption: A typical configuration of PeerGFS file caching from FSx for ONTAP to two on-premises Windows file servers.
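The distributed file locking that Seamans mentions can be pictured with a toy example. This is a minimal sketch of the general idea only, not PeerGFS's implementation: the `LockTable` class, the site names, and the file path are all hypothetical, and a real product would need to coordinate locks across a network and cope with site failures.

```python
from dataclasses import dataclass, field


@dataclass
class LockTable:
    """Toy in-memory stand-in for a lock table shared by every site."""

    holders: dict[str, str] = field(default_factory=dict)  # file path -> owning site

    def acquire(self, path: str, site: str) -> bool:
        # The first site to ask becomes the owner; later sites are refused
        # until the owner releases, so two sites never edit the same file at once.
        owner = self.holders.setdefault(path, site)
        return owner == site

    def release(self, path: str, site: str) -> None:
        # Only the current owner may release the lock.
        if self.holders.get(path) == site:
            del self.holders[path]


locks = LockTable()
path = "/projects/film/cut01.mov"  # hypothetical shared file

london_ok = locks.acquire(path, "london")    # London opens the file first
tokyo_ok = locks.acquire(path, "tokyo")      # Tokyo is refused while London holds it
locks.release(path, "london")                # London closes the file
tokyo_retry = locks.acquire(path, "tokyo")   # Tokyo can now take over

print(london_ok, tokyo_ok, tokyo_retry)
```

In an active-active setup, a refusal like Tokyo's first attempt is what stops two sites from producing conflicting versions of the same file before replication catches up.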

The public cloud is a globally distributed set of data centers

Much as Tam separates Peer GFS use from an edge to a single data center or cloud center master data set idea, he says the cloud is not, in fact, a single location. It’s composed of regions and zones, each with their own data center infrastructure. A filer’s instantiation in the cloud, like FSx ONTAP, isn’t necessarily a single instantiation. There could be FSx ONTAP instances in many zones and regions for resilience and also for follow-the-sun type working.

Tam said: “The customer’s requirement is this: if one availability zone goes down, and I log back in to the next availability zone, where it’s still live, how do I make sure that all my profiles, my virtual desktop profiles, and the data is also there? Because Central is down? You will need a distributed system, you need an active-active distributed system. … AWS is missing a distributed system, so AWS partnered with us.”

In other words, there is a need for multi-master file replication inside a public cloud, and Peer has an arrangement with AWS to support this for AWS’s first-party managed FSx ONTAP service.

Peer is partnering with Liquidware to provide, as an AWS blog title says, “Amazon WorkSpaces Multi-Region Resilience with ProfileUnity and PeerGFS”. This works with FSx ONTAP, and makes it possible “to replicate a user’s profile and data to standby Amazon WorkSpaces in a separate Region. This offers a business continuity and disaster recovery solution for your end users to remain productive during disruptive events.”

A Liquidware blog, “How to Configure Amazon WorkSpaces Multi-Region Resilience with Liquidware ProfileUnity and PeerGFS”, discusses this and explains how “to replicate a user’s profile and data to the standby WorkSpaces offering a true business continuity and disaster recovery solution ensuring your end users remain productive during disruptive events.”

We can go further. We are in a multi-cloud era, with enterprises not wishing to be locked in to a single public cloud provider if they can avoid it. Tam said: “A government entity here in the USA that has mandated a resilient multi-cloud strategy. Because the government, the military, cannot put all their eggs in one basket, they need that risk abatement.”

But “AWS can only do it with their traditional active-passive technology with manual failover. But, in our world, everything is active-active and in real time.”

So, as night follows day, there is also a need for replication between file processing instances across public clouds. Now, with Peer providing the dataset mobility plumbing to facilitate this, between, say AWS and Azure, we have multi-master, multi-cloud replication.