You don’t. It’s too much data and takes too long. That’s the view of Jason Lohrey, founder and CEO at Australian data management company Arcitecta.

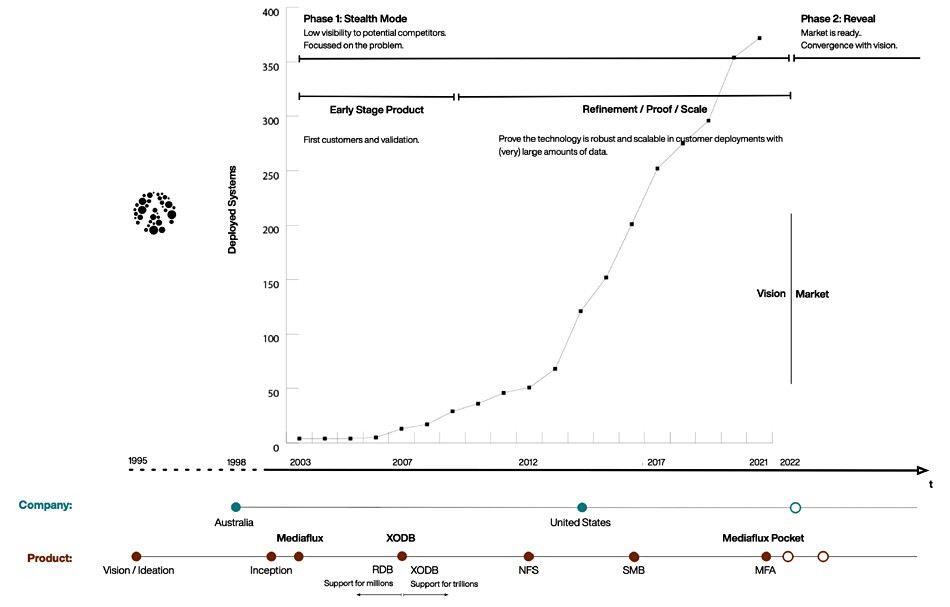

He founded the company in 1998 and grew it without venture capital funding to provide its Mediaflux data management software. It now has more than 350 customers, with many in the life sciences and research community, and has decided to exit its stealth mode of operation.

Lohrey’s outfit maintains that “for years, IT administrators have worked hard to back up an increasing tsunami of data, and with each passing year, that has become harder to manage. In some cases, backup has been abandoned altogether.”

Arcitecta’s point is that once datasets reach 100PB, it is simply not feasible to back them up because it takes too long: “When we have 100 petabytes, we’ll be walking away from Veeam or Rubrik or Veritas or Commvault or whoever.”

The company’s claim is that its Mediaflux product will protect the data. How? “Traditionally, backup systems sit outside of the file system. But when you actually are the file system … you are continuously backing things up.”

The software can do that because: “As in our secret sauce, we’ve actually merged the database and the file system into one.” Lohrey is referring to the data set and a metadata database describing the data.

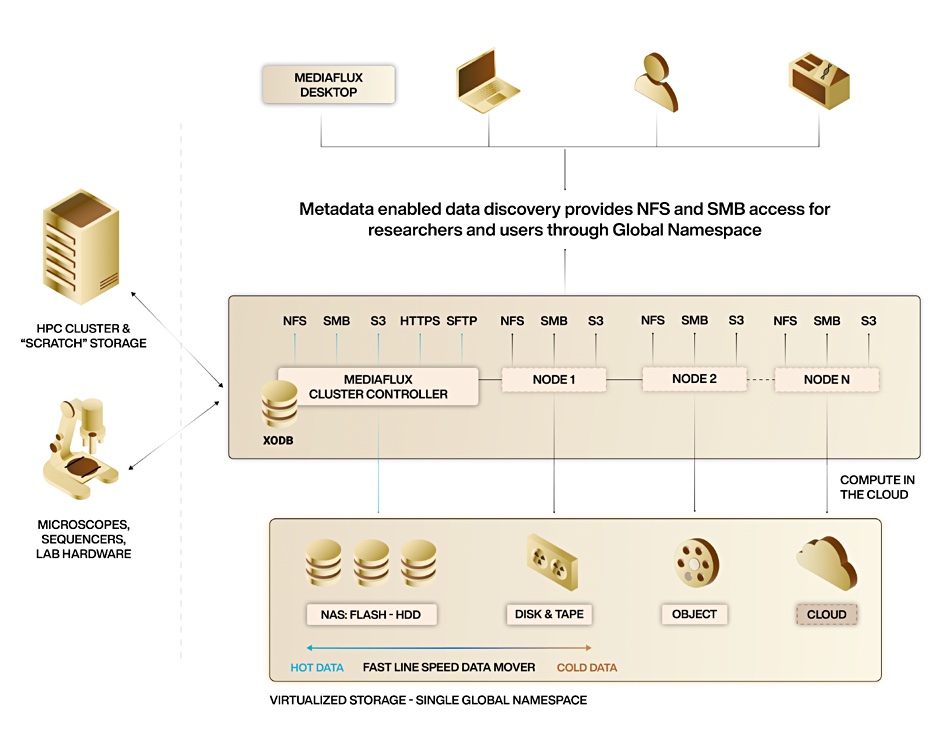

Mediaflux is a storage-virtualizing data management software layer that presents a single global namespace to clients (accessing systems) and can manage and access all kinds of data on any storage system. There are Mediaflux desktop agents that enable users and researchers to access files in this namespace via NFS and SMB protocols. They connect to a scale-out Mediaflux controller which, in turn, reads and writes data from/to primary SSD and HDD storage, secondary disk, tertiary disk and tape, and also public cloud storage. It supports file and S3 protocols.

Mediaflux maintains a metadata store, its own in-house, highly compressed NoSQL database, that includes versions of all data objects. That means if miscreants penetrate a customer’s system and corrupt, encrypt or delete files, the Mediaflux controller simply reverts to an earlier point-in-time copy and effectively restores the file to a good state.
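To make the versioning idea concrete, here is a minimal Python sketch of a store that keeps every version of an object and can revert to an earlier point in time. It is an illustration only and not Arcitecta’s implementation: the `VersionedStore` class, its method names and the integer timestamps are all hypothetical, and it assumes writes arrive in non-decreasing timestamp order.

```python
from bisect import bisect_right
from dataclasses import dataclass, field


@dataclass
class VersionedStore:
    """Hypothetical in-memory store that never overwrites old versions."""

    # path -> list of (timestamp, content); content is None for a deletion.
    # Assumption: entries are appended in non-decreasing timestamp order.
    _history: dict = field(default_factory=dict)

    def write(self, path, timestamp, content):
        # Append a new version instead of replacing the previous one.
        self._history.setdefault(path, []).append((timestamp, content))

    def delete(self, path, timestamp):
        # A deletion is just another version whose content is None.
        self.write(path, timestamp, None)

    def read(self, path, as_of=None):
        """Return the latest content, or the content as of a given time."""
        versions = self._history.get(path, [])
        if as_of is None:
            return versions[-1][1] if versions else None
        # Find the last version written at or before `as_of`.
        idx = bisect_right([t for t, _ in versions], as_of)
        return versions[idx - 1][1] if idx else None

    def revert(self, path, as_of, now):
        """Restore the content from `as_of` by writing it as a new version."""
        good = self.read(path, as_of)
        self.write(path, now, good)
        return good


store = VersionedStore()
store.write("results.csv", 1, b"good data")
store.write("results.csv", 5, b"ENCRYPTED")    # simulated ransomware write
store.revert("results.csv", as_of=3, now=6)    # roll back to before the attack
print(store.read("results.csv"))
```

In this sketch the corrupted version is kept in the history rather than erased, so the rollback is itself just another version.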

Lohrey described his approach to databases: “I’ve got a great interest in actual information density reduction. So how do you represent huge amounts of things in the smallest possible space so that the database is really the core ingredient for this to work at scale.”

Livewire

The Mediaflux software suite includes a Livewire data mover that uses the metadata database. It moves files regardless of size or type and scales as needed by coalescing small files together or splitting large files into optimal chunk sizes for the underlying compute or network infrastructure.
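The coalesce-and-split idea can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not Livewire’s actual algorithm: the `plan_transfers` function and the single fixed `chunk_size` are hypothetical, whereas the real product is described as choosing chunk sizes to suit the underlying infrastructure.

```python
def plan_transfers(files, chunk_size):
    """Group files into transfer units of at most `chunk_size` bytes.

    `files` is a list of (name, size_in_bytes) tuples. Each returned unit is
    a list of (name, offset, length) pieces that would be sent together.
    """
    units = []
    batch, batch_bytes = [], 0
    for name, size in files:
        if size >= chunk_size:
            # Large file: split it into chunk-sized pieces, one per unit.
            offset = 0
            while offset < size:
                length = min(chunk_size, size - offset)
                units.append([(name, offset, length)])
                offset += length
        else:
            # Small file: coalesce it with other small files until the
            # current batch would exceed the chunk size.
            if batch and batch_bytes + size > chunk_size:
                units.append(batch)
                batch, batch_bytes = [], 0
            batch.append((name, 0, size))
            batch_bytes += size
    if batch:
        units.append(batch)
    return units


files = [("a.txt", 10), ("b.txt", 20), ("scan.raw", 250), ("c.txt", 80)]
for unit in plan_transfers(files, chunk_size=100):
    print(unit)
```

Here the 250-byte file becomes three units, the two smallest files travel together, and the 80-byte file gets its own unit because adding it would push the batch past the limit.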

Livewire is positioned as an end-to-end data management system, not just a data mover, that manages the overall transmission pipeline, including storage at each end, and optimizes file transfers across limited, highly contended or high-latency networks.

Arcitecta says it can move billions of files at ultra-fast, nearline speeds across global distances and over high-latency networks. Livewire and Mediaflux gained awards at an International Data Mover Challenge at Supercomputing Asia 2022 for their ability to move data at scale. Livewire has been clocked at over 10.5GB/s on a 100GbE network and Arcitecta says it can transmit multiple terabytes of data in minutes across the globe over a similar network.
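A quick back-of-the-envelope check puts those numbers in context. The Python sketch below assumes the quoted figure means 10.5 gigabytes per second in decimal units, sustained for the whole transfer with no protocol or storage overhead; the 5TB example size is an arbitrary choice for illustration.

```python
RATE_BYTES_PER_S = 10.5e9        # assumption: 10.5 GB/s, decimal units
LINE_RATE_BYTES_PER_S = 100e9 / 8  # 100 Gbit/s Ethernet = 12.5 GB/s
TB = 1e12
PB = 1e15


def transfer_time_seconds(num_bytes, rate_bytes_per_s=RATE_BYTES_PER_S):
    """Idealized transfer time at a constant sustained rate."""
    return num_bytes / rate_bytes_per_s


# Fraction of the raw 100GbE line rate that the quoted figure represents.
utilization = RATE_BYTES_PER_S / LINE_RATE_BYTES_PER_S

minutes_for_5tb = transfer_time_seconds(5 * TB) / 60
days_for_100pb = transfer_time_seconds(100 * PB) / 86_400

print(f"link utilization: {utilization:.0%}")
print(f"5TB:   {minutes_for_5tb:.1f} minutes")
print(f"100PB: {days_for_100pb:.0f} days")
```

Under these assumptions, a few terabytes do move in minutes, while a full 100PB copy over the same link would take on the order of months, which is consistent with the argument that backing up datasets of that size by copying them takes too long.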

Livewire can be purchased as a standalone product or as a fully-integrated component of the Mediaflux data management product.