Four block storage suppliers build their offerings on public cloud ephemeral storage instances. So can that storage be persistent despite being ephemeral?

Yes and no. Let’s take the three public clouds one by one and look at their ephemeral storage, and then take a gander at each of the four suppliers that provide storage software in the cloud based on this ephemeral storage.

Amazon

The Elastic Compute Cloud (Amazon EC2) service provides on-demand, scalable computing capacity in the AWS cloud. An Amazon user guide says the EC2 instance store provides temporary block-level storage for instances. This storage is located on disks that are physically attached to the host computer. Specifically, the virtual devices for instance store volumes are named ephemeral0 through ephemeral23, with the number identifying each ephemeral volume. The data on an instance store volume persists only during the life of the associated instance: if you stop, hibernate, or terminate an instance, any data on its instance store volumes is lost. That’s why it’s called ephemeral.
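To make the ephemeral0 to ephemeral23 naming concrete, here is a minimal Python sketch that builds the kind of `BlockDeviceMappings` list an EC2 launch request accepts, mapping each instance store virtual name to a device name. The helper name `instance_store_mappings` and the choice of `/dev/sdb` onward as device names are illustrative assumptions, not AWS requirements. AWS also documents that on NVMe-based instance types the instance store volumes are attached automatically, so such a mapping mainly matters on older instance types.

```python
import string

# Instance store virtual names run from ephemeral0 to ephemeral23.
MAX_INSTANCE_STORE_VOLUMES = 24


def instance_store_mappings(count: int) -> list[dict[str, str]]:
    """Build EC2 BlockDeviceMappings entries for `count` instance store volumes.

    Hypothetical helper: the /dev/sdb, /dev/sdc, ... device names are an
    illustrative choice, not something AWS mandates.
    """
    if not 0 <= count <= MAX_INSTANCE_STORE_VOLUMES:
        raise ValueError(f"count must be between 0 and {MAX_INSTANCE_STORE_VOLUMES}")
    letters = string.ascii_lowercase[1:]  # 'b'..'z': 25 letters, enough for 24 volumes
    return [
        {"DeviceName": f"/dev/sd{letters[i]}", "VirtualName": f"ephemeral{i}"}
        for i in range(count)
    ]


if __name__ == "__main__":
    for mapping in instance_store_mappings(2):
        print(mapping)
```

A list like this could be passed as the `BlockDeviceMappings` argument of boto3's `ec2.run_instances`. Whatever the mapping, data on these volumes still disappears when the instance is stopped, hibernated or terminated.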

EC2 instances can also use Amazon Elastic Block Store (EBS) volumes, which provide durable, block-level storage that you can attach to a running instance. An EBS volume persists independently of the running life of an instance.

Azure

Azure’s ephemeral OS disks, which reside on local SSDs, are different from its persistent disks. They are storage components attached locally to a virtual machine instance, placed either in the VM cache or on the VM temp disk, and their contents are not available after the instance terminates.

Azure documentation explains: “Data Persistence: OS disk data written to OS disk are stored in Azure Storage” whereas with ephemeral OS disk: “Data written to OS disk is stored on local VM storage and isn’t persisted to Azure Storage.”

When a VM instance restarts, the contents of its persistent storage are still available. Not so with ephemeral OS disks, where the SSDs used can have fresh contents written to them by another public cloud user after the instance terminates.

The Azure documentation makes it clear: “Ephemeral OS disks are created on the local virtual machine (VM) storage and not saved to the remote Azure Storage. … With Ephemeral OS disk, you get lower read/write latency to the OS disk and faster VM reimage.”
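As a rough illustration of how an ephemeral OS disk is requested, the Python sketch below builds the `osDisk` fragment of an Azure Resource Manager (ARM) template's `storageProfile`. It sets `diffDiskSettings.option` to `"Local"`, uses `ReadOnly` caching (which Azure requires for ephemeral OS disks) and creates the disk from an image. The mapping of the article's "VM Cache" and "VM Temp disk" to the `CacheDisk` and `ResourceDisk` placement values, and the helper name `ephemeral_os_disk`, are assumptions for illustration.

```python
import json

# Assumed mapping from the article's terms to ARM "placement" values:
# VM cache -> CacheDisk, VM temp disk -> ResourceDisk.
PLACEMENTS = {"cache": "CacheDisk", "temp": "ResourceDisk"}


def ephemeral_os_disk(placement: str = "cache") -> dict:
    """Return an osDisk fragment for an ARM template storageProfile (hypothetical helper).

    diffDiskSettings.option = "Local" asks Azure to place the OS disk on local
    VM storage instead of remote Azure Storage; ephemeral OS disks need
    ReadOnly caching and an image-based create option.
    """
    if placement not in PLACEMENTS:
        raise ValueError(f"placement must be one of {sorted(PLACEMENTS)}")
    return {
        "createOption": "FromImage",
        "caching": "ReadOnly",
        "diffDiskSettings": {"option": "Local", "placement": PLACEMENTS[placement]},
    }


if __name__ == "__main__":
    print(json.dumps({"storageProfile": {"osDisk": ephemeral_os_disk("temp")}}, indent=2))
```

Nothing in this fragment points at Azure Storage, which is exactly why the disk contents do not survive the instance.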

Also: “Ephemeral OS disks are free, you incur no storage cost for OS disks.” That is important for our four suppliers below, as we shall see.

Google

Google also makes a distinction between local ephemeral SSDs and persistent storage drives. A web document reads: “Local solid-state drives (SSDs) are fixed-size SSD drives, which can be mounted to a single Compute Engine VM. You can use local SSDs on GKE to get highly performant storage that is not persistent (ephemeral) that is attached to every node in your cluster. Local SSDs also provide higher throughput and lower latency than standard disks.”

It explains: “Data written to a local SSD does not persist when the node is deleted, repaired, upgraded or experiences an unrecoverable error. If you need persistent storage, we recommend you use a durable storage option (such as persistent disks or Cloud Storage). You can also use regional replicas to minimize the risk of data loss during cluster lifecycle or application lifecycle operations.”

Google Kubernetes Engine (GKE) documentation reads: “You can use local SSDs for ephemeral storage that is attached to every node in your cluster.” Such raw block SSDs have an NVMe interface. “Local SSDs can be specified as PersistentVolumes. You can create PersistentVolumes from local SSDs by manually creating a PersistentVolume, or by running the local volume static provisioner.”

Such “PersistentVolume resources are used to manage durable storage in a cluster. In GKE, a PersistentVolume is typically backed by a persistent disk. You can also use other storage solutions like NFS. … the disk and data represented by a PersistentVolume continue to exist as the cluster changes and as Pods are deleted and recreated.”
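The manual route can be sketched in Python as a builder for a Kubernetes `local` PersistentVolume manifest. The key detail is the required `nodeAffinity`: the PersistentVolume object outlives Pods, but it is pinned to the one node whose SSD holds the data, so losing that node still loses the data. The mount path `/mnt/disks/ssd0`, the `local-storage` StorageClass, the node name and the helper name `local_ssd_pv` are hypothetical; the 375G default reflects the fixed size of a Google Cloud local SSD.

```python
import json


def local_ssd_pv(name: str, node: str, path: str = "/mnt/disks/ssd0",
                 capacity: str = "375G") -> dict:
    """Build a PersistentVolume manifest for a node-local SSD (hypothetical helper).

    Assumes the SSD is already formatted and mounted at `path` on `node`,
    and that a StorageClass named "local-storage" exists.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": capacity},
            "volumeMode": "Filesystem",
            "accessModes": ["ReadWriteOnce"],
            "persistentVolumeReclaimPolicy": "Retain",
            "storageClassName": "local-storage",
            "local": {"path": path},
            # Local volumes must declare node affinity: the PV object persists,
            # but the data lives only on this one node's SSD.
            "nodeAffinity": {
                "required": {
                    "nodeSelectorTerms": [{
                        "matchExpressions": [{
                            "key": "kubernetes.io/hostname",
                            "operator": "In",
                            "values": [node],
                        }]
                    }]
                }
            },
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON manifests as well as YAML.
    print(json.dumps(local_ssd_pv("ssd0-pv", "gke-node-1"), indent=2))
```

This is the sense in which local SSDs are "persistent" only up to a point: the volume survives Pod deletion and recreation, but not deletion or repair of the node itself.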

Our takeaway

In summary, we could say the ephemeral storage supplied by AWS, Azure, and Google for VMs and containers is unprotected media attached to a compute instance. This storage is typically faster and much cheaper than persistent storage, and it comes without storage services such as snapshotting, compression, deduplication, or replication.

Suppliers such as Silk, Lightbits, Volumez and Dell, with its APEX Block Storage, provide public cloud offerings that enhance either the native ephemeral media or persistent block storage, adding resiliency, performance, and the data services organizations require.

Let’s have a look at each of the four.

Dell APEX Block Storage

Alone among the mainstream incumbent vendors, Dell offers block storage built on ephemeral cloud storage, called Dell APEX Block Storage. AWS says Dell APEX Block Storage for AWS is the PowerFlex software deployed in its public cloud: “With APEX Block Storage, customers can move data efficiently from ground to cloud or across regions in the cloud. Additionally, it offers enterprise-class features such as thin provisioning, snapshots, QoS and volume migration across storage pools.”

There is a separate Dell APEX Data Storage Services Block offering, which is not a public cloud offering using AWS, Azure or Google infrastructure. Instead, customers use the capacity and maintain complete operational control of their workloads and applications, while Dell owns and maintains the PowerFlex-based infrastructure, located on-premises or in a Dell-managed interconnected colocation facility. There is a customer-managed option as well.

A Dell spokesperson tells us that, in terms of differentiation, Dell APEX Block Storage offers customers two deployment options: a performance-optimized configuration that uses EC2 instances with ephemeral instance store attached, and a balanced configuration that uses EC2 instances with EBS volumes (persistent storage) attached.

Dell says APEX Block Storage delivers millions of IOPS by scaling linearly to up to 512 storage instances and/or 2,048 compute instances in the cloud. It claims unique Multi-AZ durability, stretching data across three or more availability zones without having to replicate it.

Lightbits

Lightbits documentation states: “Persistent storage is necessary to be able to keep all our files and data for later use. For instance, a hard disk drive is a perfect example of persistent storage, as it allows us to permanently store a variety of data. … Persistent storage helps in resolving the issue of retaining the more ephemeral storage volumes (that generally live and die with the stateless apps).”

A key point of differentiation regarding ephemeral (local) storage is that it requires running the compute and the storage in the same VM or instance, so the user cannot scale them independently.

It says its storage software creates “Lightbits persistent volumes [that] perform like local NVMe flash. … Lightbits persistent volumes may even outperform a single local NVMe drive.” By using Lightbits persistent volumes in a Kubernetes environment, applications can get local NVMe flash performance and maintain the portability associated with Kubernetes pods and containers.

Abel Gordon, chief system architect at Lightbits, told us: “While the use of local NVMe devices on the public cloud provides excellent performance, users should be cognizant of the tradeoffs, such as data protection – because the storage is ephemeral – scaling, and lack of data services. Lightbits offers an NVMe/TCP clustered architecture with performance that is comparable to the instances with local NVMe devices. With Lightbits there is no limitation with regard to the IOPS per gigabyte; IOPS can be in a single volume or split across hundreds of volumes. The cost is fixed and predictable – the customer pays for the instances that are running Lightbits and the software licenses … For Lightbits there is no additional cost for IOPS, throughput, or essential data services.”

Silk

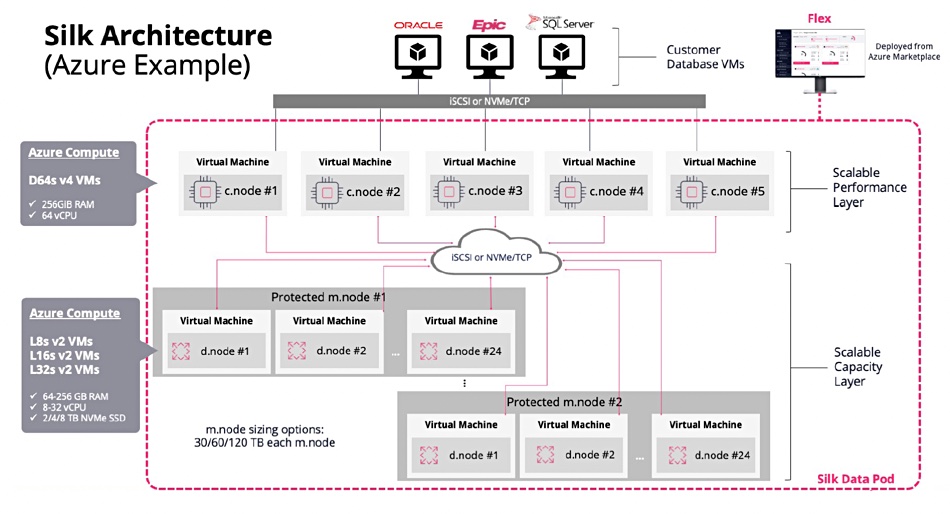

Silk, which supplies storage based on ephemeral disks for AWS, Azure and Google Cloud, runs two sets of Azure Compute instances in the customer’s own subscription. The first layer (c.nodes, or compute nodes) provides the block data services to the customer’s database systems, while the second layer (d.nodes, or data nodes) persists the data.

Tom O’Neil, Silk’s VP products, told B&F: “Silk optimizes performance and minimizes cost for business-critical applications running in the cloud built upon Microsoft SQL Server and Oracle and deployed on IaaS. Other solutions are focused more on containerized workloads or hybrid cloud use cases.”

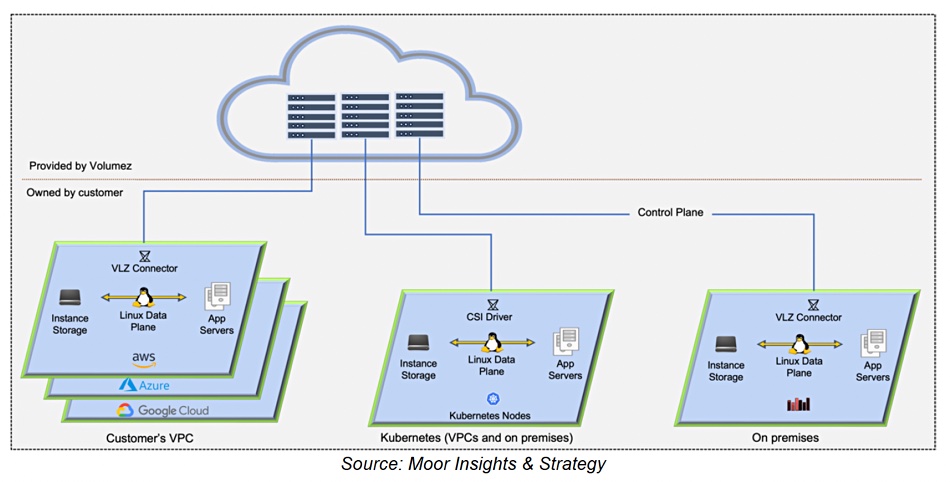

Volumez

Volumez is composable infrastructure software for block and file storage in the cloud (AWS, Azure) that developers use to request storage resources, much as they request CPU and memory resources in Kubernetes. It separates the cloud-hosted Volumez storage control plane from the data plane, which runs in customer virtual private clouds and datacenters. The Volumez control plane connects NVMe instance storage to compute instances over the network, and composes a dedicated, pure Linux storage stack for data services on each compute instance. The Volumez data path runs directly from raw NVMe media to compute servers, eliminating the need for storage controllers and software-defined storage services.

The vendor says it “profiles the performance and capabilities of each infrastructure component and uses this information to compose direct Linux data paths between media and applications. Once the composing work is done, there is no need for the control plane to be in the way between applications and their data. This enables applications to get enterprise-grade logical volumes, with extreme guaranteed performance, and enterprise-grade services that are built on top of Linux – such as snapshots, thin provisioning, erasure coding, and more.”

It claims to provide unparalleled low latency, high bandwidth, and unlimited scalability. A Volumez white paper with more details is available for download.