Red Hat today released OpenShift Container Storage 4.5 to deliver Kubernetes services for cloud-native applications via an automated data pipeline.

Mike Piech, Red Hat cloud storage and data services GM, said in his launch statement: “As organizations continue to modernise cloud-native applications, an essential part of their transformation is understanding how to unlock the power of data these applications are generating.

“With Red Hat OpenShift Container Storage 4.5 … we’ve taken a significant step forward in our mission to make enterprise data accessible, resilient and actionable to applications across the open hybrid cloud.”

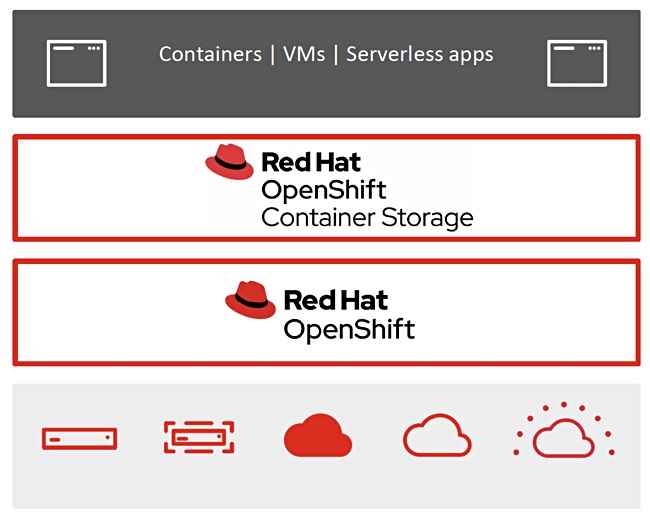

OpenShift is Red Hat’s container orchestrator, built atop Kubernetes. Ceph open source storage provides a data plane for the OpenShift environment.

The automated data pipeline is built on a notification-driven architecture, with integrated access to Red Hat AMQ Streams and OpenShift Serverless. AMQ Streams is a massively scalable, distributed, high-performance data streaming platform based on the Apache Kafka project.

OpenShift Serverless enables users to build and run applications so that, when a trigger event occurs, the application automatically scales up to meet incoming demand and scales back to zero after use.
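The scale-up and scale-to-zero behaviour can be illustrated with a simplified calculation. This is a minimal sketch, not OpenShift Serverless's actual autoscaling algorithm: the function name `desired_replicas` and the `target_concurrency` and `max_replicas` parameters are hypothetical, chosen only to show the idea of sizing an application to the number of requests in flight.

```python
import math


def desired_replicas(in_flight_requests, target_concurrency=10, max_replicas=20):
    """Return how many application instances to run for the current load.

    Hypothetical model: each instance handles up to `target_concurrency`
    concurrent requests, and an idle application is scaled to zero.
    """
    if target_concurrency <= 0:
        raise ValueError("target_concurrency must be positive")
    if in_flight_requests <= 0:
        # No pending events: release all instances (scale to zero).
        return 0
    # Round up so that a partially loaded instance is still provisioned,
    # and cap the result at the configured maximum.
    return min(max_replicas, math.ceil(in_flight_requests / target_concurrency))
```

With these assumed defaults, an idle application runs no instances, a single incoming request starts one, and a burst of 25 concurrent requests calls for three.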

Red Hat says that, with the recent release of OpenShift Virtualization, users can host virtual machines and containers on a single, integrated platform which includes OpenShift Container Storage. VMware is taking a similar approach with its Tanzu project.

New features in OpenShift Container Storage 4.5 include:

- Single, integrated storage facility for containers and virtual machines.

- Shared read-write-many (RWX) block access for enhanced performance.

- External mode deployment option with Red Hat Ceph Storage, which can deliver enhanced scalability to more than 10 billion objects without compromising performance.

- Integrated Amazon S3 bucket notifications, enabling users to create an automated data pipeline to ingest, catalog, route and process data in real time.
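As an illustration of the bucket-notification pipeline in the last item, the sketch below shows how a consumer might catalog and route newly ingested objects. It assumes the notification payload follows the Amazon S3 event message structure (a `Records` list with `eventName` and `s3.bucket.name` / `s3.object.key` fields); the route names and the `route_for` and `handle_notification` helpers are hypothetical and not part of any Red Hat API.

```python
import json

# Hypothetical routing table: file extension -> downstream processing stage.
ROUTES = {
    ".csv": "tabular-ingest",
    ".jpg": "image-analysis",
    ".log": "log-indexing",
}


def route_for(key):
    """Pick a processing stage from the object key's extension."""
    for suffix, stage in ROUTES.items():
        if key.lower().endswith(suffix):
            return stage
    return "default"


def handle_notification(payload):
    """Parse one S3-style notification message and return routing decisions."""
    event = json.loads(payload)
    routed = []
    for record in event.get("Records", []):
        # Only react to newly created objects; ignore deletions and others.
        if "ObjectCreated" not in record.get("eventName", ""):
            continue
        s3 = record["s3"]
        key = s3["object"]["key"]
        routed.append({
            "bucket": s3["bucket"]["name"],
            "key": key,
            "size": s3["object"].get("size", 0),
            "route": route_for(key),
        })
    return routed
```

In a deployment like the one described, a function of this kind would typically sit behind a Kafka topic or a serverless trigger, so that each notification is processed as it arrives rather than by polling the bucket.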