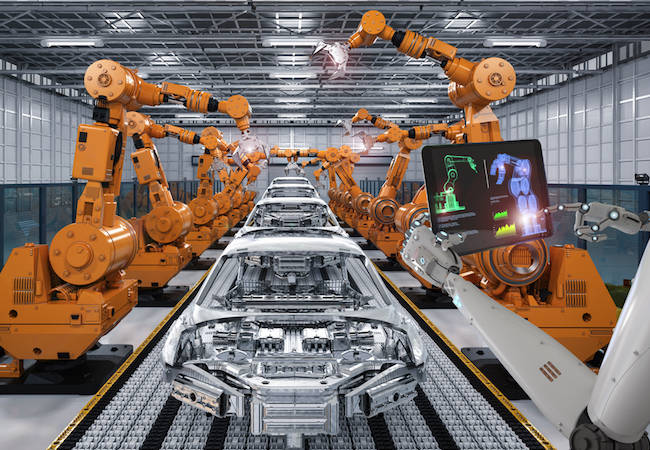

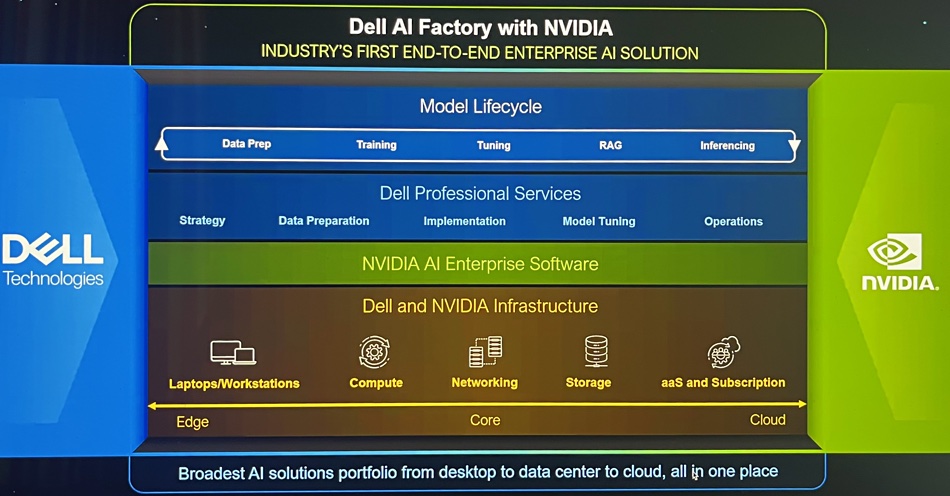

Dell is building an Nvidia-based AI Factory so customers can use Dell servers, storage, and networking to serve proprietary data to RAG-integrated AI inferencing systems for better analyses.

Update: PowerScale performance numbers and product availability added. 19 March 2024.

Dell claims that, by 2025, two thirds of businesses will use a combination of GenAI and Retrieval-Augmented Generation (RAG) for domain-specific self-service knowledge discovery. This will, according to an IDC AI report, improve decision efficacy by 50 percent. Dell says its AI portfolio will help customers adopt GenAI better than any other supplier’s.

Wedbush financial analyst Matt Bryson told subscribers: “The ripple impact that starts with the golden Nvidia chips is now a tidal wave of spending hitting the rest of the tech world for the coming years. We estimate a $1 trillion-plus of AI spending will take place over the next decade as the enterprise and consumer use cases proliferate globally in this Fourth Industrial Revolution.”

Dell wants its AI portfolio to ride this tidal wave, and it has six components:

- AI Factory

- GenAI with Nvidia and RAG

- GenAI model training

- PowerEdge servers with Nvidia GPUs

- PowerScale storage for Nvidia’s SuperPOD systems

- Dell data lakehouse

These are buttressed by Dell’s professional services organization and supplied through its APEX subscription-based business model.

The AI Factory is the end-to-end system that includes the other items on the list. It is slated to integrate Dell’s compute, storage, client device, and software capabilities with Nvidia’s AI infrastructure and software suite, underpinned by a high-speed networking fabric.

The GenAI infrastructure is composed of PowerEdge servers with Nvidia GPUs, PowerScale scale-out filers, and PowerSwitch networking, with RAG added on top using Nvidia microservices such as NeMo. Dell says new data can be rapidly ingested to update the semantic search database. However, as we have noted, ingesting all of an organization’s proprietary data into a semantic search database is a non-trivial exercise.
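To make the RAG ingestion point concrete, here is a minimal Python sketch of incremental ingestion into a semantic search index, followed by retrieval to build a prompt. It is not Dell’s or Nvidia’s code. The hashed bag-of-words `embed` function is an assumption standing in for a real embedding model (in Dell’s design that role would be filled by Nvidia microservices), and `SemanticIndex`, `build_prompt`, and the sample documents are hypothetical names and data chosen purely for illustration.

```python
import math
import re
import zlib
from collections import Counter

# Hypothetical toy embedding: hashed bag-of-words, L2-normalised.
# A production RAG stack would call a trained embedding model instead.
DIM = 1024


def embed(text: str) -> list[float]:
    vec = [0.0] * DIM
    for token, count in Counter(re.findall(r"[a-z0-9]+", text.lower())).items():
        # crc32 gives a stable bucket across runs (unlike Python's salted hash()).
        vec[zlib.crc32(token.encode()) % DIM] += count
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class SemanticIndex:
    """Hypothetical in-memory vector store; real systems use a vector database."""

    def __init__(self) -> None:
        self.chunks: list[str] = []
        self.vectors: list[list[float]] = []

    def ingest(self, chunks: list[str]) -> None:
        # New data is embedded and appended; the LLM itself is not retrained.
        for chunk in chunks:
            self.chunks.append(chunk)
            self.vectors.append(embed(chunk))

    def search(self, query: str, k: int = 2) -> list[str]:
        q = embed(query)
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = [
            (sum(a * b for a, b in zip(q, vec)), chunk)
            for vec, chunk in zip(self.vectors, self.chunks)
        ]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [chunk for _, chunk in scored[:k]]


def build_prompt(question: str, index: SemanticIndex, k: int = 2) -> str:
    # Retrieved chunks are prepended to the question before it goes to the model.
    context = "\n".join(f"- {c}" for c in index.search(question, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


if __name__ == "__main__":
    index = SemanticIndex()
    index.ingest([
        "Q1 revenue in the EMEA region rose 12 percent.",
        "The warranty covers parts and labour for three years.",
    ])
    # Later, fresh data arrives and is ingested without rebuilding anything.
    index.ingest(["The GPU cluster maintenance window is Sunday 02:00 UTC."])
    print(build_prompt("When is the GPU cluster maintenance window?", index, k=1))
```

Ingesting a new chunk here only appends one vector, which is why RAG can pick up fresh data quickly without retraining the model. The costly part at enterprise scale is chunking, embedding, and indexing everything an organization holds in the first place.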

Dell has reference architectures combining its server, storage, and networking gear with Nvidia GPUs and software. Its x86 servers include the R760xa, XE8640, and XE9680. The Nvidia GPUs include the L40S and H100, but newer models will be supported too.

The XE9680 will support Nvidia’s H200 GPU plus the newly announced and more powerful air-cooled B100 and the liquid-cooled HGX B200. The HGX H200 is said to have twice the performance and half the TCO of the HGX H100.

Dell will also support Nvidia’s GB200 superchip, which is claimed to deliver up to 20x better processing performance and 40x lower TCO for at-scale inferencing than the eight-way HGX H100. The GB200 is positioned as a real-time inferencing chip capable of running multi-trillion-parameter models.

All this kit can be used, Dell says, for both AI model training and inference. It can use BlueField-3 Ethernet or InfiniBand networking. The PowerEdge R760xa uses Nvidia’s Omniverse OVX 3.0 platform, while the XE9680 has Nvidia’s Spectrum-X Ethernet AI fabric.

Dell’s PowerScale storage now has Nvidia DGX SuperPOD validation, the first Ethernet-connected storage to get that qualification, with PowerScale claimed to exceed the SuperPOD benchmark requirements. Dell has not disclosed specific benchmark numbers.

A Dell PowerScale Solutions Overview document says PowerScale now (the PCIe 5-based F710 with OneFS 9.7) has 2x faster streaming write and read performance than the previous generation (the PCIe 3-based F600 with OneFS 9.4), plus up to 90 percent higher performance/watt and up to a 2.5x improvement in high-concurrency workloads.

In GPUDirect terms, the F600 delivered 1.26 GBps/node on sequential writes and 5.26 GBps/node on sequential reads. A 2x improvement would give the F710 2.52 GBps/node write bandwidth and 10.52 GBps/node read bandwidth. Other systems go much faster: DDN’s AI400X2 Turbo does 37.5 GBps/node when writing and 60 GBps/node when reading.
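The per-node arithmetic can be checked with a short Python sketch. It uses only the figures quoted in this article and assumes, as the article does, that Dell’s “2x faster streaming” claim scales both GPUDirect figures uniformly; the projected F710 numbers are therefore an extrapolation, not measured results. The function names `project` and `ratio` are hypothetical.

```python
# Per-node GPUDirect figures quoted in this article, in GBps.
F600 = {"write": 1.26, "read": 5.26}
DDN_AI400X2_TURBO = {"write": 37.5, "read": 60.0}

# Assumption: Dell's "2x faster streaming" claim scales both GPUDirect
# figures uniformly. This is an extrapolation, not a measured F710 result.
SPEEDUP = 2.0


def project(baseline: dict[str, float], factor: float) -> dict[str, float]:
    """Scale each per-node bandwidth figure by the claimed speedup."""
    return {op: round(bw * factor, 2) for op, bw in baseline.items()}


def ratio(faster: dict[str, float], slower: dict[str, float]) -> dict[str, float]:
    """How many times higher each figure in `faster` is than in `slower`."""
    return {op: round(faster[op] / slower[op], 1) for op in slower}


f710 = project(F600, SPEEDUP)
ddn_lead = ratio(DDN_AI400X2_TURBO, f710)

for op in ("write", "read"):
    print(f"{op}: F600 {F600[op]} -> F710 ~{f710[op]} GBps/node, "
          f"DDN AI400X2 Turbo ~{ddn_lead[op]}x higher")
```

On these assumptions the DDN system’s per-node lead is roughly 15x on writes and under 6x on reads.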

The data lakehouse element of this soup-to-nuts AI portfolio uses Starburst Presto software, Kubernetes-organized lakehouse system software, and scale-out object storage based on Dell’s ECS, ObjectScale or PowerScale storage products.

Availability

- Dell AI Factory with NVIDIA is available globally through traditional channels and Dell APEX now.

- Dell PowerEdge XE9680 servers with NVIDIA B200 Tensor Core GPUs, NVIDIA B100 Tensor Core GPUs and NVIDIA H200 Tensor Core GPUs have expected availability later this year.

- Dell Generative AI Solutions with NVIDIA – RAG is available globally through traditional channels and Dell APEX now.

- Dell Generative AI Solutions with NVIDIA – Model Training will be available globally through traditional channels and Dell APEX in April 2024.

- The Dell Data Lakehouse is now available globally.

- Dell PowerScale is validated with NVIDIA DGX SuperPOD with DGX H100 and NVIDIA OVX solutions now.

- The Dell Implementation Service for RAG is available in select locations starting May 31.

- Dell infrastructure deployment services for model training are available in select locations starting March 29.

Bootnote

The “IDC Futurescape: Worldwide Artificial Intelligence and Automation 2024 predictions” report costs $7,500.