IDC’s converged systems tracker for Q2 2020 shows the market declining in what IDC called a “difficult quarter”. But HCI sales grew well in China and Japan, and HPE did well with its HCI systems.

As is now traditional, VMware led Nutanix, and the two were a long way ahead of the trailing pair, HPE and Cisco.

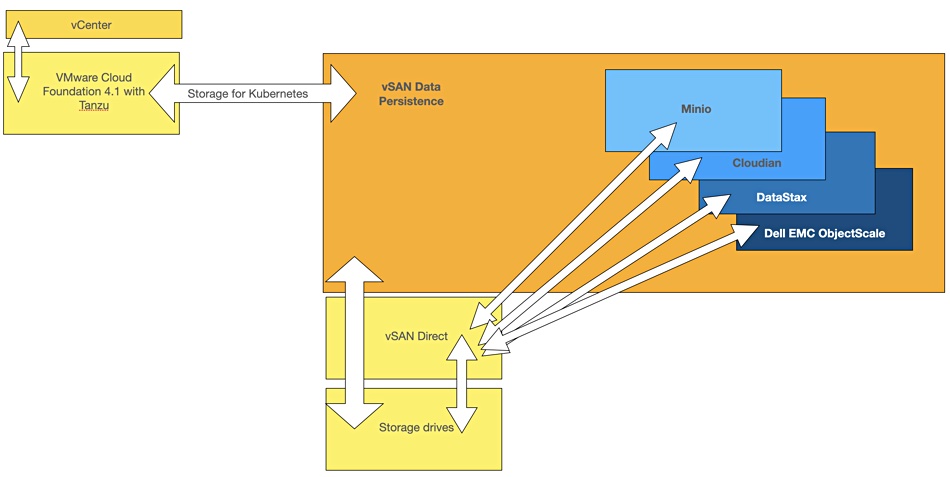

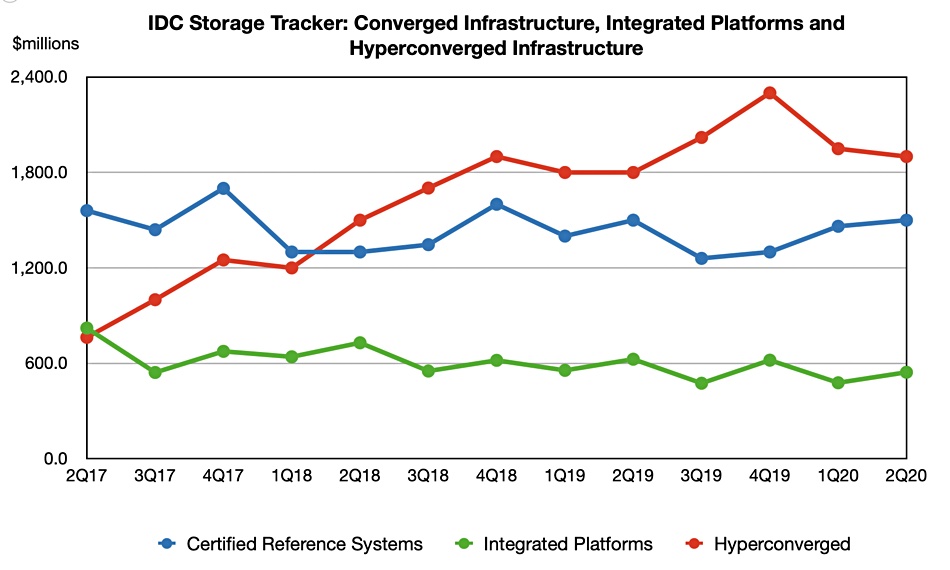

IDC splits the market three ways:

- HCI – $1.9bn revenues, 1.1 per cent growth, 47.1 per cent revenue share

- Certified Reference Systems & Integrated Infrastructure – $1.5bn revenues, 7.6 per cent decline, 39.1 per cent revenue share

- Integrated Platforms – $544m revenues, 13.1 per cent decline, 13.8 per cent revenue share
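Because IDC's revenue figures are rounded, the three segment revenues do not line up exactly with the shares. A back-of-envelope Python sketch, assuming the $544m Integrated Platforms figure is the least rounded and the shares are exact, estimates the total market from that one segment and applies each share to it. The variable names are illustrative only:

```python
# Q2 2020 revenue shares (per cent) from IDC, as listed above
shares_pct = {
    "HCI": 47.1,
    "Certified reference systems & integrated infrastructure": 39.1,
    "Integrated platforms": 13.8,
}
# Assumption: the least-rounded revenue figure in the list, in $m
integrated_platforms_revenue_m = 544.0

# Estimate the total converged systems market from one segment and its share
total_m = integrated_platforms_revenue_m / (shares_pct["Integrated platforms"] / 100)
print(f"Implied total converged systems market: about ${total_m / 1000:.2f}bn")

# Apply each share to that estimated total to get implied segment revenues
for segment, share in shares_pct.items():
    print(f"{segment}: about ${total_m * share / 100 / 1000:.2f}bn")
```

On this estimate the total is roughly $3.94bn, which puts HCI nearer $1.86bn than the rounded $1.9bn.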

IDC senior research analyst Paul Maguranis said in a statement: “The certified reference systems & integrated infrastructure and integrated platforms segments both declined this quarter while the hyperconverged systems segment was able to witness modest growth despite headwinds in the market.”

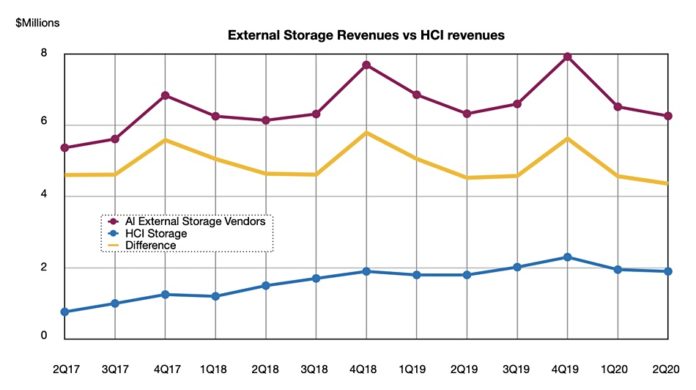

Charting revenue share trends since 2017 shows that certified reference systems like FlexPod are closing the gap with HCI (hyperconverged infrastructure), as HCI revenues have declined from a peak at the end of 2019:

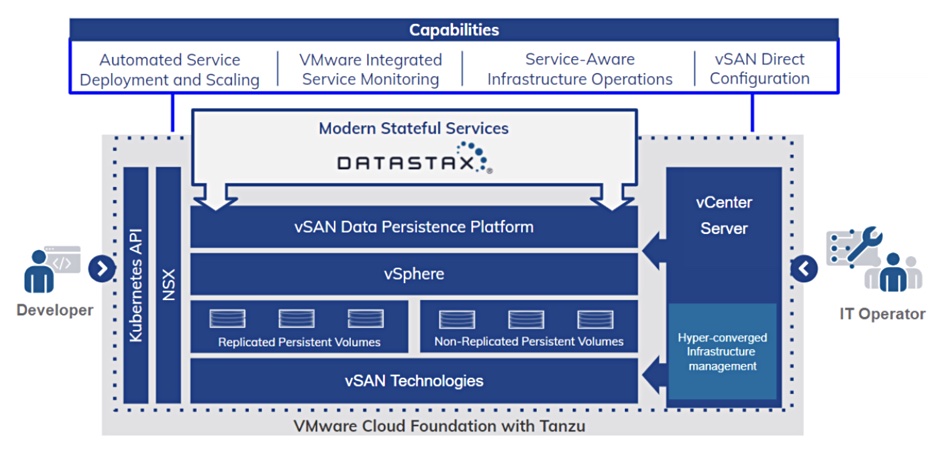

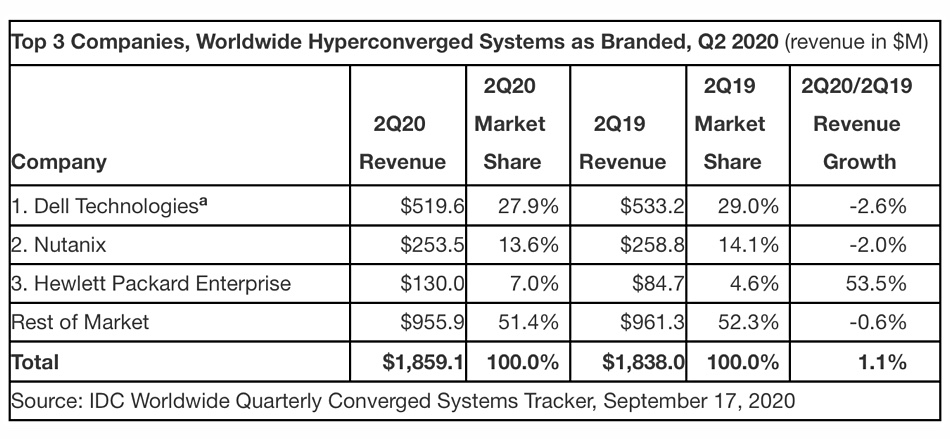

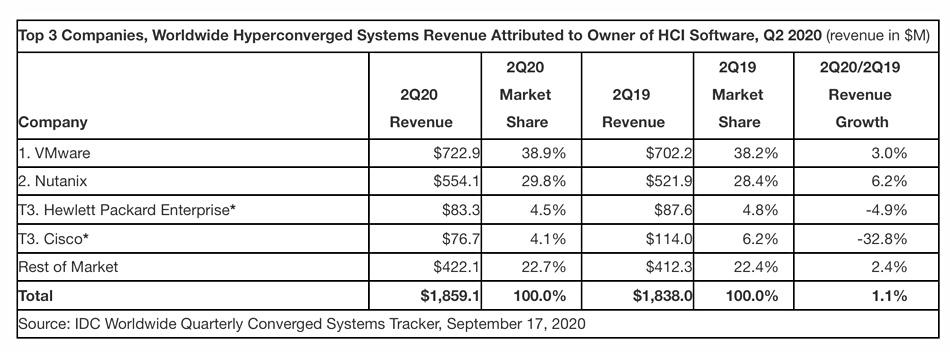

IDC ranks the top three vendors in the HCI market in two ways: by branded product sales and, separately, by HCI software owner. The distinction matters because VMware and Nutanix HCI software are both sold by other vendors, such as HPE.

Branded HCI sales numbers show a growth spurt by HPE:

HPE’s 53.5 per cent Y/Y revenue growth, from $84.7m to $130m, stands out against declines at Dell, Nutanix and the rest of the market. Slicing revenues by software owner reveals a quite different picture.
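The growth figure can be checked from the two revenue numbers. A minimal Python sketch; the helper name `yoy_growth_pct` is hypothetical and the inputs are IDC's rounded figures in $m:

```python
def yoy_growth_pct(prior: float, current: float) -> float:
    """Year-over-year change, as a percentage of the prior-year value."""
    if prior == 0:
        raise ValueError("prior-year revenue must be non-zero")
    return (current - prior) / prior * 100.0


# HPE branded HCI revenue in $m, 2Q19 and 2Q20, as reported by IDC
hpe_2q19 = 84.7
hpe_2q20 = 130.0

growth = yoy_growth_pct(hpe_2q19, hpe_2q20)
print(f"HPE branded HCI growth: {growth:.1f} per cent")  # prints 53.5
```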

By software owner, HPE’s 2Q20 revenues were $83.3m, meaning it sold $46.7m ($130m – $83.3m) of other vendors’ HCI software running on its servers. We understand this to be a mix of VMware and Nutanix software. Excluding it, sales of HPE’s own SimpliVity HCI (the only HPE product IDC counts here) fell 4.9 per cent. HPE notes that Nimble dHCI grew 112 per cent year-over-year in the period.
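The split between HPE's own and third-party HCI software is a simple subtraction. This Python sketch uses IDC's rounded 2Q20 figures in $m; the year-ago SimpliVity number it derives from the 4.9 per cent fall is a back-of-envelope estimate, not an IDC figure:

```python
# 2Q20 figures in $m, from IDC as reported above
hpe_branded = 130.0   # all HCI systems sold under the HPE brand
hpe_sw_owner = 83.3   # HCI revenue where HPE owns the software (SimpliVity)

# Revenue from HPE-branded systems running other vendors' HCI software
third_party_sw = hpe_branded - hpe_sw_owner
print(f"Third-party HCI software on HPE servers: ${third_party_sw:.1f}m")

# Estimate only: a 4.9 per cent fall implies year-ago revenue = current / (1 - 0.049)
simplivity_2q19_est = hpe_sw_owner / (1 - 0.049)
print(f"Implied 2Q19 SimpliVity revenue: about ${simplivity_2q19_est:.1f}m")
```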

The software-owner table also shows Cisco’s HCI revenues falling 32.8 per cent Y/Y, while both VMware and Nutanix outgrew the market.

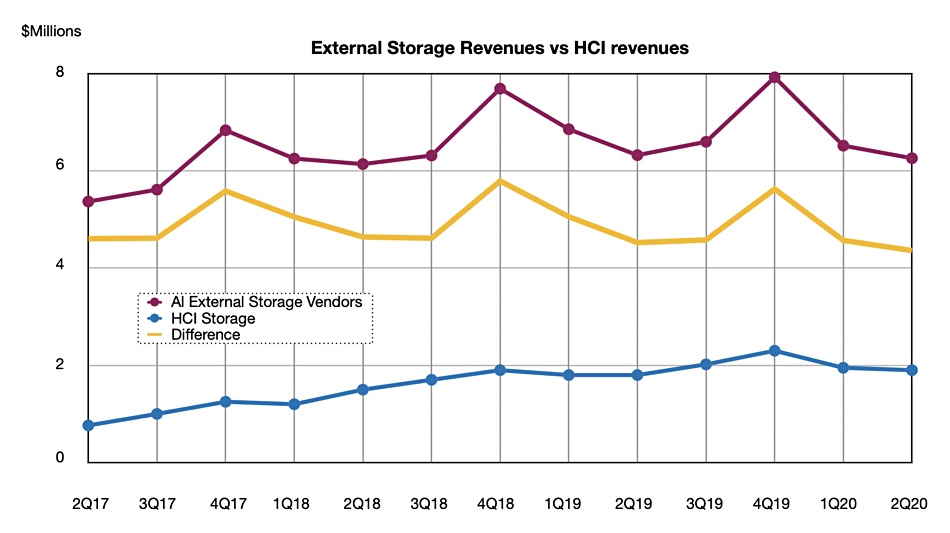

We checked whether HCI is taking more revenue from the overall external storage market. IDC’s Q2 numbers for the two storage categories are:

- HCI – $1.9bn revenue, 1.1 per cent growth Y/Y

- External storage – $6.26bn revenue, 1.1 per cent decline Y/Y
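One simple way to quantify the comparison is the ratio of HCI revenue to external storage revenue, with last year's values backed out from the Y/Y changes. This is a back-of-envelope Python sketch using IDC's rounded figures; the helper `implied_prior` is hypothetical, and the ratio is not necessarily how the gap charted in this article is defined:

```python
def implied_prior(current: float, yoy_pct: float) -> float:
    """Back out last year's value from this year's value and its Y/Y change."""
    return current / (1 + yoy_pct / 100.0)


# Q2 2020 revenue in $bn and Y/Y change in per cent, from IDC as reported above
hci_now, hci_yoy = 1.9, 1.1
ext_now, ext_yoy = 6.26, -1.1

ratio_now = hci_now / ext_now
ratio_prior = implied_prior(hci_now, hci_yoy) / implied_prior(ext_now, ext_yoy)

print(f"HCI / external storage revenue, Q2 2019 (implied): {ratio_prior:.1%}")
print(f"HCI / external storage revenue, Q2 2020: {ratio_now:.1%}")
```

On these rounded inputs the ratio edges up from roughly 29.7 to 30.4 per cent, so HCI gained a little ground relative to external storage over the year.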

Charting the longer-term trends shows a growing gap between the two:

The HCI-external storage sales gap (blue line on chart) has declined for the most recent two quarters, in what could be a seasonal pattern: in each year on the chart, the blue line peaks in the fourth quarter, when HCI sales rise further than external storage sales.