The focus this week is on Cisco and Cohesity partnering to help security ops people fight malware, TrendFocus’s disk ship data for the third quarter, showing a PC drive increase, and exec changes at DDN’s Tintri business unit.

Cohesity links Helios to Cisco’s SecureX

Cohesity’s Helios management system has been integrated with Cisco’s SecureX security risk monitoring and response system. It means SecureX admins can see information from Cohesity’s DataProtect product (anomaly spotting, resource management, migration, and backup and recovery status) in their dashboard, alongside SecureX’s existing ability to automatically aggregate signals from networks, endpoints, clouds, and apps.

This aggregation and correlation of Cisco and Cohesity information should help an IT security team see the emergence of malicious activity more quickly and fully, view operational performance, and shorten threat response times. The scope of ransomware attacks can be better understood, and the security operations (SecOps) team can initiate a workflow to restore compromised data or workloads to the last clean snapshot.
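To make the correlation idea concrete, here is a minimal Python sketch under stated assumptions: it pairs a backup-side anomaly (the kind of signal Cohesity DataProtect reports) with an endpoint alert on the same host if the two fall within a time window. The `Signal` record, the `"endpoint"`/`"backup"` source labels, `correlate` and the 30-minute window are all hypothetical illustrations, not the SecureX or Helios API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

@dataclass(frozen=True)
class Signal:
    source: str       # hypothetical label: "endpoint" or "backup"
    host: str         # machine the signal refers to
    time: datetime    # when the signal was raised
    detail: str       # free-text description

def correlate(signals, window=timedelta(minutes=30)):
    """Return (endpoint, backup) pairs on the same host whose timestamps
    fall within `window` of each other. The window size is an assumption."""
    endpoint_alerts = [s for s in signals if s.source == "endpoint"]
    backup_anomalies = [s for s in signals if s.source == "backup"]
    pairs = []
    for b in backup_anomalies:
        for e in endpoint_alerts:
            if e.host == b.host and abs(b.time - e.time) <= window:
                pairs.append((e, b))
    return pairs

if __name__ == "__main__":
    t0 = datetime(2021, 11, 1, 9, 0)
    signals = [
        Signal("endpoint", "fs01", t0, "suspicious process"),
        Signal("backup", "fs01", t0 + timedelta(minutes=10), "change-rate spike"),
        Signal("backup", "db02", t0 + timedelta(minutes=5), "change-rate spike"),
    ]
    for e, b in correlate(signals):
        print(f"{e.host}: {e.detail} + {b.detail}")
```

Here only `fs01` is flagged, because it is the one host where both kinds of signal line up in time; a real platform would use far richer matching than host name and timestamp.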

Al Huger, Cisco’s VP and GM of Security Platform and Response, said: “Cisco SecureX’s comprehensive security platform offers customers a system-wide view of security threats and issues. Adding the Cohesity Helios data protection and … data management solution to Cisco SecureX provides businesses with superior ransomware detection and response capabilities.”

Cohesity is now a Cisco Secure Technical Alliance Partner and a member of Cisco’s security ecosystem. The Cohesity-Cisco relationship has enabled:

- Cohesity Helios as a validated, S3-compatible backup, disaster recovery, and long-term retention solution for Cisco Secure Workload (formerly Cisco Tetration);

- Cohesity ClamAV app on the Cohesity Marketplace, based on Cisco’s open source ClamAV antivirus engine;

- Cohesity secure single sign-on (SSO) integrated with Cisco Duo.

Every Cisco Secure product includes Cisco SecureX. The integrated solution and support are generally available from Cisco worldwide.

Tintri exec churn

DDN’s Tintri business unit has seen three senior executives leave, two new ones appointed and one promoted:

Phil Trickovic was appointed SVP of Revenue for Tintri in April, coming from two years at Diamanti. He had previously been Tintri’s VP of worldwide Sales and Marketing.

General manager and SVP Field Operations Tom Ellery resigned in June to join Kubernetes-focussed StormForge.

Paul Repice, Tintri’s VP Channel Sales for the Americas and Federal, left in March this year to join Datadobi as its VP Sales Americas.

Amy Mollat-Medeiros, SVP Corporate Marketing & Inside Sales for Tintri and DDN brands, resigned in June and joined Tom Ellery at StormForge to become SVP Marketing and SDR.

Graham Breeze was appointed Tintri’s VP of Products in March and came from 18 months at Diamanti. He’d also been at Tintri before, in the office of the CRO.

Christine Bachmayer was promoted to run Tintri’s EMEA marketing in April.

Recent Tintri Glassdoor reviews are uniformly negative. We hear changes are coming.

TrendFocus disk ship data: PC drive shipments increase

Thank you Wells Fargo analyst Aaron Rakers for telling subscribers that, according to TrendFocus’s disk ship data, about 67.8 million units were shipped in 2021’s third quarter, up seven per cent year-on-year. Seagate had the leading share, at 42 per cent, Western Digital was ascribed around 37.5 per cent and Toshiba the rest, some 21 per cent.
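As a back-of-the-envelope check, this short Python sketch turns the quoted shares into approximate unit counts and backs out the implied year-ago total. It uses only the figures in the paragraph above; the shares are rounded and sum to 100.5 per cent, so the per-vendor numbers are approximations, not TrendFocus data.

```python
TOTAL_UNITS_M = 67.8  # Q3 2021 disk shipments, millions of units
SHARES_PCT = {"Seagate": 42.0, "Western Digital": 37.5, "Toshiba": 21.0}
YOY_GROWTH = 0.07     # "up seven per cent year-on-year"

def units_by_vendor(total_m, shares_pct):
    """Approximate units (millions) per vendor from percentage shares."""
    return {vendor: total_m * pct / 100 for vendor, pct in shares_pct.items()}

if __name__ == "__main__":
    for vendor, units in units_by_vendor(TOTAL_UNITS_M, SHARES_PCT).items():
        print(f"{vendor}: ~{units:.1f}M")
    # Rounded shares, so this is slightly over 100.
    print(f"Shares sum to {sum(SHARES_PCT.values()):.1f}%")
    print(f"Implied year-ago total: ~{TOTAL_UNITS_M / (1 + YOY_GROWTH):.1f}M")
```

That works out to roughly 28.5 million Seagate, 25.4 million Western Digital and 14.2 million Toshiba drives, against an implied year-ago total of about 63.4 million.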

It’s estimated that 19.3 million nearline disk drives were shipped, totalling more than 250EB of capacity. This compares to the year-ago quarter when 13.3 million nearline disks hit the streets. Rakers thinks Seagate and Western Digital have near-equal nearline disk ship shares, at 43 to 44 per cent each.
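The nearline figures imply two useful derived numbers: year-on-year unit growth and average capacity per drive. A minimal Python sketch, using only the quoted figures and assuming decimal storage units (1EB = 1,000,000TB, as drive makers count); because capacity is "more than 250EB", the average is a lower bound.

```python
NEARLINE_UNITS_M = 19.3     # Q3 2021, millions of drives
YEAR_AGO_UNITS_M = 13.3     # Q3 2020, millions of drives
NEARLINE_CAPACITY_EB = 250  # "more than 250EB", so treat as a lower bound

def yoy_growth_pct(current, prior):
    """Year-on-year change as a percentage."""
    return (current / prior - 1) * 100

def avg_capacity_tb(capacity_eb, units_m):
    """Average capacity per drive in TB, using decimal units (1EB = 10**6 TB)."""
    return capacity_eb * 1_000_000 / (units_m * 1_000_000)

if __name__ == "__main__":
    growth = yoy_growth_pct(NEARLINE_UNITS_M, YEAR_AGO_UNITS_M)
    avg = avg_capacity_tb(NEARLINE_CAPACITY_EB, NEARLINE_UNITS_M)
    print(f"Unit growth: {growth:.1f}%")
    print(f"Average capacity: at least {avg:.1f}TB")
```

On these numbers nearline unit shipments grew by about 45 per cent, and the average nearline drive held roughly 13TB or more.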

TrendFocus estimates there were ~21 million 2.5-inch mobile and consumer electronics disk drives shipped, down from ~26 million a year ago.

There were ~23.5-24.0 million 3.5-inch desktop/CE disk units shipped in the third quarter, an unexpected increase on the year-ago 21.5 million drives.
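The same year-on-year arithmetic applied to the client drive categories gives the size of the shifts. A minimal Python sketch using only the estimates quoted above; the 3.5-inch figure is a range, so it yields a range of growth rates.

```python
def yoy_change_pct(current_m, prior_m):
    """Year-on-year change in unit shipments, as a percentage."""
    return (current_m / prior_m - 1) * 100

# Figures in millions of units; the 3.5-inch estimate is given as a range.
mobile_25 = yoy_change_pct(21.0, 26.0)
desktop_35_low = yoy_change_pct(23.5, 21.5)
desktop_35_high = yoy_change_pct(24.0, 21.5)

if __name__ == "__main__":
    print(f"2.5-inch mobile/CE: {mobile_25:+.1f}%")
    print(f"3.5-inch desktop/CE: {desktop_35_low:+.1f}% to {desktop_35_high:+.1f}%")
```

That is roughly a 19 per cent fall for 2.5-inch drives and a 9 to 12 per cent rise for 3.5-inch drives.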

Shorts

Civo, a pure-play cloud native service provider powered by Kubernetes, announced general availability of its production-ready managed Kubernetes platform. It claimed that, at launch, it is the fastest managed Kubernetes provider in the world — deploying a fully usable cluster in under 90 seconds.

Cohesity has joined the Dutch Cloud Community — an association of hosting, cloud and internet service providers — as a supporting member. So what? Mark Adams, Cohesity’s Regional Director NEUR, said: “We are keen to work with the cloud community to offer either a customer-managed solution, or our Cohesity-managed SaaS implementation, or as some organisations prefer, a mix of both offerings. Together with this community, we will help service providers to consolidate silos and unleash the power of data and drive profitable growth for cloud and managed services platforms.”

DataStax, which supplies the Astra DB serverless database built on Cassandra, has added new capabilities to the open-source GraphQL API, enabling developers to build applications with Apache Cassandra faster and manage multimodel data with Apollo. The API is available in Stargate, the open-source data gateway.

Cloud-based file collaborator Egnyte announced its Enterprise Plan ransomware protection is now available as part of its entry-level Business Plan (which starts at $20 per user per month). The offering can detect more than 2000 ransomware signatures, block attacks immediately, and automatically alert admins of the infected endpoint. New signatures are crowdsourced daily. It is also announcing a Ransomware Recovery solution as part of its Enterprise package. The recovery capability allows customers to “look back” at previous file snapshots to determine at which point ransomware infected a file and restore data to that point with a single click.
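The "look back" idea reduces to finding the newest snapshot taken before the file was infected. A minimal Python sketch, assuming snapshots are identified by sorted timestamps and the infection time is already known; `last_clean_snapshot` is a hypothetical helper, not Egnyte’s API.

```python
from bisect import bisect_left
from datetime import datetime

def last_clean_snapshot(snapshot_times, infected_at):
    """Return the newest snapshot time strictly before `infected_at`.

    `snapshot_times` must be sorted in ascending order. Returns None when
    no snapshot predates the infection.
    """
    # bisect_left finds the first snapshot at or after the infection time;
    # everything to its left was taken before the file was infected.
    i = bisect_left(snapshot_times, infected_at)
    return snapshot_times[i - 1] if i > 0 else None

if __name__ == "__main__":
    snaps = [datetime(2021, 11, 1, h) for h in (0, 6, 12, 18)]
    print(last_clean_snapshot(snaps, datetime(2021, 11, 1, 14, 30)))
```

A snapshot taken at exactly the infection time is treated as suspect; that is a conservative design choice, and a real product may decide differently.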

The latest version of FileCloud’s cloud-agnostic enterprise file sync, sharing and data governance product integrates with Microsoft Teams, so that organisations can share files and links from a single workspace. FileCloud can be self-hosted on-premises, operated as IaaS, or accessed in the cloud.

Iguazio, calling itself the MLOps (machine learning operations) company, today announced its software’s availability in the AWS Marketplace. The software automates machine learning (ML) pipelines end-to-end, and Iguazio claims it accelerates deployment of artificial intelligence (AI) to production by 12x.

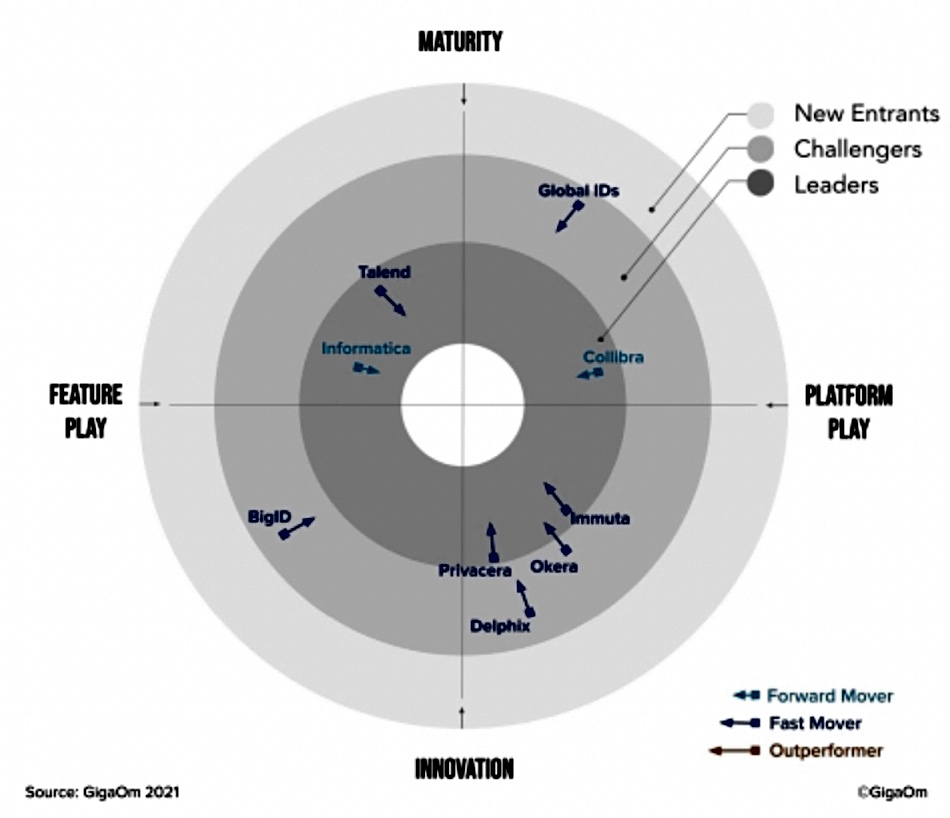

Immuta, a universal cloud data access control supplier, announced it was named a Leader in the GigaOm Radar Report for Data Governance Solutions. It says it is positioned in the Leader category as a “Fast Mover,” and claims to be the most innovative data access control provider, ahead of all the others.

iXsystems and Futurex have announced the integration of iXsystems’ TrueNAS Enterprise with Futurex’s Key Management Enterprise Server (KMES) Series 3 and Futurex’s VirtuCrypt Enterprise Key Management. This uses the Key Management Interoperability Protocol (KMIP) and enables centralised key management for TrueNAS.

Kingston Technology Europe announced its forthcoming DDR5 UDIMMs have received Intel Platform Validation, and claims this is the first and arguably most important milestone in validating compatibility between Kingston DDR5 memory and Intel platforms using DDR5.

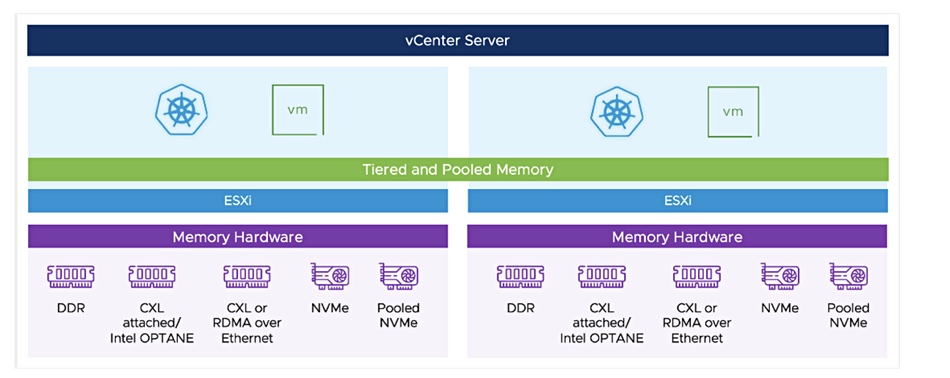

Lenovo has joined Nvidia’s early access program in support of Project Monterey, which uses the BlueField-2 SmartNIC to offload work from host server CPUs. It means Lenovo’s ThinkAgile VX and ThinkSystem ReadyNodes will support the BlueField-2 SmartNIC.

Scalable, high-performance file system supplier ObjectiveFS has announced its v6.9 release. This includes new features and performance and efficiency improvements, such as integrated Azure Blob storage support, Oracle Cloud support, macOS extended ACLs, and better cache performance, memory usage and compaction efficiency. For the full list of updates in the 6.9 release, see the release note.

Phison offers two grades of its Write Intensive SSD. The standard grade comes in a 2TB capacity and is capable of sustained writes of 1GB/sec. Its write endurance is 3,000TB — compared to a typical consumer-level SSD’s endurance of around 600TB. The pro grade is available in either 1 or 2TB capacities, both capable of sustained writes of 2.5GB/sec — more than three times a typical SSD’s speed of 0.8GB/sec. The 1TB pro grade SSD delivers write endurance of 10,000TB, and the 2TB 20,000TB.

In a heavy workload writing 10TB a day (ten drive writes a day for a 1TB drive), a standard grade SSD will survive for 300 days of sustained work, and the pro grade 1TB and 2TB models for 1,000 and 2,000 days respectively, possibly outlasting the machines they’re running in. A typical SSD running the same workload will survive only 60 days. Phison is now offering write-intensive SSDs through OEMs that serve professional users, such as PNY.
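The day counts above follow from dividing each drive’s rated write endurance by a fixed 10TB-a-day workload (ten drive writes a day on a 1TB drive). A minimal Python sketch reproducing that arithmetic; the endurance figures come from the Phison paragraphs, and the constant daily load is the stated assumption.

```python
DAILY_WRITES_TB = 10  # fixed heavy workload: 10TB/day (10 drive writes/day on 1TB)

ENDURANCE_TB = {
    "Phison standard 2TB": 3_000,
    "Phison pro 1TB": 10_000,
    "Phison pro 2TB": 20_000,
    "typical consumer SSD": 600,
}

def days_of_life(endurance_tb, daily_writes_tb=DAILY_WRITES_TB):
    """Days until rated write endurance is used up at a constant daily load."""
    return endurance_tb / daily_writes_tb

if __name__ == "__main__":
    for name, tbw in ENDURANCE_TB.items():
        print(f"{name}: {days_of_life(tbw):.0f} days")
```

This prints 300, 1,000, 2,000 and 60 days. If the workload instead scales with each drive’s own capacity (ten full writes a day on a 2TB drive is 20TB a day), the 2TB models halve: `days_of_life(3_000, 20)` gives 150 days for the standard grade and `days_of_life(20_000, 20)` gives 1,000 for the pro grade.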

Pure Storage announced the release of a new Pure Validated Design (PVD) in collaboration with VMware to provide mutual customers with a complete, full-stack solution for deploying mission-critical, data rich workloads in production on VMware Tanzu. It provides an architecture, including design considerations and deployment best practices, that customers can use to enable their stateful applications like databases, search, streaming, and AI/machine learning apps running on VMware Tanzu to have access to the container-granular storage and data management provided by Portworx.

Rambus has developed a CXL 2.0 controller with zero-latency integrated Integrity and Data Encryption (IDE) modules. The built-in IDE modules use 256-bit AES-GCM (Advanced Encryption Standard in Galois/Counter Mode), a symmetric-key authenticated encryption scheme. Check out the technical details on the CXL 2.0 controller with IDE here and the CXL 2.0/PCI Express 5.0 PHY here.

Seagate has launched a $169.99 Game Drive for Xbox SSD. It features a lightweight, slim design with an illuminating Xbox green LED bar, USB 3.2 Gen-1 universal compatibility, 1TB capacity, compatibility with Xbox Series X, Xbox Series S and any generation of Xbox One, and installation in under two minutes through Xbox OS. The drive comes with a three-year limited warranty and three-year Rescue Data Recovery Services.

Seagate has developed a Kubernetes CSI driver for its Exos X storage systems. It’s available for download under the Apache v2 license from GitHub and can be used by any customer running Seagate storage systems with 4xx5/5xx5 controllers.

Swordfish 1.2.3, approved by the SNIA Technical Committee as a working draft, is now available for public review. Swordfish defines a comprehensive RESTful API for managing storage and related data services. V1.2.3 adds enhanced support for advanced NVMe devices (such as arrays), with detailed front-end configuration requirements specified in a new profile, and enhancements to the NVMe Model Overview and Mapping Guide.

Cloud data warehouser Snowflake announced its next Global Startup Challenge for early stage companies developing products for its Data Cloud. The Challenge invites entrepreneurs and early stage organisations that have raised less than $5 million in funding to showcase a data application with Snowflake as a core part of the architecture. It offers the three competition finalists the opportunity to be considered for an investment (a total of up to $1 million across the three finalists), and global marketing exposure.

Storage array software supplier StorONE has signed a strategic distribution agreement with Spinnaker to distribute StorONE’s S1 Enterprise Storage Platform software in the EMEA market.

Data manager and archiver StrongBox Data Solutions (SBDS) has announced a partnership in the UK and Benelux with value-added distributor Titan Data Solutions. Titan will offer end-to-end data management solutions and cybersecurity services.

Data integration and data integrity supplier Talend announced it has been recognised by Gartner as a Leader in the 2021 Magic Quadrant for Data Quality Solutions, for the fourth consecutive time.

Timescale, which supplies TimescaleDB, a relational database for time-series data, announced the new Timescale Cloud, which it describes as an easy and scalable way for developers to collect and analyse their time-series data. The offering is built on a cloud architecture with compute and storage fully decoupled. All storage is replicated, encrypted, and highly available; even if the physical compute hardware fails, the storage stays online, and the platform immediately spins up new compute resources, reconnects them to storage, and quickly restores availability.

Veeam has announced v11a, an update to its Backup & Replication product, offering Red Hat Virtualization backup support, and native backup and recovery for Amazon Elastic File System and Microsoft SQL databases. There’s more support for archive storage backup, and security integrations with AWS Key Management Service and Azure Key Vault to safeguard encrypted backup data from ransomware. Kasten K10 v4.5 will be able to send backups of Kubernetes clusters that use VMware persistent volumes to a Veeam Backup & Replication repository, where their lifecycle can be managed and additional Veeam features and capabilities used.

Veritas has announced the Veritas Public Sector Advisory Board. This consists of “renowned public sector experts” who will advise Veritas, which describes itself as the leading provider of data protection for the public sector, on ongoing developments such as the recent Executive Order on Improving the Nation’s Cybersecurity. The board will work closely with Veritas executives to help prioritize the most important programs and initiatives, and will recommend actions and direction on strategic business opportunities and go-to-market, route-to-market, customer and operational strategies for the public sector.

Hyperconverged infrastructure software provider Virtuozzo has acquired the technology and business of Jelastic, a multi-cloud Platform-as-a-Service (PaaS) software company, following a ten-year partnership. It says bringing Jelastic’s platform and application management capabilities in-house completes Virtuozzo’s core technology stack, delivering a fully integrated solution that supports all relevant anything-as-a-service (XaaS) use cases — from shared hosting to VPS to cloud infrastructure, software-defined storage and application management and modernisation.

VMware announced an upcoming update to VMware vSphere with Tanzu so that enterprises can run trials of their AI projects using vSphere with Tanzu in conjunction with the Nvidia AI Enterprise software suite. Nvidia AI Enterprise and VMware vSphere with Tanzu enable developers to run AI workloads on Kubernetes containers within their VMware environments. The software runs on mainstream, Nvidia-Certified Systems from leading server manufacturers, providing an integrated, complete stack of software and hardware optimized for AI.

Customer wins

The Hydroinformatics Institute in Singapore (H2i) uses Iguazio’s software on AWS to build and run a real-time machine learning pipeline that predicts rainfall by analysing videos of cloud formations and running CCTV-based rainfall measurements. Gerard Pijcke, Chief Consultancy Officer, H2i, said: “With Iguazio, we are able to analyze terabytes of video footage in real time, running complex deep learning models in production to predict rainfall. Repurposing CCTV-acquired video footage into rainfall intensity can be used to generate spatially distributed rainfall forecasts leading to better management of urban flooding risks in densely populated Singapore.”

StorMagic announced that Giant Eagle, Inc., a US food, fuel and pharmacy retailer with more than 470 locations across five states, has selected StorMagic SvSAN virtual SAN software and SvKMS encryption key management to store and protect data in its 200-plus supermarkets with in-store pharmacies. Today, SvSAN is running on three-node Lenovo clusters at each store, and SvKMS on three virtual machines at its primary datacentre.

People moves

Remember Milan Shetti? He was SVP and GM of HPE’s storage business unit, leaving in March last year, and before that CTO of the Datacenter Infrastructure and Storage divisions. He’s being promoted from President to CEO at Rocket Software, an IBM systems-focused business supplying software that runs on legacy kit.

John Rollason resigned from NetApp, where he was senior director for global revenue marketing after being senior director for EMEA marketing. An ex-SolidFire marketing director, he quit in August this year and has become a part-time marketing consultant at Nebulon. He is also MD at REMLIVE in the UK, which is an electrical safety warning indicator specialist.

Keith Parker, Product Marketing Director at Pavilion Data, is leaving for another opportunity.

Ceph hardware and software system builder SoftIron has appointed Kenneth Van Alstyne as its CTO, responsible for “building out SoftIron’s technology strategy and roadmap as the company advances its mission to re-engineer performance and efficiency in modern data infrastructure through its task-specific, open source-based solutions.” He comes from Peraton, the US Naval Research Laboratory, QinetiQ North America and Knight Point Systems.