Cloud-based data lakes store data in cloud object storage and that data needs protecting – that’s the whole pitch made by SaaS data protection suppliers such as Commvault, Druva, HYCU and Clumio. Data lake suppliers such as Databricks say they look after data protection, but that is not down to the S3 or Azure Blob data storage layer. AWS protects the S3 infrastructure and AWS Backup provides S3 object data protection, but these vendors argue it can be limited in scale.

Basic refresher

Broadly speaking data can be stored as blocks, files or objects. Data stored in blocks is located on a storage medium at a location relative to the medium’s start address. The medium, such as an SSD, disk drive, tape or optical disk, is organized into a sequential set of blocks running from the start block to the end block. For example, a piece of data, such as a database record, could be start at block 10,052 and run for 50 blocks to block 10,102.

The device can be virtualized and referred to as a LUN (Logical Unit Number) which could be larger or smaller than a physical device. Both file and object data is ultimately stored in blocks on storage media with an internal and hidden logical-to-physical mapping process translating file and object addresses to device block addresses.

A file is stored inside a hierarchical file:folder address space with a root folder forming the start point and capable of holding both files and sub-folders in a tree-like formation. Files are located by their position in the file:folder structure.

An object is stored in a flat address space using a key generated from its contents.

S3, Amazon Web Services’ Simple Storage Service, is an object storage service for storing data objects on storage media. It currently stores about 280 trillion objects, according to Michael Kwon, Clumio’s marketing VP.

An object is a set of data with an identity. It is not a file with a filesystem folder-based address nor is it a set of storage drive blocks with a location address relative to the drive’s start address, as in a SAN with its LUNs (virtual drives).

S3 objects are stored in virtual repositories called buckets which can store any amount of data. They are not fixed in size. Buckets are stored in regions of the AWS infrastructure, meaning they have a two-part address: region name followed by a user-assigned key.

S3 buckets have access controls which define which users can access them and what can be done to the bucket’s contents. There are eight different classes of S3 storage: Standard, Standard-Infrequent Access (Standard-IA), One Zone-Infrequent Access (One Zone-IA), Intelligent-Tiering, S3 on Outposts, Glacier Instant Retrieval, Glacier Flexible Retrieval, and Glacier Deep Archive.

S3 objects can be between 1 byte and 5TB in size, with 5TB being the maximum S3 object upload size. Data objects larger than 5TB are split into smaller chunks and sent to S3 in a multi-part upload operation.

Since S3 buckets can store anything, they can store files. An object can have a user-assigned key prefix that represents its access path. For example, Myfile.txt could have a BucketName/Project/Document/Myfile.txt prefix.

There is a flat S3 address space, unlike a hierarchical file:folder system, but S3 does use the concept of a folder to group and display objects within a bucket. The “/“ character in an object’s key prefix separates folder names. So, in the key prefix BucketName/Project/Document/Myfile.txt prefix, there is a folder, Project, and sub-folder, Document, with both classed as folders.

All this means that data stored in an S3 bucket can look as if it is stored and addressed in a file:folder system but it is not. An object’s key is its full path, in this case BucketName/Project/Document/Myfile.txt. AWS uses the / character to delineate virtual Project and Document folders but they are not used as part of the object’s location process, only for grouping and display purposes.

The S3 folder construct is just used to group objects together but not to address them in a hierarchical fashion. We are now at the point where we understand that S3 buckets are effectively bottomless. How is data in them protected?

S3 data protection

An organization supplying services based on data stored in S3 buckets could have a data protection issue, say some vendors. For example, data lakehouse supplier Databricks has its Delta Lake open source storage layer running in AWS. This runs on top of Apache Spark and uses versioned Parquet files to store data in AWS cloud object storage, meaning S3.

AWS protects the S3 storage infrastructure, ensuring it is highly durable. It states: “S3 Standard, S3 Intelligent-Tiering, S3 Standard-IA, S3 Glacier Instant Retrieval, S3 Glacier Flexible Retrieval, and S3 Glacier Deep Archive redundantly store objects on multiple devices across a minimum of three Availability Zones in an AWS Region.”

Amazon S3 also protects customers’ data using versioning, and customers can also use S3 Object Lock and S3 Replication to protect their data. But these don’t necessarily protect against accidental or deliberate data loss, software errors and malicious actors.

Amazon has made AWS Backup for S3 generally available to “create periodic and continuous backups of your S3 bucket contents, including object data, object tags, access control lists (ACLs), and other user-defined metadata.”

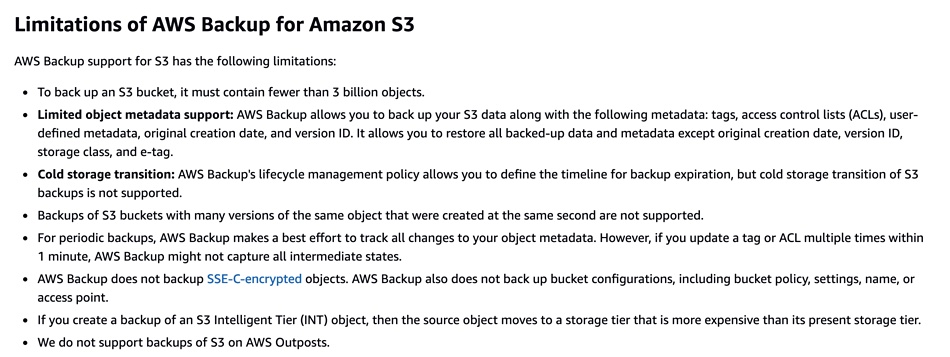

But Chadd Kenney, VP of Product at Clumio, said AWS Backup is limited to backing up 3 billion objects. “You can see it on their site that it’s a 3 billion object limit,” he said. In his view this is not enough: “You need to get to exabytes scale, and you need to get to billions and billions of objects.”

S3 data lake protection

Databricks VP for field engineering Toby Balfre told us: “Databricks uses Delta Lake, which is an open format storage layer that delivers reliability, security and performance for data lakes. Protection against accidental deletion is achieved through both granular access controls and point-in-time recovery capabilities.”

This protects Delta Lake data – but it does not protect the underlying data in S3.

Clumio’s Ari Paul, director of product and solutions marketing, said: “If you have a pipeline feeding data into a Snowflake, external table or something, what happens if the source data disappears? Our approach is that we protect data lakes from the infrastructure level.”

Data lakes and lakehouses can use petabytes of data in the underlying cloud object storage and it cannot be protected by AWS Backup once it scales up to the 3 billion object limit.

Clumio’s S3 backup runs at scale and has two protection technologies. The Inventory feature lists every object that’s changed in the last 24 hours and is used as a delta to run a backup. Secondly, Event Bridge sees all object changes in a 15-minute period and does a micro or incremental backup of them.

Kenney said: “We do an incremental backup of those object changes. And then every 24 hours, we will validate that all those object changes were correct. So we never miss objects. It’s kind of a dual validation system.” This provides a 15-minute RPO compared to Inventory’s 24-hour RPO.

Clumio mentions a customer who found AWS Backup inadequate at its scale. Kenney told us: “AWS Backup was their [original] solution, but they had 18 billion objects in a single bucket.”

Clumio currently claims to be able to back up 30 billion S3 objects and, Kenney tells us: “We’re now at 50 billion internally.”

The takeaway here is that end users with data lake-scale S3 storage, meaning more than 3 billion objects per bucket, may need to look at third-party data protection services.