Maximum SSD capacity is expected to double from its current 61.44 TB maximum by mid-2025, giving us 122 TB and even 128 TB drives, with the prospect of exabyte-capacity racks.

Five suppliers have discussed and/or demonstrated prototypes of 100-plus TB capacity SSDs recently.

Western Digital demonstrated a 128 TB SSD at FMS 2024 in Santa Clara, California, this month. It uses WD and Kioxia’s BiCS8 technology with 218 layers and QLC format cells. We anticipate WD productizing this as a successor to its current Ultrastar DC SN655, with its 3.84 TB to 61.44 TB capacity range, PCIe gen 4 interface, and BiCS5 112-layer TLC NAND technology.

At its A800 storage array launch event in Berlin in May, Huawei said it has a 128 TB SSD planned.

Phison, which extended its capabilities from producing SSD controllers to building SSDs this year with its Pascari brand drives, has a planned 128 TB capacity drive on its roadmap.

Samsung suggested having a 122.88 TB SSD when it introduced its 61.44 TB BM1743 SSD in June. And SK hynix subsidiary Solidigm demonstrated a 122 TB successor to its 61.44 TB D5-P5336 SSD at FMS 2024. This is a PCIe gen 4 drive using QLC flash, with 1.27 million random read IOPS and 7.2/3.3 GBps sequential read/write bandwidth.

We must also mention IBM and Pure. Pure Storage builds its proprietary Direct Flash Module drives instead of using commercial SSDs. These currently top out at 75 TB raw capacity with a 150 TB version due later this year or early in 2025.

IBM’s proprietary Flash Core Modules (FCMs) have up to 38.4 TB raw NAND capacity and provide a maximum of 87.96 TB with onboard compression. Their next iteration could double capacity to 76.8 TB raw and 175.9 TB compressed.

Controllers for 100-plus TB capacity SSDs will have a more difficult job, with extra memory and processing power needed to store and manage the operations and metadata for a 128 trillion byte store. Wear-leveling, garbage collection processing, and error correction code (ECC) will be more intensive. The controller will need more onboard memory or access to a host server’s memory for this.

With the per-die capacity increasing, the IO path from the controller to each die may well need broadening to stop IO operation time lengthening.

RAID concerns

Systems with enclosures full of high-capacity SSDs will need to cope with drive failure and that means RAID or erasure coding schemes. SSD rebuilds take less time than HDD rebuilds, but the higher an SSD’s capacity, the longer its rebuild takes. For example, rebuilding a 61.44 TB Solidigm D5-P5336 drive at its max sequential write bandwidth of 3 GBps would take approximately 5.7 hours. A 128 TB drive would take 11.85 hours at the same 3 GBps write rate. These are not insubstantial periods.
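The rebuild times quoted above are simple capacity-divided-by-bandwidth arithmetic. A minimal sketch, assuming decimal units (1 TB = 1,000 GB), a rebuild that rewrites the whole drive, and a write rate sustained for the entire rebuild; the function name `rebuild_hours` is ours, purely for illustration:

```python
def rebuild_hours(capacity_tb: float, write_gbps: float) -> float:
    """Best-case hours to rewrite a whole drive at a sustained write rate.

    Assumes decimal units (1 TB = 1,000 GB) and that sequential write
    bandwidth is the only bottleneck, which is optimistic in practice.
    """
    seconds = capacity_tb * 1000 / write_gbps
    return seconds / 3600


for capacity_tb in (61.44, 128):
    print(f"{capacity_tb} TB at 3 GBps: {rebuild_hours(capacity_tb, 3):.2f} hours")
# 61.44 TB at 3 GBps: 5.69 hours
# 128 TB at 3 GBps: 11.85 hours
```

Real rebuilds are likely to take longer, since the array is usually serving host IO at the same time.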

Kioxia has devised an SSD RAID parity compute offload scheme with a parity compute block in the SSD controller and direct memory access to neighboring SSDs to get the rebuild data. This avoids the host server’s processor getting involved in RAID parity compute IO and could accelerate SSD rebuild speed.
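For readers unfamiliar with what "parity compute" involves, here is a generic single-parity (RAID 5-style) XOR sketch. It is not Kioxia’s design, whose details are not given here; it only illustrates the kind of calculation that such a scheme would move from the host CPU into the SSD controller. The block contents and the `xor_blocks` helper are made up for the example:

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equal-length byte strings."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three data blocks, one per drive, plus a parity block on a fourth drive.
data = [b"\x01\x02\x03", b"\x10\x20\x30", b"\xaa\xbb\xcc"]
parity = xor_blocks(data)

# Simulate losing the second drive: XOR the survivors with the parity
# block to reconstruct the missing data.
survivors = [data[0], data[2], parity]
rebuilt = xor_blocks(survivors)
print(rebuilt == data[1])
```

Every surviving drive has to be read in full to rebuild the failed one, which is why moving that traffic off the host could matter at 100-plus TB capacities.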

Rack capacity

VAST Data’s Ceres enclosure holds 22 x E1.L format 9.5 mm drives in up to 61.44 TB capacities, using Solidigm QLC drives. That’s 1.35 PB in a 1RU enclosure. The average data reduction ratio, according to VAST, is about 3:1; the effective capacity figures used here work out to roughly 3.1:1, meaning an effective 4.2 PB capacity (PBe) per rack unit.

Let’s put 40 Ceres enclosures in a rack to get 54 PB (167.4 PBe) of capacity. Now imagine Ceres using 128 TB SSDs, giving us 2.82 PB per box (8.7 PBe), and 112.6 PB (349.2 PBe) per 40-Ceres rack.
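The rack figures can be reproduced with a few lines of arithmetic. This is a rough sketch: the drive and enclosure counts come from the text, while the 3.1 reduction ratio is our assumption, inferred from the effective (PBe) figures quoted in this article rather than taken from a VAST specification. The function and constant names are illustrative only:

```python
DRIVES_PER_ENCLOSURE = 22   # E1.L slots per Ceres box, from the text
ENCLOSURES_PER_RACK = 40    # the hypothetical full rack used above
REDUCTION_RATIO = 3.1       # assumption inferred from the article's PBe figures


def rack_capacity_pb(drive_tb):
    """Return (raw PB per box, raw PB per rack, effective PB per rack)."""
    box = drive_tb * DRIVES_PER_ENCLOSURE / 1000
    rack = box * ENCLOSURES_PER_RACK
    return box, rack, rack * REDUCTION_RATIO


for drive_tb in (61.44, 128):
    box, rack, effective = rack_capacity_pb(drive_tb)
    print(f"{drive_tb} TB drives: {box:.2f} PB/box, "
          f"{rack:.1f} PB/rack, {effective:.1f} PBe/rack")
```

Small differences from the quoted numbers come from rounding at intermediate steps, for example rounding the per-box capacity to 1.35 PB before multiplying.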

A comparison with disk drive density could look at Seagate’s Corvault enclosures with 106 3.5-inch drives in a 4RU chassis. Populating it with 32 TB HAMR drives would provide 3.39 PB and a rack of 10 Corvaults would deliver 33.9 PB, less than a third of the Ceres 128 TB SSD rack capacity (112.6 PB). A Ceres rack would have more capacity than three Corvault racks, meaning savings in datacenter space, power, and cooling costs.
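The disk-versus-flash comparison is the same kind of calculation with different inputs. A quick sketch using the drive counts, capacities, and chassis-per-rack figures from the text; `rack_pb` is a hypothetical helper name:

```python
def rack_pb(drives_per_chassis, drive_tb, chassis_per_rack):
    """Raw rack capacity in PB (decimal units, 1 PB = 1,000 TB)."""
    return drives_per_chassis * drive_tb * chassis_per_rack / 1000


corvault = rack_pb(106, 32, 10)   # ten 4RU Corvaults with 32 TB HAMR drives
ceres = rack_pb(22, 128, 40)      # forty 1RU Ceres boxes with 128 TB SSDs

print(f"Corvault rack: {corvault:.2f} PB")      # Corvault rack: 33.92 PB
print(f"Ceres rack:    {ceres:.2f} PB")         # Ceres rack:    112.64 PB
print(f"Ratio:         {ceres / corvault:.2f}x")  # Ratio:         3.32x
```

This compares raw capacity only; it ignores RAID or erasure coding overhead on both sides.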

A comparison on an effective PB capacity basis, after data reduction, would favor SSDs even more. The net result in total cost of ownership, expressed in $/TB, will be interesting to understand and could prompt a move to flash from disk for nearline storage. Pure Storage is certainly convinced of this.

SSD capacity roadmap

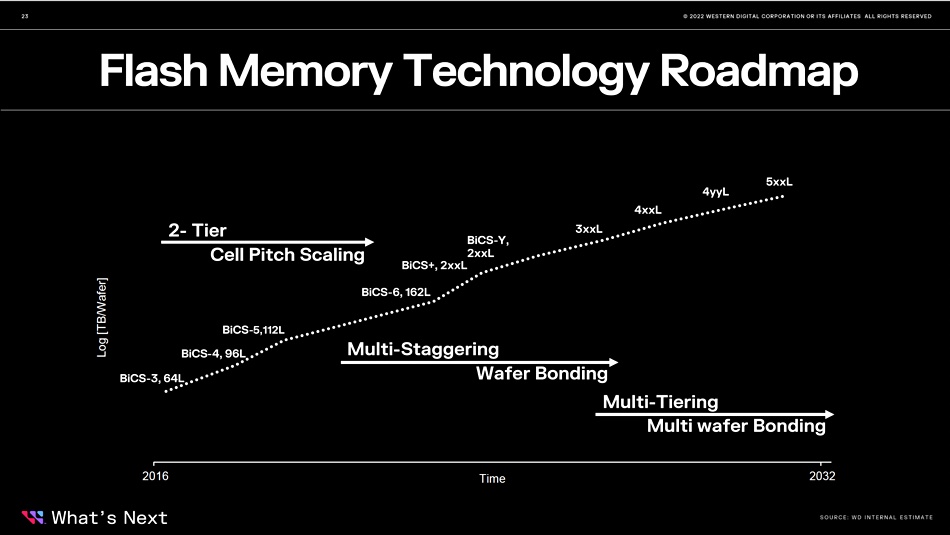

The SSD capacity roadmap moving on from 128 TB SSDs with around 200-layer NAND looks like achieving 400 layers, which would double capacity from the 128 TB SSD to 256 TB. That means we could envisage Ceres using 256 TB SSDs, giving us 5.63 PB per box (17.46 PBe), and 225.3 PB with 40 Ceres boxes in a rack (698.4 PBe).

Extend the roadmap out to the 800-layer NAND area and we double capacity again, with a 40-box Ceres rack providing 450.6 PB raw and 1.4 EB effective capacity; we’re looking at a 1-plus exabyte rack that could perhaps arrive in the 2030-2040 time frame. If this happens, comparing it with a Corvault rack of, let’s say, 100 TB HAMR drives with a 106 PB total capacity, SSDs would appear more favorable. The Ceres rack, at 450.6 PB, would have more than four times the raw capacity of the Corvault rack.
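The projection above rests on one simplifying assumption: drive capacity scales linearly with NAND layer count. A rough sketch of that reasoning, taking "around 200 layers" as the baseline for a 128 TB drive and reusing the 22-drive, 40-box rack from earlier; the function names are ours, and real capacities also depend on die count, cell format, and packaging:

```python
def projected_drive_tb(layers, base_layers=200, base_tb=128):
    """Drive capacity if it scaled linearly with layer count (an assumption)."""
    return base_tb * layers / base_layers


def ceres_rack_pb(drive_tb, drives_per_box=22, boxes_per_rack=40):
    """Raw PB in a hypothetical 40-box Ceres rack."""
    return drive_tb * drives_per_box * boxes_per_rack / 1000


for layers in (200, 400, 800):
    drive_tb = projected_drive_tb(layers)
    print(f"{layers} layers: {drive_tb:.0f} TB drive, "
          f"{ceres_rack_pb(drive_tb):.1f} PB raw per rack")
```

At a data reduction ratio of roughly 3:1, the 800-layer case is the one that crosses the 1 EB effective mark.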

Does the hyperscaler nearline disk drive market face an existential crisis based on this scenario? Western Digital has signaled a slowdown in the 3D NAND layer race, suggesting that the arrival of 400-layer and 800-layer NAND could be slower than previously assumed. If the HDD suppliers can increase their capacity at a higher rate than the SSD suppliers, their hyperscaler nearline drive market should survive for a good few years yet.

Bootnote

VAST customers typically have 20 to 30 Ceres shelves per rack, with power delivery to the racks an issue.