Research house TrendForce detects signs of the standardized HBM (High Bandwidth Memory) market splintering with HBM4 as suppliers and customers seek extra performance.

HBM is a stack of memory dies bonded to an interposer, which connects them to GPUs at higher speeds and with greater capacity than the x86 socket-connected DIMM approach can provide.

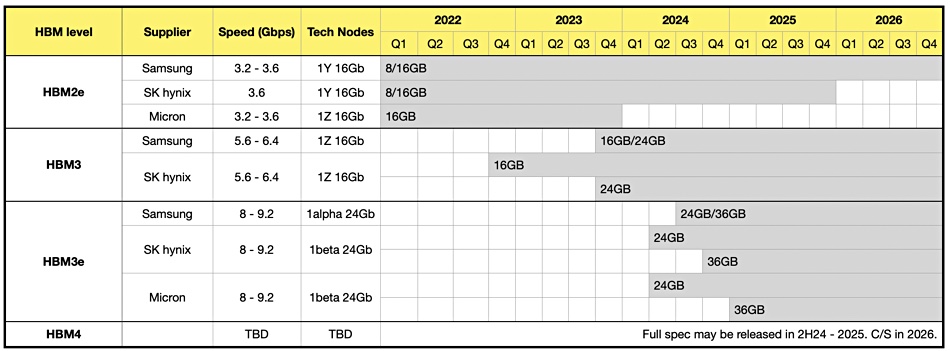

There have been three main HBM generations and a fourth is being defined. A table lists their characteristics and suppliers:

TrendForce says Nvidia, which has used SK hynix HBM products until now, is set to diversify its supply and is evaluating HBM3e chips from Samsung and Micron. It says Micron provided Nvidia with 24 GB samples of its 8-hi (eight-layer) chip by the end of July, followed by SK hynix in mid-August and Samsung in early October.

Nvidia can take up to two quarters to verify a supplier’s HBM product, so preliminary results for the early submissions could be expected by the end of this year, with full results by the end of 2024’s first quarter. Nvidia can then decide which supplier to buy from.
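The timeline arithmetic can be sketched in a few lines of Python. This is an illustration only: the exact calendar dates chosen for "end of July", "mid-August", and "early October" are assumptions, the year 2023 is inferred from the reference to 2024's first quarter, and "up to two quarters" is treated as a six-month upper bound. The helper names `add_months` and `latest_verification_dates` are hypothetical.

```python
import calendar
from datetime import date


def add_months(d: date, months: int) -> date:
    """Shift a date forward by whole months, clamping the day to the target month's length."""
    month_index = d.month - 1 + months
    year = d.year + month_index // 12
    month = month_index % 12 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


# Assumed calendar dates standing in for the article's approximate timings.
SAMPLE_DATES = {
    "Micron": date(2023, 7, 31),    # "by the end of July"
    "SK hynix": date(2023, 8, 15),  # "mid-August"
    "Samsung": date(2023, 10, 1),   # "early October"
}

MAX_VERIFICATION_MONTHS = 6  # "up to two quarters", taken as an upper bound


def latest_verification_dates(samples, months=MAX_VERIFICATION_MONTHS):
    """Return the latest date by which each supplier's verification would finish."""
    return {supplier: add_months(sent, months) for supplier, sent in samples.items()}


if __name__ == "__main__":
    for supplier, deadline in latest_verification_dates(SAMPLE_DATES).items():
        print(f"{supplier}: verification complete no later than {deadline.isoformat()}")
```

Under these assumed dates, the earliest sample's upper bound falls at the end of January 2024 and the latest at the start of April 2024, which is roughly in line with full results arriving around the end of the first quarter.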

As AMD and Intel are also shipping GPUs, and GPU demand is being pushed up by generative AI workloads, the total HBM market is set to grow rapidly.

TrendForce believes that the fourth generation, HBM4, will launch in 2026 “with enhanced specifications and performance tailored to future products from Nvidia and other CSPs.” HBM4 will feature a bottommost logic die built on a 12 nm process and supplied by semiconductor foundries to the memory fabricators. “This advancement signifies a collaborative effort between foundries and memory suppliers for each HBM product,” the analysts say.

TrendForce thinks HBM4 is set to expand from the current 12-hi to 16-hi stacks, spurring demand for new hybrid bonding techniques. The 12-hi HBM4 products will launch in 2026, with 16-hi products following in 2027.

It sees an HBM customization trend emerging, moving away from an interposer connecting the memory to GPUs, and exploring options like stacking HBM directly on top of the logic SoC (GPU) as a way of gaining extra performance and perhaps lowering costs.

The analysts say this “is expected to bring about unique design and pricing strategies, marking a departure from traditional frameworks and heralding an era of specialized production in HBM technology.”

This means that HBM customers, the GPU and other logic SoC suppliers, could be locked in to an HBM vendor because of specialized design and connectivity options. Alternatively, HBM suppliers could start selling their own HBM + logic SoC (processor) products.