DDN is launching Infinia, a scale-out object storage software product designed from the ground up for AI and cloud environments and optimized for flash drives.

DDN developed Infinia because it realized it couldn't bolt new features onto EXAScaler to get the storage qualities needed for distributed storage spanning edge sites, datacenters and the public cloud. Such distributed data will inevitably need immense scale, shared resources and storage efficiency. It will be metadata-intensive, filtered, and used by a complex mix of applications, such as those involved in end-to-end AI model development and production. Data governance will also be a concern.

Eight years ago, DDN recognized that a new storage platform needed to be developed, joining companies such as Quantum (Myriad), StorONE and VAST Data in this regard. Like Quantum, DDN has an existing customer base it can use to validate its storage development strategy, identify future needs, and help with product development and testing.

CTO James Coomer told us: “We’ve had a very talented bunch of people from all kinds of places, some ex-parallel file system people, some people from IBM, some people from enterprise storage companies. They’ve been sitting in their basement and basically writing a whole new, very ambitious storage platform called DDN Infinia – completely ground up built, not open source, just built by DDN engineers. Absolutely everything done from scratch; we’ve been very ambitious about it.”

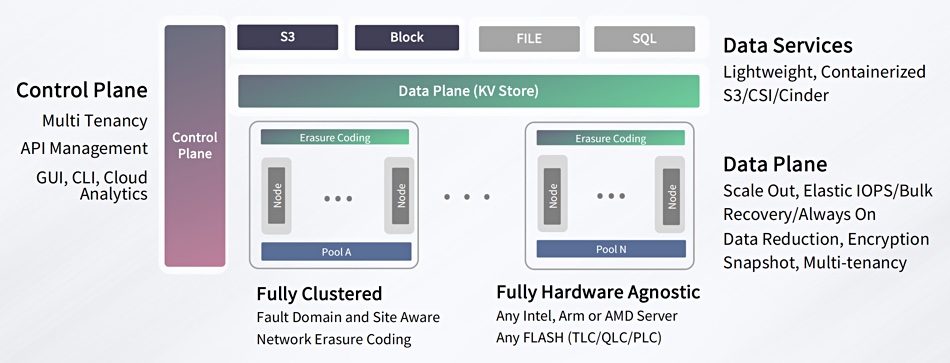

Infinia is not a development of DDN’s existing EXAScaler Lustre parallel filesystem-based product. It is software-defined and has separate control and data planes. The data plane is based on key:value object storage implemented in clustered pools formed from server/storage nodes. It has always-on data reduction, encryption, snapshots and multi-tenancy, with bulk recovery and elastic IOPS.
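DDN hasn't said how Infinia decides which nodes in a pool hold a given key. As a minimal sketch of the general idea, assuming a hash-based scheme (rendezvous, or highest-random-weight, hashing, swapped in purely for illustration), a key-value data plane can map every object key to a deterministic set of nodes without a central lookup table. The node names and the `width` parameter (how many nodes a key's data is spread across) are hypothetical.

```python
import hashlib


def _score(node: str, key: str) -> int:
    # Hash the (node, key) pair; any client computes the same score.
    digest = hashlib.sha256(f"{node}:{key}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(key: str, pool: list, width: int) -> list:
    """Return the `width` nodes in `pool` responsible for `key`.

    Rendezvous hashing: rank every node by its score for this key and
    take the top `width`. Removing a node only moves keys that had
    ranked that node in their top set.
    """
    if width > len(pool):
        raise ValueError("width exceeds pool size")
    return sorted(pool, key=lambda n: _score(n, key), reverse=True)[:width]


if __name__ == "__main__":
    pool = [f"node{i}" for i in range(8)]
    print(place("tenant-a/bucket1/obj42", pool, 3))
```

The appeal of this family of schemes for a clustered pool is that placement is computed rather than stored, so it scales with the keyspace and reshuffles little data when nodes join or leave.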

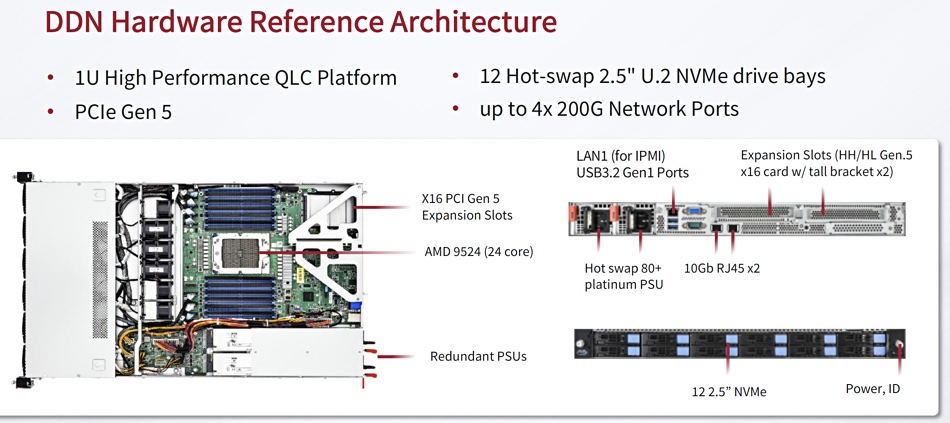

Nodes can be any Intel, AMD or Arm server fitted with flash drives, whether TLC, QLC or PLC. Hardware reference architectures specify the node hardware characteristics.

Clusters are built from Ethernet-connected nodes, on-premises or in the cloud, and can scale out to hundreds of petabytes of S3 storage. They use network erasure coding and are fault-domain and site aware. There are no restrictions on object size or metadata, and multi-part upload is supported. DDN claims sub-millisecond latency for PUTs and GETs.
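DDN doesn't say which erasure code Infinia uses. To illustrate the principle only, here is a minimal sketch using single XOR parity (a simple RAID-5-style code, swapped in for clarity; production systems typically use Reed-Solomon-style codes that survive several simultaneous failures). An object is split into `k` data shards plus one parity shard; if each shard lands on a node in a different fault domain, losing any one domain still leaves enough shards to rebuild the object. The `encode`/`decode` names are hypothetical.

```python
from functools import reduce


def _xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int):
    """Split `data` into k equal data shards plus one XOR parity shard.

    Returns (shards, original_length); the length is needed to strip
    the zero padding added to make the shards equal-sized.
    """
    shard_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(shard_len * k, b"\0")
    shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    parity = reduce(_xor, shards)
    return shards + [parity], len(data)


def decode(shards: list, length: int) -> bytes:
    """Rebuild the object when at most one shard (data or parity) is None."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single parity tolerates only one lost shard")
    if missing:
        # XOR of all surviving shards equals the missing one.
        survivors = [s for s in shards if s is not None]
        shards = list(shards)
        shards[missing[0]] = reduce(_xor, survivors)
    return b"".join(shards[:-1])[:length]
```

With `k = 4` the storage overhead is 25 percent, versus 200 percent for three full replicas, which is why erasure coding is the usual choice at petabyte scale.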

Hybrid I/O engines autonomously optimize for low latency, sequential streaming data, drive IOPS and metadata-intensive workloads. Because operations are log-structured, there is no read/modify/write overhead. Storage operations are byte-addressable, with wide striping for large I/O blocks, and capacity for both metadata and data is elastic.
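DDN hasn't detailed Infinia's on-disk format, so the following is a generic illustration of why log-structured writes avoid read/modify/write, not a description of Infinia internals. In this minimal sketch (the `LogStore` class and its methods are hypothetical), an update appends a new record at the end of the log and repoints an index entry instead of reading, modifying and rewriting the old block in place. Stale versions are reclaimed later by compaction.

```python
class LogStore:
    """Toy single-threaded append-only log with an in-memory index."""

    def __init__(self):
        self.log = bytearray()  # stands in for a flash-resident log
        self.index = {}         # key -> (offset, length) of latest version

    def put(self, key: str, value: bytes) -> None:
        # Never overwrite in place: append, then repoint the index.
        offset = len(self.log)
        self.log += value
        self.index[key] = (offset, len(value))

    def get(self, key: str) -> bytes:
        offset, length = self.index[key]
        return bytes(self.log[offset:offset + length])

    def get_range(self, key: str, start: int, end: int) -> bytes:
        # Byte-addressable read of part of the latest value.
        offset, length = self.index[key]
        end = min(end, length)
        return bytes(self.log[offset + start:offset + end])

    def compact(self) -> None:
        """Copy only live records into a fresh log, dropping stale ones."""
        new_log, new_index = bytearray(), {}
        for key, (offset, length) in self.index.items():
            new_index[key] = (len(new_log), length)
            new_log += self.log[offset:offset + length]
        self.log, self.index = new_log, new_index
```

Sequential appends also suit flash well, since they avoid small in-place overwrites that add write amplification on QLC and PLC drives.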

The Infinia keyspace has a tree structure that tracks sites (realms), clusters, tenants, sub-tenants and data sets, and it is sharded across the entire Infinia infrastructure. Every tenant and sub-tenant is assigned a share of Infinia's performance and capacity resources when it is created, which prevents noisy neighbor problems. Capacity is thin-provisioned, each tenant's and sub-tenant's data is encrypted, and service levels can be adjusted dynamically.
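A minimal sketch of the two ideas in the paragraph above, under loudly stated assumptions: the path-style key encoding, the `/` separator, the shard count and the proportional-share arithmetic are all illustrative and not DDN's implementation. `tree_key` and `shard_of` show how a hierarchical key can be hashed so the keyspace spreads across every shard; `allocate` splits a performance budget (for example IOPS) down the realm/cluster/tenant tree by share weight. It only computes each tenant's guaranteed slice; an enforcement layer (not shown) would throttle a tenant at that slice so a busy neighbor cannot starve it.

```python
import hashlib
from dataclasses import dataclass, field

LEVELS = ("realm", "cluster", "tenant", "subtenant", "dataset")


def tree_key(*parts: str) -> str:
    """Build a hierarchical key such as 'realm1/clusterA/acme/ml/train'."""
    if not 1 <= len(parts) <= len(LEVELS):
        raise ValueError(f"expected 1-{len(LEVELS)} levels")
    return "/".join(parts)


def shard_of(key: str, num_shards: int) -> int:
    """Hash the full key so the keyspace spreads across all shards."""
    digest = hashlib.sha256(key.encode()).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


@dataclass
class Node:
    name: str
    share: float                 # weight relative to sibling nodes
    children: list = field(default_factory=list)


def allocate(node: Node, budget: float, out: dict, prefix: str = "") -> dict:
    """Split `budget` down the tree in proportion to sibling shares."""
    path = f"{prefix}/{node.name}" if prefix else node.name
    out[path] = budget
    total = sum(c.share for c in node.children)
    for child in node.children:
        allocate(child, budget * child.share / total, out, path)
    return out


if __name__ == "__main__":
    realm = Node("realm1", 1, [Node("clusterA", 1, [
        Node("acme", 3, [Node("ml", 1), Node("bi", 1)]),
        Node("globex", 1),
    ])])
    for path, iops in allocate(realm, 100_000, {}).items():
        print(f"{path}: {iops:.0f} IOPS")
```

Here tenant `acme` (share 3) is guaranteed 75,000 of 100,000 IOPS and `globex` (share 1) 25,000, and `acme` further splits its slice evenly between its two sub-tenants.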

New tenants and services can be deployed in around four minutes. Infinia supports S3 now, with block, file and SQL access protocols to come, all layered on top of the data plane. These data services are lightweight, and containerized code supports S3, CSI and Cinder.

Infinia's control plane looks after metadata and is multi-tenant, with API management, a GUI, a CLI and cloud analytics. Software upgrades involve zero downtime, and there are no LUNs to administer and no manual system tuning.

Infinia can be used to build an object store, a data warehouse or a data lake. Building a data lake involves setting up a new tenant on a cluster and setting its capacity boundaries and performance shares in a quality of service policy. Storage buckets can then be created, objects put into them, and the data lake content built up. The self-service setup process has four overall commands and takes tens of seconds.
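DDN didn't publish the four setup commands, so the following is a hypothetical in-memory model of the sequence just described, not Infinia's CLI or API: every class and method name is invented for illustration. It walks through creating a tenant, applying a QoS policy with a capacity boundary and performance share, creating a bucket, and putting objects, with capacity consumed only as data lands (thin provisioning) but writes refused beyond the tenant's boundary.

```python
from dataclasses import dataclass, field


@dataclass
class Tenant:
    name: str
    capacity_limit: int = 0   # bytes; set by the QoS policy
    perf_share: float = 0.0   # fraction of cluster performance
    buckets: dict = field(default_factory=dict)

    def used(self) -> int:
        return sum(len(v) for b in self.buckets.values() for v in b.values())


class Cluster:
    """Hypothetical stand-in for the self-service setup steps."""

    def __init__(self):
        self.tenants = {}

    def create_tenant(self, name: str) -> Tenant:                   # step 1
        self.tenants[name] = Tenant(name)
        return self.tenants[name]

    def set_qos(self, name: str, capacity: int, share: float) -> None:  # step 2
        t = self.tenants[name]
        t.capacity_limit, t.perf_share = capacity, share

    def create_bucket(self, name: str, bucket: str) -> None:       # step 3
        self.tenants[name].buckets[bucket] = {}

    def put_object(self, name: str, bucket: str, key: str, data: bytes) -> None:  # step 4
        t = self.tenants[name]
        # Thin provisioning: space is consumed only as data is written,
        # but a write that would cross the capacity boundary is refused.
        existing = len(t.buckets[bucket].get(key, b""))
        if t.used() - existing + len(data) > t.capacity_limit:
            raise RuntimeError(f"tenant {name} would exceed its capacity boundary")
        t.buckets[bucket][key] = data
```

Overwriting an existing key subtracts its old size first, so replacing an object doesn't double-count capacity.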

DDN suggests Infinia can function as an enterprise S3 object store with high throughput, low latency and multi-tenancy support, offering greater simplicity than Ceph and much higher performance.

Infinia is suited to outer-ring AI use cases, with DDN's existing EXAScaler storage providing an inner ring of extreme scale, efficiency and performance.

The Infinia 2023 roadmap includes a full set of S3 object services, NVMeoF block services for Kubernetes, and an SQL query engine.

In 2024 DDN intends to add a parallel filesystem designed for AI and the cloud, an advanced database, and data movement capabilities.