IBM has launched a new Storage Scale appliance with twice the throughput of the current high-end ESS 3500.

Storage Scale is IBM’s established parallel file system software, previously called GPFS and popular in HPC and allied scenarios. The ESS appliances are integrated scale-up/scale-out storage systems with Storage Scale installed, and a deployment can scale out to 1,000 appliances. The current ESS 3500 is built from 24-slot 2U nodes with dual active:active controllers, NVMe SSDs or IBM’s proprietary FlashCore Modules (FCMs), and 100 Gbit Ethernet or 200 Gbit HDR InfiniBand ports, with a maximum throughput of 126 GBps per node. The rapid increase in AI workloads has prompted IBM to boost the box, as it were.

Denis Kennelly, IBM Storage general manager, said in a statement: “IBM Storage Scale System 6000 … brings together data from core, edge, and cloud into a single platform with optimized performance for GPU workloads.

“The potential of today’s new era of AI can only be fully realized, in my opinion, if organizations have a strategy to unify data from multiple sources in near real-time without creating numerous copies of data and going through constant iterations of data ingest.”

He said he sees Storage Scale and the 6000 as the top end of an information supply chain that collects data from multiple sources, consolidates workloads, and pumps the data out to GPU servers for AI training and inferencing.

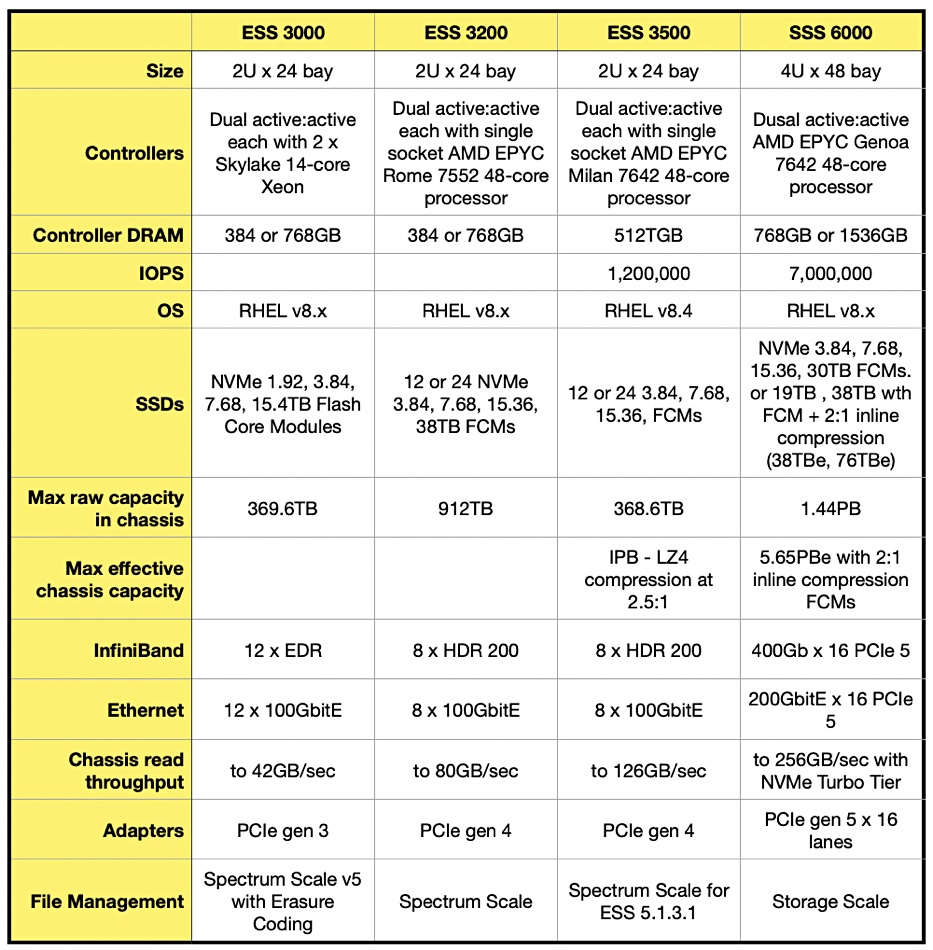

The SSS (Storage Scale System) 6000 datasheet helped provide the information in this table:

IBM has moved from the 24-slot 2RU chassis used in the prior ESS appliances to a 48-slot 4RU design. It has also doubled the maximum raw drive capacity to 30 TB, and will later add inline-compressing FCMs with either 38 TB or 76 TB of effective capacity at a 2:1 compression ratio. The 6000 is based on the PCIe Gen 5 bus, which is twice as fast as the ESS 3500’s PCIe Gen 4 interconnect.
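To put the chassis change in numbers, here is a back-of-envelope sketch of raw (uncompressed) capacity for a fully populated chassis. It assumes every slot holds the largest drive mentioned in the article: 15.36 TB for the ESS 3500 (the top capacity cited later on) and 30 TB for the 6000. The helper name `raw_capacity_tb` is purely illustrative.

```python
# Back-of-envelope raw capacity for a fully populated chassis.
# Assumption: every slot holds one drive of the maximum size quoted in the article.

def raw_capacity_tb(slots: int, drive_tb: float) -> float:
    """Total raw TB when each of `slots` holds one drive of `drive_tb` TB."""
    return slots * drive_tb

# (slots, max drive TB, rack units), as quoted in the article
ess3500 = (24, 15.36, 2)
sss6000 = (48, 30.0, 4)

ess3500_raw = raw_capacity_tb(ess3500[0], ess3500[1])
sss6000_raw = raw_capacity_tb(sss6000[0], sss6000[1])

print(f"ESS 3500: {ess3500_raw:,.2f} TB raw, {ess3500_raw / ess3500[2]:,.2f} TB/RU")
print(f"SSS 6000: {sss6000_raw:,.2f} TB raw, {sss6000_raw / sss6000[2]:,.2f} TB/RU")
```

On these assumptions, doubling both slot count and drive size roughly quadruples raw capacity per chassis (368.64 TB to 1,440 TB), but because the chassis is also twice as tall, raw density per rack unit only doubles (184.32 TB/RU to 360 TB/RU).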

It also supports 400 Gbit NDR InfiniBand vs the 3500’s 200 Gbit HDR InfiniBand, and 200 Gbit Ethernet vs the 3500’s 100 Gbit Ethernet. Put the drive capacity and link speed increases together with newer CPUs and more controller DRAM, and the maximum per-chassis data transfer rate shoots up from the ESS 3500’s 126 GBps to 256 GBps.
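The throughput gain tracks the doubled link speeds fairly closely. The sketch below computes the ratio and, as a rough illustration, the minimum number of ports whose combined line rate could carry each system’s peak throughput. The article gives no port counts, so `min_ports` is a hypothetical helper that only yields a lower bound: it converts Gbit to GB at 8:1 and ignores encoding, protocol, and host overheads.

```python
import math

GBIT_PER_GBYTE = 8  # decimal units: 1 GB = 8 Gbit


def min_ports(throughput_gbytes_s: float, port_gbit_s: float) -> int:
    """Smallest port count whose summed raw line rate covers the throughput.

    Hypothetical lower bound only: real links lose some bandwidth to
    encoding and protocol overhead, so actual systems need at least this many.
    """
    port_gbytes_s = port_gbit_s / GBIT_PER_GBYTE
    return math.ceil(throughput_gbytes_s / port_gbytes_s)


ess3500_gbps, sss6000_gbps = 126, 256  # max GBps per chassis, from the article

print(f"Throughput ratio: {sss6000_gbps / ess3500_gbps:.2f}x")
print("ESS 3500, 200 Gbit ports needed (min):", min_ports(ess3500_gbps, 200))
print("SSS 6000, 400 Gbit ports needed (min):", min_ports(sss6000_gbps, 400))
```

Both systems come out at a floor of six ports, which illustrates that the doubled per-port link speed alone is roughly in proportion to the 2.03x throughput increase.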

IBM says the 6000 has an NVMe turbo tier to speed up small-file transfers. As with the ESS 3500, Storage Scale on the 6000 supports Nvidia’s GPUDirect Storage protocol, which bypasses the host CPU to deliver data quickly to Nvidia GPUs.

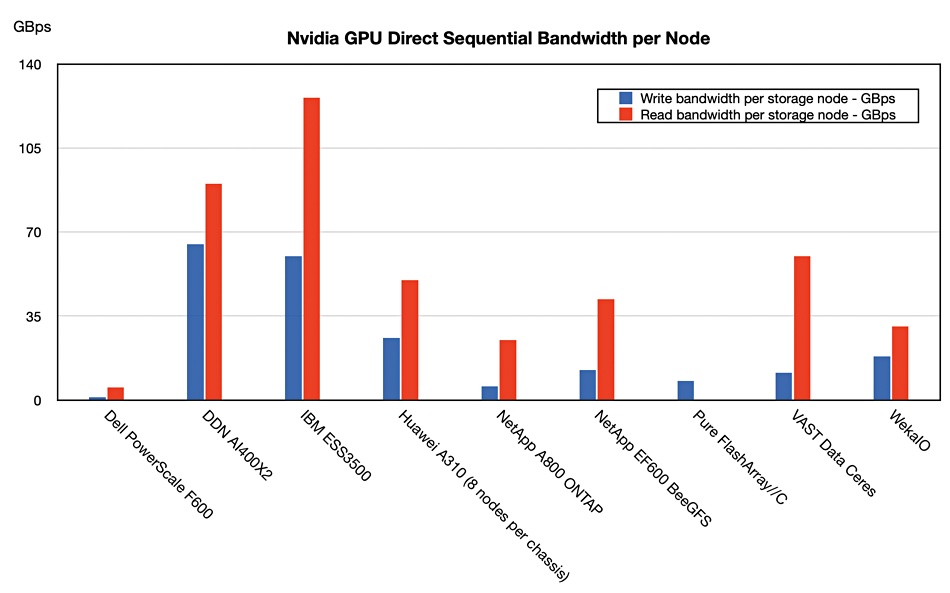

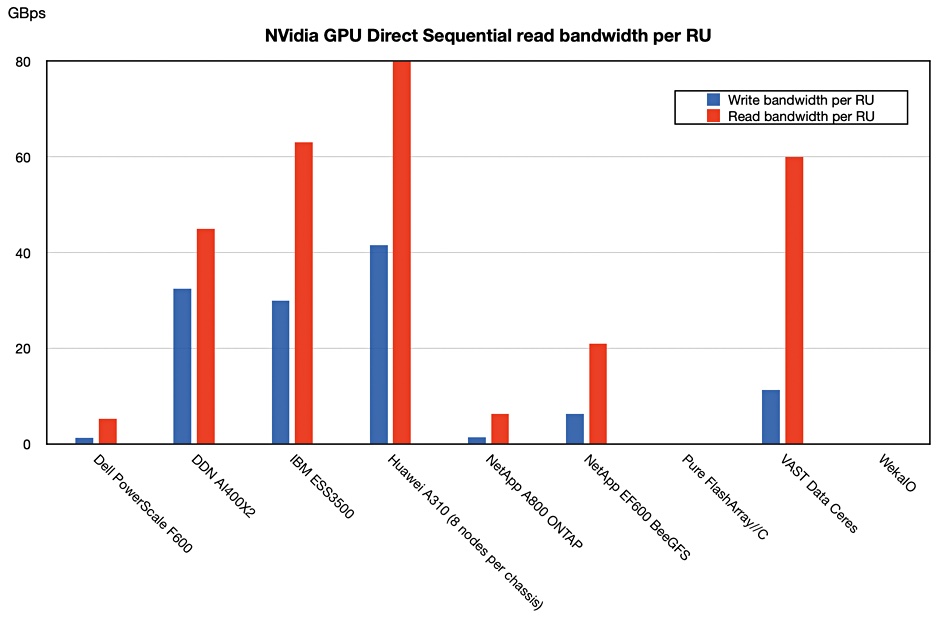

IBM claims the 6000 is 2.5x faster than market-leading competitors, without naming them. Suppliers that already support GPUDirect include Dell (PowerScale), DDN, Huawei, NetApp, Pure Storage, and WekaIO.

We think GPUDirect testing should show the SSS 6000 to be the fastest GPUDirect data supplier of all on a per-node basis, but still slower than Huawei’s A310 on a per-rack-unit basis: an estimated 64 GBps of read bandwidth per RU (256 GBps across a 4RU chassis) vs the A310’s 80 GBps.
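The per-RU estimate is simply chassis throughput divided by chassis height. The sketch below applies that to both IBM systems and sets the result beside the A310’s 80 GBps per RU used above. It assumes, as the estimate does, that the quoted maximum per-chassis transfer rate is a fair proxy for read bandwidth.

```python
# Read bandwidth per rack unit = per-chassis throughput / chassis height.
# Assumption: the quoted max per-chassis transfer rate approximates read bandwidth.

systems = {
    "IBM ESS 3500": (126, 2),  # (GBps per chassis, rack units)
    "IBM SSS 6000": (256, 4),
}

per_ru = {name: gbps / ru for name, (gbps, ru) in systems.items()}
per_ru["Huawei A310"] = 80.0  # already a per-RU figure in the article

# Print from densest to least dense
for name, value in sorted(per_ru.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:13s} {value:5.1f} GBps/RU")
```

One side effect of the comparison: because the 6000 doubles both throughput and chassis height, its per-RU figure (64 GBps) is barely above the ESS 3500’s (63 GBps). The gain is per chassis, not per unit of rack space.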

The 6000 will get new NVMe FCMs based on QLC (4 bits/cell) NAND in the first half of 2024. We’re told these will cost 70 percent less and use 53 percent less energy per TB than the current top-capacity 15.36 TB flash drives for the ESS 3500. IBM says this will let the 6000 store 2.5x as much data as the ESS 3500 in the same floor space. The claim is based on a configuration using the 38 TB FCMs with up to 2:1 inline compression, due in 1H 2024, delivering 900 TB per rack unit, vs 360 TB per rack unit for the previous-generation 2RU Scale System 3500 using 30 TB flash drives.
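IBM’s density comparison can be roughly reproduced from slot counts and drive sizes. This sketch assumes every slot is populated, that the 38 TB FCM figure is raw capacity, and that data compresses at the full 2:1 ratio. Real compression ratios depend on the data. `tb_per_rack_unit` is an illustrative helper, not an IBM sizing tool.

```python
# Effective capacity per rack unit for a fully populated chassis.
# Assumptions: all slots filled, 38 TB is raw FCM capacity, and a full 2:1
# compression ratio (actual ratios vary with the data).

def tb_per_rack_unit(slots: int, drive_tb: float, rack_units: int,
                     compression: float = 1.0) -> float:
    """Effective TB per RU = slots * drive size * compression / chassis height."""
    return slots * drive_tb * compression / rack_units

sss6000 = tb_per_rack_unit(48, 38, 4, compression=2.0)  # 4RU, 38 TB FCMs at 2:1
ess3500 = tb_per_rack_unit(24, 30, 2)                   # 2RU, 30 TB drives, no compression

print(f"SSS 6000: {sss6000:.0f} TB/RU")
print(f"ESS 3500: {ess3500:.0f} TB/RU")
print(f"Ratio:    {sss6000 / ess3500:.2f}x")
```

This gives 912 TB/RU against 360 TB/RU, a ratio of about 2.53x. That is consistent with IBM’s 900 TB/RU and 2.5x figures, which are presumably rounded. It also shows that the 38 TB FCMs only reach that density if they actually achieve 76 TB effective each.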

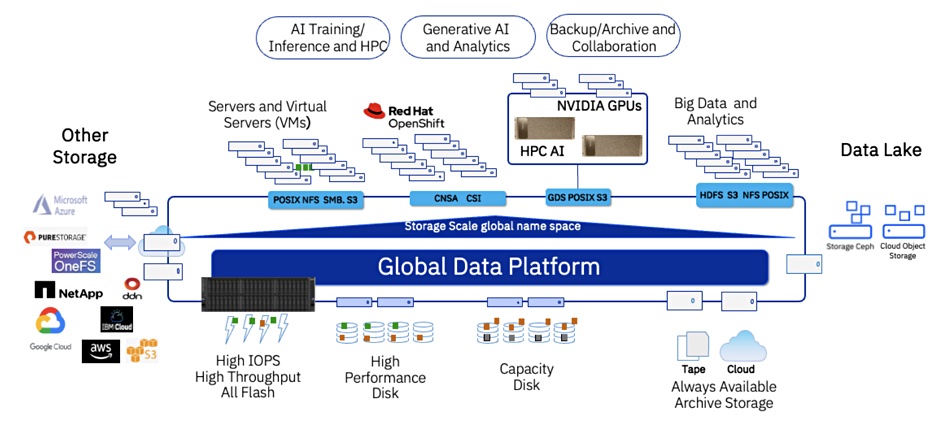

As well as launching its new box, IBM said the Storage Scale System 6000 can act as a collection point for data from multiple sources, a global data platform, as IBM’s diagram shows.

This includes collecting data from external arrays, such as Dell PowerScale, NetApp, and Pure Storage systems, and from the cloud, as well as dispersing data to tape systems or the cloud for longer-term storage.

For more information, read David Wohlford’s blog and check out an SSS 6000 datasheet here. Wohlford is a worldwide Senior Product Marketing Manager for AI and Cloud Scale in IBM Storage.