Snowflake has announced improved support for external data, app development, generative AI models, data governance, and cost management directly in its data warehouse cloud at the Snowday 2023 gabfest.

The data warehouse darling is building an all-in-one data cloud service that customers can use as an abstraction layer over public cloud services, data lakes, and lakehouses, with app development, cost management, and governance capabilities. Many of the new items are due in the near future, but the intent is clear: users need never leave the Snowflake environment.

“The rise of generative AI has made organizations’ most valuable asset, their data, even more indispensable. The company is making it easier for developers to put that data to work so they can build powerful end-to-end machine learning models and full-stack apps natively in the Data Cloud,” said Prasanna Krishnan, Snowflake senior director of product management.

Christian Kleinerman, SVP of Product, said: “Snowflake is making it easier for users to put all of their data to work, without data silos or trade-offs, so they can create powerful AI models and apps that transform their industries.”

The service/function names to look out for are Iceberg, Snowpark, Horizon, Cortex, Snowflake Native App Framework, Snowflake Notebooks, Powered by Snowflake Funding, and Cost Management Interface. Grab a favorite beverage and we’ll start with Iceberg.

Iceberg

Snowflake has a public preview coming for its work integrating external Apache Iceberg format tables with its SQL-based data warehouse and allowing other data engines to access Iceberg data. It says Iceberg Tables support data architectures such as data lakes, data lakehouses, and data mesh, as well as the data warehouse.

Iceberg Tables are a single table type that brings the management and performance of Snowflake to data stored externally in an open format. They make it easier and cheaper to onboard data without requiring upfront ingestion. Iceberg Tables can use either Snowflake or an external service such as AWS Glue as the catalog that tracks table metadata, and a one-line SQL command switches a table to Snowflake’s catalog as a metadata-only operation. Apache Spark can use Snowflake’s Iceberg Catalog SDK to read Iceberg Tables without requiring any Snowflake compute resources.

Snowflake says it has expanded support for semi-structured data with the ability to infer the schema of JSON and CSV files in a data lake (generally available soon). It’s also adding support for table schema evolution (generally available soon).
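Snowflake hasn’t said how its schema inference decides column types. As a rough illustration of the idea, here is a minimal Python sketch that scans a CSV file and gives each column the narrowest type that fits all of its non-empty values. The type labels (BOOLEAN, NUMBER, FLOAT, TEXT), the widening rules, and the function names are assumptions for this example, not Snowflake’s behavior.

```python
import csv
import io
from typing import Dict, Optional


def infer_type(value: str) -> str:
    """Return the narrowest hypothetical type label that fits a single value."""
    if value.strip().lower() in ("true", "false"):
        return "BOOLEAN"
    try:
        int(value)
        return "NUMBER"
    except ValueError:
        pass
    try:
        float(value)
        return "FLOAT"
    except ValueError:
        return "TEXT"


def merge_types(current: Optional[str], new: str) -> str:
    """Widen a column's type so it fits both the types seen so far and the new value."""
    if current is None or current == new:
        return new
    if {current, new} == {"NUMBER", "FLOAT"}:
        return "FLOAT"  # integers fit in a floating-point column
    return "TEXT"  # any other conflict falls back to text


def infer_schema(csv_text: str) -> Dict[str, str]:
    """Infer a column-name-to-type mapping from CSV text with a header row."""
    reader = csv.DictReader(io.StringIO(csv_text))
    columns = reader.fieldnames or []
    schema: Dict[str, Optional[str]] = {name: None for name in columns}
    for row in reader:
        for name in columns:
            value = row.get(name)
            if value is None or value == "":
                continue  # treat empty cells as NULL: they don't constrain the type
            schema[name] = merge_types(schema[name], infer_type(value))
    # Columns that were empty in every row default to TEXT.
    return {name: inferred or "TEXT" for name, inferred in schema.items()}


sample = "id,price,active,name\n1,9.99,true,widget\n2,10,false,\n3,12.5,true,gadget\n"
print(infer_schema(sample))
# {'id': 'NUMBER', 'price': 'FLOAT', 'active': 'BOOLEAN', 'name': 'TEXT'}
```

Note how the price column sees both "10" and "9.99" and settles on FLOAT, while the blank name in the second row is simply skipped.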

Snowpark

Snowpark is a facility for the deployment and processing of non-SQL code. More than 35 percent of Snowflake’s customers use Snowpark on a weekly basis (as of September 2023), and it is also being employed by Python developers for complex ML model development and deployment. New functionality includes:

- Snowpark Container Services (public preview soon in select AWS regions): This enables developers to deploy, manage, and scale containerized workloads within Snowflake’s fully managed infrastructure. Developers can run any component of their application – whether ML training, a ReactJS front end, a large language model, or an API – without needing to move data or manage complex container-based infrastructure. Snowpark Container Services provides an integrated image registry, elastic compute infrastructure, and RBAC-enabled, fully managed Kubernetes-based clusters with Snowflake’s networking and security controls.

- Snowflake Notebooks (private preview): A development interface that offers an interactive, cell-based programming environment for Python and SQL users to explore, process, and experiment with data in Snowpark. Notebooks allow developers to write and execute code, train and deploy models using Snowpark ML, visualize results with Streamlit chart elements, and more within Snowflake’s unified platform.

- Snowpark ML Modeling API (general availability soon): This enables developers and data scientists to scale out feature engineering and simplify model training for faster and better model development. They can implement popular AI and ML frameworks natively on data in Snowflake without having to create stored procedures.

- Snowpark Model Registry (public preview soon): This builds on a native Snowflake model entity and enables the scalable deployment and management of models in Snowflake, including expanded support for deep learning models and open source large language models (LLMs) from Hugging Face. It also provides developers with an integrated Snowflake Feature Store (private preview) that creates, stores, manages, and serves ML features for model training and inference.

For use cases involving files like PDF documents, images, videos, and audio files, you can also now use Snowpark for Python and Scala (generally available) to dynamically process any type of file.

Snowflake Native App Framework

The Snowflake Native App Framework (general availability soon on AWS, public preview soon on Azure) provides customers with the building blocks for app development, including distribution, operation, and monetization within Snowflake’s platform. That is, they can write their own apps to process Snowflake data and sell them to other customers through the Snowflake Marketplace.

Such developers can use Snowflake’s new Database Change Management (private preview soon) to code declaratively and templatize their work to manage Snowflake objects across multiple environments. The Database Change Management features serve as a single source of truth for object creation across various environments, using the common “configuration as code” pattern in DevOps to automatically provision and update Snowflake objects.
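The “configuration as code” pattern boils down to declaring the desired state and letting a tool work out the changes needed to reach it. The Python sketch below shows that reconciliation step in its simplest form: it compares desired object definitions with what already exists and produces an ordered plan. The function name, the name-to-definition dictionaries, and the CREATE/ALTER/DROP labels are hypothetical; this is not how Database Change Management is implemented, and the plan strings are not real Snowflake DDL.

```python
from typing import Dict, List


def plan_changes(desired: Dict[str, str], current: Dict[str, str]) -> List[str]:
    """Diff desired object definitions against current ones (hypothetical sketch).

    Keys are object names; values are their definitions as plain strings.
    Returns human-readable action labels, not executable DDL.
    """
    plan: List[str] = []
    for name in sorted(desired):
        if name not in current:
            plan.append(f"CREATE {name}")  # declared but missing
        elif desired[name] != current[name]:
            plan.append(f"ALTER {name}")  # exists but has drifted from the declaration
    for name in sorted(current):
        if name not in desired:
            plan.append(f"DROP {name}")  # exists but is no longer declared
    return plan


desired = {"ORDERS": "id INT, total FLOAT", "CUSTOMERS": "id INT, name TEXT"}
current = {"ORDERS": "id INT", "LEGACY_LOG": "msg TEXT"}
print(plan_changes(desired, current))
# ['CREATE CUSTOMERS', 'ALTER ORDERS', 'DROP LEGACY_LOG']
```

Because the plan is derived from the declared state, running it against an environment that already matches yields an empty plan, and the same declaration can be applied to development, test, and production environments alike.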

Snowflake also announced the private preview of the Snowflake Native SDK for Connectors, which provides core library support, templates and documentation.

With Snowpark Container Services as part of Snowflake Native Apps (integration in private preview), developers can bring in existing containerized workloads to speed up development, or write app code in the language of their choice and package it as a container.

Horizon

With more data types and tables supported, Snowflake is expanding its Horizon governance capability, which looks after compliance, security, privacy, interoperability, and access capabilities in its Data Cloud. Horizon is getting:

- Additional Authorizations and Certifications: Snowflake recently achieved compliance for the UK’s Cyber Essentials Plus (CE+), the FBI’s Criminal Justice Information Services (CJIS) Security Policy, the IRS’s Publication 1075 Tax Information Security Guidelines, and assessments by the Korea Financial Security Institute (K-FSI), as well as StateRAMP High and US Department of Defense Impact Level 4 (DoD IL4) Provisional Authorization on AWS GovCloud.

- Data Quality Monitoring (private preview): This is used by customers to measure and record data quality metrics for reporting, alerting, and debugging. Snowflake is unveiling both out-of-the-box and custom metric capabilities for users.

- Data Lineage UI (private preview): The Data Lineage UI gives customers a bird’s eye visualization of the upstream and downstream lineage of objects. Customers can see how downstream objects may be impacted by modifications that happen upstream.

- Differential Privacy Policies (in development): Customers can protect sensitive data by ensuring that the output of any one query does not contain information that can be used to draw conclusions about any individual record in the underlying data set.

- Enhanced Classification of Data: Custom Classifiers (private preview), international classification (generally available), and Snowflake’s new UI-based classification workflow (public preview) allow users to define what sensitive data means to their organization and identify it across their data estate.

- Trust Center (private preview soon): This centralizes cross-cloud security and compliance monitoring to reduce security monitoring costs, resulting in lower total cost of ownership (TCO) and the prevention of account risk escalations. Customers will be able to discover security and compliance risks based on industry best practices, with recommendations to resolve and prevent violations.
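Snowflake hasn’t said which mechanism its Differential Privacy Policies will use. For a sense of how the guarantee described in that item can work, the sketch below applies the textbook Laplace mechanism to a single count query: it adds random noise whose scale is the most one record can change the answer, divided by a privacy parameter epsilon. The function names and sample data are hypothetical, and a real system would also need to track how much privacy budget has been spent across queries.

```python
import random
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise as the difference of two exponential draws."""
    # Each expovariate(1/scale) draw has mean `scale`; the difference of two
    # independent draws follows a Laplace distribution centered on zero.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(records: Iterable[T], predicate: Callable[[T], bool],
                  epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of matching records (textbook Laplace mechanism).

    Adding or removing one record changes a count by at most 1 (sensitivity 1),
    so noise with scale 1/epsilon makes this single query epsilon-differentially
    private. Smaller epsilon means more noise and stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(7)
ages = [23, 35, 41, 52, 67, 29, 38]  # hypothetical data; the true count of ages >= 40 is 3
print(private_count(ages, lambda age: age >= 40, epsilon=0.5, rng=rng))
```

Any single answer may be off by a few units, which is the point: whether or not one particular person is in the table, the distribution of answers barely changes.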

Cortex

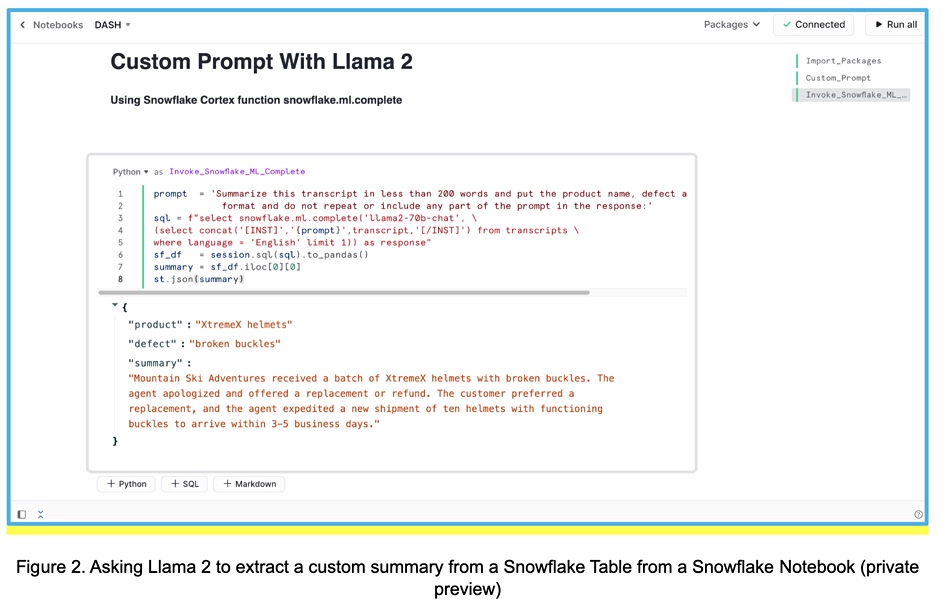

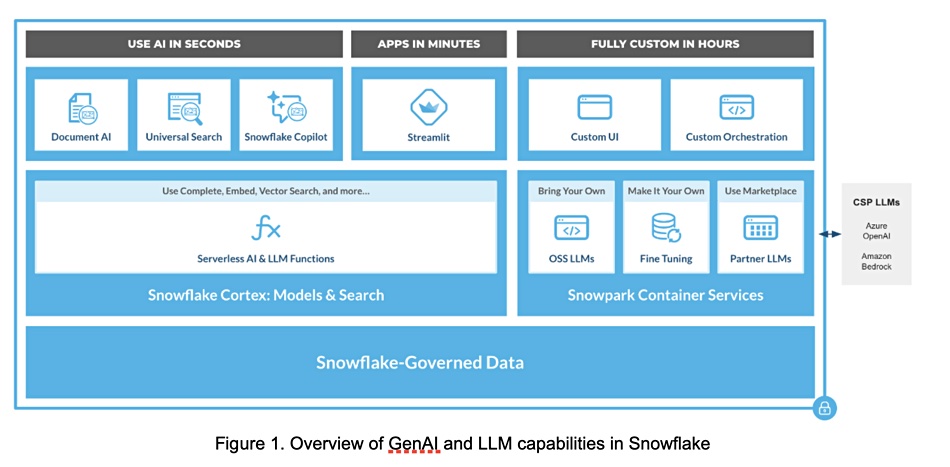

Snowflake says Cortex (available in private preview) is an intelligent, fully managed service that offers access to AI models, LLMs, and vector search functionality so organizations can quickly analyze data and build AI applications. It gives users access to a growing set of serverless functions that enable inference on generative LLMs such as Meta’s Llama 2 model, task-specific models to accelerate analytics, and advanced vector search functionality.

Snowflake Cortex is also the underlying service that enables LLM-powered experiences that have a full-fledged user interface. These include Document AI (in private preview), Snowflake Copilot (in private preview), and Universal Search (in private preview).

Cortex includes a set of general-purpose functions that use both open source and proprietary LLMs to help with prompt engineering across a broad range of use cases. Initial functions include:

- Complete (in private preview): Users can pass a prompt and select the LLM they want to use. For the private preview, users will be able to choose among the three Llama 2 model sizes (7B, 13B, and 70B parameters).

- Text to SQL (in private preview): This generates SQL from natural language using the same Snowflake LLM that powers the Snowflake Copilot experience.

These functions include vector embedding and semantic search functionality so users can contextualize the model responses with their data to create customized apps in minutes. This includes:

- Embed Text (in private preview soon): Transforms a given text input to vector embeddings using a user selected embedding model.

- Vector Distance (in private preview soon): To calculate the distance between vectors, developers will have several functions to choose from: cosine similarity (vector_cosine_distance()), L2 norm (vector_l2_distance()), and inner product (vector_inner_product()).

- Native Vector Data Type (in private preview soon): To let these functions run against your data, vector will become a natively supported data type in Snowflake alongside its existing types.
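The three distance functions listed above correspond to standard vector metrics. This pure-Python sketch computes each one and uses cosine similarity to rank a toy set of embeddings, which is the core of semantic search. It does not call Snowflake; the function names, the three-dimensional vectors, and the document titles are made up for illustration. The article labels the cosine metric “similarity” while the function name says “distance”, and it doesn’t say which one vector_cosine_distance() actually returns, so the sketch computes plain cosine similarity.

```python
import math
from typing import Dict, List, Sequence


def _check_dims(a: Sequence[float], b: Sequence[float]) -> None:
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")


def inner_product(a: Sequence[float], b: Sequence[float]) -> float:
    """Sum of element-wise products."""
    _check_dims(a, b)
    return sum(x * y for x, y in zip(a, b))


def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean (L2 norm) distance between two vectors."""
    _check_dims(a, b)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1 = same direction, 0 = orthogonal."""
    norms = math.sqrt(inner_product(a, a)) * math.sqrt(inner_product(b, b))
    if norms == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return inner_product(a, b) / norms


def top_k(query: Sequence[float], docs: Dict[str, Sequence[float]], k: int = 2) -> List[str]:
    """Rank documents by cosine similarity to the query and keep the best k."""
    ranked = sorted(docs, key=lambda name: cosine_similarity(query, docs[name]), reverse=True)
    return ranked[:k]


# Toy 3-dimensional "embeddings"; real embedding models produce far longer vectors.
docs = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "return window": [0.8, 0.2, 0.1],
}
print(top_k([1.0, 0.0, 0.0], docs))  # ['refund policy', 'return window']
```

Cosine similarity ignores vector length and compares direction only, which is why it is a common default for text embeddings; L2 distance and inner product do take magnitude into account.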

Streamlit in Snowflake (public preview): With Streamlit, developer teams can accelerate the creation of LLM apps, building interfaces in just a few lines of Python code with no front-end experience required, we’re told. These apps can then be shared across an organization via unique URLs, generated with a single click, that use Snowflake’s existing role-based access controls.

Powered by Snowflake Funding

The Powered by Snowflake Funding Program intends to invest up to $100 million in the next generation of startups building Snowflake Native Apps. Participating venture capital firms include Altimeter, Amplify Partners, Anthos, Coatue, ICONIQ Growth, IVP, Madrona, Menlo Ventures, and Redpoint Ventures. AWS is helping by providing $1 million in free Snowflake credits on AWS over four years to startups building Snowflake Native Apps.

Snowflake says apps that are Powered by Snowflake benefit from the speed, scale, and performance of Snowflake’s platform for accelerated time to market, improved operational efficiency, and a more seamless customer experience. With the Snowflake Native App Framework, developers can build, market, monetize, and distribute an app to customers across the Data Cloud ecosystem via Snowflake Marketplace, all from within Snowflake’s platform.

Stefan Williams, VP Corporate Development and Snowflake Ventures, said in a statement: “A new way to deploy enterprise applications is emerging as companies look to bring their apps and application code closer to their data.” In other words, startups can build apps directly in the Snowflake data cloud and compete for VC funding and AWS credits.

Williams added: “Innovative enhancements in AI enabled through Snowpark, the Snowflake Native App Framework, and Snowflake’s … data privacy, security, and governance make it easier than ever for startups to build, deploy, and monetize enterprise apps. With our venture capital partners and AWS, the Powered by Snowflake Funding Program will accelerate this new era of software development.”

Potential developers can register their interest here.

Cost Management Interface

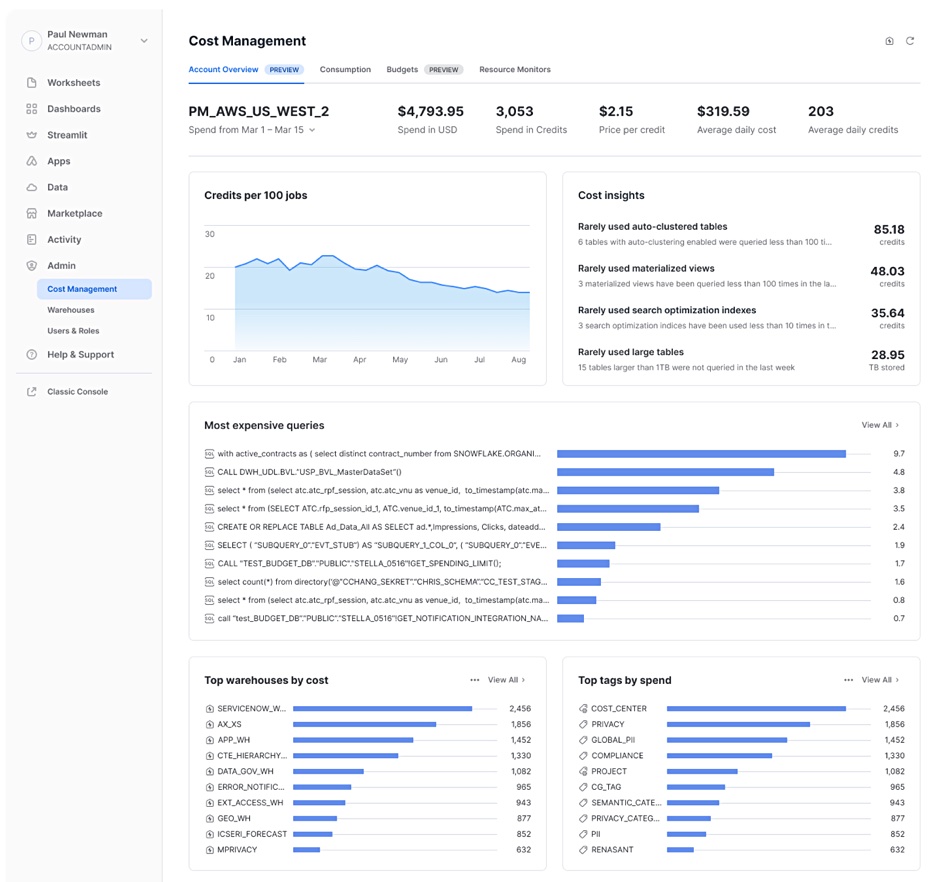

Customer admins will be able to manage and optimize their Snowflake spend with this interface, getting visibility into account-level usage and spend metrics. The Cost Management Interface in Snowsight has an Account overview that shows account-level consumption and spend, including dollars and credits spent over a specified time period, average daily spend, top warehouses by cost, and the most expensive queries. The “credits per 100 jobs” trend graphic further highlights how the effective value of a Snowflake credit changes over time.

Admins will also be able to optimize their resource allocation on Snowflake through recommendations (private preview soon).
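The article doesn’t define the “credits per 100 jobs” metric from the Cost Management Interface. Assuming it means credits consumed divided by jobs run in a period, scaled to 100 jobs, the arithmetic looks like the following. The function names and monthly figures are hypothetical.

```python
from typing import List, Tuple


def credits_per_100_jobs(credits: float, jobs: int) -> float:
    """Credits consumed per 100 jobs (assumed definition of the metric)."""
    if jobs <= 0:
        raise ValueError("jobs must be positive")
    return credits * 100 / jobs


def credits_trend(periods: List[Tuple[str, float, int]]) -> List[Tuple[str, float]]:
    """Map (period, credits, jobs) rows to (period, credits per 100 jobs)."""
    return [(period, credits_per_100_jobs(credits, jobs)) for period, credits, jobs in periods]


# Hypothetical monthly usage figures.
usage = [("2023-07", 500.0, 20000), ("2023-08", 540.0, 24000), ("2023-09", 560.0, 28000)]
print(credits_trend(usage))
# [('2023-07', 2.5), ('2023-08', 2.25), ('2023-09', 2.0)]
```

In these sample figures total credits rise each month, but jobs rise faster, so the metric falls from 2.5 to 2.0: each credit is doing more work.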

Snowflake has also introduced Budgets in public preview on AWS. Budgets let admins set spend controls at the account level, or drill down to more granular levels, for a fixed calendar month that resets on the first day of each month. Both Budgets and Resource Monitors will be accessible via the Cost Management Interface, so admins can control Snowflake spend from one place.
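The monthly reset described for Budgets can be modeled in a few lines. The following Python class is a hypothetical sketch, not the Budgets API: it accumulates credit usage within a calendar month, starts again from zero when usage arrives in a new month, and reports whether the limit has been exceeded. It assumes usage records arrive in date order; what a real budget does on overspend (notify, suspend, or something else) is left out.

```python
from datetime import date
from typing import Optional, Tuple


class MonthlyBudget:
    """Hypothetical spend tracker whose total resets at the start of each calendar month."""

    def __init__(self, limit_credits: float) -> None:
        self.limit_credits = limit_credits
        self.period: Optional[Tuple[int, int]] = None  # (year, month) being tracked
        self.spent = 0.0

    def record(self, day: date, credits: float) -> bool:
        """Add usage for `day`; return True if this month's spend exceeds the limit.

        Assumes calls arrive in date order.
        """
        period = (day.year, day.month)
        if period != self.period:
            # First usage seen in a new calendar month: start again from zero.
            self.period = period
            self.spent = 0.0
        self.spent += credits
        return self.spent > self.limit_credits


budget = MonthlyBudget(limit_credits=100)
print(budget.record(date(2023, 11, 20), 60))  # False: 60 of 100 used
print(budget.record(date(2023, 11, 28), 50))  # True: 110 exceeds 100
print(budget.record(date(2023, 12, 1), 30))   # False: December starts from zero
```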

The Snowflake data warehouse is also getting support for ASOF joins (private preview soon), which will let data analysts write simpler queries that combine time series data. Snowflake is also improving support for advanced analytics by raising the file size limit for loading large objects to 128 MB, likewise in private preview soon.
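An as-of join matches each row on one side with the most recent row on the other side whose timestamp is at or before it, typically within the same key, such as a ticker symbol. The article doesn’t show Snowflake’s ASOF join syntax, so this Python sketch only illustrates those commonly used “backward” semantics; the function name and the trade and quote data are hypothetical.

```python
from bisect import bisect_right
from collections import defaultdict
from typing import Any, Dict, List, Tuple


def asof_join(left: List[Tuple[str, int, Any]],
              right: List[Tuple[str, int, Any]]) -> List[Tuple[str, int, Any, Any]]:
    """Attach to each left row the latest right value for the same key at or before its time.

    Rows are (key, timestamp, value). Left rows with no earlier match get None.
    """
    series: Dict[str, List[Tuple[int, Any]]] = defaultdict(list)
    for key, ts, value in sorted(right, key=lambda row: (row[0], row[1])):
        series[key].append((ts, value))
    stamps = {key: [ts for ts, _ in rows] for key, rows in series.items()}

    joined = []
    for key, ts, left_value in left:
        # Index of the last right timestamp <= ts for this key (-1 if none).
        i = bisect_right(stamps.get(key, []), ts) - 1
        right_value = series[key][i][1] if i >= 0 else None
        joined.append((key, ts, left_value, right_value))
    return joined


trades = [("ACME", 10, 100), ("ACME", 15, 50), ("GLOBEX", 12, 10), ("ACME", 3, 5)]
quotes = [("ACME", 5, 20.0), ("ACME", 12, 20.5), ("GLOBEX", 11, 7.25)]
for row in asof_join(trades, quotes):
    print(row)
# ('ACME', 10, 100, 20.0)
# ('ACME', 15, 50, 20.5)
# ('GLOBEX', 12, 10, 7.25)
# ('ACME', 3, 5, None)
```

Without a dedicated as-of join, the same result in SQL usually needs a correlated subquery or a window function to find the latest earlier row, which is presumably the complexity the new join type is meant to remove.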

Snowflake is flooding customers with news about wider access to data, better app development facilities, and generative AI model support. The company doesn’t want to lose customers to Databricks, Dremio, or any other upstart in large-scale data organization, analytics, or model training. When CEO Frank Slootman says “Amp It Up,” he really does mean it.

To find out more, explore a plethora of Snowflake blogs here.