My mental map of data and storage classification had become confused, and briefings with suppliers made it obvious that a refresh was needed.

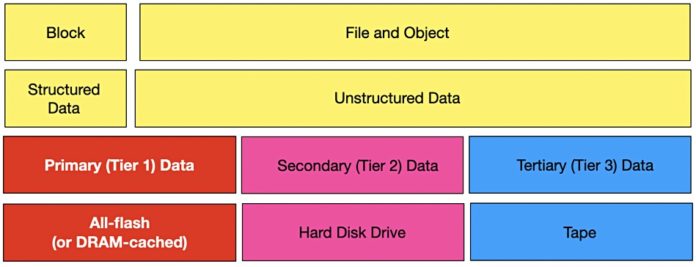

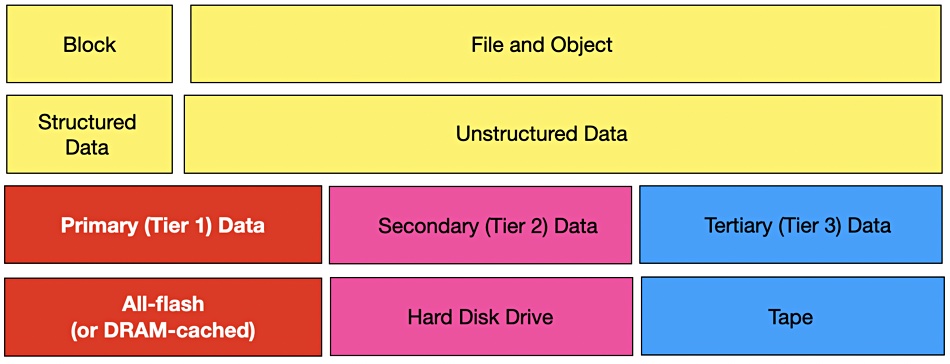

Going back to the basic block-file-object data types I had a landscape clear-out and then tidied everything up — notably in the mission-critical-means-more-than-block area. See if you agree with all this.

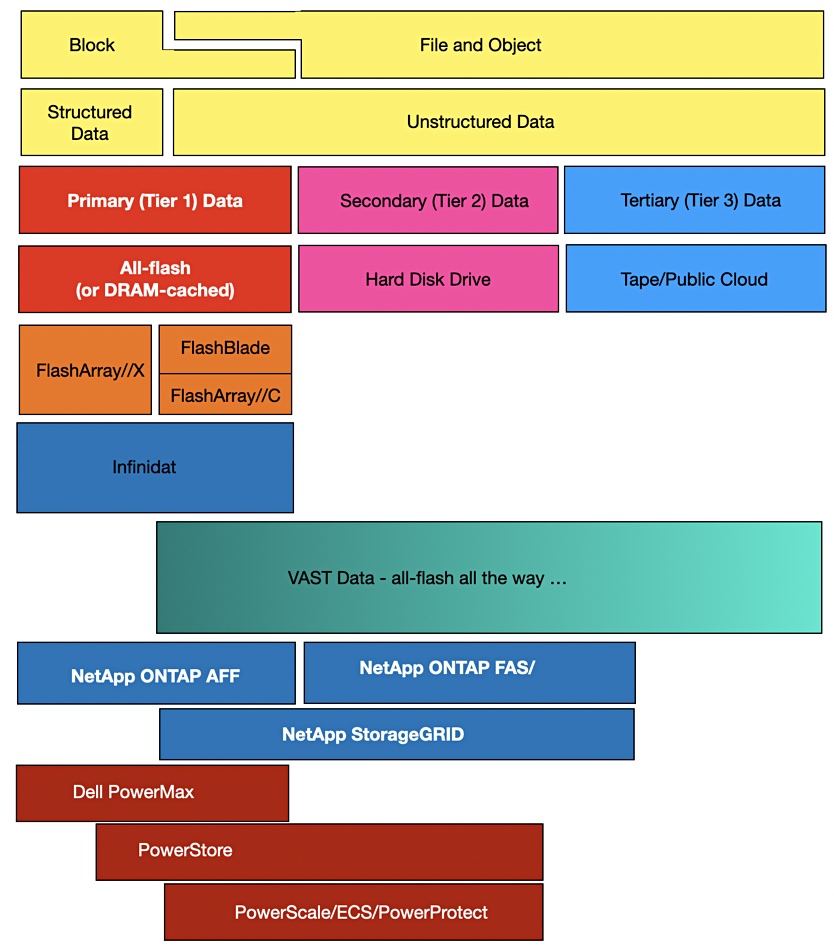

Update. Suppliers and storage categories diagram updated to remove VAST Data from the block category and add FlashArray//C. 25 Oct 2020.

Block storage is for structured — meaning relational or traditional database — data, and is regarded as tier 1, primary data, and also as mission-critical data. If the ERP database stops then the business stops. Typically such data will be stored in all-flash arrays attached to mainframes — big iron — or powerful x86 servers.

Illustrating this diagrammatically started out simple, on the left, but became more complex as we moved rightwards.

File and object data is regarded as unstructured data, and often also as secondary data. It may be, but that is not the whole story, because file data certainly, and object data too, can be mission-critical. It can be primary data and will then be put in all-flash arrays as well.

It’s different from block-based primary data where the applications are all database-centric.

Primary file and object data storage tends to be used in complex application workflows where applications work in sequence to process the data towards a desired end result. Think entertainment and media with a sequence of steps from video capture through special effects stages with layers of effects built up, through editing to production. The same set of files is being used, added to, and extended in a concurrent, real-time fashion needing low latency and high throughput.

The same is true in a hospital medical imaging system, though with fewer stages. There is image capture, which can involve many large scan files, then analysis, and then comparison with potentially millions of other files to find matches and follow treatment and outcome trails to find the best remedial procedure.

This needs to be done in real time, while the consultant doctor is with the patient and reviewing the captured image scans.

These both seem similar to a high-performance computing (HPC) application involving potentially millions of files with low-latency and parallel, high-throughput access by dozens of compute cores running complex software to simulate and calculate results based on the data.

We might say that primary file and object storage is needed by mission-critical applications (at the user level certainly and organisation level possibly) which have enterprise HPC characteristics. A separate use case is for fast restore of backup data.

Secondary and tertiary data

Secondary data is different. It is unstructured, file and object-based, not block, and it is much less performance-centric, in both a latency and a throughput sense. The need is more for capacity than performance. It does not, therefore, need the speed of all-flash storage and we see hybrid flash/disk or all-disk arrays being used.

Tertiary data is the realm of tape, of cold storage, of low access rate archives. These can be either on-premises in tape libraries or in the public cloud, hidden behind service abstractions such as Amazon's Glacier and Glacier Deep Archive.
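To make the three tiers concrete, here is a minimal Python sketch of the classification as described so far. The `Tier` enum, the `TYPICAL_MEDIA` table and the `classify` function are hypothetical names invented for illustration, and the decision rule is a deliberate simplification: block data is always treated as primary, rarely accessed data as tertiary, and everything else is split on whether it is performance-critical.

```python
from enum import Enum


class Tier(Enum):
    PRIMARY = "primary"
    SECONDARY = "secondary"
    TERTIARY = "tertiary"


# Typical media per tier, taken from the descriptions in the text.
TYPICAL_MEDIA = {
    Tier.PRIMARY: ["all-flash array"],
    Tier.SECONDARY: ["hybrid flash/disk array", "all-disk array"],
    Tier.TERTIARY: ["tape library", "public cloud cold storage"],
}

DATA_TYPES = {"block", "file", "object"}


def classify(data_type: str, performance_critical: bool, rarely_accessed: bool) -> Tier:
    """Hypothetical rule of thumb, not a formal taxonomy."""
    if data_type not in DATA_TYPES:
        raise ValueError(f"unknown data type: {data_type!r}")
    # Block (database) data is treated as primary in this landscape.
    if data_type == "block":
        return Tier.PRIMARY
    # Low access rate archives are tertiary.
    if rarely_accessed:
        return Tier.TERTIARY
    # File and object data can be primary when it is performance-critical.
    if performance_critical:
        return Tier.PRIMARY
    return Tier.SECONDARY
```

With these assumptions, a media workflow's working set (`classify("file", True, False)`) lands in the primary tier alongside the ERP database, while a capacity-oriented file share (`classify("file", False, False)`) lands in the secondary tier.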

Suppliers

Having laid out this data and storage landscape we can match some suppliers’ products to it and see how valid the ideas are.

Let’s start with a straightforward matching exercise: Pure Storage. Its FlashArrays are for storing and accessing primary block data. Its FlashBlade products are for storing and accessing primary unstructured data and for the fast restore of backup data.

Infinidat is another straightforward case. Its arrays store block data, hence primary data and, although predominantly disk-based, have effective DRAM caching and caching software to provide faster-than-all-flash performance. Recently it has launched an all-flash system with the same DRAM caching.

VAST Data supplies a one-trick hardware pony — this is meant in a positive way — an all-flash system with persistent memory used to hold metadata, and compressed and deduplicated data stored in QLC flash. This so-called Universal Storage is presented as a single store for all use-cases except primary block data.

NetApp supplies all-flash FAS for primary data, both file and block, hybrid FAS systems for secondary unstructured file data, and StorageGRID systems for object data, both primary (all-flash StorageGRID) and secondary.

A look at Dell’s products suggests that PowerMax is for primary block data, PowerStore for primary block and file data and secondary file data, and PowerScale for primary and secondary unstructured file data. ECS is for object data.

We can position Qumulo too. It’s for primary and secondary file data, with WekaIO targeting primary file data with its high-performance file software.
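The supplier matching above can be written down as a small lookup table, which makes it easy to ask which products sit in a given cell of the landscape. This is only a sketch of my reading of the paragraphs above: the `PLACEMENT` dictionary and `products_for` function are hypothetical names, the product labels are informal, and two entries rest on assumptions flagged in the comments (VAST covering every cell except primary block, and ECS covering both primary and secondary object data since no tier is stated).

```python
# Each product maps to the (tier, data type) cells it is positioned in.
PLACEMENT = {
    "Pure FlashArray": {("primary", "block")},
    "Pure FlashBlade": {("primary", "file"), ("primary", "object")},
    "Infinidat arrays": {("primary", "block")},
    # Assumption: "all use-cases except primary block" read as these four cells.
    "VAST Universal Storage": {
        ("primary", "file"), ("primary", "object"),
        ("secondary", "file"), ("secondary", "object"),
    },
    "NetApp all-flash FAS": {("primary", "block"), ("primary", "file")},
    "NetApp hybrid FAS": {("secondary", "file")},
    "NetApp StorageGRID": {("primary", "object"), ("secondary", "object")},
    "Dell PowerMax": {("primary", "block")},
    "Dell PowerStore": {
        ("primary", "block"), ("primary", "file"), ("secondary", "file"),
    },
    "Dell PowerScale": {("primary", "file"), ("secondary", "file")},
    # Assumption: the text gives no tier for ECS, so both are listed.
    "Dell ECS": {("primary", "object"), ("secondary", "object")},
    "Qumulo": {("primary", "file"), ("secondary", "file")},
    "WekaIO": {("primary", "file")},
}


def products_for(tier: str, data_type: str) -> list[str]:
    """Return the products positioned in one cell, sorted by name."""
    return sorted(
        name for name, cells in PLACEMENT.items() if (tier, data_type) in cells
    )


print(products_for("primary", "block"))
```

Querying the primary block cell returns the two Dell products, Infinidat, NetApp all-flash FAS and Pure FlashArray, which matches the text: VAST, Qumulo and WekaIO do not appear there.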

The two main take-aways from this exercise are, first, that unstructured data spans both primary and secondary data storage and, second, that primary unstructured data is being used in enterprise HPC applications across a variety of vertical markets.