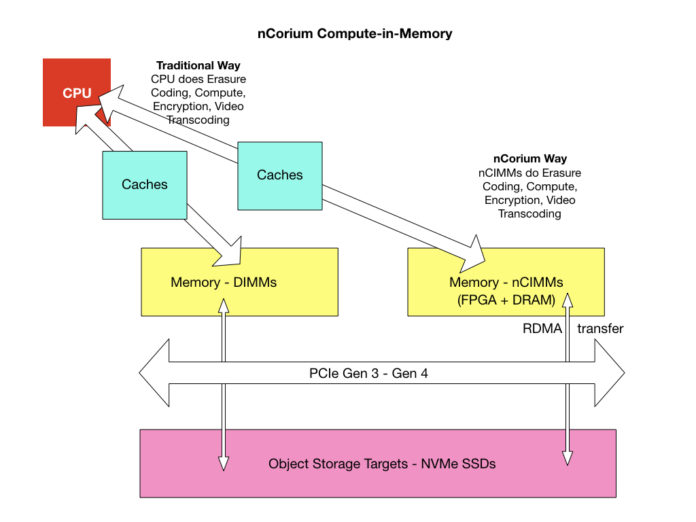

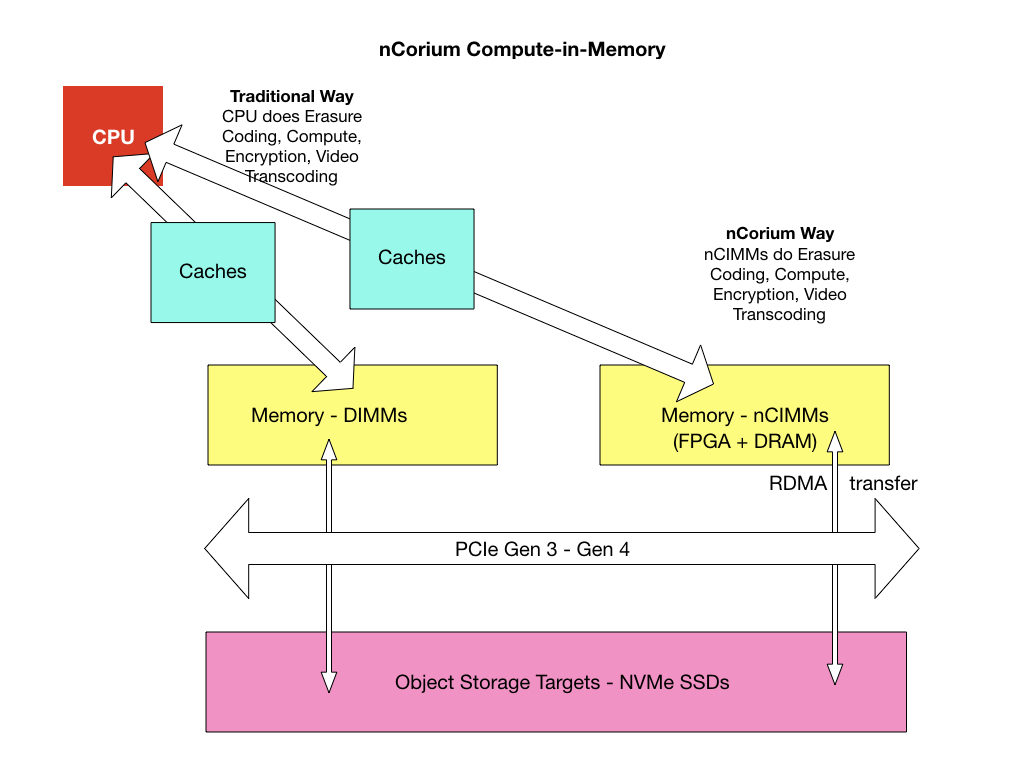

nCorium rejects the idea of performing compute in storage to accelerate storage IO. The early-stage storage startup suggests we do it in memory instead, using FPGA-enhanced DIMMs to give host servers a greater boost.

The offloading of repetitive storage operations from a host CPU is being pioneered by NGD, with in-situ processing inside NVMe SSDs. Samsung, ScaleFlux and Eideticom are also active in the field. These are all examples of computational storage. But nCorium takes a different tack, intended to deliver storage computation in memory. And no, they are not just the same words in different order.

Systems are much faster when low-level storage compute work is performed in memory before processed data hits the storage drives, nCorium argues.

The company’s first prototype does erasure coding using its compute-in-memory modules (nCIMMs). Over time nCorium wants to add compression and encryption, with artificial intelligence, machine learning and video transcoding also on the roadmap. Watch this space.

Storage computation in memory

nCorium is presenting today at SC19 in Denver and will demo the offloading of compute-intensive, storage IO-related functions to speed overall server application processing.

According to the SC19 abstract, nCIMMs “replace the traditional DIMMs in the server architectures and offer a path to achieve an order of magnitude better compute performance at the socket level.”

Early nCorium testing at Los Alamos National Laboratory (LANL) with parallel filesystems suggests it can speed up host servers by five times or more, depending upon the volume of storage grunt work.
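To see why the gain would depend on the volume of storage grunt work, here is a back-of-the-envelope sketch. It assumes a simple Amdahl-style model that is ours, not nCorium's: some fraction of host CPU time goes on storage work, all of it is offloaded, and the offload itself costs the host nothing. The function names are hypothetical.

```python
def host_speedup(offload_fraction: float) -> float:
    """Application speedup if `offload_fraction` of CPU time is fully offloaded.

    Assumed model (not from nCorium): speedup = 1 / (1 - offload_fraction).
    """
    if not 0.0 <= offload_fraction < 1.0:
        raise ValueError("offload_fraction must be in [0, 1)")
    return 1.0 / (1.0 - offload_fraction)


def fraction_for_speedup(speedup: float) -> float:
    """Inverse of host_speedup: CPU share that must be offloaded for a given gain."""
    if speedup < 1.0:
        raise ValueError("speedup must be at least 1")
    return 1.0 - 1.0 / speedup


if __name__ == "__main__":
    for share in (0.2, 0.5, 0.8):
        print(f"{share:.0%} storage work offloaded: {host_speedup(share):.2f}x")
    print(f"CPU share implied by a 5x gain: {fraction_for_speedup(5.0):.0%}")
```

Under those assumptions, a five-fold gain implies a host that was spending roughly four-fifths of its cycles on storage work, which is why lightly loaded servers would see far less benefit.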

The demo rig included a FlacheStreams Storage Server, OEMed from a company called EchoStreams, using Kioxia PCIe Gen 4 NVMe SSDs and nCorium’s Compute-in-Memory Modules (nCIMMs).

nCorium and LANL used the nCIMMs to compute Reed-Solomon erasure coding. With this repetitive storage grunt work offloaded to nCorium’s ‘acceleration engines’, the host system has more CPU cycles for app work.
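For readers unfamiliar with erasure coding, the sketch below shows the basic idea in its simplest form. It is not Reed-Solomon and not nCorium's implementation: it uses a single XOR parity shard, which can rebuild one lost shard, whereas Reed-Solomon generalises this to several parity shards using finite-field arithmetic. The function names are hypothetical, and shards are assumed to be the same length.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode_parity(shards: List[bytes]) -> bytes:
    """Return one parity shard: the XOR of all data shards."""
    return reduce(xor_bytes, shards)


def recover(shards: List[Optional[bytes]], parity: bytes) -> List[bytes]:
    """Rebuild at most one missing shard (marked None) from the parity shard."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single parity can rebuild only one lost shard")
    if not missing:
        return list(shards)
    survivors = [s for s in shards if s is not None]
    # XORing the parity with every surviving shard leaves the lost shard.
    rebuilt = reduce(xor_bytes, survivors, parity)
    restored = list(shards)
    restored[missing[0]] = rebuilt
    return restored


if __name__ == "__main__":
    data = [b"abcd", b"efgh", b"ijkl"]
    parity = encode_parity(data)
    damaged = [data[0], None, data[2]]  # simulate a failed drive
    print(recover(damaged, parity) == data)
```

Every byte written has to pass through this kind of loop, which is the sort of repetitive, data-heavy work that the article describes being moved off the host CPU.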

In an abstract of their SC19 presentation, nCorium’s CTO Suresh Devalapalli and CEO Arvindh Lalam, along with LANL scientist Brett Neuman, argue that the “mismatch between compute and data movement from the network to system memory, system memory to the CPU caches, and system memory to storage devices creates significant bottlenecks in streaming applications. nCorium’s technology merges compute capabilities into the memory channel that allows applications to offload compute and I/O intensive tasks to low power reconfigurable compute engines that deliver higher throughputs at lower energy consumption.”

The idea is to reduce the amount of data movement between storage devices and memory, and also between memory and the host’s CPUs via the CPU caches. Such data movement takes place when data is erasure-coded, compressed or encrypted, and also during video transcoding and some types of AI and machine learning work.

nCorium offloads this repetitive work to a dedicated FPGA compute engine colocated with memory in its nCIMMs. These sit between a server’s CPU and caches on one side and the PCIe bus-connected storage devices on the other. RDMA techniques are used to move data between storage and the nCIMMs.

The nCIMMs sit in the data path and accelerate it. Kioxia, the company formerly known as Toshiba Memory Corp, is keen on the idea because its PCIe Gen 4 SSDs push the nCIMM-using system faster still – eight to 10 times faster than a server configured with traditional memory DIMMs and SSDs.