Here’s another great big batch of storage news items, two dozen or so of them, to give you a flavour of what’s going on apart from the headline items.

Delphix and 451 DataOps report

A 451 Research DataOps report found that:

- 13 per cent of enterprises report data growth in excess of 2TB per day.

- 47 per cent say it takes 4-5 days to provision a new data environment.

- 66 per cent cite compliance and improved security as the number one business benefit of DataOps.

The report was commissioned by Delphix, a DataOps evangelist, so we wondered if it was a pay-for-play job. But, we’re told, 451 was already exploring DataOps adoption before Delphix got involved. It started the research a year ago and concluded it this January.

We’re also told that researcher Matt Aslett was genuinely surprised by how strongly the surveyed users responded.

Get the report here (registration required).

Fujitsu’s SAP-happy HCI

Fujitsu says its PRIMEFLEX for VMware vSAN hyper-converged system, optimised for SAP applications, uses a PRIMERGY RX4770 M4 server. This is a 2U, 4-socket system with Xeon SP Silver, Gold or Platinum processors (up to 28 cores each) and up to 6,144 GB of 2,666 MHz DDR4 memory (48 DIMM slots). There are eight PCIe Gen 3 slots, and either up to 16 2.5-inch HDDs/SSDs plus one optical disk drive (ODD), or up to 12 2.5-inch SFF PCIe SSDs.

The vSAN storage for SAP HANA uses NVMe drives for the cache tier and SATA SSDs for data.

The HCI software stack comes from VMware, and all software currently supported by VMware vSAN can also be used with PRIMEFLEX for VMware vSAN. Network virtualisation can optionally be integrated using VMware NSX.

Quobyte’s HPC storage gets into African pest control

HPC storage software producer Quobyte has teamed up with the Centre for Agriculture and Bioscience International (CABI) to help African farms fight pest infestations better.

The Quobyte software runs on the UK’s JASMIN supercomputer and contributes to the PRISE (Pest Risk Information Service) project through a big data analytics process that helps small farms in Ghana, Kenya and Zambia.

CABI is an international nonprofit that uses science and technology to solve some of the world’s problems in agriculture and the environment.

PRISE uses earth observation technology, satellite positioning, weather, and pest lifecycle information to forecast the risk of pest outbreaks and so enable farmers to take preventative steps to reduce crop losses and ensure better yields. The farmers can, for example, identify a disease, spray a pesticide, adjust irrigation, and so forth.

The project can issue forecasts a week or more in advance and will roll out in more countries early next year. There are plans to bolster the service by collecting data from additional countries, including crowdsourced field data supplied by farmers, to increase output and improve the accuracy of its prediction models.

Contributors to PRISE include King’s College London, UK Space Agency, Swiss Agency for Development and Cooperation, Australian Centre for International Agricultural Research, and the UK Science and Technology Facilities Council (STFC) Centre for Environmental Data Analysis.

Shorts

Atto Technology will exhibit its XstreamCORE 8200 at the HPA Tech Retreat, Feb. 11-15, Palm Desert, CA. It is a hardware-accelerated protocol bridge with storage controller features that lets multiple workstations share a pool of SAS storage over an iSCSI or iSER network. The product has two 40Gb Ethernet ports and four x4 12Gbit/s Mini-SAS HD connectors. It achieves up to 1.2 million 4K IOPS and 6.4GB/sec throughput per controller, with only two microseconds of added latency.

Atto says that with the XstreamCORE 8200 you can easily configure and manage pooled block storage through an interface that is less complex than traditional server interfaces. The pooled storage can be assembled from new or re-purposed compatible storage hardware.
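As a rough sanity check on these figures, here is a back-of-envelope sketch. It assumes "4K" means 4,096-byte transfers, uses decimal gigabytes and ignores protocol and encoding overheads, so it is an approximation and says nothing about how Atto actually measured its numbers.

```python
# Back-of-envelope check of the XstreamCORE 8200 figures quoted above.
# Assumptions: "4K" = 4,096-byte I/Os; GB = 10**9 bytes; overheads ignored.

IOPS_4K = 1_200_000      # quoted peak 4K IOPS per controller
BLOCK_BYTES = 4 * 1024   # assumed size of one "4K" I/O
ETHERNET_PORTS = 2       # two 40Gb Ethernet ports
PORT_GBIT = 40           # line rate per port, Gbit/s


def iops_to_gb_per_s(iops: int, block_bytes: int) -> float:
    """Convert an IOPS figure at a fixed block size into GB/s."""
    return iops * block_bytes / 1e9


def line_rate_gb_per_s(ports: int, gbit_per_port: int) -> float:
    """Raw aggregate Ethernet line rate in GB/s (8 bits per byte)."""
    return ports * gbit_per_port / 8


small_io = iops_to_gb_per_s(IOPS_4K, BLOCK_BYTES)
ceiling = line_rate_gb_per_s(ETHERNET_PORTS, PORT_GBIT)

print(f"1.2M x 4 KiB IOPS ~ {small_io:.2f} GB/s")   # 4.92 GB/s
print(f"2 x 40GbE raw line rate = {ceiling:.1f} GB/s")  # 10.0 GB/s
```

On these assumptions the 4K IOPS figure works out to roughly 4.9 GB/s, below the quoted 6.4GB/sec, which suggests (but does not confirm) that the throughput figure was measured with larger transfers. Both figures sit under the 10 GB/s raw ceiling of the two 40Gb Ethernet ports.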

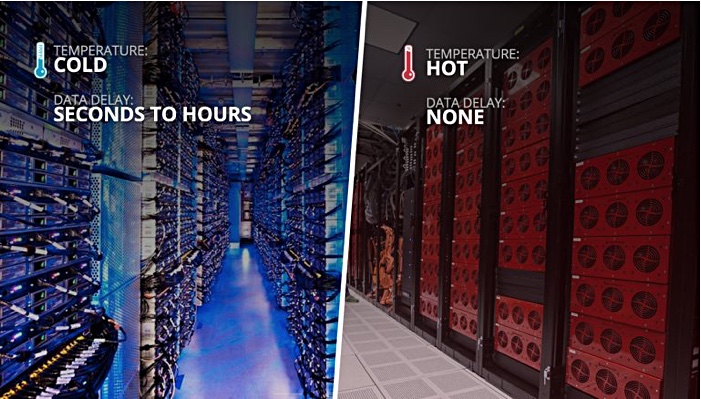

A Backblaze blog discusses how hot and cold in data storage describe the availability of the stored data and how often it’s typically accessed. It takes a look at the differences between hot and cold storage, the advent of hot cloud storage, and which temperature is right for your data storage strategy.

Caveonix announced a collaborative relationship with Dell EMC to offer a hardened risk and compliance management solution for service providers, based on its RiskForesight product. It’s designed to enable service providers to offer continuous cyber and compliance risk management to customers in hybrid and multi-cloud deployments, on dedicated or multi-tenant configurations.

Analytics startup Databricks banks big bucks. It’s raised a massive $250m in a Series E round, taking its total raised to $498.5m and its valuation to $2.75bn. Not bad for a company founded in 2014. Multi-round investor Andreessen Horowitz led the round, with existing investors New Enterprise Associates, Battery Ventures and Green Bay Ventures pumping cash in, along with Microsoft and the Coatue Management hedge fund.

DDN (DataDirect Networks) says HPC storage provider RAID Inc. is a preferred reseller of DDN’s distribution of the Lustre scalable parallel file system software.

Dell EMC will ship a ruggedised version of Azure Stack starting this quarter. It bundles servers, storage, networking and Azure Stack software, and should suit edge use cases in the military, energy and mining industries.

Cloud scale-out filesystem SW startup Elastifile now has support for dynamic provisioning of container volumes via a new software driver compliant with the Container Storage Interface (CSI) specification. The CSI driver integrates the Elastifile Cloud Filesystem’s NFS capability with Kubernetes and other container orchestration products.

The FCIA (Fibre Channel Industry Association) has a FICON webcast on Feb. 20, 10am PT / 1pm ET. It says FICON (Fibre Channel Connection) is an upper-level protocol supported by mainframe servers and attached enterprise-class storage controllers that use Fibre Channel as the underlying transport. The webcast will describe some of the key characteristics of the mainframe and how FICON satisfies the demands placed on mainframes for reliable and efficient access to data. FCIA experts will give a brief introduction to the layers of architecture (system/device and link) that the FICON protocol bridges.

MapR Ecosystem Pack (MEP) 6.1 gives developers and data scientists new language support for the MapR document database and support for the Container Storage Interface (CSI). The CSI support provides persistent storage for compute running in Kubernetes-managed containers. MapR says that, unlike with the Kubernetes FlexVolume-based volume plugin, storage is no longer tightly coupled to, or dependent on, Kubernetes releases. An Apache Kafka Schema Registry allows the structure of streams data to be formally defined and stored, letting data consumers better understand data producers. More info here.

Pavilion Data Systems, an NVMe-oF storage technology business, has joined the Storage Networking Industry Association (SNIA) to help create standards around NVMe-oF for data management and security, adding to its ongoing efforts as a contributor to NVM Express.

Quest has joined the Veeam Alliance Partner Program. Its Quest QoreStor software-defined secondary storage with deduplication has been verified as a Veeam Ready Repository. With it, Quest says, customers can accelerate their Veeam backups by more than 300 per cent, and reduce backup storage requirements by more than 95 per cent.
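Deduplication savings are often quoted as a ratio rather than a percentage. The sketch below converts between the two, assuming the "more than 95 per cent" figure means the reduction in physical capacity relative to the logical backup data; the 100TB example is hypothetical.

```python
# Convert a percentage reduction in backup storage into a dedupe ratio.
# Assumption: reduction = 1 - (physical bytes stored / logical bytes protected).


def dedupe_ratio(reduction_pct: float) -> float:
    """A 95 per cent reduction means 1 byte stored per 20 bytes protected."""
    return 100 / (100 - reduction_pct)


def physical_tb(logical_tb: float, reduction_pct: float) -> float:
    """Capacity needed after deduplication, in the same units as logical_tb."""
    return logical_tb * (100 - reduction_pct) / 100


print(dedupe_ratio(95))      # 20.0, i.e. a 20:1 ratio
print(physical_tb(100, 95))  # 5.0 TB for a hypothetical 100TB of backups
```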

Rambus announced the tapeout of its GDDR6 PHY on TSMC’s 7nm FinFET process technology. Rambus says it delivers the industry’s highest speed, up to 16 Gbit/s per pin, giving a maximum bandwidth of up to 512 Gbit/s (64 GB/s). GDDR6 is applicable, it says, to a broad range of high-performance applications including networking, data centre, advanced driver assistance systems (ADAS), machine learning and artificial intelligence (AI). It’s available from Rambus for licensing.
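The two quoted figures are consistent if the 16 Gbit/s is a per-pin data rate across a 32-bit-wide interface. The 32-bit width is our assumption, based on GDDR6 devices typically presenting two 16-bit channels; it is not stated in the Rambus announcement as reported here.

$$
B = 32\ \text{pins} \times 16\ \tfrac{\text{Gbit/s}}{\text{pin}} = 512\ \text{Gbit/s} = \tfrac{512}{8}\ \text{GB/s} = 64\ \text{GB/s}
$$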

MRAM developer Spin Memory, previously known as Spin Transfer Technologies, says an additional investor, Abies Ventures of Tokyo, Japan, has joined its Series B funding round. It joins existing investors Applied Ventures LLC, Arm, Allied Minds, Woodford Investment Management and Invesco Asset Management. Spin Memory intends its MRAM to replace embedded SRAM as well as a range of non-volatile memories.

SwiftStack has signed Carahsoft as a reseller, with Carahsoft acting as SwiftStack’s Federal Distributor and Master Aggregator. This makes SwiftStack’s multi-cloud storage products available to the public sector and to Carahsoft’s reseller partners through Carahsoft’s NASA Solutions for Enterprise-Wide Procurement (SEWP) V contracts. SwiftStack’s storage products are available immediately via SEWP V contracts NNG15SC03B and NNG15SC27B.

Container system monitoring startup Sysdig says that in 2018 it more than tripled the number of Fortune 500 customer deployments, with over 40 per cent of deployments using the Sysdig platform for both security and visibility use cases, up from 5 per cent this time last year. It nearly doubled its global head count and grew to over 30 locations worldwide. Downloads of Falco, the company’s open source container runtime security offering and now a Cloud Native Computing Foundation Sandbox project, grew sixfold in 2018.

Veritas says its top backup products, NetBackup and Backup Exec, have attained Amazon Web Services (AWS) Storage Competency status. Veritas is an AWS Partner Network (APN) Advanced Technology Partner offering solutions validated by the AWS Storage Competency. NetBackup and Backup Exec support multiple AWS storage classes, including Simple Storage Service (S3), S3 Standard-Infrequent Access (S3 Standard-IA) and Glacier.

Virtual Instruments, which provides hybrid infrastructure performance monitoring and management, says its VirtualWisdom Platform Appliance v5.7 has completed the Common Criteria certification process. Certification under the NIAP-approved collaborative Protection Profile for Network Devices (NDcPP) gives governments and end users confidence that the VirtualWisdom Platform Appliance has passed the required documentation and testing.

Distributor Exertis Hammer has expanded its Western Digital offering to include rights to distribute IntelliFlash across the EEA, South Africa and Israel. It can now distribute the entire Western Digital Data Centre Systems (DCS) range. Paul Silver, ex-VP EMEA for WD-acquired Tegile and now Senior Director EMEA DCS Sales at WD, says: “Exertis Hammer holds an established position … at the forefront of storage technology distribution.”

People

Gabriel Chapman has left NetApp and joined Gartner as a Senior Director Analyst on the Data Center and Cloud Operations team within the Gartner for Technical Professionals research group. He had been a Principal Architect – SolidFire Office of the CTO at NetApp, and a Senior Manager for Cloud Infrastructure. He spent time at Cisco and SimpliVity before that.

Davis Johnson has joined Cohesity as its VP for Federal Sales. He has Riverbed, Oracle and NetApp public sector experience in his 30-year CV. Cohesity’s public sector customer base grew by nearly 200 per cent in the second half of the last fiscal year.

Customers

ExaGrid (deduplicating backup-to-disk arrays) says it has supplied its products to American Standard since 2009. This customer is a subsidiary of LIXIL and has produced residential and commercial kitchen and bath products for over 140 years. ExaGrid integrates with all of the most frequently used backup applications, including Veeam, which American Standard uses to back up its virtual environment, and Veritas NetBackup, which it uses for the remaining physical servers.